Integrate OpenAI with Twilio Voice Using ConversationRelay

ConversationRelay is a product from Twilio that allows you to build real-time, human-like voice applications for conversations with any AI Large Language Model, or LLM. It opens a WebSocket so you can integrate with any AI API, allowing for a fluid, event-based interaction and fast two-way connection.

This tutorial is a quick overview of a basic integration of OpenAI’s models with Twilio Voice using ConversationRelay. By the end, you will be able to run a Node.js server that lets you call a Twilio phone number and hold a conversation with an LLM, giving you a solid base for adding more advanced features.

Let's get started!

Prerequisites

To deploy this tutorial you will need:

- Node.js installed on your machine

- A Twilio phone number (Sign up for Twilio here)

- Your IDE of choice (such as Visual Studio Code)

- The ngrok tunneling service (or other tunneling service)

- An OpenAI Account to generate an API Key

- A phone to place your outgoing call to Twilio

Write the code

Start by creating a new folder for your project.

Next, initialize a new Node.js project and install the dependencies.

For this tutorial, you’ll use Fastify as your framework. It will let you quickly spin up a server for both the WebSocket you'll need, as well as the route for the instructions you're going to need to provide to Twilio.

Start by creating the files you will need to run your connection.

To store your API key for OpenAI, you will need an .env file. Create this file in your project folder, then open it in your favorite editor.

Use the following line of code, replacing the placeholder shown with your actual key from the OpenAI API keys page.
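The line in .env takes the form OPENAI_API_KEY=<your key>, matching the process.env.OPENAI_API_KEY lookup used later in this tutorial. As a minimal sketch, assuming the variables have already been loaded into process.env (for example by a package such as dotenv), a small helper can fail fast when the key is missing. The name requireEnv is hypothetical and not part of the original tutorial.

```javascript
// Hypothetical helper (an assumption, not from the original tutorial):
// read a required environment variable and fail early if it is absent.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (typeof value !== "string" || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value.trim();
}

// Usage with a plain object standing in for process.env:
const exampleEnv = { OPENAI_API_KEY: "sk-placeholder" };
console.log(requireEnv("OPENAI_API_KEY", exampleEnv)); // prints "sk-placeholder"
```

Failing at startup is usually easier to debug than an authentication error in the middle of a phone call.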

Next, create a new file called server.js. This is where the primary code for your project server is going to be stored. Create this file in the same directory as your .env file.

Nice work – next, you will work on your imports and define the constants you'll need to change the behavior of the LLM.

Add the imports and constants

First, add the necessary constants to your file by putting in this code.

Notice that you are adding the system prompt that shapes the personality of your AI. This prompt keeps it simple and tells the AI that the conversation will be spoken aloud, so it should avoid special characters that would sound awkward when read out.

You also add the greeting that your AI says when a caller rings in, using the variable WELCOME_GREETING. The greeting spaces out letters so the text-to-speech voice pronounces them correctly.
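A sketch of what these constants might look like follows. Only the name WELCOME_GREETING comes from the text above. The name SYSTEM_PROMPT, the sessions map, and the exact wording of both strings are illustrative assumptions.

```javascript
// Illustrative wording only: tells the model its output will be read aloud.
const SYSTEM_PROMPT =
  "You are a helpful assistant. This conversation is being translated to voice, " +
  "so answer carefully. Spell out all numbers, for example twenty instead of 20. " +
  "Do not include emojis, bullet points, asterisks, or special symbols.";

// "Open A I" is spaced out so the text-to-speech voice reads each letter.
const WELCOME_GREETING =
  "Hi! I am a voice assistant powered by Twilio and Open A I. Ask me anything!";

// Assumed helper state: per-call conversation history, keyed by a call identifier.
const sessions = new Map();

console.log(WELCOME_GREETING);
```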

Write the Fastify server code

Great stuff. Now, you'll move on to the heart of the code: the server.

Next, add the following lines of code to server.js below where you left off before:

This code block adds the connection to OpenAI. The process.env.OPENAI_API_KEY line reads your API key from the .env file.
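The shape of that OpenAI call can be sketched as below. The function name aiResponse and the model name are assumptions. The client argument is expected to behave like an instance of the official openai package, created with something like new OpenAI({ apiKey: process.env.OPENAI_API_KEY }). A stand-in client is used here so the sketch runs without a network call or a real key.

```javascript
// Send the conversation so far to the chat completions endpoint and return
// the assistant's reply text. The default model name is an assumption.
async function aiResponse(client, messages, model = "gpt-4o-mini") {
  const completion = await client.chat.completions.create({ model, messages });
  return completion.choices[0].message.content;
}

// Stand-in client (hypothetical) that mimics the response shape used above.
const fakeClient = {
  chat: {
    completions: {
      create: async ({ messages }) => ({
        choices: [
          {
            message: {
              role: "assistant",
              content: `You said: ${messages[messages.length - 1].content}`,
            },
          },
        ],
      }),
    },
  },
};

aiResponse(fakeClient, [{ role: "user", content: "hello" }]).then(console.log);
```

Passing the client in as a parameter is a design choice made for this sketch; it keeps the function easy to exercise without calling the real API.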

Finally, beneath that, add the code to get your server started and complete the webhook connection.

This block of code is doing most of the heavy lifting. The first thing it does is establish a connection from your phone call to Twilio, at the route /twiml. That route returns a special dialect called TwiML, which gives Twilio instructions about how to connect to your WebSocket.
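To make the /twiml step concrete, here is a minimal sketch of the TwiML such a route could return. The helper names buildTwiml and escapeXmlAttr are hypothetical, and the example URL is a placeholder. In a Fastify handler, the resulting string would typically be sent with a text/xml content type.

```javascript
// Escape characters that are unsafe inside an XML attribute value.
function escapeXmlAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build TwiML that tells Twilio to connect the call to ConversationRelay
// at the given WebSocket URL and to speak the greeting first.
function buildTwiml(wsUrl, welcomeGreeting) {
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    "<Response>",
    "  <Connect>",
    `    <ConversationRelay url="${escapeXmlAttr(wsUrl)}" welcomeGreeting="${escapeXmlAttr(welcomeGreeting)}" />`,
    "  </Connect>",
    "</Response>",
  ].join("\n");
}

console.log(buildTwiml("wss://example.ngrok.app/ws", "Hi! Ask me anything!"));
```

Escaping the attribute values guards against a greeting that contains quotes or ampersands, which would otherwise produce invalid XML.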

Then, it sets up a /ws route so Twilio can open a WebSocket connection to your app. This WebSocket is where you communicate with ConversationRelay: you receive messages from Twilio, and you pass messages from your LLM back to Twilio to run the Text-to-Speech step.

We won’t go into all of the messages that can travel in either direction. Here, you're handling the setup, prompt, and interrupt message types from ConversationRelay. The ConversationRelay documentation describes these message types in more detail.
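The handling of those three message types can be sketched without any server framework. Everything named here is hypothetical: handleMessage, the ctx object, and its respond and send callbacks, which stand in for the LLM call and for ws.send. The field names callSid, voicePrompt, token, and last are assumptions that should be checked against the ConversationRelay message reference.

```javascript
// Dispatch one incoming ConversationRelay message (a JSON string).
async function handleMessage(raw, ctx) {
  const message = JSON.parse(raw);
  switch (message.type) {
    case "setup": {
      // First message on the socket: remember the call and seed its history.
      ctx.callSid = message.callSid;
      ctx.sessions.set(message.callSid, [
        { role: "system", content: ctx.systemPrompt },
      ]);
      break;
    }
    case "prompt": {
      // The caller's transcribed speech is expected in voicePrompt.
      const history = ctx.sessions.get(ctx.callSid);
      if (!history) break; // no setup message seen yet
      history.push({ role: "user", content: message.voicePrompt });
      const answer = await ctx.respond(history);
      history.push({ role: "assistant", content: answer });
      // Send the whole reply as a single text message for Text-to-Speech.
      ctx.send(JSON.stringify({ type: "text", token: answer, last: true }));
      break;
    }
    case "interrupt":
      // The caller spoke over the assistant; this sketch only logs it.
      console.log("Interrupted call", ctx.callSid);
      break;
    default:
      console.warn("Unhandled message type:", message.type);
  }
}

// Usage with stand-ins for the LLM call and the WebSocket.
const demoCtx = {
  sessions: new Map(),
  systemPrompt: "You are a helpful assistant.",
  respond: async () => "Hello from the assistant.",
  send: (payload) => console.log("to Twilio:", payload),
};

handleMessage(JSON.stringify({ type: "setup", callSid: "CA123" }), demoCtx)
  .then(() =>
    handleMessage(JSON.stringify({ type: "prompt", voicePrompt: "Hi" }), demoCtx)
  );
```

In a real server, a function like this would be called from the WebSocket's message event, with send bound to the open socket.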

The ConversationRelay documentation also lists the message types you can send back. This tutorial only demonstrates text messages (sent with ws.send()), but you can also ask Twilio to play media, send DTMF digits, or even hand off the call!
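As a rough illustration of those outgoing messages, the sketch below builds each one as a plain object and serializes it before sending. The outbound and sendMessage names are hypothetical, and the message field names are assumptions to verify against the ConversationRelay WebSocket message reference before relying on them.

```javascript
// Builders for messages sent back to ConversationRelay.
// All field names here are assumptions, not confirmed by this tutorial.
const outbound = {
  // Text for Twilio to speak; last marks the final chunk of a reply.
  text: (token, last = true) => ({ type: "text", token, last }),
  // Play an audio file from a URL.
  play: (source) => ({ type: "play", source }),
  // Send DTMF digits on the call.
  sendDigits: (digits) => ({ type: "sendDigits", digits }),
  // End the session and hand data back to the calling TwiML flow.
  end: (handoffData) => ({ type: "end", handoffData: JSON.stringify(handoffData) }),
};

// Serialize a message and send it over any object with a send method.
function sendMessage(ws, message) {
  ws.send(JSON.stringify(message));
}

// Usage with a stand-in socket that prints what would be sent.
const fakeSocket = { send: (payload) => console.log(payload) };
sendMessage(fakeSocket, outbound.text("Hello there."));
sendMessage(fakeSocket, outbound.sendDigits("1234"));
```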

Run and test

To finish setting up the ConversationRelay, there are a few more critical steps to connect your code to Twilio.

The first step is to return to your terminal and open up a connection using ngrok:

You need to start the tunnel first because you will use the ngrok URL in two places: in the Twilio Console and in your .env file.

Copy the forwarding URL that ngrok displays and add it to the .env file using this line:

Replace the beginning of this placeholder with the correct information from your ngrok url. Note that you do not include the scheme (the “https://” or “http://”) in the environment variable.
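Because the scheme is left out of the environment variable, the server has to add its own when building the WebSocket address. A minimal sketch, assuming hypothetical helpers named stripScheme and toWebSocketUrl and the /ws route described earlier:

```javascript
// Remove a leading scheme such as "https://" and any trailing slashes,
// so a URL pasted with the scheme still works.
function stripScheme(url) {
  return url
    .trim()
    .replace(/^[a-z][a-z0-9+.-]*:\/\//i, "")
    .replace(/\/+$/, "");
}

// Build the secure WebSocket URL that Twilio should connect to.
function toWebSocketUrl(domain, path = "/ws") {
  return `wss://${stripScheme(domain)}${path}`;
}

console.log(toWebSocketUrl("https://example.ngrok.app/")); // prints "wss://example.ngrok.app/ws"
console.log(toWebSocketUrl("example.ngrok.app")); // prints "wss://example.ngrok.app/ws"
```

Normalizing the value this way is optional, but it avoids a malformed address such as wss://https://... if the full URL is pasted by mistake.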

Now you are ready to run your server. From your project folder, start it with Node.js, for example by running node server.js.

Go into your Twilio console, and look for the phone number that you registered.

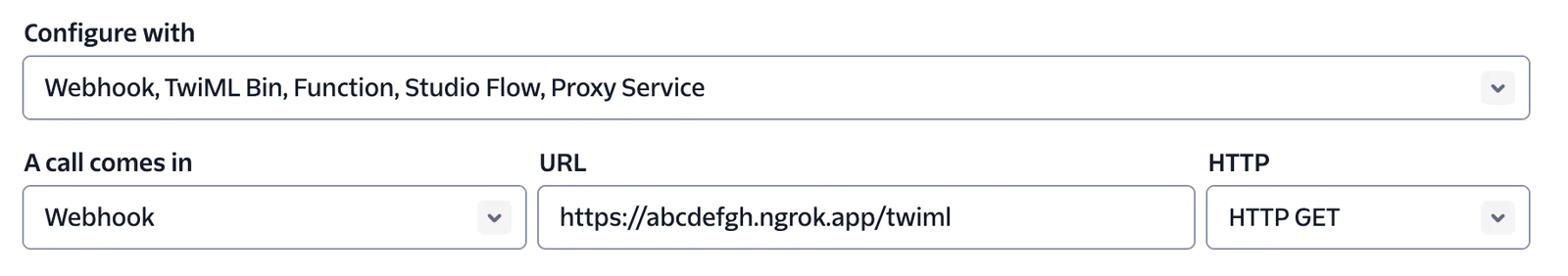

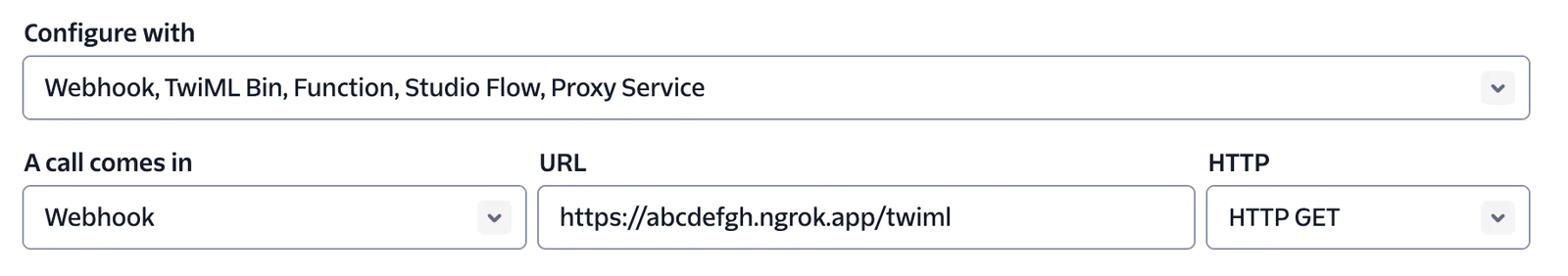

Set the configuration under A call comes in with the Webhook option as shown below.

In the URL space, add your ngrok URL (this time including the “https://”), and follow that up with /twiml for the correct routing.

Finally, set the HTTP option on the right to GET.

When a call connects, Twilio first requests the /twiml route, which returns your greeting message and the WebSocket URL. Twilio then uses the ngrok URL to connect directly to the WebSocket, and that connection opens the line for your conversation with OpenAI.

Save your configurations in the console. Now dial up the number on your phone.

If everything is hooked up correctly, you should hear your customized greeting. Say hello and ask the AI anything you like!

What's Next for ConversationRelay?

This simple demonstration works well, but it has limits.

For example, though you may be able to interrupt the conversation verbally, you may notice that the conversation text is generated before it's spoken aloud. With this code, the server does not have knowledge of exactly when in the conversation you interrupted it, which might lead to a misunderstanding down the line. You’ll also notice that this version of the code introduces quite a bit of latency when your prompt generates a lot of text from the LLM (try asking it to count to 100!).

In our next post, we’ll show you how to improve latency by streaming tokens from the LLM as they are generated. We’ll also show you one way to keep your local conversation state with OpenAI in sync using ConversationRelay’s interruption handling. Finally, we’ll show you how to add external tools your LLM can call using OpenAI function calling, and integrate everything into this same app.

We hope you had fun building with ConversationRelay! Let's build - and talk to - something amazing!

Appendix

Our colleagues have built some awesome sample applications and demos on top of ConversationRelay. Here’s a selection of use cases:

Amanda Lange is a .NET Engineer of Technical Content. She is here to teach how to create great things using C# and .NET programming. She can be reached at amlange [ at] twilio.com.
