Pose Detection in Twilio Video with TensorFlow.js

Pose detection is a fun and interesting task in computer vision and machine learning. In a video chat, it could be used to detect whether someone is touching their face, falling asleep, or performing a yoga pose correctly, and so much more!

Read on to learn how to perform pose detection in the browser in a Twilio Video chat application using TensorFlow.js and the PoseNet model.

Setup

To build a Twilio Programmable Video application, we will need:

- A Twilio account - sign up for a free one here and receive an extra $10 if you upgrade through this link

- Account SID: find it in your account console here

- API Key SID and API Key Secret: generate them here

- The Twilio CLI

Follow this post to get set up with a starter Twilio Video app and to understand Twilio Video for JavaScript a bit more, or download this repo and follow the README instructions to get started.

In assets/video.html, import TensorFlow.js and the PoseNet library on lines 8 and 9 in-between the <head> tags.

Then in the same file add a canvas element with in-line styling above the video tag, and edit the video tag to have relative position.

Now it's time to write some TensorFlow.js code!

Pose Detection

In assets/index.js, beneath const video = document.getElementById("video"); add the following lines:
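A minimal sketch of what those lines might look like. The element id `canvas` and every numeric value here are assumptions chosen for illustration, not values confirmed by this post. The `typeof document` guard is only there so the snippet also loads outside a browser.

```javascript
// Grab the canvas overlay and its 2D rendering context.
// Assumption: the canvas element in video.html has id="canvas".
// The guard lets this file load outside a browser (for example, in a Node test).
const canvas =
  typeof document !== "undefined" ? document.getElementById("canvas") : null;
const ctx = canvas ? canvas.getContext("2d") : null;

// Illustrative values (assumptions); tune them for your app.
const minConfidence = 0.2; // ignore poses scored below this (0.0 to 1.0)
const VIDEO_WIDTH = 320; // pixels
const VIDEO_HEIGHT = 240; // pixels
const frameRate = 20; // canvas redraws per second
```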

With that code we grab our canvas HTML element and its 2D rendering context, and set the minimum confidence level, video width, video height, and frame rate. In machine learning, confidence is the model's estimated probability that a prediction is correct (in this case, how sure PoseNet is about the poses it detects in the video). The frame rate is how often our canvas will redraw the detected poses.

After the closing brackets and parentheses of the navigator.mediaDevices.getUserMedia call that follows localStream = vid;, add a function named estimateMultiplePoses that loads the PoseNet model (it runs entirely in the browser, so no pose data ever leaves a user's computer) and estimates the pose of one person.
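A minimal sketch of such a function follows. It assumes the global `posenet` object provided by the PoseNet script tag and the `video` element already defined in index.js. It uses PoseNet's documented `estimateSinglePose` call and wraps the result in an array, which may differ from the exact call used in the original project. Caching the loaded model in `netPromise` is an addition so the model is not reloaded on every frame.

```javascript
// Assumes `posenet` (global from the PoseNet <script> tag) and `video`
// (the <video> element from index.js) exist when the function is called.
let netPromise = null; // cache so the model is loaded only once

async function estimateMultiplePoses() {
  if (!netPromise) {
    netPromise = posenet.load();
  }
  const net = await netPromise;
  // Single-person decoding: returns one pose object for the current frame.
  const pose = await net.estimateSinglePose(video, { flipHorizontal: false });
  const poses = [pose]; // keep an array so later code can loop over poses
  console.log(`got poses ${JSON.stringify(poses)}`);
  return poses;
}
```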

PoseNet for TensorFlow.js can estimate either one pose or multiple poses: one version of the algorithm detects a single person in an image or video, and another detects multiple people. Despite the function's name, this project uses the single-person pose detector because it is faster and simpler, and in a video chat there is probably only one person on the screen. Call estimateMultiplePoses by adding the following code beneath localStream = vid;:
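One way to schedule the calls is sketched below. `startPoseLoop` and `frameIntervalMs` are hypothetical helper names, not part of the original project. The sketch assumes a frame rate given in frames per second, and it stops the loop if estimation fails.

```javascript
// Convert a frame rate (frames per second) into a timer delay in milliseconds.
function frameIntervalMs(fps) {
  return Math.round(1000 / fps);
}

// Call `estimate` repeatedly, roughly `fps` times per second.
// Returns a function that stops the loop.
function startPoseLoop(estimate, fps) {
  const intervalID = setInterval(() => {
    // Promise.resolve().then(...) catches both thrown errors and rejections.
    Promise.resolve()
      .then(estimate)
      .catch((err) => {
        clearInterval(intervalID);
        console.error("Pose estimation stopped:", err);
      });
  }, frameIntervalMs(fps));
  return () => clearInterval(intervalID);
}
```

In index.js this could be called as `const stopPoseLoop = startPoseLoop(estimateMultiplePoses, frameRate);`, with `stopPoseLoop()` called when the participant leaves the room.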

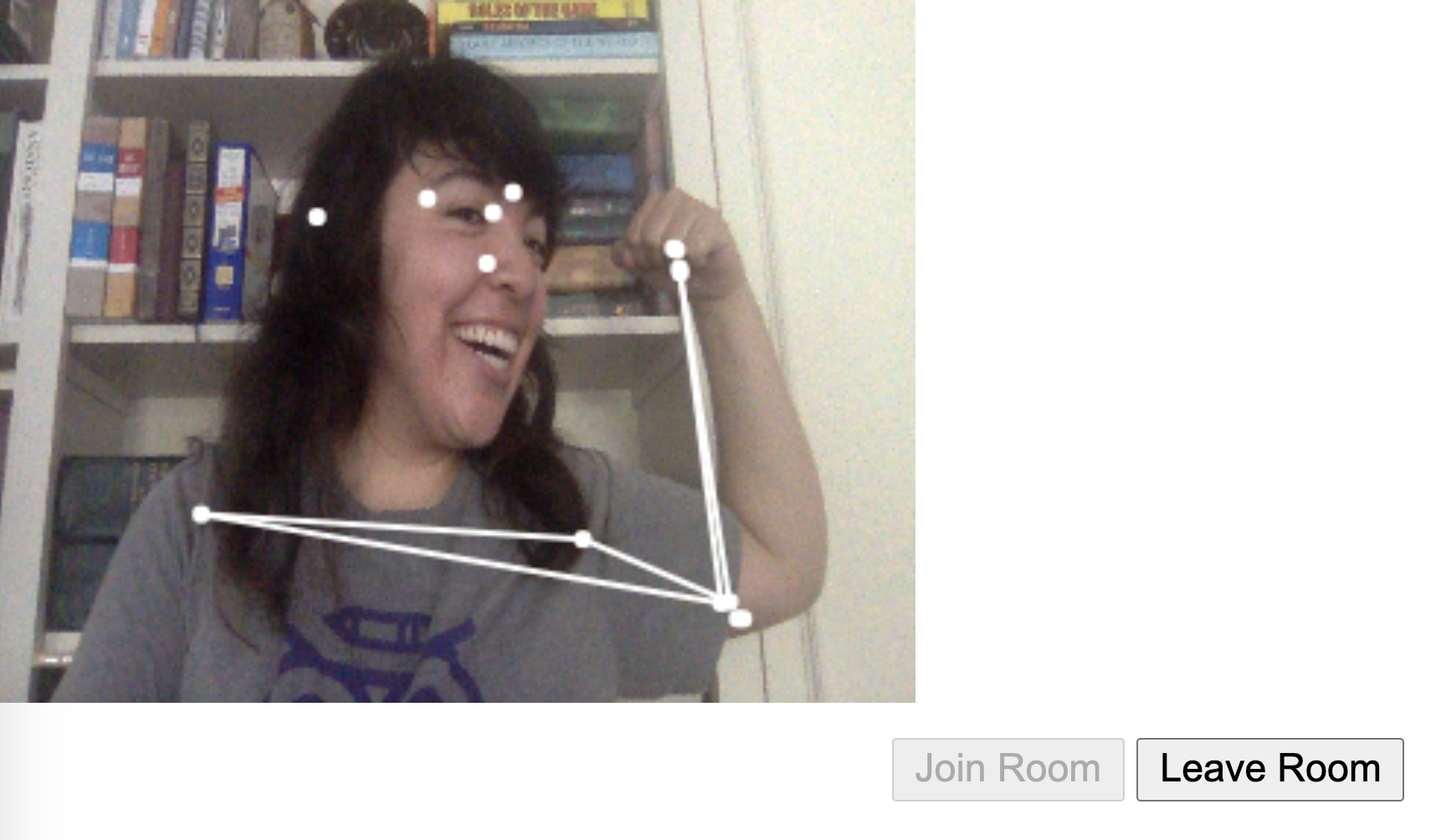

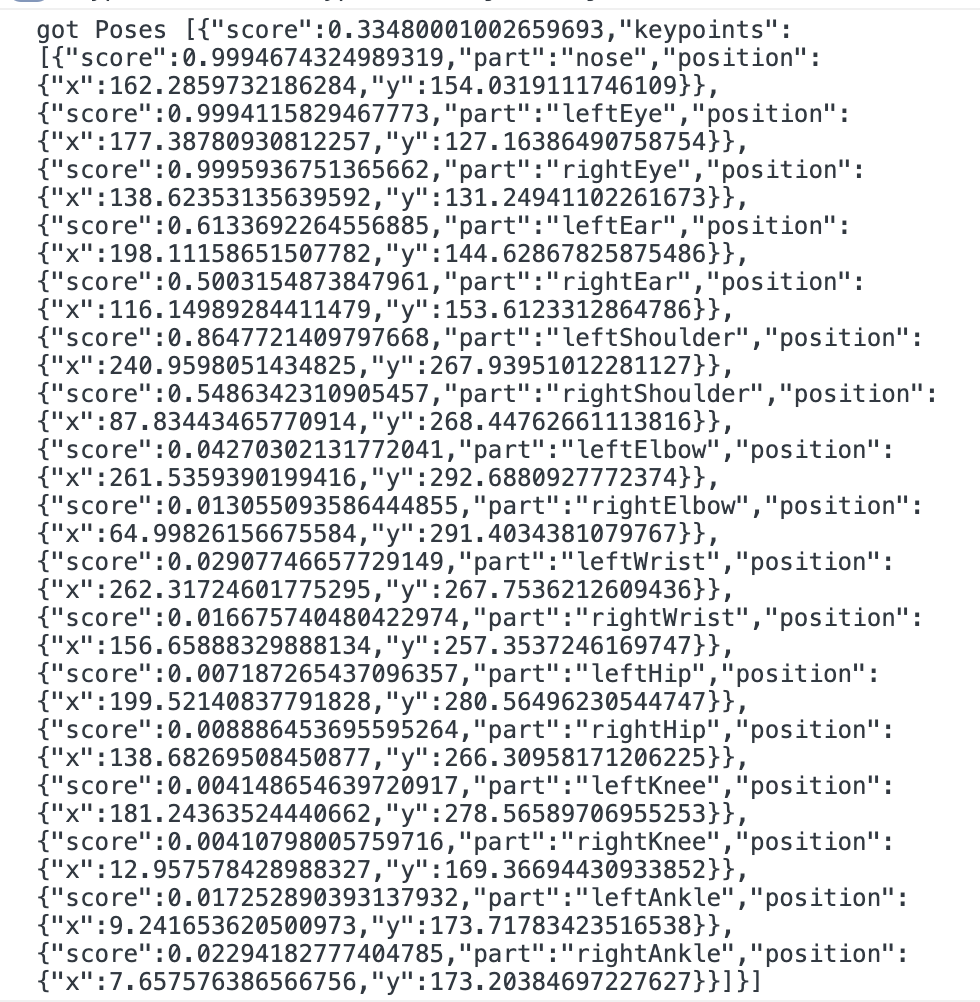

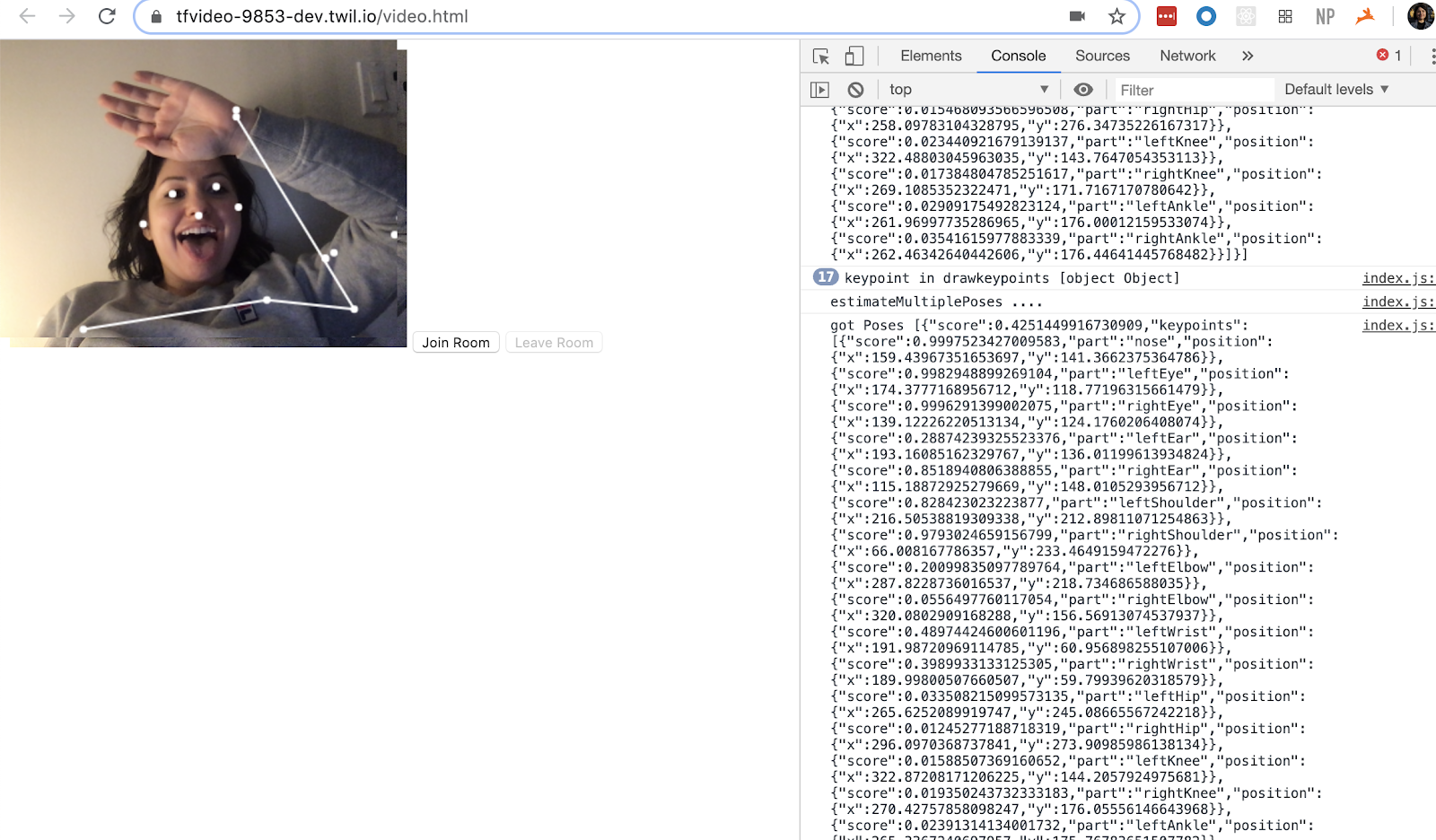

Now run twilio serverless:deploy on your command line and visit the assets/video.html URL under Assets. Open your browser's developer tools to see the detected poses being printed to the console.

Nice! Poses are being detected.

Each pose object contains an overall confidence score and a list of keypoints. Each keypoint has its own confidence score, between 0.0 and 1.0, indicating how accurate the estimated keypoint position is. Developers can use the confidence score to hide a pose if the model is not confident enough.
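To make that concrete, here is a small sketch of filtering by confidence. The sample data is made up for illustration (only three keypoints, with invented coordinates and scores), though it follows the shape PoseNet returns: a pose `score` plus `keypoints` that each carry a `part`, `score`, and `position`. `confidentKeypoints` is a hypothetical helper name.

```javascript
// Made-up sample in the shape of a PoseNet pose object.
const samplePose = {
  score: 0.72,
  keypoints: [
    { part: "nose", score: 0.98, position: { x: 160.2, y: 85.4 } },
    { part: "leftEye", score: 0.95, position: { x: 168.9, y: 77.1 } },
    { part: "leftWrist", score: 0.08, position: { x: 40.5, y: 230.0 } },
  ],
};

// Return only the keypoints worth drawing: none if the whole pose is
// below the threshold, otherwise those whose own score passes it.
function confidentKeypoints(pose, minScore) {
  if (pose.score < minScore) {
    return [];
  }
  return pose.keypoints.filter((keypoint) => keypoint.score >= minScore);
}
```

With a threshold of 0.2, the low-scoring `leftWrist` keypoint is dropped; with a threshold above the pose's overall score, nothing is returned.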

Now, let's draw those keypoints on the HTML canvas over the video.

Draw Segments and Points on the Poses

Right beneath the last code you wrote, make a drawPoint function. The function takes in three parameters and draws a dot centered at (x, y) with a radius of size r over detected joints on the HTML canvas.
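A sketch of drawPoint might look like this. It assumes `ctx` is the canvas 2D rendering context grabbed earlier in index.js; the parameter order and the white fill color are assumptions.

```javascript
// Assumes `ctx` (the canvas 2D context from index.js) is in scope.
function drawPoint(x, y, r) {
  ctx.beginPath();
  ctx.arc(x, y, r, 0, 2 * Math.PI); // full circle centered at (x, y)
  ctx.fillStyle = "#FFFFFF"; // white dot; the color is an arbitrary choice
  ctx.fill();
}
```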

Then, given keypoints like the array returned from PoseNet, loop through those given points, extract their (x, y) coordinates, and call the drawPoint function.
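That loop could be sketched as follows, assuming a drawPoint(x, y, r) function like the one described above and keypoints whose `position` holds `x` and `y`. The radius of 3 pixels is an arbitrary choice.

```javascript
// Assumes a drawPoint(x, y, r) function is in scope.
function drawKeypoints(keypoints) {
  for (const keypoint of keypoints) {
    const { x, y } = keypoint.position;
    drawPoint(x, y, 3); // radius of 3 pixels is an arbitrary choice
  }
}
```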

Next, make a helper function drawSegment that draws a line between two given points:
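A minimal sketch, assuming `ctx` is the canvas 2D context from index.js and that each point is an object with `x` and `y` properties (like a keypoint's `position`). The `color` and `lineWidth` parameters are assumptions.

```javascript
// Assumes `ctx` (the canvas 2D context from index.js) is in scope.
// `from` and `to` are objects like { x, y }.
function drawSegment(from, to, color, lineWidth) {
  ctx.beginPath();
  ctx.moveTo(from.x, from.y);
  ctx.lineTo(to.x, to.y);
  ctx.lineWidth = lineWidth;
  ctx.strokeStyle = color;
  ctx.stroke();
}
```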

That drawSegment helper function is called in drawSkeleton to draw the lines between related points in the keypoints array returned by the PoseNet model:
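A sketch of drawSkeleton, assuming the global `posenet` object, a `minConfidence` threshold, and a drawSegment(from, to, color, lineWidth) helper are in scope. It relies on PoseNet's `getAdjacentKeyPoints` utility, which returns pairs of connected keypoints that both meet the confidence threshold. The color and line width are arbitrary choices.

```javascript
// Assumes `posenet`, `minConfidence`, and drawSegment(...) are in scope.
function drawSkeleton(keypoints) {
  // Each entry is a pair of connected keypoints, e.g. left shoulder and left elbow.
  const adjacentKeyPoints = posenet.getAdjacentKeyPoints(keypoints, minConfidence);
  adjacentKeyPoints.forEach(([from, to]) => {
    drawSegment(from.position, to.position, "#FFFFFF", 1);
  });
}
```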

To estimateMultiplePoses, add this code that loops through the poses returned from the TensorFlow.js PoseNet model. For each pose, it sets and restores the canvas, and calls drawKeypoints and drawSkeleton if the model is confident enough in its prediction of the detected poses:
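That loop could be sketched as a helper called with the poses array from inside estimateMultiplePoses. `drawPoses` is a hypothetical name, and the sketch assumes `ctx`, `minConfidence`, `VIDEO_WIDTH`, `VIDEO_HEIGHT`, drawKeypoints, and drawSkeleton are in scope. Clearing the canvas before each redraw and reading "sets and restores" as `ctx.save()` and `ctx.restore()` are both assumptions.

```javascript
// Assumes ctx, minConfidence, VIDEO_WIDTH, VIDEO_HEIGHT, drawKeypoints,
// and drawSkeleton are in scope.
function drawPoses(poses) {
  // Wipe the previous frame's dots and lines (an assumption; see above).
  ctx.clearRect(0, 0, VIDEO_WIDTH, VIDEO_HEIGHT);
  poses.forEach(({ score, keypoints }) => {
    if (score >= minConfidence) {
      ctx.save(); // remember the current drawing state
      drawKeypoints(keypoints);
      drawSkeleton(keypoints);
      ctx.restore(); // put the drawing state back
    }
  });
}
```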

Your complete index.js file should look like this:

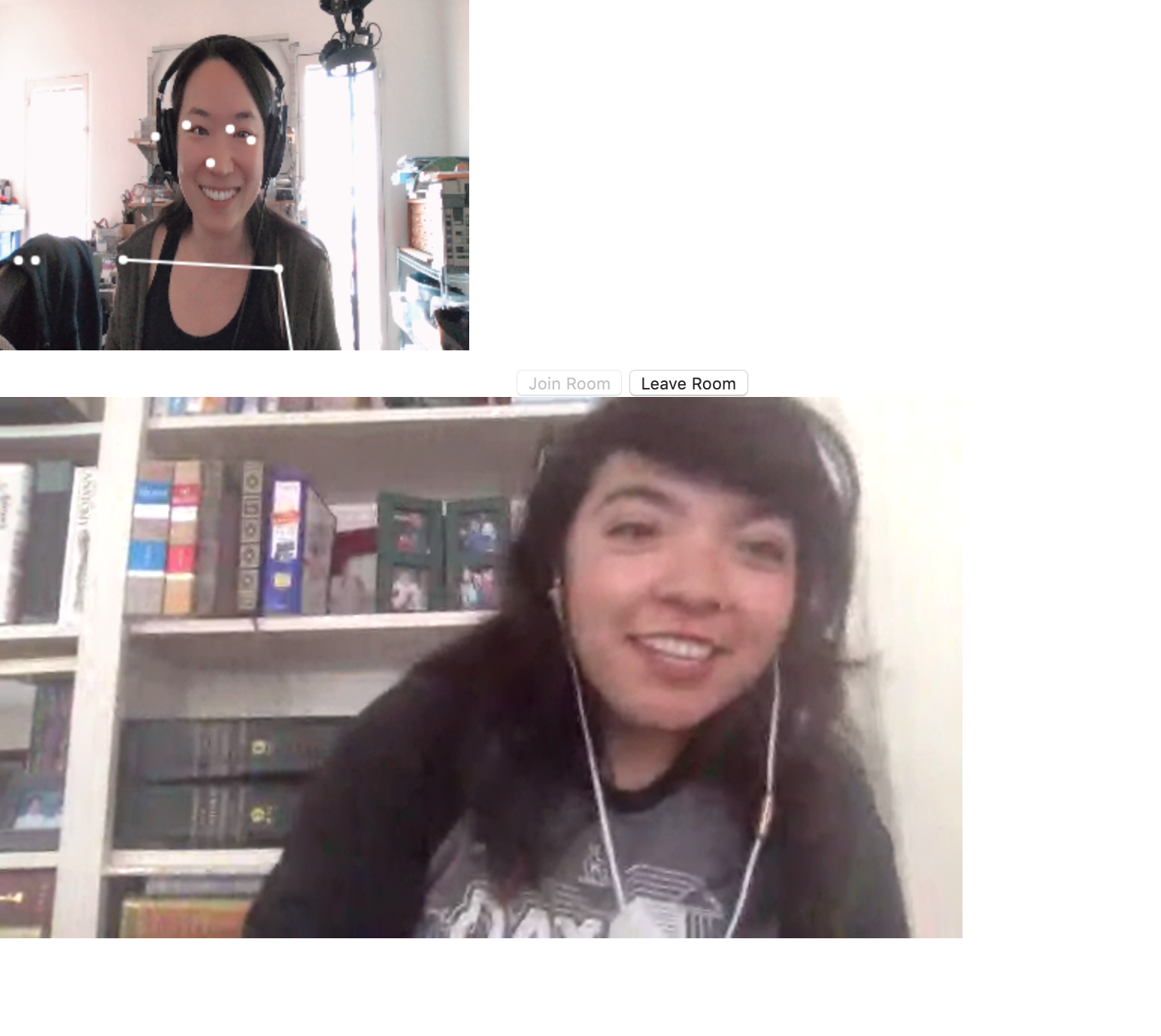

On the command line, run twilio serverless:deploy, visit the assets/video.html URL under Assets, and see your poses detected in the browser in a Twilio Video application using TensorFlow.js.

Share it with friends and you have your own fun video chat room with pose detection using TensorFlow.js! You can find the completed code here on GitHub.

What's next after building pose detection in Programmable Video?

Performing pose detection in a video app with TensorFlow.js is just the beginning. You can use this as a stepping stone to build games like a motion-controlled Fruit Ninja, check a participant's yoga pose or tennis hitting form, put masks on faces, and more. Let me know what you're building in the comments below or online.

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- email: lsiegle@twilio.com
