Getting started with Carthage to manage dependencies in Swift and iOS


This post is part of Twilio’s archive and may contain outdated information. We’re always building something new, so be sure to check out our latest posts for the most up-to-date insights.

Third-party libraries are often a necessity when building iOS applications. Carthage is a ruthlessly simple tool to manage dependencies in Swift.

What about CocoaPods?

At this point, many iOS developers might be wondering how Carthage differs from CocoaPods, another dependency manager for iOS described in a recent tutorial. Carthage emphasizes simplicity, as described in this Quora answer by a Carthage collaborator.

Unlike CocoaPods, which creates an entirely new workspace, Carthage checks out the code for your dependencies and builds them into dynamic frameworks. You need to integrate the frameworks into your project manually.

How to install dependencies with Carthage

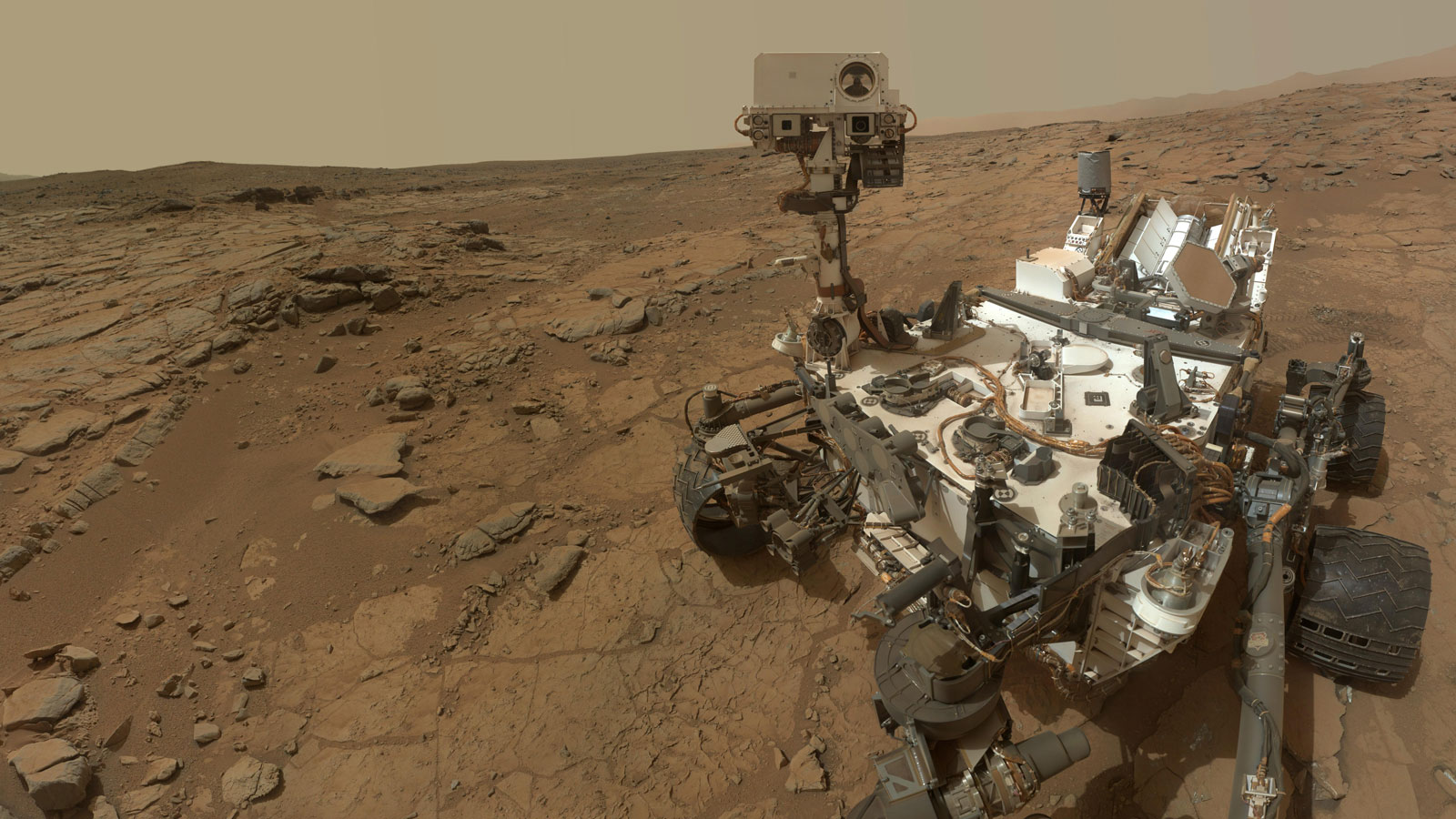

Let’s build an app that displays a recent picture taken on Mars using this NASA API, with Alamofire to send HTTP requests and SwiftyJSON to make handling JSON easier. I will be using Xcode 7.3 and Swift 2.2 for this tutorial.

Start by creating a Single View Application Xcode project called PicturesFromMars. Select “Universal” for the device and enter whatever you want for the rest of the fields:

The three dependencies we are using are Alamofire, SwiftyJSON, and AlamofireImage, which we’ll use to load an image from a URL.

In order to install dependencies, we’ll first need to have Carthage installed. You can install it manually, or via Homebrew by opening your terminal and running brew install carthage.

Carthage looks at a file called Cartfile to determine which libraries to install. Create a file called Cartfile in the same directory as your Xcode project and enter the following to tell Carthage which dependencies we want:
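Each line of a Cartfile names a source (github for GitHub repositories), the repository in quotes, and an optional version requirement such as ~> 3.3. To make that format concrete, here is a hypothetical Swift helper (not something Carthage provides) that prints one github line per dependency, assuming the GitHub repositories Alamofire/Alamofire, SwiftyJSON/SwiftyJSON and Alamofire/AlamofireImage. The printed lines, not the Swift code, are what belong in the Cartfile. The sketch is written in current Swift rather than Swift 2.2.

```swift
// Hypothetical helper for illustration only: Carthage reads a plain-text
// Cartfile and does not provide this type.
struct CartfileEntry {
    let origin: String        // e.g. "github"
    let repository: String    // "Owner/Repo" on GitHub
    let requirement: String?  // optional version requirement such as "~> 3.3"

    // Renders the entry in Cartfile syntax: origin "Owner/Repo" [requirement]
    var line: String {
        let base = "\(origin) \"\(repository)\""
        if let requirement = requirement {
            return "\(base) \(requirement)"
        }
        return base
    }
}

// The three dependencies from this tutorial, with no version pinned
// (an assumption; pin versions that match your Swift toolchain).
let entries = [
    CartfileEntry(origin: "github", repository: "Alamofire/Alamofire", requirement: nil),
    CartfileEntry(origin: "github", repository: "SwiftyJSON/SwiftyJSON", requirement: nil),
    CartfileEntry(origin: "github", repository: "Alamofire/AlamofireImage", requirement: nil),
]

// One entry per line, ready to paste into a Cartfile.
let cartfile = entries.map { $0.line }.joined(separator: "\n")
print(cartfile)
```

Leaving out a version requirement lets Carthage pick the latest release; pinning one (for example with ~>) keeps builds reproducible and compatible with the Swift version you are using.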

Now, to actually install everything, run the following in your terminal:

This is where things start to really differ from CocoaPods. With Carthage, you need to manually drag the frameworks over to Xcode.

Open your project in Xcode, select the PicturesFromMars project in the navigator on the left, open the General tab and scroll down to the Linked Frameworks and Libraries section.

Now open the Carthage folder in Finder:

Drag the frameworks in the Build/iOS folder into the Linked Frameworks and Libraries section, as seen in this GIF:

Now your Swift code can see these frameworks, but we also need to copy them into the app bundle so that the device running the app has them at runtime.

- In your project settings, navigate to your “Build Phases” section.

- Click the “+” button and add a “New Copy Files Phase”

- Go down to the “Copy Files” section

- Under “Destination” select “Frameworks”

- Add the frameworks you want to copy over as seen in this GIF:

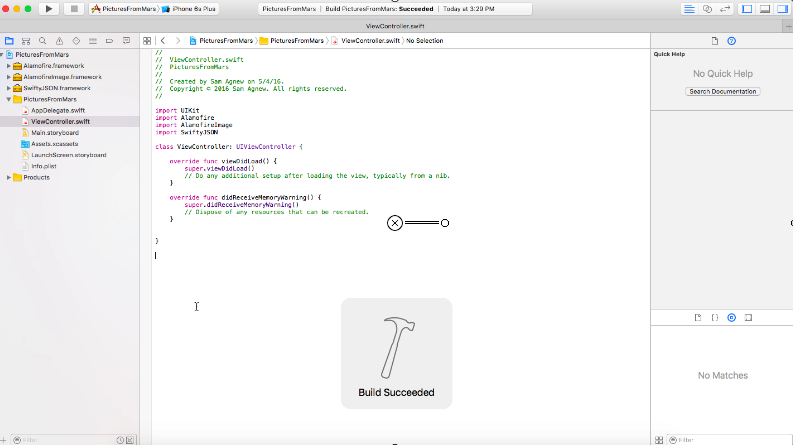

Now that you have the frameworks linked, head over to ViewController.swift and try importing the libraries to see if things are working:

You can see if everything builds correctly by pressing “Command-B.”

Getting ready to use the libraries we just installed

Before we can load images from Mars, we’ll need a UIImageView. Go over to Main.storyboard and add a UIImageView to your ViewController as seen in this GIF:

Set the constraints so that the UIImageView takes up the whole screen. Click the “Pin” icon, enter 0 in each of the four boxes at the top, and click each of the four directional margin lines so those constraints are enabled. Also update the frames, as seen in this GIF:

We have a UIImageView but no way to control it. Create an outlet for it in ViewController.swift called marsPhotoImageView.

There are several ways to do this, but I usually open the “Assistant Editor” with Main.storyboard in one pane and ViewController.swift in the other. While holding the “Control” key, click the UIImageView in Main.storyboard and drag the line over to ViewController.swift. Here is another GIF demonstrating how to do that:

The app will use NASA’s API for the Curiosity Rover to grab a picture from Mars taken on a recent “Earth day” and load that image in our UIImageView.

Images are usually not available right away, so let’s grab images from five days ago to be safe. We’ll need a quick function that generates a date string in the format this API expects. In ViewController.swift, add this new function:
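A minimal sketch of such a function, written in current Swift (5.x) rather than the tutorial’s Swift 2.2, so the Foundation type names differ. It assumes the API expects dates as yyyy-MM-dd and computes the date in UTC so the result does not depend on the device’s time zone. The name earthDateString and both of those choices are assumptions, not the tutorial’s original code.

```swift
import Foundation

// Hypothetical helper: returns the date `daysAgo` days before `now`,
// formatted as yyyy-MM-dd (assumed to match the API's earth_date format).
// UTC is used so the result is the same on every device (an assumption).
func earthDateString(daysAgo: Int, from now: Date = Date()) -> String? {
    guard let utc = TimeZone(secondsFromGMT: 0) else { return nil }

    var calendar = Calendar(identifier: .gregorian)
    calendar.timeZone = utc

    // Step back the requested number of calendar days.
    guard let past = calendar.date(byAdding: .day, value: -daysAgo, to: now) else {
        return nil
    }

    let formatter = DateFormatter()
    formatter.locale = Locale(identifier: "en_US_POSIX") // stable fixed-format output
    formatter.calendar = calendar
    formatter.timeZone = utc
    formatter.dateFormat = "yyyy-MM-dd"
    return formatter.string(from: past)
}
```

Calling earthDateString(daysAgo: 5) with no second argument uses the current date; passing a fixed Date makes the output predictable, which is handy for testing.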

With this taken care of, we can send a request to the Mars Rover API, grab an image URL and load it in the marsPhotoImageView.
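The request URL itself can be assembled with Foundation’s URLComponents, which takes care of percent-encoding the query. This sketch assumes the endpoint https://api.nasa.gov/mars-photos/api/v1/rovers/curiosity/photos with earth_date and api_key query parameters, and NASA’s rate-limited DEMO_KEY. The function name marsPhotosURL is hypothetical, so check these details against NASA’s current API documentation.

```swift
import Foundation

// Hypothetical helper that builds the Curiosity photos query URL.
// Host, path and parameter names are assumptions based on NASA's
// Mars Rover Photos API; "DEMO_KEY" is NASA's shared demo key.
func marsPhotosURL(earthDate: String, apiKey: String = "DEMO_KEY") -> URL? {
    var components = URLComponents()
    components.scheme = "https"
    components.host = "api.nasa.gov"
    components.path = "/mars-photos/api/v1/rovers/curiosity/photos"
    // URLComponents percent-encodes the query items when building the URL.
    components.queryItems = [
        URLQueryItem(name: "earth_date", value: earthDate),
        URLQueryItem(name: "api_key", value: apiKey),
    ]
    return components.url
}
```

Combined with a date helper, the URL would be built from a string such as "2016-05-01" and handed to Alamofire as the target of the GET request.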

Handling HTTP requests with Alamofire and SwiftyJSON

Alamofire and SwiftyJSON are installed and imported in our code. All we need to do now is send a GET request with Alamofire to get an image URL, which we will use to set our UIImageView’s image property.
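The tutorial uses SwiftyJSON for this step, where a lookup such as json["photos"][0]["img_src"].string returns the first photo’s image URL as an optional. As a dependency-free comparison, here is a sketch of the same lookup using Swift’s built-in Codable and JSONDecoder instead of SwiftyJSON. It assumes the response has a top-level photos array whose elements carry an img_src string; the type and function names are hypothetical.

```swift
import Foundation

// Models only the fields we need; JSONDecoder ignores any other keys.
// Assumes a top-level "photos" array whose elements have an "img_src" string.
struct PhotosResponse: Decodable {
    struct Photo: Decodable {
        let imgSrc: String
        enum CodingKeys: String, CodingKey {
            case imgSrc = "img_src"
        }
    }
    let photos: [Photo]
}

// Returns the first photo's image URL string, or nil if the payload
// cannot be decoded or contains no photos.
func firstImageSource(from data: Data) -> String? {
    guard let response = try? JSONDecoder().decode(PhotosResponse.self, from: data) else {
        return nil
    }
    return response.photos.first?.imgSrc
}
```

Returning an optional mirrors SwiftyJSON’s .string accessor: a missing or malformed field yields nil rather than a crash, so the caller can simply skip loading an image.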

Replace your viewDidLoad with the following code:

Notice that we are replacing the http in the URLs with https because, starting with iOS 9, App Transport Security only allows requests to secure (HTTPS) URLs by default.
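Since the problem is only the URL’s scheme, one way to handle it is to rewrite just the scheme. This hypothetical helper does that with URLComponents rather than a plain text replacement, and it assumes the image host actually serves the same path over HTTPS.

```swift
import Foundation

// Hypothetical helper: upgrades an http:// URL string to https://.
// Already-secure URLs, other schemes and unparseable strings are
// returned unchanged.
func secureURLString(_ urlString: String) -> String {
    guard var components = URLComponents(string: urlString),
          components.scheme?.lowercased() == "http" else {
        return urlString
    }
    // Only the scheme changes; host, path and query are kept as-is.
    components.scheme = "https"
    return components.string ?? urlString
}
```

A naive replacement of every "http" with "https" would turn an existing https:// URL into httpss://, which is why this sketch checks the scheme first.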

Run the app in the simulator and check out the latest picture from the Mars Rover!

Building awesome things is so much easier now

There are a ton of APIs out there that you can now access from Swift, with Carthage managing your dependencies. Twilio has some awesome APIs if you want to add video chat or in-app chat to your iOS app.

I can’t wait to see what you build. Feel free to reach out and share your experiences or ask any questions.

- Email: sagnew@twilio.com

- Twitter: @Sagnewshreds

- Github: Sagnew

- Twitch (streaming live code): Sagnewshreds
