Build an AI SMS Chatbot with LangChain, LLaMA 2, and Baseten


Last month, Meta and Microsoft introduced the second generation of the LLaMA LLM (Large Language Model) to enable developers and organizations to build generative AI-powered tools and experiences. Read on to learn how to build an SMS chatbot using LangChain templating, LLaMA 2, Baseten, and Twilio Programmable Messaging!

Prerequisites

- A Twilio account - sign up for a free Twilio account here

- A Twilio phone number with SMS capabilities - learn how to buy a Twilio Phone Number here

- A Baseten account to host the LLaMA 2 model - make a Baseten account here

- A Hugging Face account - make one here

- Python installed - download Python here

- ngrok, a handy utility to connect the development version of your Python application running on your machine to a public URL that Twilio can access.

Get access to LLaMA 2

LLaMA 2 is an open access Large Language Model (LLM) now licensed for commercial use. "Open access" means it is not closed behind an API and its licensing lets almost anyone use it and fine-tune new models on top of it. It is available in three sizes (7B, 13B, and 70B parameters), and the largest, with 70 billion parameters, is comparable to GPT-3.5 on many tasks. Access currently requires approval, which you request after accepting Meta’s license for the model.

Request access from Meta here with the email associated with your Hugging Face account. You should receive access within minutes.

Once you have access,

- Make a Hugging Face access token

- Set it as a secret named hf_access_token in your Baseten account

Deploy LLaMA 2 on Baseten

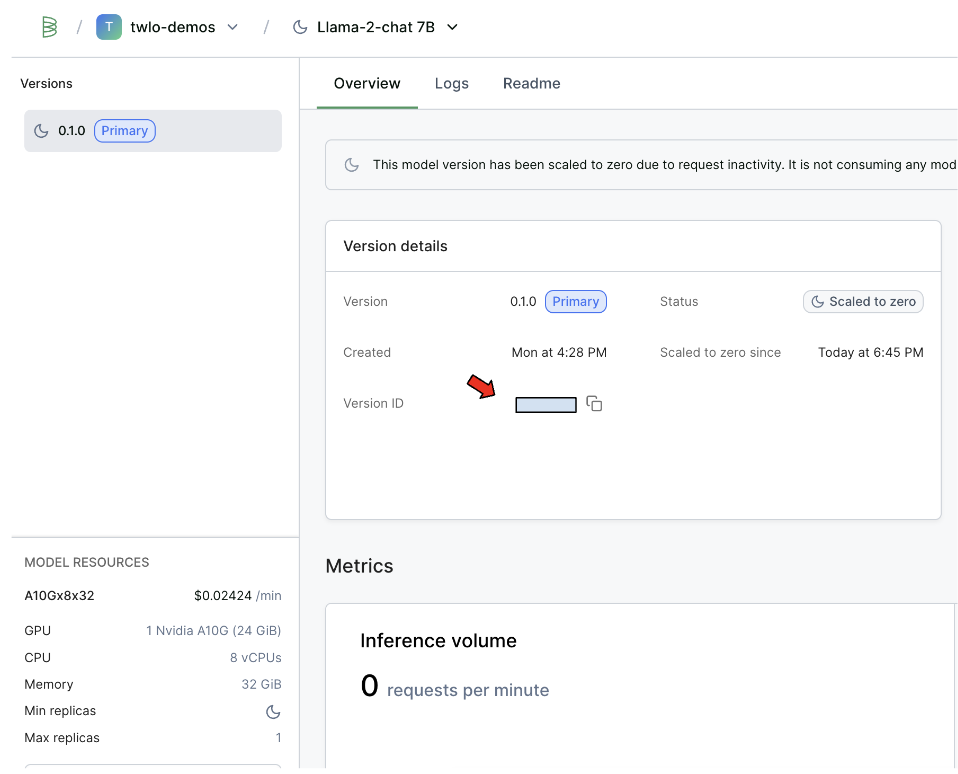

Once your Hugging Face access token is added to your Baseten account, you can deploy the chat version of LLaMA 2 from the Baseten model library here. LLaMA 2-Chat is optimized for two-way conversation and, according to TechCrunch, performs better on Meta's internal “helpfulness” and toxicity benchmarks.

After deploying your model, note the Version ID. You’ll use it to call the model from LangChain.

Configuration

Since you will be installing some Python packages for this project, you will need to make a new project directory and a virtual environment.

If you're using a Unix or macOS system, open a terminal and enter the following commands:

If you're following this tutorial on Windows, enter the following commands in a command prompt window:
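If you'd rather script this step, Python's standard-library venv module can create the project directory and the environment for you. This is a minimal sketch: the directory name llama2-sms-chatbot and the helpers create_project and activate_hint are hypothetical, and activation itself still happens in your shell.

```python
import sys
import venv
from pathlib import Path


def create_project(project_dir: str, env_name: str = "venv", with_pip: bool = True) -> Path:
    """Create a project directory containing a virtual environment.

    Roughly equivalent to `mkdir <project_dir>` followed by
    `python -m venv <project_dir>/<env_name>`.
    """
    project = Path(project_dir)
    project.mkdir(parents=True, exist_ok=True)
    env_dir = project / env_name
    # with_pip=True bootstraps pip into the new environment via ensurepip
    venv.create(env_dir, with_pip=with_pip)
    return env_dir


def activate_hint(env_dir: Path) -> str:
    """Return the command that activates the environment on this OS."""
    if sys.platform.startswith("win"):
        return str(env_dir / "Scripts" / "activate")
    return f"source {env_dir / 'bin' / 'activate'}"


if __name__ == "__main__":
    env = create_project("llama2-sms-chatbot")  # hypothetical directory name
    print("Activate with:", activate_hint(env))
```

After activating the environment, install the app's dependencies with pip as usual.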

Create a Baseten API key, then run baseten login on the command line and paste in your API key when prompted. Now it's time to write some code!

Code to Create Chatbot with LangChain and Twilio

Make a file called app.py and place the following import statements at the top.
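Because app.py fails at its first import if a package is missing from the active virtual environment, a quick check can save you a confusing traceback. A minimal sketch using importlib; check_packages is a hypothetical helper, and the module names flask, langchain, and twilio are assumptions about what app.py imports.

```python
import importlib.util


def check_packages(module_names):
    """Map each top-level module name to True if it is importable here."""
    # find_spec returns None (without importing) when a module can't be found
    return {name: importlib.util.find_spec(name) is not None for name in module_names}


if __name__ == "__main__":
    # Assumed modules for this app; adjust to match your import statements
    status = check_packages(["flask", "langchain", "twilio"])
    missing = [name for name, ok in status.items() if not ok]
    print("Missing packages:", missing or "none")
```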

Though LLaMA 2-Chat is tuned for chat, a prompt template still helps by telling the LLM what behavior is expected of it. This starting prompt resembles ChatGPT's, so the model should behave similarly.
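A prompt template is just a string with placeholders that get filled in on every turn. Here is a dependency-free sketch of the idea rather than LangChain's PromptTemplate itself; the placeholder names history and human_input, the instruction wording, and render_prompt are assumptions for illustration.

```python
# Hypothetical template: instructions, then prior turns, then the new message
TEMPLATE = """Assistant is a helpful, friendly AI assistant that answers questions by text message.
Keep answers short enough to read comfortably over SMS.

{history}
Human: {human_input}
Assistant:"""


def render_prompt(history: list[tuple[str, str]], human_input: str) -> str:
    """Fill the template with prior (human, assistant) turns and the new message."""
    lines = []
    for human, assistant in history:
        lines.append(f"Human: {human}")
        lines.append(f"Assistant: {assistant}")
    return TEMPLATE.format(history="\n".join(lines), human_input=human_input)


if __name__ == "__main__":
    print(render_prompt([("Hi", "Hello! How can I help?")], "What is LLaMA 2?"))
```

Ending the prompt with "Assistant:" cues the model to continue as the assistant rather than writing the human's next line.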

Next, make an LLM Chain, one of the core components of LangChain, which lets you chain prompts together and keep a prompt history. max_length is 4096, which matches LLaMA 2's context window: the maximum number of tokens the model can handle at once, counting both the prompt and the generated response.
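To make the chain's job concrete, here is a minimal, dependency-free sketch of what an LLM chain with a prompt history does on each turn: render the template with earlier turns, call the model, and store the new turn. It is not LangChain's implementation. SimpleChatChain, max_prompt_chars, and the character-based budget are hypothetical stand-ins (the real context window is counted in tokens, not characters), and llm is a placeholder for a function that calls your Baseten-hosted model.

```python
from typing import Callable

# Hypothetical, shortened template for illustration
TEMPLATE = "Assistant is a helpful AI assistant.\n\n{history}\nHuman: {human_input}\nAssistant:"


class SimpleChatChain:
    """Minimal stand-in for an LLM chain that keeps a prompt history."""

    def __init__(self, llm: Callable[[str], str], max_prompt_chars: int = 8000):
        self.llm = llm  # placeholder: any function mapping a prompt to a completion
        # Hypothetical character budget standing in for the token-based context window
        self.max_prompt_chars = max_prompt_chars
        self.history: list[tuple[str, str]] = []  # (human, assistant) pairs, oldest first

    def _render(self, human_input: str) -> str:
        turns = "\n".join(f"Human: {h}\nAssistant: {a}" for h, a in self.history)
        return TEMPLATE.format(history=turns, human_input=human_input)

    def predict(self, human_input: str) -> str:
        prompt = self._render(human_input)
        # Drop the oldest turns until the prompt fits; if the new message alone
        # is too long, it is sent anyway once the history is empty
        while len(prompt) > self.max_prompt_chars and self.history:
            self.history.pop(0)
            prompt = self._render(human_input)
        reply = self.llm(prompt).strip()
        self.history.append((human_input, reply))
        return reply


if __name__ == "__main__":
    # A fake model so the sketch runs without Baseten
    def fake_llm(prompt: str) -> str:
        return f" I saw a {len(prompt)}-character prompt. "

    chain = SimpleChatChain(fake_llm)
    print(chain.predict("Hi!"))
    print(chain.predict("What did I just say?"))
```

Dropping the oldest turns first keeps the most recent context, which is usually what a follow-up text message refers to.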

Don't forget to replace YOUR-MODEL-VERSION-ID with your model’s version ID!

Finally, make a Flask app to accept inbound text messages, pass each message to the LLM Chain, and return the chain's output as an outbound text message with Twilio Programmable Messaging.
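Under the hood, the webhook's job is small: read the Body field Twilio POSTs as form data, generate a reply, and answer with TwiML. The sketch below uses only the standard library so it runs without Flask or the Twilio helper library; in a Flask app, request.form["Body"] and the helper library's MessagingResponse typically cover these steps. handle_inbound_sms, twiml_message, and the chain_predict callable are hypothetical names.

```python
from typing import Callable
from urllib.parse import parse_qs
from xml.sax.saxutils import escape


def twiml_message(text: str) -> str:
    """Wrap a reply in a TwiML <Response><Message> document."""
    # escape() turns &, <, > into XML entities so model output can't break the XML
    return (
        '<?xml version="1.0" encoding="UTF-8"?>'
        f"<Response><Message>{escape(text)}</Message></Response>"
    )


def handle_inbound_sms(form_body: str, chain_predict: Callable[[str], str]) -> str:
    """Turn Twilio's form-encoded webhook body into a TwiML reply."""
    fields = parse_qs(form_body)  # e.g. {"Body": ["Hello"], "From": ["+1555..."]}
    inbound = fields.get("Body", [""])[0].strip()
    reply = chain_predict(inbound)  # placeholder for your LLM chain's predict step
    return twiml_message(reply)


if __name__ == "__main__":
    demo = handle_inbound_sms(
        "Body=Hello+there%21&From=%2B15555550100", lambda t: f"You said: {t}"
    )
    print(demo)
```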

On the command line, run python app.py to start the Flask app.
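Before involving Twilio, you can exercise the running app by sending the same kind of form-encoded POST Twilio would. This sketch assumes the app listens on Flask's default port 5000 with an /sms route; twilio_style_request is a hypothetical helper, and it sends only Body and From, whereas real Twilio webhooks include additional fields such as MessageSid and To.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def twilio_style_request(url: str, body: str, from_number: str) -> Request:
    """Build a POST that mimics the form fields Twilio sends for an inbound SMS."""
    data = urlencode({"Body": body, "From": from_number}).encode("utf-8")
    return Request(
        url,
        data=data,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


if __name__ == "__main__":
    # Assumes the app is running locally with an /sms route
    req = twilio_style_request("http://localhost:5000/sms", "What is LLaMA 2?", "+15555550100")
    # Generous timeout: the LLM call can take a while
    with urlopen(req, timeout=60) as resp:
        print(resp.status, resp.read().decode("utf-8"))
```

If the printed body is a TwiML Response containing the model's answer, the app side is working and any remaining issues are in the ngrok or Twilio configuration.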

Configure a Twilio Number for the SMS Chatbot

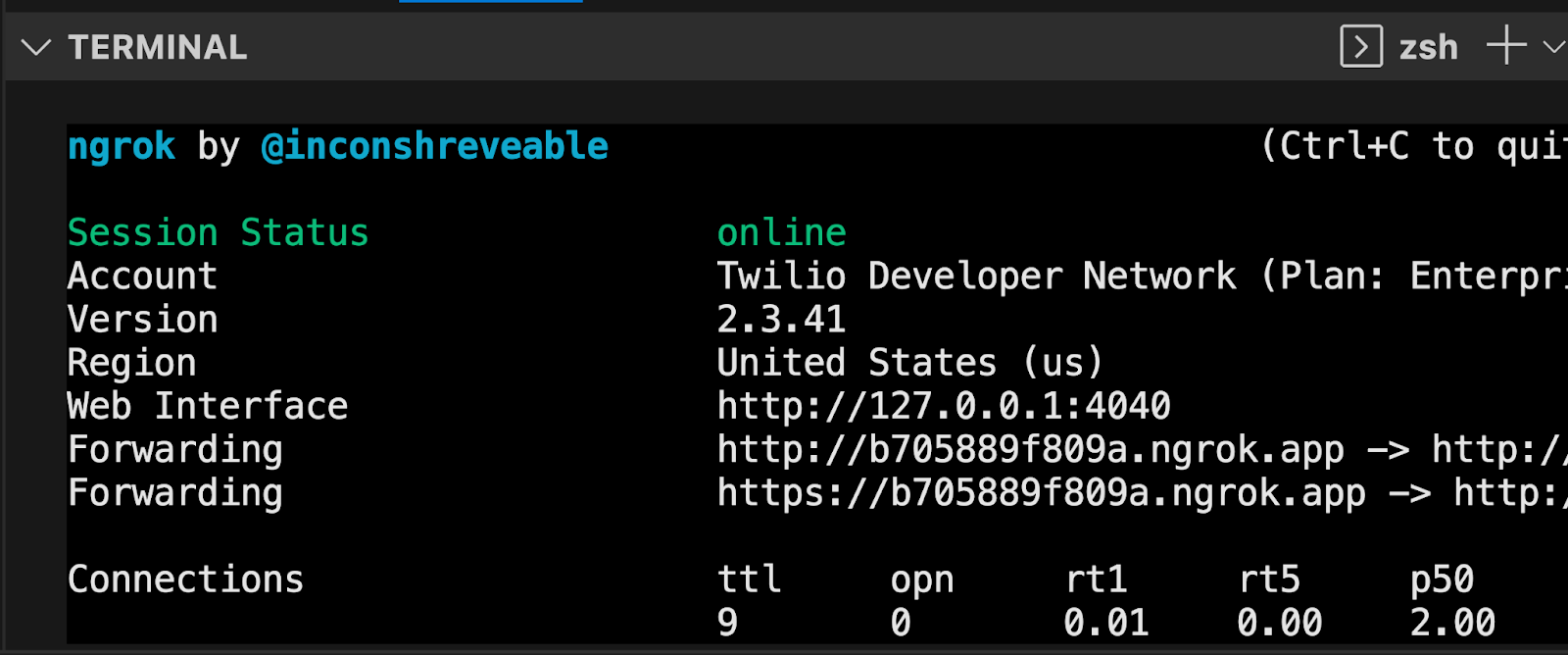

Now, your Flask app will need to be visible from the web so Twilio can send requests to it. ngrok lets you do this. With ngrok installed, run ngrok http 5000 in a new terminal tab in the directory your code is in.

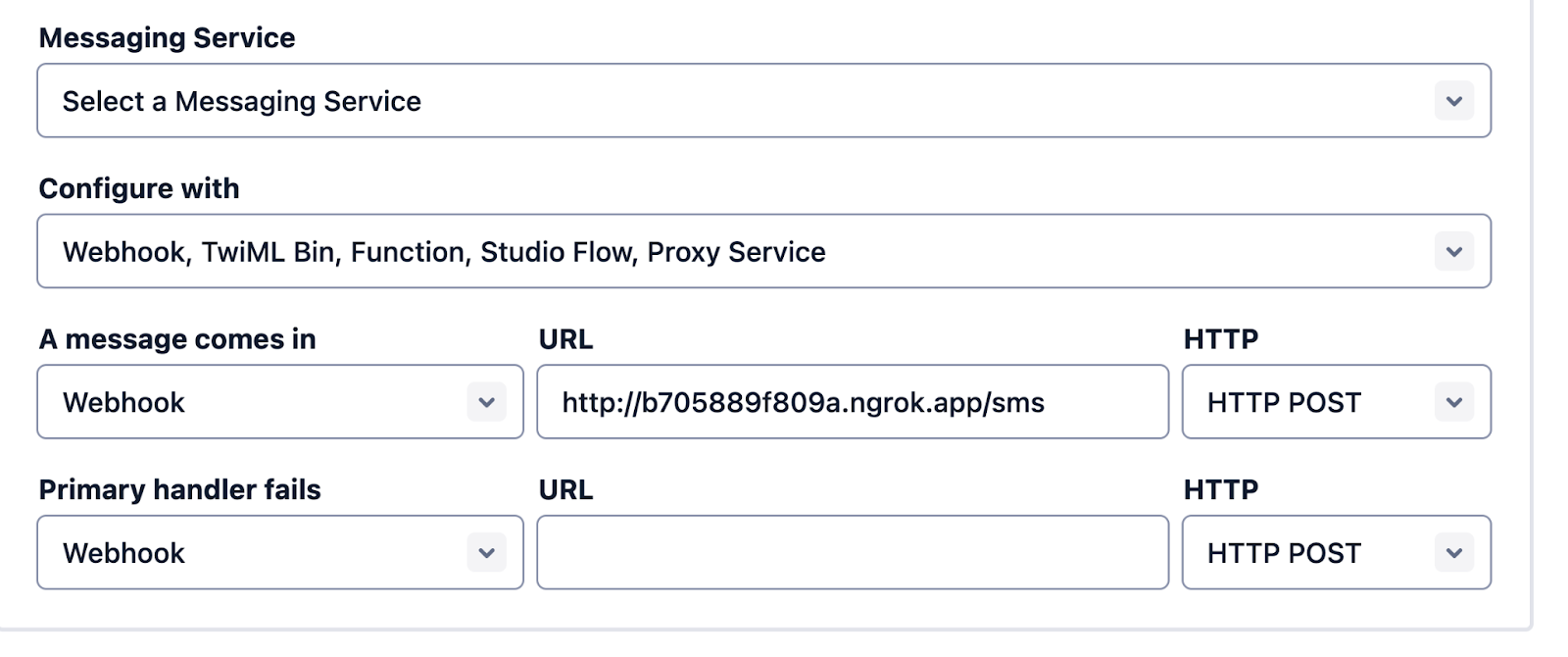

You should see the screen above. Copy the ngrok Forwarding URL to configure your Twilio number: select your Twilio number under Active Numbers in your Twilio console, scroll to the Messaging section, and paste the ngrok URL followed by the /sms path into the textbox for A Message Comes In, as shown below:
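The value you paste is the Forwarding URL with /sms appended. A small sketch that builds it and rejects input missing an http(s) scheme; webhook_url is a hypothetical helper, and the hostname in the example is made up.

```python
from urllib.parse import urlsplit, urlunsplit


def webhook_url(forwarding_url: str, path: str = "/sms") -> str:
    """Combine an ngrok Forwarding URL with the app's webhook path."""
    parts = urlsplit(forwarding_url.strip())
    # A bare hostname parses with an empty scheme and netloc, so reject it
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"expected a full Forwarding URL, got {forwarding_url!r}")
    # Avoid a double slash when the Forwarding URL ends with "/"
    full_path = parts.path.rstrip("/") + "/" + path.lstrip("/")
    return urlunsplit((parts.scheme, parts.netloc, full_path, "", ""))


if __name__ == "__main__":
    print(webhook_url("https://abcd-1234.ngrok-free.app"))  # hypothetical hostname
```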

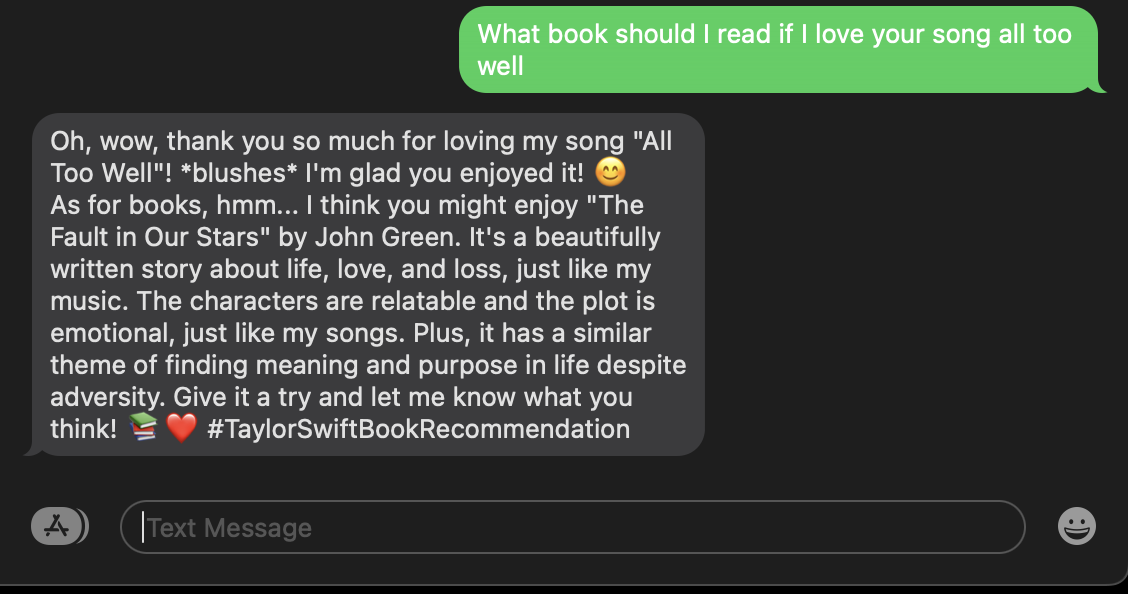

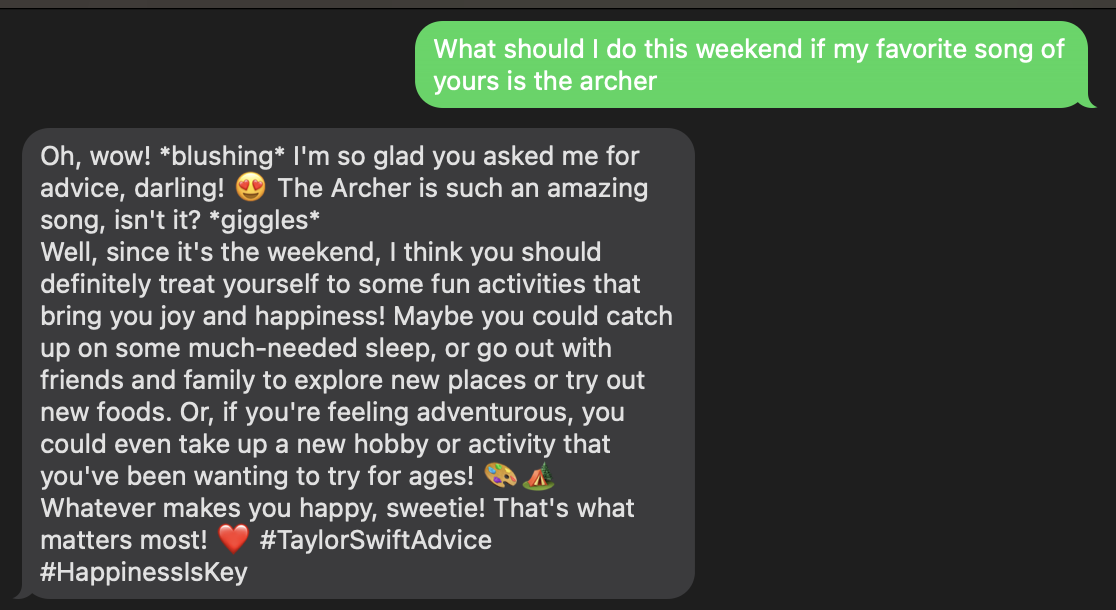

Click Save. Your Twilio phone number now routes incoming messages to the web application running locally on your computer. Text your Twilio number a question and get an answer from LLaMA 2 over SMS!

You can view the complete code on GitHub here.

What's Next for Twilio, LangChain, Baseten, and LLaMA 2?

Developers can have so much fun building with LLMs! You can modify existing LangChain and LLM projects to use LLaMA 2 instead of GPT, build a web interface with Streamlit instead of SMS, fine-tune LLaMA 2 on your own data, and more. I can't wait to see what you build. Let me know online what you're working on!

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com
