Build a Voice AI Application With Twilio ConversationRelay and BentoML


Building a voice AI application traditionally requires combining multiple components, including speech-to-text (STT), text-to-speech (TTS), real-time streaming, and large language models (LLMs). Managing these elements while maintaining low latency and delivering high-quality interactions can be a significant challenge.

In this tutorial, I’ll show you how to simplify this process by using Twilio ConversationRelay and BentoML. By the end of this tutorial, you will have a voice AI application that offers:

- Real-time voice conversations accessible via a phone call to a Twilio number

- Interruption handling, enabling users to redirect conversations

- Streaming responses with minimal latency

- Customizable serving logic with an open-source LLM deployed via BentoML

- Production-ready deployment in the cloud that can scale with traffic

Prerequisites

Make sure you have the following:

- A Twilio account with an available phone number. Sign up for free here.

- A BentoCloud account for model deployment. Sign up for free here.

- Python 3.11 or later installed on your machine

- Git for cloning the project repository

Build the application

Let’s break the development process into manageable steps. I’ll start with the architecture, move on to the setup, and then explain how to handle real-time conversations.

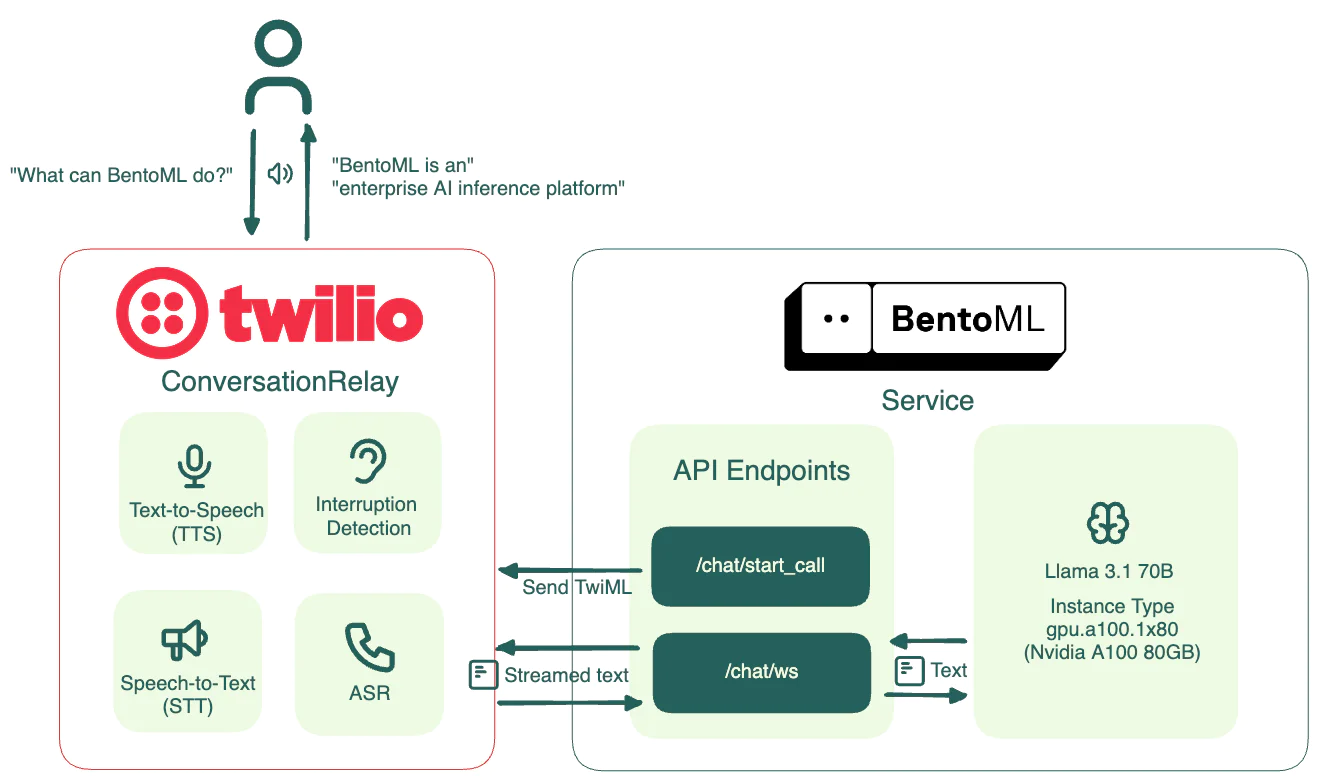

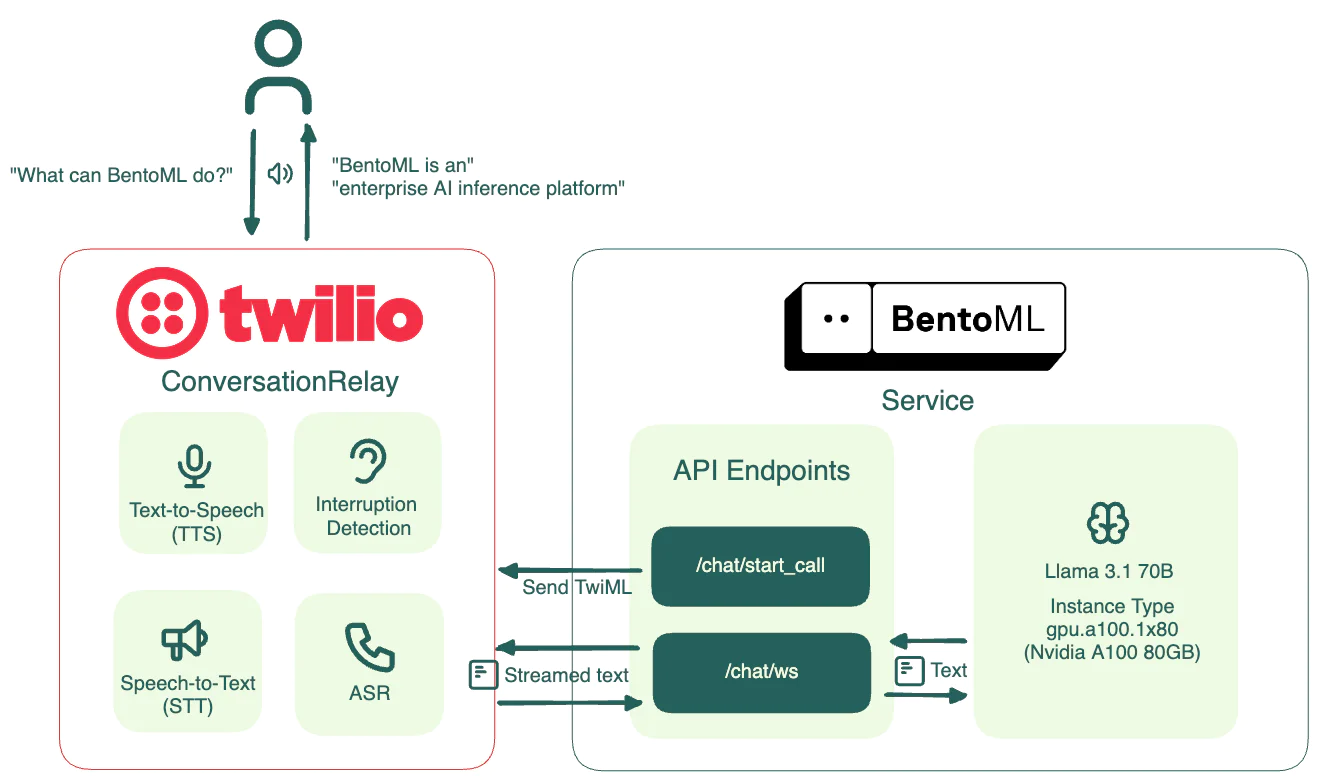

Understand the architecture

ConversationRelay simplifies voice AI development by managing critical features like STT, TTS, real-time streaming, and interruption handling. It eliminates much of the complexity while providing scalability.

With ConversationRelay handling the voice interaction layer, the remaining challenge is implementing and deploying the AI application. This is where BentoML comes in: it is an open-source framework that simplifies model serving, application packaging, and production deployment.

When building the voice AI application, BentoML helps with two crucial aspects in particular:

- It automatically handles hosting and deploying the WebSocket server that carries real-time communication between Twilio and the LLM. This saves you from setting up and maintaining WebSocket infrastructure yourself.

- It lets you switch between LLMs, whether for testing or for deploying updates in production, without changing the existing infrastructure. This enables rapid experimentation.

Here’s how ConversationRelay and BentoML work together in this project:

Workflow:

- A user calls the Twilio phone number configured for the application.

- Twilio triggers the /chat/start_call endpoint, which generates TwiML instructions.

- The TwiML response includes <ConversationRelay>, which tells Twilio to establish a WebSocket connection with the BentoML Service at /chat/ws.

- Twilio converts user voice input to text and streams it to BentoML.

- The BentoML Service passes the transcribed text to Llama 3.1 70B.

- The model generates a response, streamed back to Twilio in real time via the BentoML Service.

- Twilio performs TTS conversion on the response and plays the audio back to the user.
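The code below is a minimal sketch of the JSON messages that travel over the WebSocket in this workflow. It assumes the message shapes described in Twilio's ConversationRelay documentation:

- Twilio sends a `prompt` message whose `voicePrompt` field holds the transcribed speech. Other types, such as `setup` and `interrupt`, carry no speech.
- The application replies with `text` messages, each carrying a `token` and a `last` flag.

The helper names `parse_caller_text` and `to_text_messages` are hypothetical and are not taken from the project's service.py.

```python
import json
from typing import Iterable, List, Optional


def parse_caller_text(raw: str) -> Optional[str]:
    """Return the caller's transcribed speech from a ConversationRelay message.

    Only "prompt" messages carry caller speech (in the "voicePrompt" field);
    other message types, such as "setup" or "interrupt", return None.
    """
    message = json.loads(raw)
    if message.get("type") == "prompt":
        return message.get("voicePrompt")
    return None


def to_text_messages(tokens: Iterable[str]) -> List[str]:
    """Wrap streamed LLM tokens as outgoing "text" messages for Twilio.

    Every token is marked last=False; a final empty token with last=True
    signals that the assistant's reply is complete.
    """
    frames = [json.dumps({"type": "text", "token": t, "last": False}) for t in tokens]
    frames.append(json.dumps({"type": "text", "token": "", "last": True}))
    return frames


incoming = '{"type": "prompt", "voicePrompt": "What is BentoML?", "lang": "en-US", "last": true}'
print(parse_caller_text(incoming))  # What is BentoML?
for frame in to_text_messages(["BentoML ", "serves ", "models."]):
    print(frame)
```

Sending each token as soon as the model produces it is what lets Twilio start speaking before the full reply exists. Closing with an empty `last=True` token is just one way to mark the end of a reply.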

Set up the project

Clone the repository. It contains everything you need for this project.

Install BentoML and log in to BentoCloud.

If you want to run this project locally, install all the dependencies:

Configure the BentoML Service

The application’s core logic is in the service.py file. Let’s walk through its main components.

First, configure the constants that control the model's behavior. Feel free to adjust them as needed.

Here I am using Llama 3.1 70B. Note that BentoML makes it straightforward to switch between AI models, whether for testing different configurations or deploying updates in production. This does not require you to overhaul your deployment infrastructure.
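The constants themselves are not reproduced in this article, so the following is only a hypothetical sketch of what they might look like. The names `MODEL_ID`, `MAX_TOKENS`, `SYSTEM_PROMPT`, and the `build_messages` helper are illustrative assumptions. The model ID assumes the Hugging Face repository `hugging-quants/Meta-Llama-3.1-70B-Instruct-AWQ-INT4`.

```python
from typing import Dict, List

# Hypothetical constants; the project's service.py may use different names and values.
MODEL_ID = "hugging-quants/Meta-Llama-3.1-70B-Instruct-AWQ-INT4"
MAX_TOKENS = 1024
SYSTEM_PROMPT = (
    "You are a helpful voice assistant. Keep answers short and conversational, "
    "and avoid markdown, lists, or symbols that do not read well aloud."
)


def build_messages(history: List[Dict[str, str]], user_text: str) -> List[Dict[str, str]]:
    """Assemble a chat-style message list: system prompt, prior turns, new user turn."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *history,
        {"role": "user", "content": user_text},
    ]


print(build_messages([], "What can you do?"))
```

With this layout, switching models is mostly a matter of changing `MODEL_ID`, plus the GPU resources if the new model needs them.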

Then, create a FastAPI application as an entry point for managing call requests and WebSocket connections. In this project, BentoML converts your model and custom code into two API endpoints:

- /chat/start_call: An HTTP endpoint for initiating Twilio voice calls. It is triggered when someone calls the Twilio number. It reads the BentoCloud Deployment URL from an environment variable and generates a TwiML response that uses <ConversationRelay> to establish a WebSocket connection.

- /chat/ws: A WebSocket endpoint for real-time bidirectional communication, supporting voice streaming. You will configure it later within the BentoML Service.
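The code below is a minimal, stdlib-only sketch of the TwiML that /chat/start_call could return. It makes these assumptions:

- The deployment URL is available in an environment variable named `BENTOCLOUD_DEPLOYMENT_URL`. Check the name your deployment actually exposes.
- The helper `build_start_call_twiml` is hypothetical.

In TwiML, `<ConversationRelay>` is nested inside `<Connect>`, and its `url` attribute points at the `wss://` address of the /chat/ws endpoint.

```python
import os
from urllib.parse import urlparse
from xml.sax.saxutils import quoteattr


def build_start_call_twiml(deployment_url: str, greeting: str) -> str:
    """Build TwiML that hands the call to the /chat/ws WebSocket via ConversationRelay."""
    # Accept either "https://host" or a bare "host" and keep only the host part.
    host = urlparse(deployment_url).netloc or deployment_url.strip("/")
    ws_url = f"wss://{host}/chat/ws"
    # quoteattr escapes characters such as "&" and adds the surrounding quotes.
    return (
        '<?xml version="1.0" encoding="UTF-8"?>'
        "<Response><Connect>"
        f"<ConversationRelay url={quoteattr(ws_url)} welcomeGreeting={quoteattr(greeting)} />"
        "</Connect></Response>"
    )


if __name__ == "__main__":
    # Assumption: BENTOCLOUD_DEPLOYMENT_URL holds the deployment URL; the fallback is a placeholder.
    url = os.environ.get("BENTOCLOUD_DEPLOYMENT_URL", "https://my-deployment.example.com")
    print(build_start_call_twiml(url, "Hi! How can I help you today?"))
```

Inside the FastAPI app, the /start_call route would return this string with an XML media type, for example `fastapi.Response(content=twiml, media_type="application/xml")`.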

Next, define the core BentoML Service.

Use the @bentoml.service decorator to mark a Python class (TwilioChatBot) as a BentoML Service. You can configure parameters like timeout and GPU resources to use in the cloud. For Llama-3.1-70B-Instruct-AWQ-INT4, I recommend you select an NVIDIA A100 80GB GPU.
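A back-of-envelope estimate shows why a single 80 GB GPU is a reasonable choice. It assumes the following:

- Roughly 70 billion parameters stored at 4 bits each.
- An FP16 KV cache.
- The Llama 3.1 70B architecture values from its published config: 80 layers, 8 key-value heads, and a head dimension of 128.

It ignores quantization scales, activations, and runtime overhead, so the real headroom is smaller.

```python
# Back-of-envelope GPU memory estimate for Llama 3.1 70B quantized to INT4 (AWQ).
# Assumptions: ~70B parameters at 4 bits each; quantization scales, activations,
# and CUDA runtime overhead are ignored; the KV cache is stored in FP16.
PARAMS = 70e9
BITS_PER_WEIGHT = 4
GPU_MEMORY_GB = 80  # NVIDIA A100 80GB

# Llama 3.1 70B architecture values (from its published model config).
NUM_LAYERS = 80
NUM_KV_HEADS = 8  # grouped-query attention
HEAD_DIM = 128  # 8192 hidden size / 64 attention heads
KV_BYTES_PER_VALUE = 2  # FP16

weights_gb = PARAMS * BITS_PER_WEIGHT / 8 / 1e9
# One K and one V vector per layer and per KV head, for every cached token.
kv_bytes_per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * KV_BYTES_PER_VALUE
headroom_gb = GPU_MEMORY_GB - weights_gb
max_cached_tokens = int(headroom_gb * 1e9 // kv_bytes_per_token)

print(f"weights: ~{weights_gb:.0f} GB")
print(f"KV cache: {kv_bytes_per_token / 1024:.0f} KiB per token")
print(f"headroom: ~{headroom_gb:.0f} GB, roughly {max_cached_tokens:,} cached tokens")
```

Under these assumptions the weights alone take about 35 GB, which would nearly fill a 40 GB card. An 80 GB A100 leaves roughly 45 GB for the KV cache shared by concurrent calls.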

Additionally, use the @bentoml.mount_asgi_app decorator to mount the FastAPI application at the /chat path. It allows the /start_call endpoint to be served together with the BentoML Service.

Within the Service, define your business logic and add custom APIs.

First, load Llama 3.1 70B from Hugging Face by instantiating a HuggingFaceModel class from bentoml.models. The model is downloaded during image building rather than at Service startup, then cached and mounted directly into containers. This speeds up deployment, reduces cold start time, and improves scaling performance.

Next, define the WebSocket endpoint /ws. It handles real-time communication between Twilio and the LLM via BentoML.
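The project's actual /ws handler is not reproduced here. The code below is a stdlib-only sketch of the interruption-handling logic such an endpoint needs:

- Each `prompt` message starts a streaming reply in a background task.
- An `interrupt` message, or a newer prompt, cancels that task so the assistant stops talking.

The `receive`, `send`, and `generate` callables are hypothetical stand-ins for the WebSocket connection and the LLM stream. `fake_llm` and `demo` exist only to exercise the logic. The message shapes assume ConversationRelay's `prompt`, `interrupt`, and `text` messages.

```python
import asyncio
import json
from typing import AsyncIterator, Awaitable, Callable, List, Optional

# Hypothetical injected callables: in a real service these would wrap the
# WebSocket connection and the LLM's streaming generator.
Receive = Callable[[], Awaitable[Optional[str]]]  # returns None when the caller hangs up
Send = Callable[[str], Awaitable[None]]
Generate = Callable[[str], AsyncIterator[str]]


async def stream_reply(prompt: str, send: Send, generate: Generate) -> None:
    """Stream LLM tokens to Twilio as "text" messages, then mark the reply complete."""
    async for token in generate(prompt):
        await send(json.dumps({"type": "text", "token": token, "last": False}))
    await send(json.dumps({"type": "text", "token": "", "last": True}))


async def handle_session(receive: Receive, send: Send, generate: Generate) -> None:
    """Dispatch ConversationRelay messages, cancelling the current reply on interruption."""
    reply_task: Optional[asyncio.Task] = None

    async def cancel_reply() -> None:
        # Stop a reply that is still streaming and wait for it to unwind.
        if reply_task is not None and not reply_task.done():
            reply_task.cancel()
            await asyncio.gather(reply_task, return_exceptions=True)

    while (raw := await receive()) is not None:
        message = json.loads(raw)
        if message.get("type") == "prompt":
            await cancel_reply()  # a new utterance supersedes any unfinished reply
            reply_task = asyncio.create_task(
                stream_reply(message["voicePrompt"], send, generate)
            )
        elif message.get("type") == "interrupt":
            await cancel_reply()  # the caller talked over the assistant
    await cancel_reply()  # the caller hung up


async def fake_llm(prompt: str) -> AsyncIterator[str]:
    """Stand-in for the real model: yields one word every 50 ms."""
    for word in f"You said: {prompt}. Here is a long answer".split():
        await asyncio.sleep(0.05)
        yield word + " "


async def demo() -> List[dict]:
    # Scripted caller: (delay in seconds, message); None means hang up.
    script = [
        (0.0, {"type": "setup"}),
        (0.0, {"type": "prompt", "voicePrompt": "hello", "last": True}),
        (0.12, {"type": "interrupt"}),
        (0.0, {"type": "prompt", "voicePrompt": "stop", "last": True}),
        (1.0, None),
    ]
    sent: List[dict] = []

    async def receive() -> Optional[str]:
        delay, message = script.pop(0)
        await asyncio.sleep(delay)
        return None if message is None else json.dumps(message)

    async def send(raw: str) -> None:
        sent.append(json.loads(raw))

    await handle_session(receive, send, fake_llm)
    return sent


if __name__ == "__main__":
    for frame in asyncio.run(demo()):
        print(frame)
```

In a FastAPI or BentoML endpoint, `send` could be `websocket.send_text`. Starlette's `websocket.receive_text()` raises `WebSocketDisconnect` on hang-up instead of returning None, so a small wrapper would translate that. Cancelling the generation task, rather than just discarding tokens, can also free GPU time if the underlying engine aborts a request when its stream is closed.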

See the complete code for more details.

Deploy the application to BentoCloud

BentoCloud is an AI inference platform that provides optimized infrastructure to build and scale AI applications. It allows you to keep AI deployments in your own VPC for better data control.

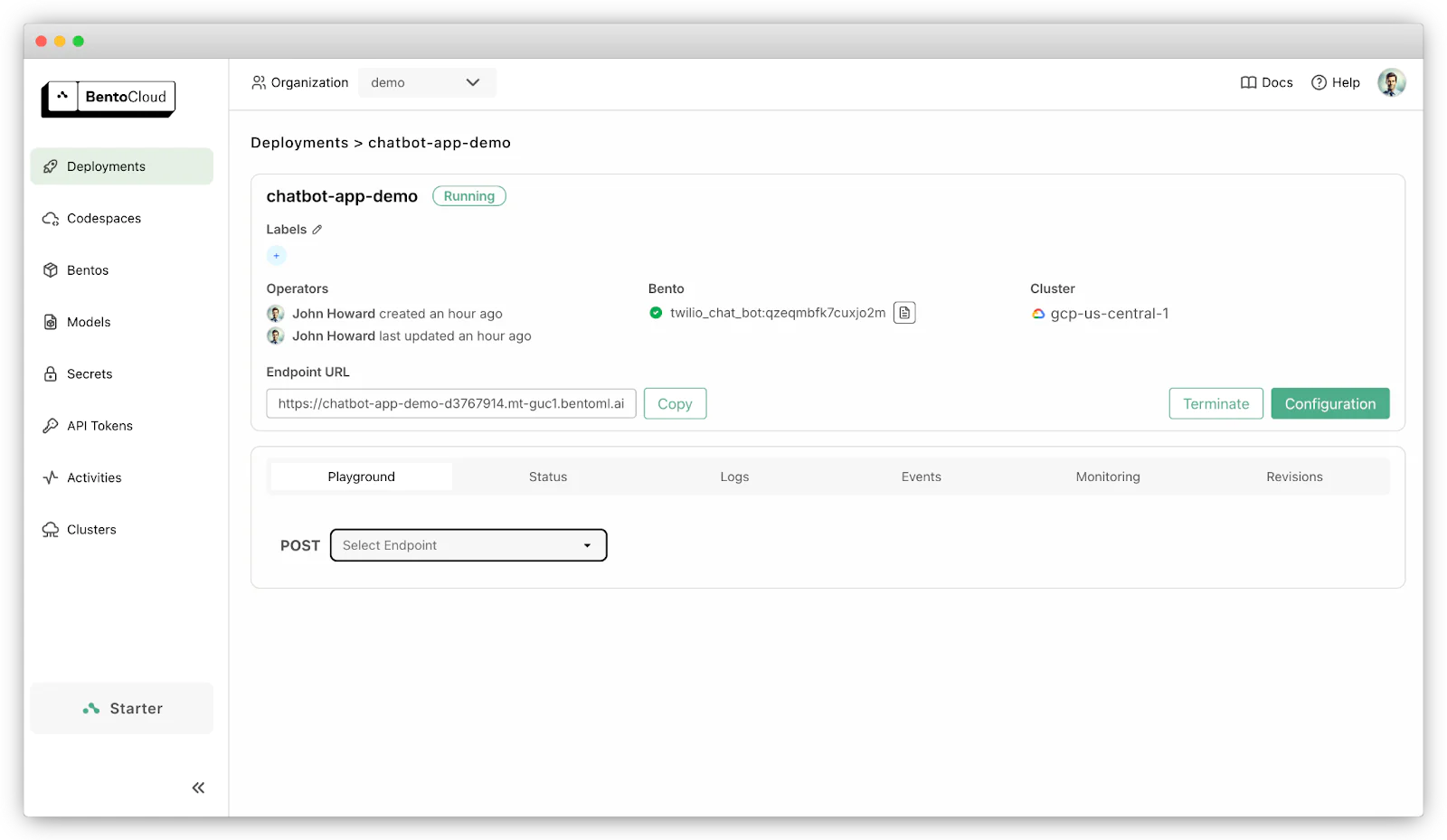

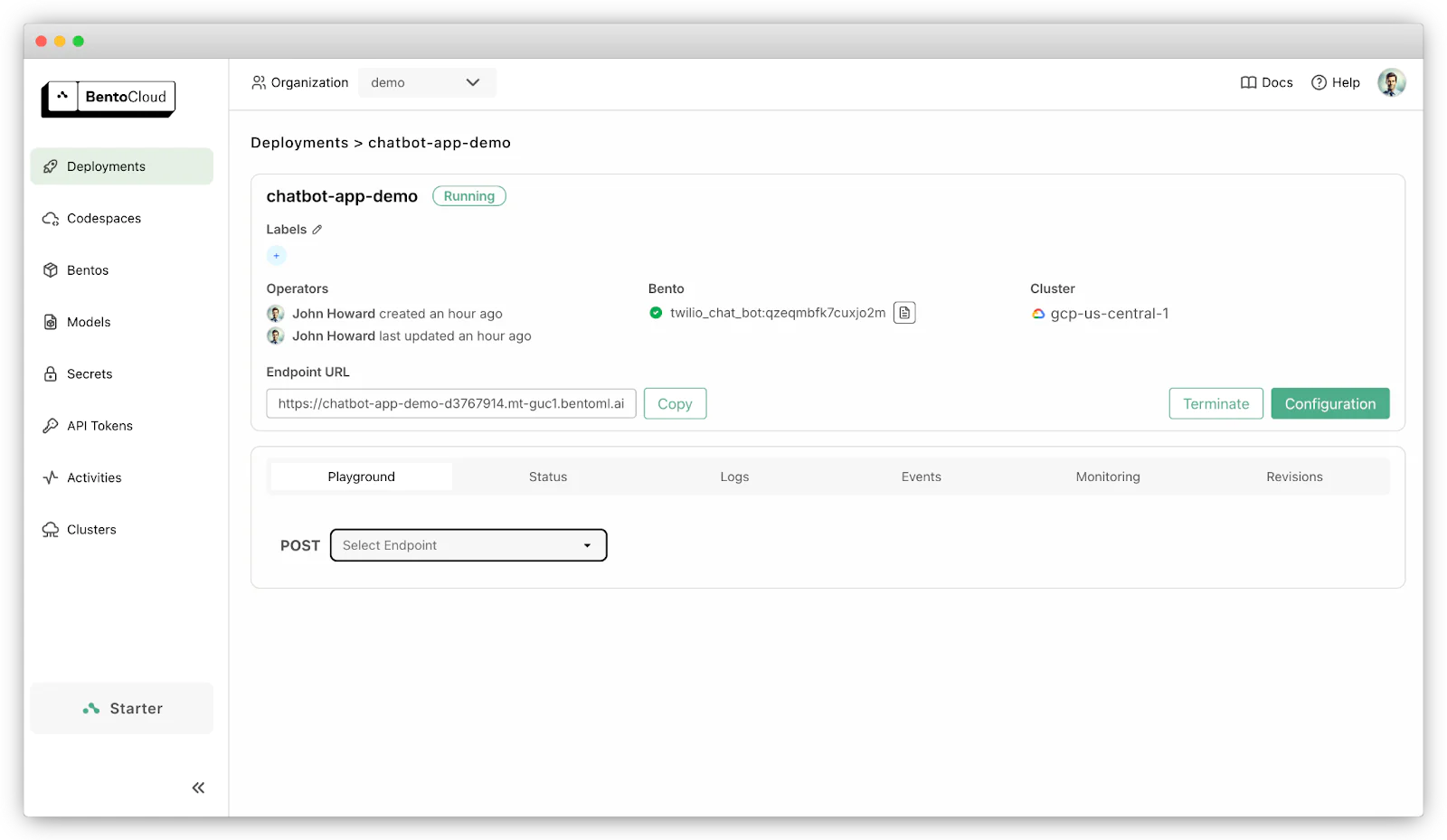

After building the Service with BentoML, deploy it to BentoCloud via bentoml deploy. Use the -n flag to specify the name.

Wait for it to be ready. Once deployed, navigate to the Deployment details page and copy the Endpoint URL.

Voice AI application deployed on BentoCloud

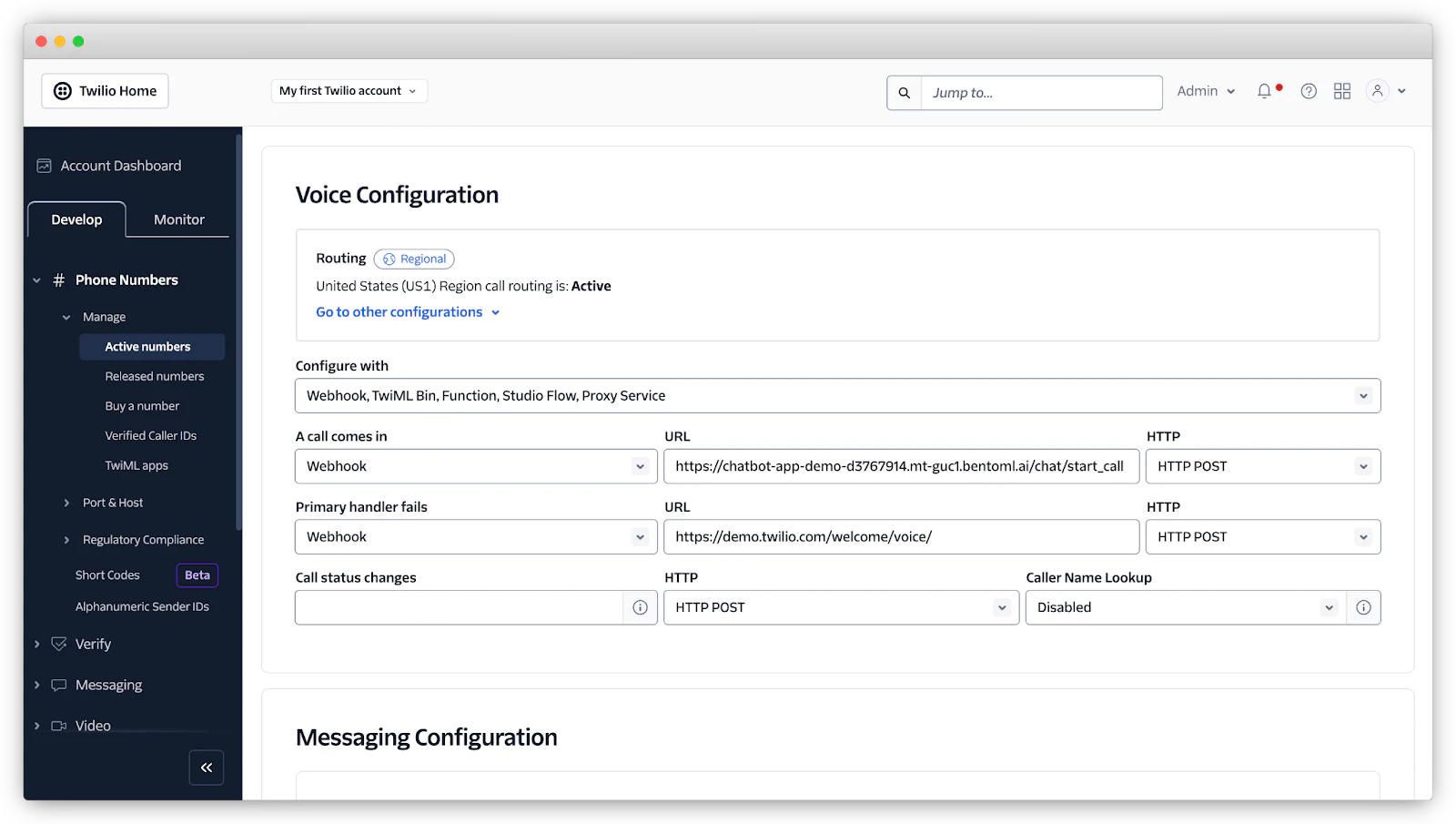

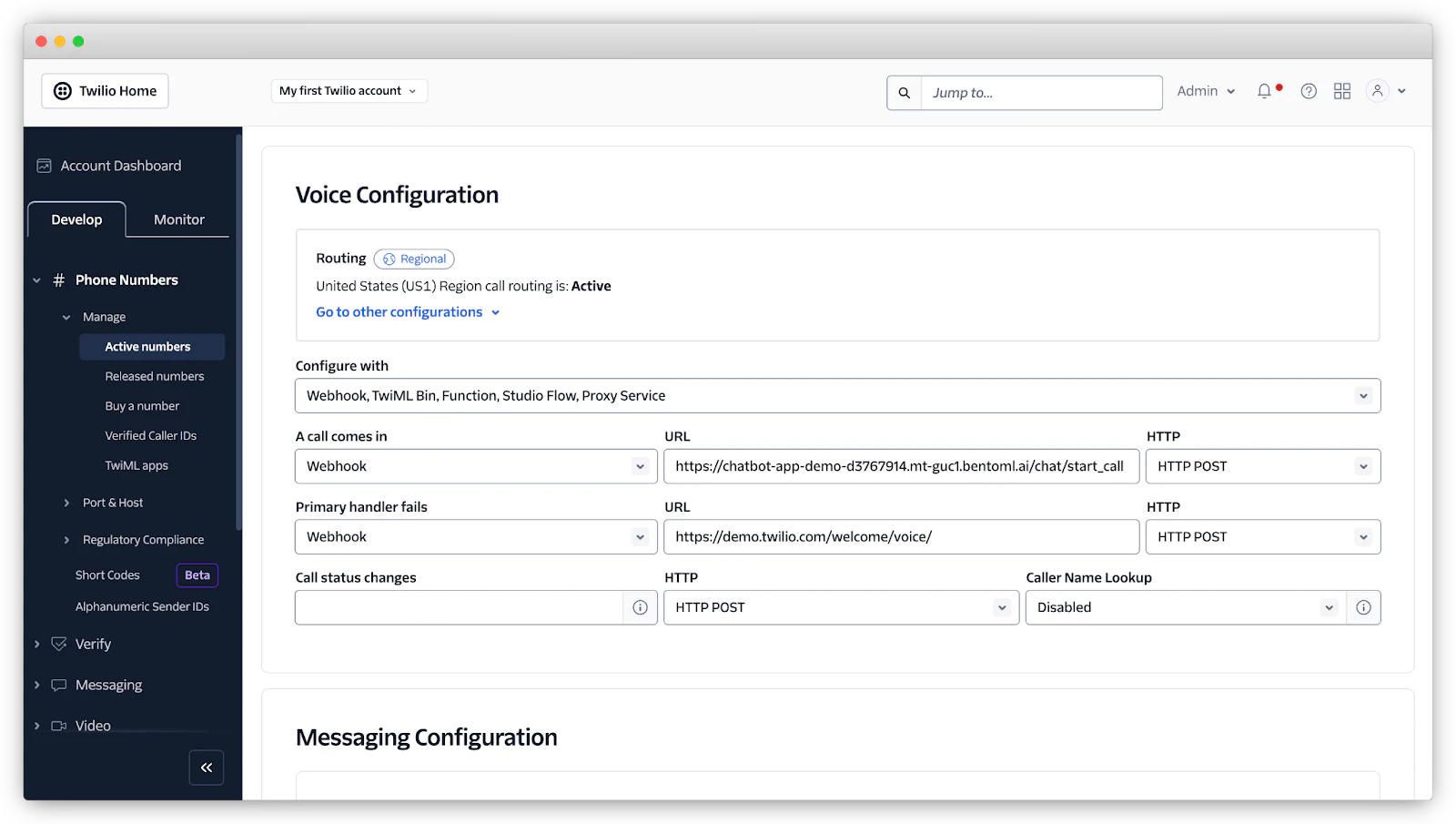

Configure Twilio

Go to your Twilio Console and select the phone number you want to use. Under Voice Configuration, select Webhook for "A call comes in". In the URL field, paste your BentoCloud endpoint URL with /chat/start_call appended.

Test the application

Now, make a call to your Twilio phone number to test your voice AI application. You’ll hear a greeting and can begin interacting with your AI in real time. The assistant supports dynamic interruptions, allowing you to redirect the conversation at any moment.


Conclusion

In this tutorial, you built a production-ready voice AI application using ConversationRelay and BentoML. ConversationRelay handles the complexities of voice processing, such as STT, TTS, streaming, and interruptions. BentoML gives you full control over your LLM implementation and deployment.

Want to take it further? All the source code of this project is available here. You can customize it with your own prompts, retrieval-augmented generation (RAG), or tool-use workflows.

Sherlock Xu is a Content Strategist at BentoML. An open-source enthusiast and active technical blogger, Sherlock is keen on creating clear and engaging content about AI technologies. You can follow him on LinkedIn, X, and GitHub.
