Build a Real-Time Voice AI Assistant with Twilio's ConversationRelay, LiteLLM, and Python


Voice AI assistants are evolving rapidly, and real-time interactions with LLMs are becoming smoother and more human-like. Twilio’s ConversationRelay helps you build on the trend – it lets you quickly integrate an LLM of your choice with the voice channel over Twilio Voice.

This project integrates Twilio's ConversationRelay with LiteLLM, which lets you choose among multiple large language model (LLM) providers through a single standardized API. In this tutorial, I’ll show you how to use ConversationRelay and LiteLLM to integrate LLMs from OpenAI, Anthropic, and DeepSeek with Twilio Voice in Python. Let’s get started!

Features

This Voice AI assistant is designed for quick integration with Twilio Voice. Here are the features of the setup you’ll build:

- Real-time streaming responses via a WebSocket server (using the FastAPI framework)

- Multi-provider LLM support using LiteLLM

- Smoother voice interactions, thanks to a system prompt that tells the LLM it’s acting as a voice assistant

- A straightforward Twilio Voice integration through ConversationRelay, letting you call the AI and chat at any hour!

Prerequisites

To get started, ensure you have the following:

- Python 3.8+

- API keys for the LLM providers you’d like to test (this demo supports OpenAI, Anthropic, and DeepSeek)

- ngrok for exposing your test server to Twilio

- A Twilio account and a registered phone number

- You can sign up for a free Twilio account here

- Search for and purchase a Twilio phone number with these instructions (make sure you select one with Voice support).

Install the server

1. Clone the repository:

2. Create and enter a virtual environment:

3. Install dependencies:

4. Configure API keys: Create a .env file and add your API credentials:

5. Pick which LLM provider to use by setting the model in the draft_response function.

6. Improve or customize the system prompt as needed, using the voice-specific version below as a reference. (You can see our best practices here.)
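Steps 4 to 6 might look roughly like the following in Python. This is a minimal sketch under stated assumptions, not the repository's code: the helper names (missing_key, build_messages), the PROVIDER setting, the prompt wording, and the model identifiers are all illustrative, and the real draft_response may have a different signature. The environment variable names are the ones LiteLLM reads by default, and the streaming call is litellm.completion with stream=True.

```python
import os

# Environment variables LiteLLM reads by default for each provider.
PROVIDER_KEYS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "deepseek": "DEEPSEEK_API_KEY",
}

# Example model identifiers in LiteLLM's naming scheme (assumptions;
# check the LiteLLM docs for the models currently available to you).
MODELS = {
    "openai": "gpt-4o-mini",
    "anthropic": "anthropic/claude-3-5-sonnet-20240620",
    "deepseek": "deepseek/deepseek-chat",
}

PROVIDER = "openai"  # hypothetical setting: change this to switch providers

# Illustrative voice-oriented system prompt, not the repository's wording.
SYSTEM_PROMPT = (
    "You are a helpful assistant speaking with a caller over the phone. "
    "Your replies are converted to speech, so keep them short and "
    "conversational, spell out numbers, and avoid emojis, bullet points, "
    "and special characters."
)


def missing_key(provider, env=None):
    """Return the name of the provider's API key variable if it is unset, else None."""
    env = os.environ if env is None else env
    name = PROVIDER_KEYS[provider]
    return None if env.get(name) else name


def build_messages(history, user_text):
    """Put the system prompt first, then prior turns, then the new user turn."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *history,
        {"role": "user", "content": user_text},
    ]


def draft_response(history, user_text, provider=PROVIDER):
    """Yield the reply token by token (hypothetical shape of draft_response)."""
    import litellm  # imported lazily so the helpers above work without it

    stream = litellm.completion(
        model=MODELS[provider],
        messages=build_messages(history, user_text),
        stream=True,
    )
    for chunk in stream:
        token = chunk.choices[0].delta.content
        if token:  # some chunks carry no text (for example, the final one)
            yield token
```

If the keys live in a .env file, something like python-dotenv's load_dotenv() would need to run before the first call. Calling missing_key(PROVIDER) at startup is one way to fail early with a clear message instead of on the first phone call.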

Run the server

1. Start the WebSocket server:

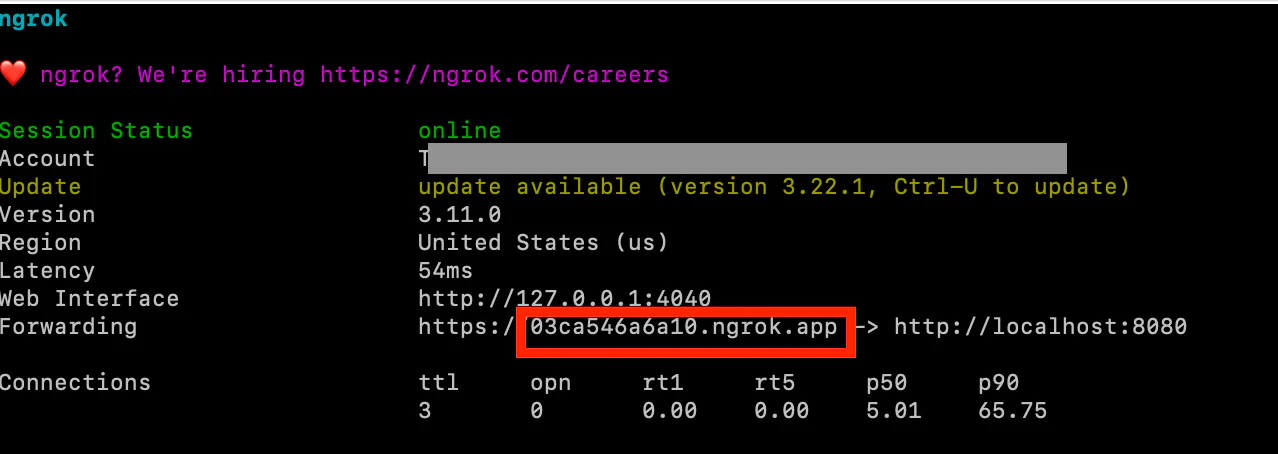

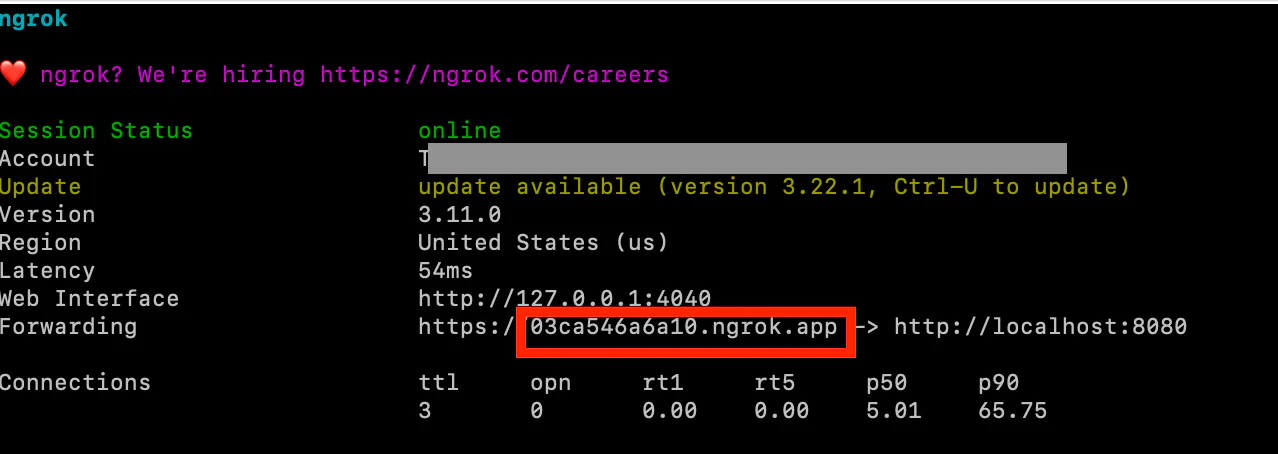

2. Expose the server with ngrok:

3. Copy the HTTPS URL that ngrok provides, without the scheme (‘https://’). For example, I copied ‘03ca546a6a10.ngrok.app’.
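For orientation, here is a rough sketch of what the WebSocket side of such a server could look like. It is written under assumptions rather than taken from the repository: the CallSession class, the create_app factory, and the /ws path are hypothetical names. The message shapes follow my reading of the ConversationRelay WebSocket protocol: Twilio sends JSON messages such as "setup" (with callSid) and "prompt" (with the transcribed speech in voicePrompt), and the server answers with "text" messages carrying a token and a last flag.

```python
import json


class CallSession:
    """Per-call state: one instance per WebSocket connection."""

    def __init__(self, reply_fn):
        # reply_fn(history, user_text) -> iterable of text tokens from the LLM
        self.reply_fn = reply_fn
        self.history = []
        self.call_sid = None

    def on_message(self, raw):
        """Yield the JSON-serialisable frames to send back for one incoming message."""
        msg = json.loads(raw)
        kind = msg.get("type")
        if kind == "setup":
            self.call_sid = msg.get("callSid")
            return
        if kind == "prompt":
            user_text = msg.get("voicePrompt", "")
            tokens = []
            for token in self.reply_fn(self.history, user_text):
                tokens.append(token)
                # Stream each token as soon as it arrives so speech can start early.
                yield {"type": "text", "token": token, "last": False}
            self.history.append({"role": "user", "content": user_text})
            self.history.append({"role": "assistant", "content": "".join(tokens)})
            # An empty final token marks the end of this reply.
            yield {"type": "text", "token": "", "last": True}
        # Other message types (interrupt, dtmf, error) are ignored in this sketch.


def create_app(reply_fn):
    """Wire CallSession into a FastAPI WebSocket endpoint (path is an assumption)."""
    from fastapi import FastAPI, WebSocket, WebSocketDisconnect  # lazy import

    app = FastAPI()

    @app.websocket("/ws")
    async def ws_endpoint(websocket: WebSocket):
        await websocket.accept()
        session = CallSession(reply_fn)
        try:
            while True:
                raw = await websocket.receive_text()
                for frame in session.on_message(raw):
                    await websocket.send_json(frame)
        except WebSocketDisconnect:
            pass  # the caller hung up

    return app
```

Keeping the protocol handling in a plain class makes it easy to exercise without a live call. Note that a synchronous reply_fn blocks the event loop while the LLM streams; for anything beyond a demo, an async variant would be a better fit.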

Connect Twilio ConversationRelay

Now, we’ll integrate our server with Twilio Voice using ConversationRelay.

1. Set up a TwiML Bin with the ngrok URL (without the scheme) you copied in the last step. (You can create a TwiML Bin from your Twilio Console.)

2. Link your Twilio phone number to the TwiML Bin. In your phone number's settings, under Voice Configuration, set the "A call comes in" dropdown to your TwiML Bin.

3. Now you’re ready – make a test call to your Twilio number and interact with the AI assistant in real time!
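As a reference for step 1, the TwiML that goes into the bin can be generated with a few lines of Python. This is a sketch under assumptions: the helper names, the /ws path, and the greeting text are illustrative, and the path must match whatever route your WebSocket server actually exposes. The Connect and ConversationRelay elements and the url and welcomeGreeting attributes come from Twilio's TwiML; the URL uses the wss:// scheme with the ngrok host.

```python
from urllib.parse import urlsplit
from xml.sax.saxutils import quoteattr


def host_only(url):
    """Return just the host, whether or not the input includes a scheme or path."""
    parts = urlsplit(url if "://" in url else "//" + url)
    return parts.netloc


def conversation_relay_twiml(ngrok_url, path="/ws", greeting="Hi! Ask me anything."):
    """Build TwiML that connects an incoming call to the WebSocket server."""
    ws_url = f"wss://{host_only(ngrok_url)}{path}"
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        "<Response>\n"
        "  <Connect>\n"
        f"    <ConversationRelay url={quoteattr(ws_url)}"
        f" welcomeGreeting={quoteattr(greeting)} />\n"
        "  </Connect>\n"
        "</Response>"
    )


if __name__ == "__main__":
    # Paste the printed XML into the TwiML Bin.
    print(conversation_relay_twiml("03ca546a6a10.ngrok.app"))
```

Using quoteattr keeps the output valid XML even if the greeting contains characters such as & or quotation marks.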

Conclusion

And there you have it. Your Voice AI assistant brings together Twilio's powerful ConversationRelay and LiteLLM's provider flexibility to quickly create a hotline for you to call an LLM. As you saw, you can switch between providers to test the capabilities of various LLMs for your use cases.

Want to extend its capabilities? Check out the ConversationRelay docs, or see our other ConversationRelay tutorials for topics such as advanced interruption handling and function calling. The possibilities are endless!

Hao Wang is a Solution Architect at Twilio, dedicated to empowering customers to maximize the potential of Twilio’s products. With a strong passion for emerging technologies and Voice AI, Hao is always exploring innovative ways to drive impactful solutions.
