Transcribe phone calls in real-time using C# .NET with AssemblyAI and Twilio

In this tutorial, you’ll build an application that transcribes a phone call to text in real time using C# .NET. When someone calls your Twilio phone number, you will use the Media Streams API to stream the call audio to your ASP.NET Core WebSocket server. Your server will pass that audio to AssemblyAI's Streaming Speech-to-Text service and receive the transcript back while the caller is still speaking.

Prerequisites

You'll need these things to follow along:

- .NET 8 (earlier versions of .NET will work as well with minor adjustments)

- A Twilio account

- A Twilio phone number

- Experience with the Twilio voice webhook and TwiML

- The ngrok CLI (or an alternative tunneling service)

- An upgraded AssemblyAI account

Create a WebSocket server for Twilio media streams

You'll need to create an empty ASP.NET Core project.

Open up your terminal and run the following commands to create a blank ASP.NET Core project:

Then add the Twilio helper library for ASP.NET Core by running `dotnet add package Twilio.AspNet.Core`.

Next, update the Program.cs file with the following C# code:

The code above responds to HTTP POST requests at /voice with the following TwiML:

This TwiML tells Twilio to speak a message to the caller using the <Say> verb, and then to open a media stream to your WebSocket server using the <Connect> verb with a nested <Stream>.
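To make the shape of that TwiML concrete, here is a minimal sketch that builds the same kind of document with `System.Xml.Linq` instead of the Twilio helper library, so it runs without NuGet packages. The function name `BuildVoiceTwiml`, the `/stream` path, and the greeting text are hypothetical placeholders, not the article's values; the only fixed requirement it encodes is that the `<Stream>` URL uses the `wss://` scheme.

```csharp
using System;
using System.Xml.Linq;

// Builds TwiML that speaks a greeting, then streams the call audio to a WebSocket server.
// Assumption: "/stream" is a hypothetical path; use the path your WebSocket handler listens on.
string BuildVoiceTwiml(string host, string greeting)
{
    // Twilio expects the <Stream> url to use the wss:// scheme.
    var streamUrl = $"wss://{host}/stream";

    var response = new XElement("Response",
        new XElement("Say", greeting),
        new XElement("Connect",
            new XElement("Stream", new XAttribute("url", streamUrl))));

    return response.ToString();
}

// Example: in the real app the host would come from the incoming request (your ngrok domain).
Console.WriteLine(BuildVoiceTwiml("example.ngrok-free.app", "Hello! Start talking to see your words transcribed."));
```

Deriving the WebSocket URL from the request host, rather than hard-coding it, keeps the TwiML valid whenever ngrok hands out a new forwarding domain.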

Next, add the following WebSocket server code before `app.Run();`:

The code above starts a WebSocket server and handles the different media stream messages that Twilio will send.
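Each Twilio message arrives as a JSON text frame whose `event` field is `connected`, `start`, `media`, or `stop` (among others); for `media` events, `media.payload` carries the audio as base64. The sketch below shows one way to dispatch on those messages with `System.Text.Json`. It assumes the message text has already been read from the WebSocket, and `HandleTwilioMessage` is a hypothetical name, not the article's handler.

```csharp
#nullable enable
using System;
using System.Text.Json;

// Handles one Twilio Media Streams message and returns the decoded audio bytes
// for "media" events, or null for every other event type.
byte[]? HandleTwilioMessage(string json)
{
    using var doc = JsonDocument.Parse(json);
    var root = doc.RootElement;
    var eventType = root.GetProperty("event").GetString();

    switch (eventType)
    {
        case "connected":
            Console.WriteLine("Twilio connected");
            return null;
        case "start":
            Console.WriteLine($"Stream started: {root.GetProperty("streamSid").GetString()}");
            return null;
        case "media":
            // The payload is base64-encoded 8 kHz mu-law audio.
            var payload = root.GetProperty("media").GetProperty("payload").GetString()!;
            return Convert.FromBase64String(payload);
        case "stop":
            Console.WriteLine("Stream stopped");
            return null;
        default:
            Console.WriteLine($"Unhandled event: {eventType}");
            return null;
    }
}

var audio = HandleTwilioMessage("{\"event\":\"media\",\"streamSid\":\"MZ123\",\"media\":{\"track\":\"inbound\",\"payload\":\"/38=\"}}");
Console.WriteLine(audio?.Length); // 2
```

Returning the decoded bytes instead of logging them keeps the parsing separate from whatever consumes the audio, which is where the transcription step plugs in later.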

That's all the code you’ll need to implement the Twilio part of this application. Now you can test the application.

Run the application from your terminal with `dotnet run`.

For Twilio to reach your server, your application has to be publicly accessible. Open a separate shell and run `ngrok http <YOUR_ASPNET_URL>` to tunnel your locally running server to the internet, replacing <YOUR_ASPNET_URL> with the http://localhost URL that your ASP.NET Core application prints to the console.

Now copy the Forwarding URL that the ngrok command outputs. It should look something like https://d226-71-163-163-158.ngrok-free.app.

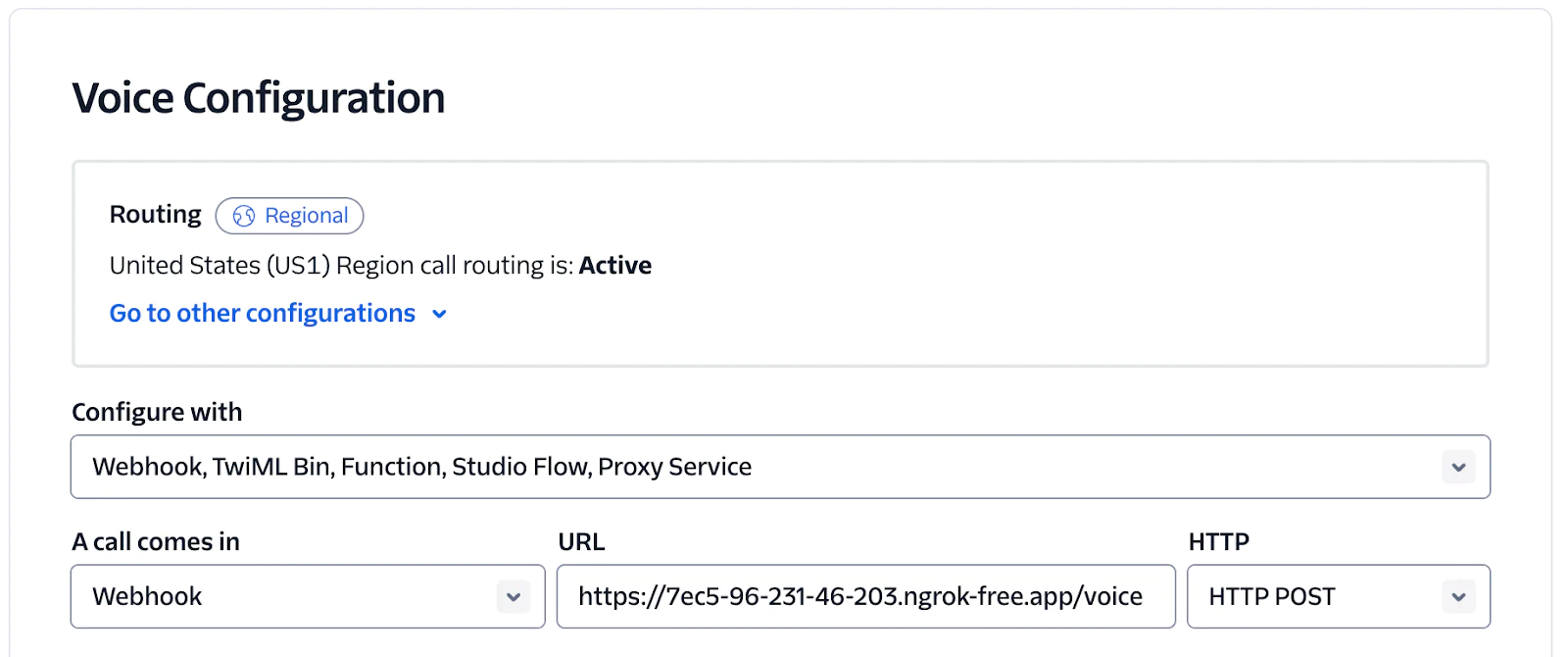

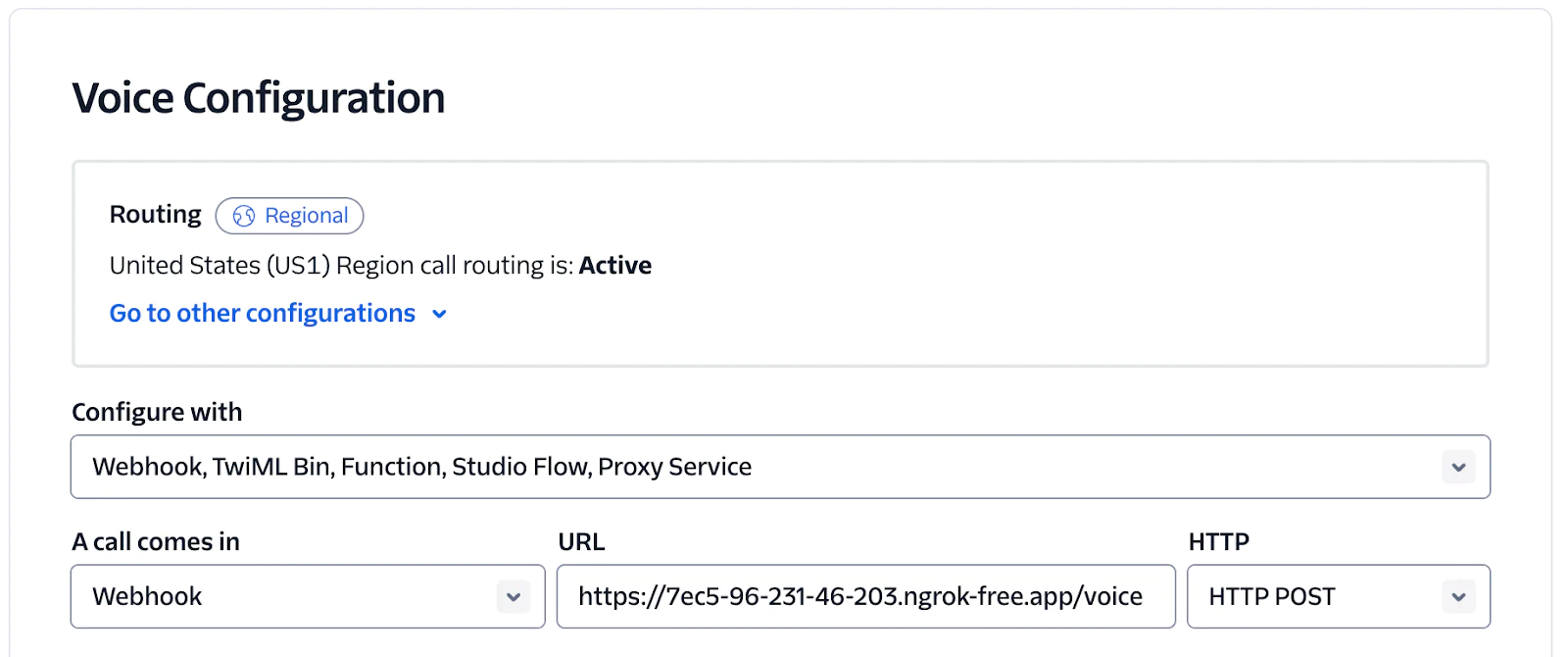

Go to the Twilio Console, navigate to your active phone numbers, and click on your Twilio phone number.

Update the Voice Configuration:

- A call comes in: Webhook

- URL: your ngrok forwarding URL followed by /voice

- HTTP: HTTP POST

Scroll to the bottom of the page and click Save configuration.

Call your Twilio phone number, say a few words, and hang up.

Then check the output in the terminal where the application is running.

You'll see log entries for the different media stream events, along with a flood of media messages carrying the call audio.

Great job! You finished one half of the puzzle. Now it's time to solve the other half.

Transcribe the media stream using AssemblyAI real-time transcription

You're already receiving the audio from the Twilio voice call. Now, you must forward the audio to AssemblyAI's real-time transcription service to turn it into text.

Stop the running application on your terminal, but leave the ngrok tunnel running.

Add the AssemblyAI .NET SDK to your project by running `dotnet add package AssemblyAI`.

Open the Program.cs file and add `using AssemblyAI.Realtime;` at the top to import the AssemblyAI.Realtime namespace.

The real-time transcriber needs your AssemblyAI API key to authenticate. You can find your API key in your AssemblyAI dashboard. Run the following command to store the API key using the .NET Secret Manager:

Next, configure the dependency injection container to create the RealtimeTranscriber. Configure the transcriber for a sample rate of 8000 Hz and mu-law encoding, because that is the format in which Twilio sends the audio.
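Twilio's audio is single-channel, 8-bit mu-law at 8000 samples per second, so each byte is one sample and 8000 bytes hold one second of audio. Because the transcriber is configured to accept mu-law directly, the app never has to decode it; the sketch below only illustrates the format, using the standard G.711 mu-law-to-16-bit-PCM expansion plus a byte-count-to-duration helper. Both function names are hypothetical.

```csharp
using System;

// Expands one G.711 mu-law byte into a 16-bit linear PCM sample.
short MuLawToPcm16(byte muLaw)
{
    int value = ~muLaw & 0xFF;              // mu-law bytes are stored bit-inverted
    int sign = value & 0x80;                // top bit: sign
    int exponent = (value >> 4) & 0x07;     // next 3 bits: segment (exponent)
    int mantissa = value & 0x0F;            // low 4 bits: step within the segment
    int magnitude = (((mantissa << 3) + 0x84) << exponent) - 0x84; // 0x84 (132) is the mu-law bias
    return (short)(sign != 0 ? -magnitude : magnitude);
}

// One byte per sample at 8000 samples per second.
double DurationMs(int byteCount) => byteCount * 1000.0 / 8000;

Console.WriteLine(MuLawToPcm16(0xFF)); // 0
Console.WriteLine(MuLawToPcm16(0x80)); // 32124
Console.WriteLine(MuLawToPcm16(0x00)); // -32124
Console.WriteLine(DurationMs(160));    // 20
```

The logarithmic segments give small-amplitude speech finer steps than loud sounds, which is why 8 bits per sample are enough for telephone-quality voice.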

Now, update the WebSocket handler to pass the audio from Twilio to AssemblyAI and log the transcripts.

The code above requests a RealtimeTranscriber from the dependency injection container, subscribes to its lifecycle and transcript events, and connects to the real-time transcription service. When Twilio sends audio data, the handler passes it to the real-time service with `realtimeTranscriber.SendAudioAsync(audio)`.

The partial and final transcript events both update the transcriptTexts dictionary, using each word's start time as the key and the word's text as the value. This way, when a final transcript arrives, its words overwrite the partial words stored under the same start times. The BuildTranscript local function then joins the dictionary values into a single string.
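The keyed-update idea can be shown without the SDK. In this minimal sketch, plain `(StartMs, Text)` tuples stand in for the SDK's word objects (a hypothetical shape), and a `SortedDictionary` is used so the words are always joined in start-time order. `transcriptTexts` and `BuildTranscript` mirror the names described above; `ApplyWords` is a hypothetical helper standing in for the event handlers.

```csharp
using System;
using System.Collections.Generic;

// Words keyed by start time in milliseconds. Writing the same key again replaces the word,
// so a final transcript overwrites the partial words it corrects.
var transcriptTexts = new SortedDictionary<int, string>();

// Stand-in for the partial/final transcript event handlers.
void ApplyWords(IEnumerable<(int StartMs, string Text)> words)
{
    foreach (var (startMs, text) in words)
        transcriptTexts[startMs] = text;
}

string BuildTranscript() => string.Join(" ", transcriptTexts.Values);

// A partial transcript arrives first...
ApplyWords(new[] { (0, "hello"), (400, "word") });
// ...then the final transcript corrects the second word and adds a third.
ApplyWords(new[] { (0, "Hello"), (400, "world,"), (900, "Twilio.") });

Console.WriteLine(BuildTranscript()); // Hello world, Twilio.
```

This approach relies on the final transcript reporting the same start times as the partial words it replaces; a word whose start time shifts between the two would leave its stale partial version in the dictionary.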

Test the application

That's all the code you need to write. Let's test it out. Start the ASP.NET Core application (leave ngrok running), and give your Twilio phone number a call. As you speak in the call, you'll see your words printed on the console.

Extending your AssemblyAI application

In this tutorial, you learned how to create an ASP.NET Core WebSocket application that handles Twilio media streams to receive the audio of a voice call, and how to transcribe that audio to text in real time using AssemblyAI's Streaming Speech-to-Text.

You can build on this to create many types of voice applications. For example, you could pass the final transcript to a Large Language Model (LLM) to generate a response, then use a text-to-speech service to turn the response text into audio.

You can inform the LLM that there are specific actions that the caller can take. You can then ask the LLM to identify the action the caller prefers based on their final transcript and execute that action.
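A minimal sketch of that idea, assuming a hypothetical list of actions and leaving out the actual LLM call (the client and model are up to you): build a prompt that constrains the reply to known action names, then validate the reply against that list before executing anything. The action names and both function names are illustrative only.

```csharp
#nullable enable
using System;
using System.Linq;

// Hypothetical actions the caller can choose from; replace with your own.
string[] actions = { "check_balance", "speak_to_agent", "end_call" };

// Builds a prompt that lists the allowed actions and includes the caller's final transcript.
string BuildActionPrompt(string finalTranscript) =>
    "You route phone calls. Reply with exactly one of these actions: " +
    string.Join(", ", actions) +
    $".\nCaller said: \"{finalTranscript}\"";

// Maps the model's reply to a known action, or null if the reply matches none of them.
string? ParseAction(string llmReply)
{
    var reply = llmReply.Trim().ToLowerInvariant();
    return actions.FirstOrDefault(a => a == reply);
}

Console.WriteLine(BuildActionPrompt("Can I talk to a real person?"));
Console.WriteLine(ParseAction(" Speak_To_Agent\n") ?? "unrecognized"); // speak_to_agent
```

Checking the reply against a fixed list means free-form or unexpected model output falls through to a safe default instead of triggering an unintended action.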

We can't wait to see what you build! Let us know!

Niels Swimberghe is a Belgian-American software engineer, a developer educator at AssemblyAI, and a Microsoft MVP. Contact Niels on Twitter @RealSwimburger and follow Niels’ blog on .NET, Azure, and web development at swimburger.net.
