Add Token Streaming and Interruption Handling to a Twilio Voice OpenAI Integration

ConversationRelay from Twilio allows you to build real-time, human-friendly voice applications for conversations with any AI Large Language Model, or LLM. It opens a WebSocket so you can integrate any AI API you choose with Twilio Voice, allowing for a fluid, event-based interaction and fast two-way connection.

Previously, we looked at a basic Node.js solution, showing how ConversationRelay can help you create a conversation with a friendly AI powered by OpenAI.

We also talked about some weaknesses of that integration. Because OpenAI generates the text before you hear it spoken aloud, it doesn't have context for the in-progress conversation. If you verbally interrupt the conversation, the AI has already stored all the text that was going to be spoken aloud. Its memory does not account for the point at which you interrupted it, which could cause some confusion for an end user.

We can improve how our AI tracks the voice conversation by adding some additional code. We’ll also add token streaming to the application, which reduces response latency by letting the speech start before the AI finishes generating its response. Let’s get started now, before we’re interrupted!

Prerequisites

To deploy this tutorial you will need:

- Node.js installed on your machine

- A Twilio phone number (sign up for Twilio here)

- Your IDE of choice (such as Visual Studio Code)

- The ngrok tunneling service (or other tunneling service)

- An OpenAI Account to generate an API Key

- A phone to place your outgoing call to Twilio

Add Token Streaming

But first, what exactly is Token Streaming?

Think of a "token" as the smallest unit of text the AI produces. Often this is a whole word, but it can also be part of a word, a punctuation mark, a number, or a special character. When you stream tokens, the server returns each one as soon as the AI model generates it, so text-to-speech can begin without waiting for the AI’s entire response.

Streaming allows us to eliminate a lot of latency from our voice AI app. We’re also going to use the returned tokens to track where a caller interrupted the AI, so we can better inform OpenAI how much a user “heard” from the response.

Let's add token streaming to your server.js file.

Once you have the previous tutorial prepared, open your existing server.js file and look for the function called aiResponse. That function currently looks like this:

You will be changing this function to support streaming. Rename the function to aiResponseStream. Revise the code of this function to this new block:

When we set stream: true, OpenAI sends the response incrementally, one chunk at a time. We send each token we receive from OpenAI to ConversationRelay with last: false, signifying that more tokens are coming. When the response is done, we send one final text message with last: true so ConversationRelay knows not to expect more tokens.
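As a rough illustration of that flow, here is a minimal sketch rather than the tutorial's actual aiResponseStream code. The names relayTokens and fakeStream are hypothetical. The sketch assumes chunks shaped like the ones the OpenAI Node SDK yields when you call chat.completions.create with stream: true, and it uses a stand-in stream so you can run it without an API key.

```javascript
// Sketch: forward streamed chunks to ConversationRelay as they arrive.
// `stream` is any async iterable of OpenAI-style chunks (assumed shape);
// `ws` only needs a send() method, like a WebSocket connection.
async function relayTokens(stream, ws) {
  let fullText = "";
  for await (const chunk of stream) {
    // Some chunks carry no text (for example, the final one), so default to "".
    const token = chunk.choices?.[0]?.delta?.content ?? "";
    if (!token) continue;
    fullText += token;
    // last: false tells ConversationRelay that more tokens are coming.
    ws.send(JSON.stringify({ type: "text", token, last: false }));
  }
  // An empty final token with last: true marks the end of the response.
  ws.send(JSON.stringify({ type: "text", token: "", last: true }));
  return fullText;
}

// Hypothetical stand-in for the OpenAI stream, used only for local testing.
async function* fakeStream(pieces) {
  for (const piece of pieces) {
    yield { choices: [{ delta: { content: piece } }] };
  }
  yield { choices: [{ delta: {} }] }; // a trailing chunk with no content
}
```

Returning the full text from the function is a design choice for this sketch: it gives the caller one string to store in the conversation history once the stream ends.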

To make sure to call your new function correctly, look for this block of code in server.js:

Replace all of that with this call:

That's it: now the token streaming should be working correctly.

Add conversation tracking and interruption handling

If you test this application now, the streaming will be working. This should lower the latency of your application: try asking your app to “give me a long, compounded sentence with many commas” and note how much quicker you hear a voice. You might also notice that the output in your developer console looks different. Instead of getting a long pause and a large chunk of text, you'll see the tokens appearing one at a time. There's an example in the screenshot below.

This is a good first step. But you still have not changed the way the AI reacts to interruptions. The next step is to track the conversation so that the AI has context for where the interruption occurred.

You will be making a few changes to the code to account for the entire conversation.

The first change is to the same function you altered in the previous step. This is the revised code. Note the lines that have been changed.

This code is accumulating your tokens into a conversation. Underneath the above code, erase the existing code for your application and instead add this. The changes from the previous version of the code have been highlighted here.
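To make the idea of accumulating a conversation concrete, here is a minimal sketch, not the tutorial's own handler. The names sessions, SYSTEM_PROMPT, and handleMessage are assumptions for illustration, and respond stands in for a streaming function such as aiResponseStream. Only the message fields type, callSid, and voicePrompt come from ConversationRelay's WebSocket messages.

```javascript
// Hypothetical in-memory store: one conversation array per call.
const sessions = new Map();
const SYSTEM_PROMPT = "You are a helpful voice assistant."; // placeholder prompt

// `respond(conversation, ws)` is assumed to stream the reply to the caller
// and resolve to the assistant's full text once the stream ends.
async function handleMessage(raw, ws, respond) {
  const message = JSON.parse(raw);
  switch (message.type) {
    case "setup":
      // Start a fresh conversation when the call connects.
      ws.callSid = message.callSid;
      sessions.set(ws.callSid, [{ role: "system", content: SYSTEM_PROMPT }]);
      break;
    case "prompt": {
      const conversation = sessions.get(ws.callSid);
      if (!conversation) break; // ignore prompts that arrive before setup
      // Record what the caller said, then record what the AI answered.
      conversation.push({ role: "user", content: message.voicePrompt });
      const reply = await respond(conversation, ws);
      conversation.push({ role: "assistant", content: reply });
      break;
    }
    default:
      // Other message types (such as "interrupt") are not handled in this sketch.
      break;
  }
}
```

Keeping the whole history in one array per call means every request to the model can include the full exchange so far.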

Finally, add the function that will handle the interruptions.

Use this code in your server.js, replacing the code at the end of the file:

This is the code that will handle more elegant interruptions for your streaming application. The handleInterrupt function is only called if a voice interaction is interrupted. When it is called, it finds the position at which the conversation was interrupted, and updates your local model of the conversation (in sessionData.conversation). When you pass that conversation back to OpenAI the next time you call aiResponseStream(conversation, ws), the AI has context for when the interruption occurred.
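The truncation step described above could look something like the following sketch. It is an illustration under assumptions, not the tutorial's exact handleInterrupt: the function name truncateAtInterrupt is hypothetical, and it assumes you pass in the utteranceUntilInterrupt text that ConversationRelay includes in its interrupt message.

```javascript
// Sketch: trim the conversation so it reflects only what the caller heard.
// Returns a new array and leaves the original conversation untouched.
function truncateAtInterrupt(conversation, utteranceUntilInterrupt) {
  // Nothing was reported as spoken, so there is nothing to trim.
  if (!utteranceUntilInterrupt) return conversation;

  // Search from the newest message back to find the interrupted reply.
  for (let i = conversation.length - 1; i >= 0; i--) {
    const message = conversation[i];
    if (message.role !== "assistant") continue;

    const position = message.content.indexOf(utteranceUntilInterrupt);
    if (position === -1) continue;

    // Keep the text up to and including the last words that were spoken.
    const end = position + utteranceUntilInterrupt.length;
    const updated = conversation.slice(0, i + 1);
    updated[i] = { ...message, content: message.content.slice(0, end) };
    return updated;
  }

  // The utterance did not match any assistant message; change nothing.
  return conversation;
}
```

Searching from the end is a design choice for this sketch: the interrupted reply is almost always the most recent assistant message, so the first match found that way is the one to trim.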

If you got lost making any of these changes, the final version of the server.js file is here.

Testing your AI application

You're now ready to test your application. Use the same steps that you used to test before. Start by going into your terminal and opening up a connection using ngrok:

ngrok will provide you with a unique URL that points to the server running locally on your machine. Copy that URL and add it to the .env file using this line:

Replace the beginning of this placeholder with the correct information from your ngrok URL. Note that you do not include the scheme (the “https://” or “http://”) in the environment variable.
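The scheme is left out because the same host is typically reused to build a WebSocket address, which starts with wss:// rather than https://. Here is a small sketch of that idea. The helper names normalizeHost and buildWsUrl and the /ws path are assumptions for illustration, so match them to whatever your server.js actually uses.

```javascript
// Sketch: turn a scheme-less host from the environment into a WebSocket URL.
// Strips an accidentally included scheme and any trailing slashes.
function normalizeHost(value) {
  return value
    .trim()
    .replace(/^[a-z]+:\/\//i, "") // drop "https://", "http://", and similar
    .replace(/\/+$/, ""); // drop trailing slashes
}

// The "/ws" default path is an assumption; use your server's WebSocket route.
function buildWsUrl(host, path = "/ws") {
  return `wss://${normalizeHost(host)}${path}`;
}
```

In this sketch, a value with or without the scheme produces the same WebSocket URL, which makes a copy-and-paste slip in the .env file harder to trip over.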

Go to the terminal, and, in the folder where your application is located, run your server using:

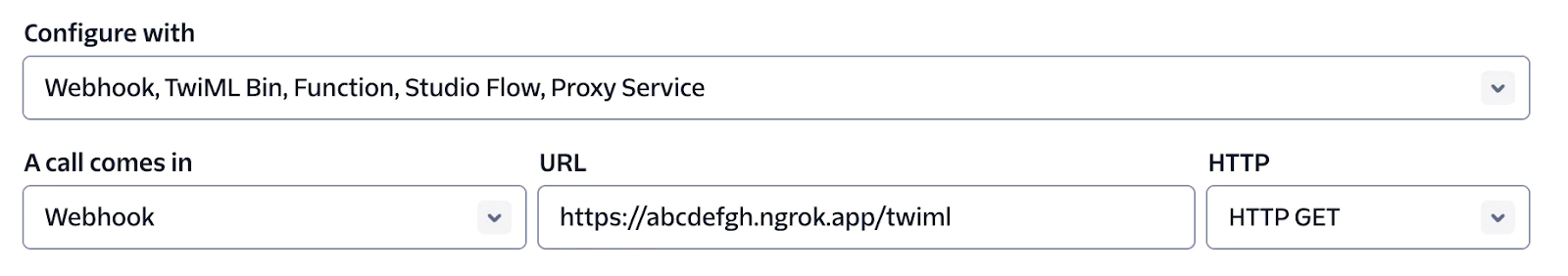

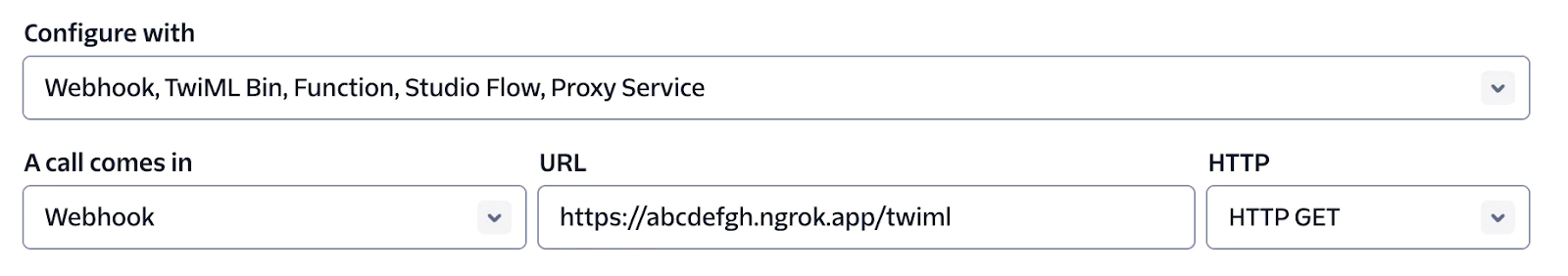

Go into your Twilio console, and look for the phone number that you registered.

Set the configuration under A call comes in with the Webhook option as shown below.

In the URL space, add your ngrok URL (this time including the “https://”), and follow that up with /twiml for the correct endpoint.

Finally, set the HTTP option on the right to GET.

Save your configurations in the console and dial your registered Twilio number to test. Your AI voice greeting will pick up the line, and now you can begin a conversation with your AI.

During testing, try interrupting the conversation with your AI. Ask a question that is likely to result in a longer answer. Try "recite the Gettysburg address," or "What happened in the year 1997?" Cut your AI off mid-sentence with an interruption. Then ask it where it left off in the conversation.

Towards more robust voice conversations with AI

Congratulations on building a more robust AI application! The next post will review Tool or Function Calling so your application can interface with additional APIs. This will provide more functionality to your users and make a truly robust AI voice application!

Some other posts you might be interested in:

- ConversationRelay Architecture for Voice AI Applications Built on AWS

- Translate Human-to-Human Conversations using ConversationRelay

- Integrate Twilio ConversationRelay with Twilio Flex for Contextual Escalations

- Build a Voice AI Application with Twilio ConversationRelay and BentoML

Let's build something amazing!

Amanda Lange is a .NET Engineer of Technical Content. She is here to teach how to create great things using C# and .NET programming. She can be reached at amlange [at] twilio.com.
