Summarize Text from Images Using AI, Python, and Twilio

Time to read: 7 minutes

Content, content, content! Are you overwhelmed by the amount of content you’re asked to read on a daily basis? Don’t you wish you could quickly summarize large chunks of text? It’d be a huge timesaver, especially for college students who read a lot of content!

In this blog post, I will teach you how to build an app in Python that performs text recognition on photos, summarizes that text, and then sends you a summary via SMS.

Here’s a typical use case: you see a large wall of text that you don’t want to read, so you pull out your phone and take a picture of that text, then you receive an SMS with a nice summary. Boom, time saved!

Prerequisites

Before getting started, make sure you have the following:

- Python 3.7 or higher installed on your machine.

- A Twilio account. If you haven’t yet, sign up for a free Twilio trial.

- A Google Cloud account. You can get started for free.

- An OpenAI account. You can sign up for a free account.

- ngrok installed on your machine. ngrok is a useful tool for connecting your local server to a public URL. You can sign up for a free account and learn how to install ngrok.

- A phone with a US or Canada phone number.

Access the Code

If blog posts aren’t your thing and you’d prefer to just look at the code, it’s available in this GitHub repository.

Table of Contents

For context, this blog post is structured as follows:

- Setup Google Cloud Vision: Set up our Google Cloud account and enable the Vision API

- Setup OpenAI: Set up our OpenAI account

- Setup Local Environment: Set up our local development environment

- Cloud Vision API: Using ML, detect words from images using the Google Cloud Vision API

- OpenAI API: Using AI, generate a summary of text from the OpenAI API

- Twilio SMS API: Send a text message (containing the summary) when the application is triggered

Setup Google Cloud Vision

To use the Google Cloud Vision API, we need to set it up by following the quickstart guide. This process does take some time, but don’t get discouraged. Just follow the quickstart guide step-by-step, or continue along here (if you don’t want to tab out).

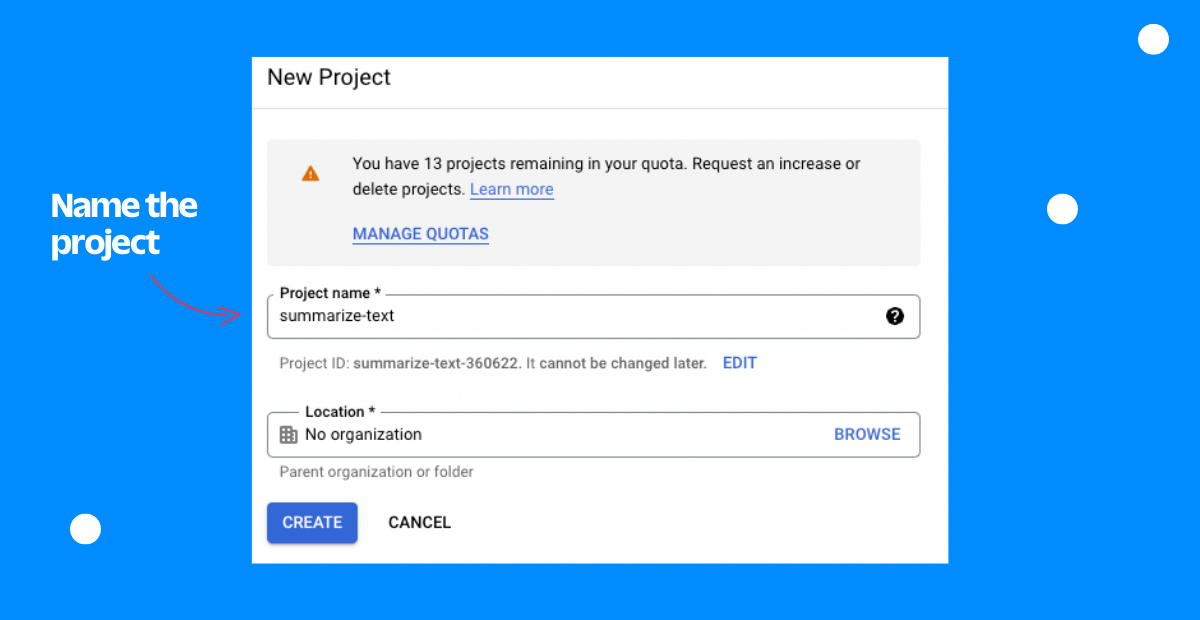

Assuming you already have a Google Cloud account, you’ll need to create a new project within Google Cloud. Give it a Project name of summarize-text and click Create.

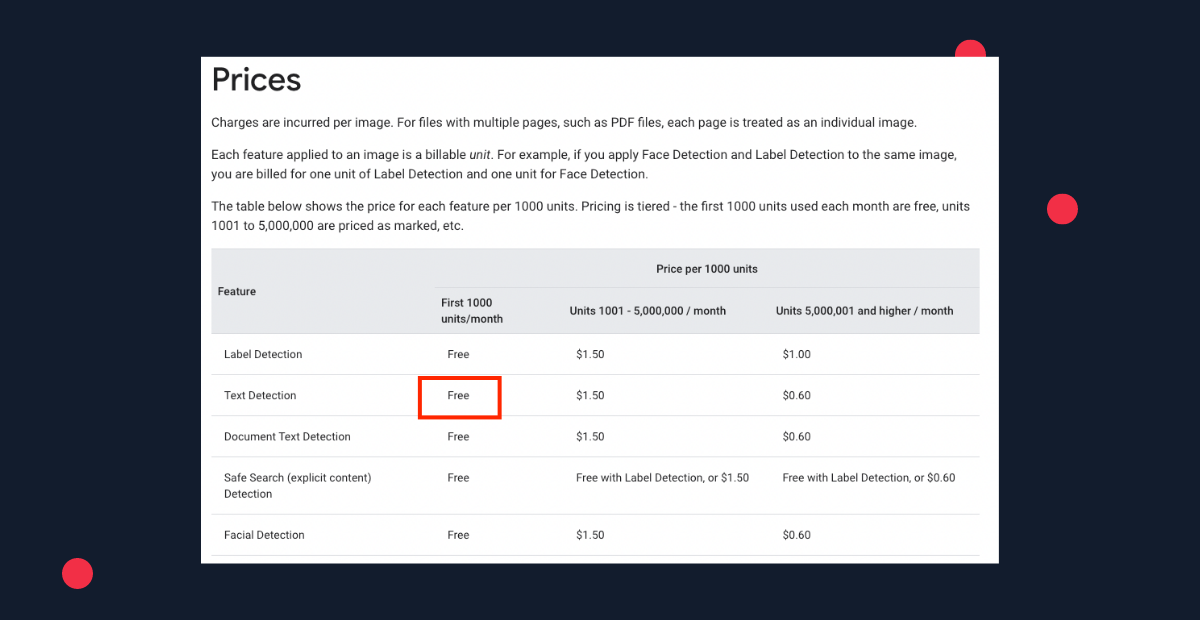

Next, enable billing for the project we just created. But don’t worry, you won’t be charged unless you exceed the Cloud Vision monthly limits. Learn how to check if billing is enabled on a project.

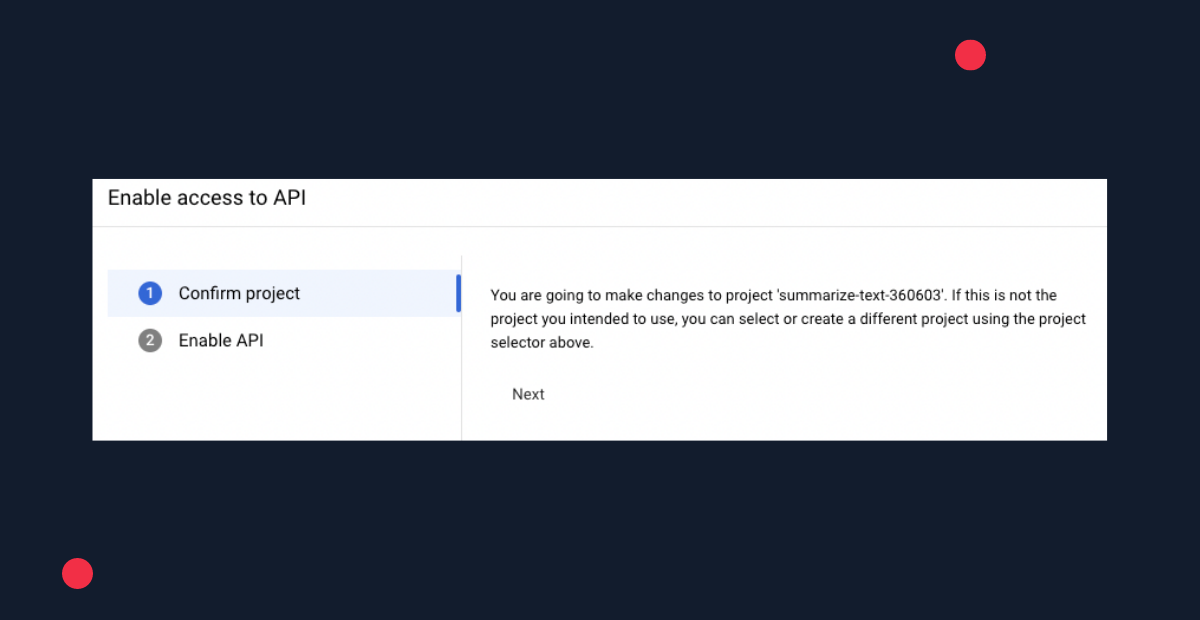

Enable the Vision API for the project we created earlier, called summarize-text.

Next, set up authentication with a service account. Go to Create a service account and select our project (summarize-text). In the Service account name field, enter summarize-text. In the Service account description field, enter Service account for summarize-text. Click Continue, then grant your service account the Project > Owner role.

After creating the service account, create a service account key by clicking the email address of the summarize-text service account. Click Keys, then Create new key. A JSON key file will be downloaded to your computer. Store this file in a location of your choice, then set the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of this JSON file.

For example, on Linux or macOS, add a line like export GOOGLE_APPLICATION_CREDENTIALS="/path/to/key.json" to your .zshrc.

For example, on Windows with PowerShell, run $env:GOOGLE_APPLICATION_CREDENTIALS="C:\path\to\key.json". Both paths are placeholders for wherever you stored the key file.
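From Python, a quick way to confirm the variable is picked up is to check that it is set and points at a real file. This is a minimal sketch, not part of the original project; the helper name credentials_problem is hypothetical, while GOOGLE_APPLICATION_CREDENTIALS is the variable Google’s client libraries look for.

```python
import os
from pathlib import Path


def credentials_problem(env=os.environ):
    """Describe what is wrong with the credentials setup, or return None if it looks fine."""
    key_path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not key_path:
        return "GOOGLE_APPLICATION_CREDENTIALS is not set"
    if not Path(key_path).is_file():
        return f"no key file found at {key_path}"
    return None
```

Calling credentials_problem() in a fresh terminal shows whether the variable survived a shell restart. This only checks that the file exists, not that the key inside it is valid.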

Next, install the Google Cloud CLI. Since this is different for each operating system, follow the steps outlined in Google’s gcloud CLI installation guide.

Finally, install the Python client library for the Vision API (the google-cloud-vision package) with pip.

Setup OpenAI

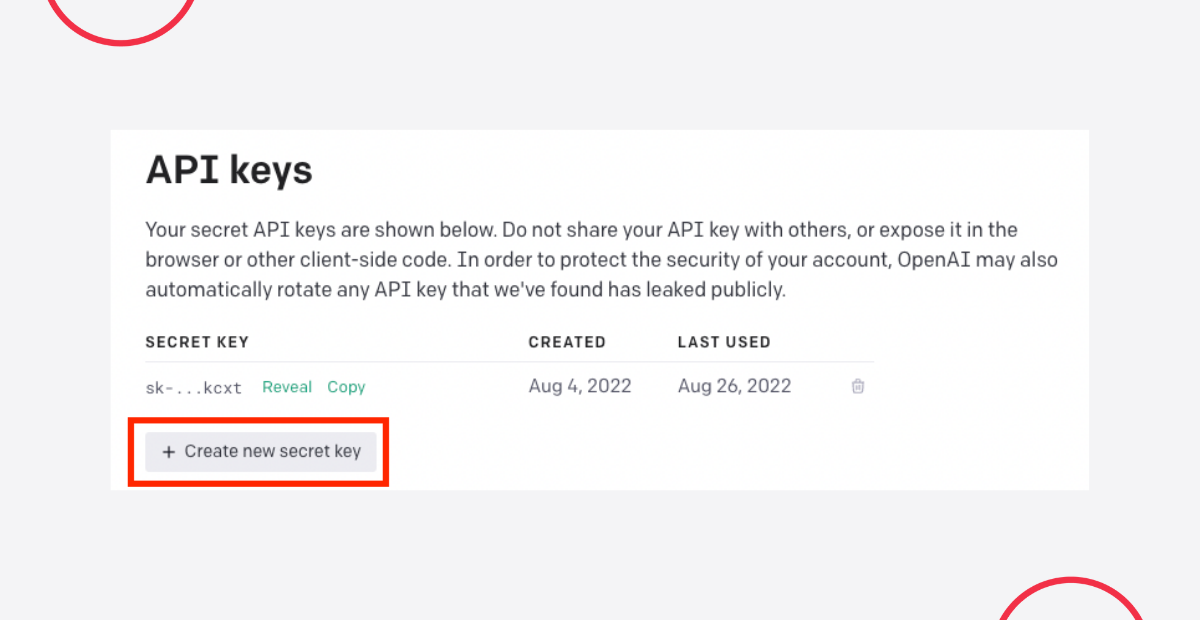

Assuming you already registered for an account with OpenAI, you’ll need to create an API key in your user account settings, which will allow you to authenticate your application with OpenAI. Copy this key and don’t share it with anyone!

We will securely store this API key in the following section.

Setup Local Environment

Create an empty project directory:

Then change into that directory as that’s where our code will be.

Create a virtual environment:

Activate our virtual environment:

Install dependencies to our virtual environment:
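Once the install finishes, a quick sanity check from inside the virtual environment can confirm the packages are importable. This is a sketch under assumptions: the helper missing_modules is hypothetical, and the module list is a guess at what this project imports (dotenv in particular is an assumption), so adjust it to what you actually installed.

```python
import importlib.util


def missing_modules(names):
    """Return the module names from `names` that cannot be found."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            # Raised when a parent package (e.g. "google") is absent.
            found = False
        if not found:
            missing.append(name)
    return missing


# Assumed import names for this project; adjust to what you installed.
REQUIRED = ["google.cloud.vision", "openai", "twilio", "flask", "dotenv"]
```

Running missing_modules(REQUIRED) should return an empty list if everything is in place. If it doesn’t, check that the virtual environment is activated.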

Let’s create a file called .env in the project’s root directory to store our API keys in environment variables.

Within that file, we’ll create an environment variable called OPENAI_API_KEY.

(Replace PASTE_YOUR_API_KEY_HERE with the API key that you copied earlier.)

For example:

Since we’ll also be working with our Twilio account, we’ll need to modify this file even more. Log into your Twilio console, then scroll down to find your Account SID and Auth Token. Add two additional lines to the .env file, but change the values to equal your unique Account SID and Auth Token.

For example:

If you’re pushing this project to a Git repository, make sure to add the .env file to your .gitignore so that these credentials are never committed.
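To make the role of the .env file concrete, here is a minimal standard-library sketch of how KEY=value lines can end up in the process environment. In practice a package such as python-dotenv (its load_dotenv function) usually does this job. The load_env_file helper is hypothetical, and TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN are assumed names for the two Twilio lines; only OPENAI_API_KEY is named in this post.

```python
import os


def load_env_file(path=".env", env=os.environ):
    """Copy KEY=value lines from `path` into `env`, skipping blanks and comments."""
    with open(path, encoding="utf-8") as env_file:
        for line in env_file:
            line = line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            # Existing variables win, so a real environment overrides the file.
            env.setdefault(key.strip(), value.strip().strip("'\""))
    return env


# After loading, the rest of the code can read the keys like this:
#   os.getenv("OPENAI_API_KEY")
#   os.getenv("TWILIO_ACCOUNT_SID")   # assumed variable name
#   os.getenv("TWILIO_AUTH_TOKEN")    # assumed variable name
```

Keeping the keys in the environment rather than in the source files means the code can be shared without sharing the credentials.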

We’ll be working with local images, so in your project’s root directory, create a new directory called resources. For now, it will be an empty directory, but later this is where images will be stored.

Cloud Vision API

Since we set it up already, you may be wondering what the Vision API is. It’s a Google API that offers powerful pre-trained machine learning models through a REST API. With the API, you can do things like detect faces, identify places, recognize celebrities, and much more. For this app, we will be using Optical Character Recognition (OCR) to recognize text in images.

Create a file called detect.py in the project’s root directory and copy and paste the following code into the file:

The detect_text function reads a local file from your computer, in this case an image from the resources/ directory called image.jpg. It reads the content of that image and uses the text_detection method from the Vision API to detect text. Finally, it returns that text.
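A minimal sketch of what detect_text could look like, based on the description above and the google-cloud-vision client library. The first_annotation_text helper and the error handling are additions for illustration, not necessarily how the original detect.py is written.

```python
def first_annotation_text(annotations):
    """Return the full detected text, or an empty string if nothing was found."""
    # In an OCR response, the first annotation holds the entire text block;
    # the remaining entries are the individual words.
    return annotations[0].description if annotations else ""


def detect_text(path="resources/image.jpg"):
    """Run OCR on a local image with the Cloud Vision API and return its text."""
    # Imported here so the helper above can be used without the library installed.
    from google.cloud import vision

    client = vision.ImageAnnotatorClient()
    with open(path, "rb") as image_file:
        content = image_file.read()

    response = client.text_detection(image=vision.Image(content=content))
    if response.error.message:
        raise RuntimeError(response.error.message)
    return first_annotation_text(response.text_annotations)
```

The client picks up the service account key from the environment variable set earlier, so no credentials appear in the code.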

If you were to run the detect_text function as is, it wouldn’t work since we are reading an image called image.jpg from the resources/ directory that doesn’t currently exist. But we’ll come back to this later.

Create a new file called utilities.py in the project’s root directory and paste the following code into the file:

The save_image function takes an image URL and saves the image as a file called image.jpg within the resources/ directory.
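A minimal sketch of save_image along those lines. It uses urllib from the standard library as an assumption to stay self-contained; the original utilities.py may use a different HTTP library. The path parameter and the return value are also additions for illustration.

```python
import shutil
import urllib.request
from pathlib import Path


def save_image(image_url, path="resources/image.jpg"):
    """Download the image at `image_url` and write it to `path`."""
    destination = Path(path)
    # Create resources/ if it does not exist yet.
    destination.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(image_url) as response, destination.open("wb") as out:
        shutil.copyfileobj(response, out)
    return destination
```

Each call overwrites the previous image.jpg, which matches the single-image flow described here.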

OpenAI API

Now that we’ve written the code for interacting with the Cloud Vision API, which lets us perform text recognition on photos, we can use the OpenAI API to summarize that text. OpenAI is an AI company (surprise, surprise) that applies models to natural language for various tasks. You give the API a prompt, which is natural language that you input, and the AI will generate a response. For example, if you input the prompt “write a tagline for an ice cream shop”, you may see a response like “we serve up smiles with every scoop!”

In the project’s root directory create a file called summarize.py and paste the following code into the file:

The summarize_prompt function uses the OpenAI API’s create function to respond to the prompt built by generate_prompt. The model we specify, text-davinci-002, was OpenAI’s most capable GPT-3 model at the time of writing. The max_tokens parameter sets an upper bound on how many tokens the API returns, which limits the length of the response. generate_prompt creates a prompt that asks for a one-sentence summary of the text. get_text_from_image calls the functions we created in the previous section.
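A minimal sketch of generate_prompt and summarize_prompt under stated assumptions. The prompt wording, the text argument, and the max_tokens default of 100 are illustrative choices, not the original’s. The call mirrors the legacy (pre-1.0) openai Python interface that matches this post’s description; newer versions of the library and newer models use a different call.

```python
import os


def generate_prompt(text):
    """Build a prompt asking for a one-sentence summary of `text`."""
    return f"Summarize this text in one sentence:\n\n{text.strip()}"


def summarize_prompt(text, max_tokens=100):
    """Ask the model for a summary of `text` (legacy openai<1.0 interface)."""
    # Imported here so generate_prompt can be used without the library installed.
    import openai

    openai.api_key = os.getenv("OPENAI_API_KEY")
    response = openai.Completion.create(
        model="text-davinci-002",
        prompt=generate_prompt(text),
        max_tokens=max_tokens,  # upper bound on the length of the reply
    )
    return response.choices[0].text.strip()
```

Keeping the prompt in its own function makes it easy to experiment with the wording, for example asking for three bullet points instead of one sentence.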

Twilio SMS API

Now, we’ll write the code that allows us to text our Twilio phone number and get back a response. This is called handling an inbound SMS: a message sent to a Twilio phone number triggers your application. In this case, we will send a text to a Twilio phone number (our trigger) and have the application reply with a summary.

Create a new file (in the same directory) called app.py. Using Flask, a Python web framework, we will create an app that runs on a local server. Paste the following code into app.py:
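A minimal sketch of what app.py could contain, assuming Twilio’s standard webhook fields (NumMedia, MediaUrl0) and the Twilio helper library’s MessagingResponse. The /sms route, the reply_for helper, the create_app factory, and the summarize callable (anything that takes an image URL and returns a summary string) are hypothetical names for illustration.

```python
def reply_for(form, summarize):
    """Pick the reply text for an incoming message described by Twilio's form fields."""
    if int(form.get("NumMedia", 0)) == 0:
        return "Send a photo of some text and I'll reply with a summary."
    # MediaUrl0 is the URL of the first attachment in the incoming message.
    return summarize(form["MediaUrl0"])


def create_app(summarize):
    """Build the Flask app; `summarize` maps an image URL to a summary string."""
    # Imported here so reply_for can be exercised without Flask or Twilio installed.
    from flask import Flask, request
    from twilio.twiml.messaging_response import MessagingResponse

    app = Flask(__name__)

    @app.route("/sms", methods=["POST"])
    def incoming_sms():
        twiml = MessagingResponse()
        twiml.message(reply_for(request.form, summarize))
        return str(twiml)

    return app


# To serve locally on the port used in this post:
#   create_app(my_summarize).run(port=8080)
```

Here my_summarize would chain the earlier pieces: save the image from the URL, detect its text, and summarize it. With a route like /sms, the webhook URL configured in Twilio needs that path appended to the public URL.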

Run the application on your local server with this command in your console (from the root directory):

Your application should be running on http://localhost:8080. Output will look similar to this:

Right now, our application is only running on a server within your computer. But Twilio needs a public-facing URL (not http://localhost) to reach our webhook. Using a tool called ngrok, we will “put localhost on the Internet” so we can configure our webhook.

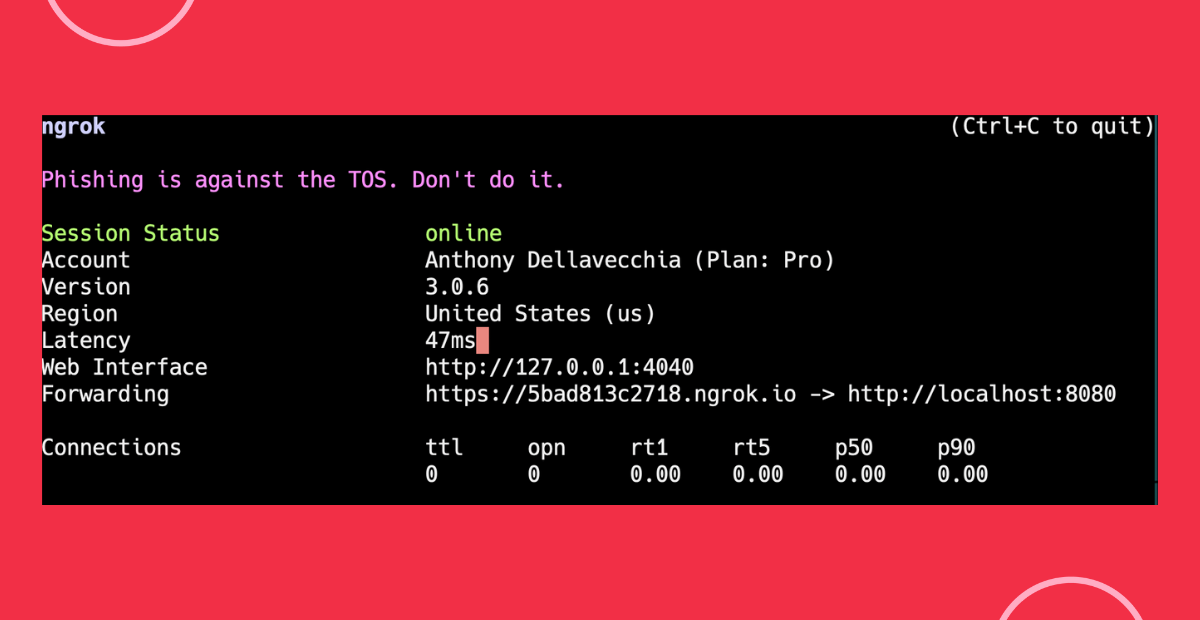

In another console tab run the command:

This will create a “tunnel” from the public Internet to port 8080 on our local machine, where the Flask app is listening for requests. You should see output similar to this:

Take note of the line that says “Forwarding”. In the image above, it shows:

This means that our local application is running publicly on

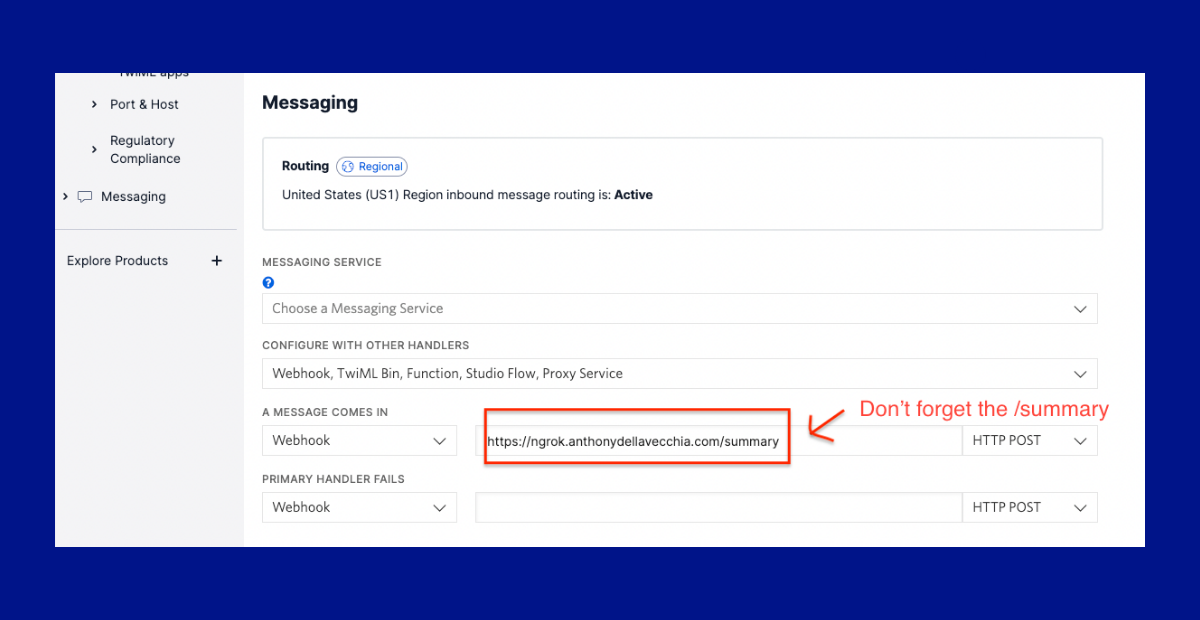

Within the Twilio Console, enter the ngrok URL as a Webhook under “A Message Comes In”.

Be aware that unless you have a paid ngrok account, each time you run the ngrok command a new URL will be generated, so be sure to update the webhook URL within the Twilio Console.

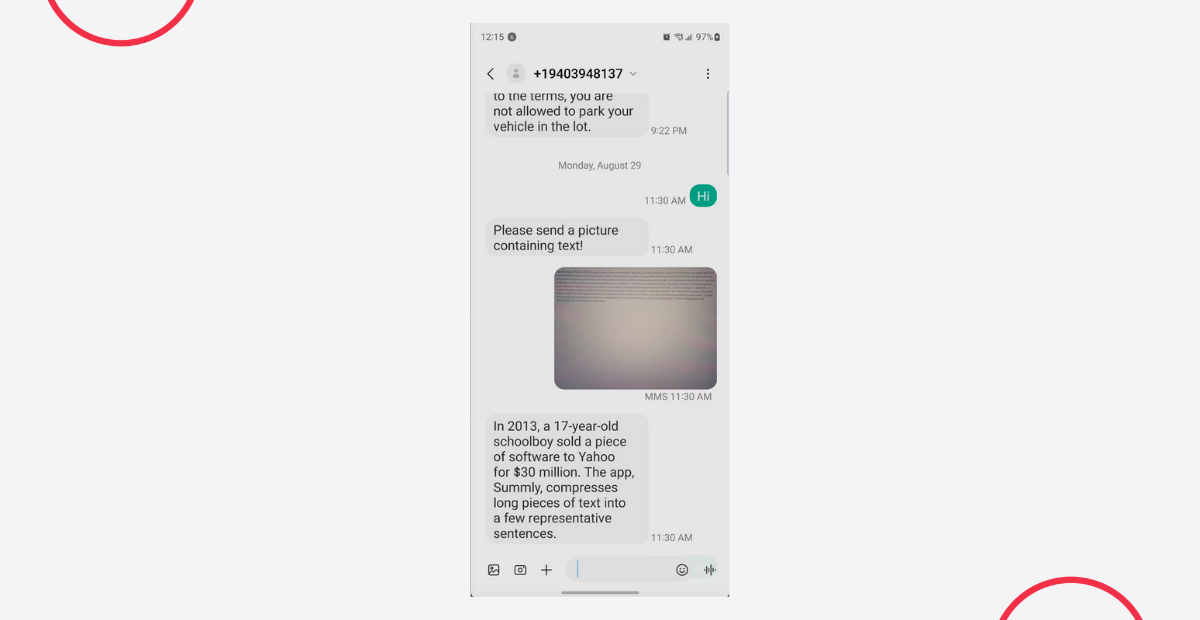

Now that our application and ngrok are both running, we can send a text message to our Twilio phone number and it will respond with a summary of the text!

Show Me What You Build

Now if there’s a big wall of text that you don’t want to read, pull out your phone, take a picture, and then text it to your Twilio number. You’ll get a response back with a short summary!

Thanks so much for reading! If you found this tutorial helpful, have any questions, or want to show me what you’ve built, let me know online. And if you want to learn more about me, check out my intro blog post.
