Is it safe to say at Thanksgiving? with Transfer Learning and SMS

This Thanksgiving, you might find yourself in interesting discussions with family members who have differing viewpoints. Read on to see how to determine whether or not something would be divisive to say at Thanksgiving dinner using Zero-Shot Text Classification and Twilio Programmable SMS in Python.

Prerequisites

- A Twilio account - sign up for a free one here and receive an extra $10 if you upgrade through this link

- A Twilio phone number with SMS capabilities - configure one here

- Set up your Python and Flask developer environment. Make sure you have Python 3 downloaded as well as ngrok.

Gentle Intro to Zero-Shot Classification and Hugging Face🤗

What is Zero-Shot Learning/Classification?

Humans can recognize objects they have never seen before given some background information. For example, if I said that a ping pong paddle is like a tennis racquet but smaller, you could probably recognize the paddle when you saw it, especially if you’ve played tennis before. This example uses knowledge transfer: humans can transfer and use their knowledge from what they learned in the past.

image: upload.wikimedia.org/wikipedia/commons/thumb/a/ad/Knowledge_transfer.svg

This brings us to transfer learning, a machine learning (ML) paradigm where a model is trained on one problem and then applied to different problems (with some adjustments).

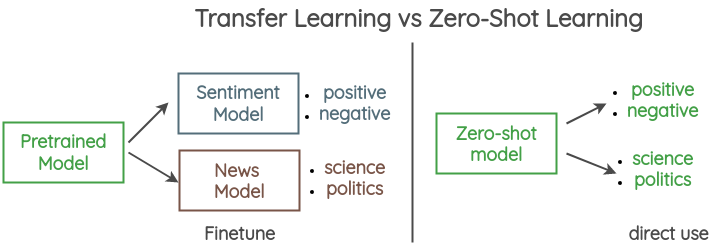

A subset or variation of transfer learning is zero-shot learning (ZSL). Both ZSL and transfer learning involve a machine learning to categorize objects it has not seen during training. They differ in what the model is given for the new task. In transfer learning, information about the new task is provided as a set of labelled instances, and the training might include fine-tuning a new model for each dataset. With ZSL, the model lacks any labeled training data to help with the classification, so you can perform tasks like sentiment and news classification directly, without any task-specific training. ZSL also differs from one-shot and few-shot learning, where there are one or a few training samples for some categories.

image: kaggle.com/foolofatook/zero-shot-classification-with-huggingface-pipeline

What is Hugging Face🤗?

Hugging Face provides a wide variety of APIs and pre-trained ML models to use for Natural Language Processing (NLP) tasks like classification, information extraction, question answering, summarization, translation, text generation, and more in 100+ languages (WOW!).

This tutorial will use the pipeline API of their open-source Transformers NLP library to classify texts and determine whether or not they are safe to say at Thanksgiving dinner. Transformers seamlessly integrates with two very popular deep learning libraries, PyTorch and TensorFlow.

Set Up Your Python Project and a Twilio Number

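Use the command line to make a folder called huggingface. Switch into that directory and create a Python virtual environment. Activate the virtual environment and then install your dependencies: Flask, the Twilio Python helper library, and Hugging Face Transformers, along with a deep learning backend such as PyTorch or TensorFlow.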

Your Flask app will need to be visible from the web so Twilio can send requests to it. Ngrok lets you do this. With ngrok installed, open a new terminal tab and from the project directory, run the following command: `ngrok http 5000`.

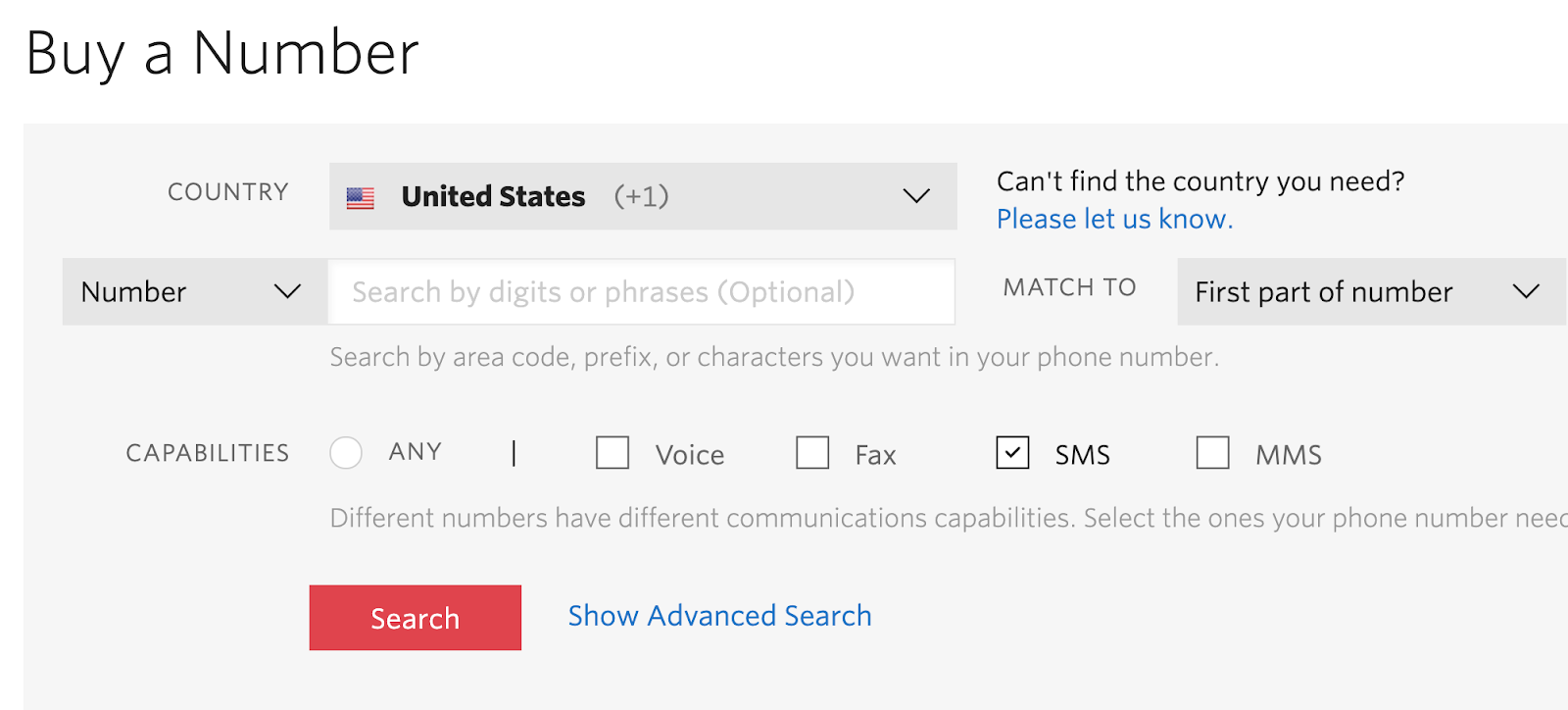

You should see the screen above. Grab the https:// ngrok forwarding URL to configure your Twilio number in the Twilio Console. If you don't have a Twilio number yet, purchase one in the Phone Numbers section of the Console by searching for a phone number in your country and region, making sure the SMS checkbox is ticked.

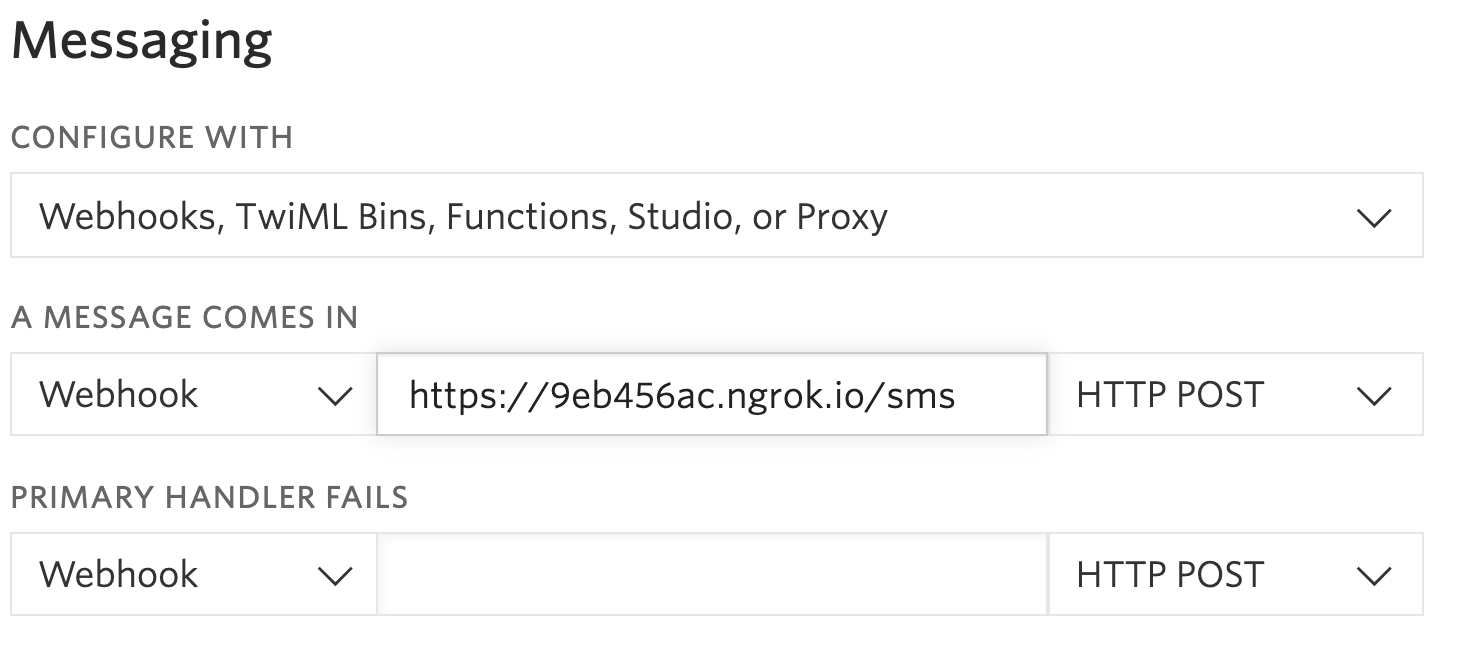

After purchasing your number, look in its Configuration settings, under Messaging. In the A Message Comes In section, set the Webhook to your ngrok https URL with /sms appended. Hit Save.

Classify Twilio Programmable SMS Texts with Transfer Learning in Python

Create a Python file in your working directory - mine is called thanksgiving.py. At the top of your Python file, import the required libraries:

Hugging Face Transformers provides the pipeline API to group a pretrained model together with the preprocessing used during that model's training. In this case, the model will be used on input text. Add this line beneath your library imports in thanksgiving.py to access the classifier from pipeline.
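Here is a minimal sketch of that step, assuming the `zero-shot-classification` task name and the pipeline's default model. The `CANDIDATE_LABELS` list and the `get_classifier` and `classify` helpers are hypothetical names for illustration (only "politics" is a label this post actually names). The import sits inside the function so that the model, which is downloaded on first use, loads only when it is needed:

```python
from functools import lru_cache

# Hypothetical label set for illustration; this post only names "politics".
CANDIDATE_LABELS = ["politics", "food", "family", "sports"]


@lru_cache(maxsize=1)
def get_classifier():
    """Create the zero-shot pipeline once and cache it for later calls."""
    # Imported lazily because loading Transformers (and its model) is slow.
    from transformers import pipeline

    # With no model argument, the pipeline falls back to its default model.
    return pipeline("zero-shot-classification")


def classify(text, labels=CANDIDATE_LABELS):
    """Return a dict with "sequence", "labels", and "scores" keys."""
    return get_classifier()(text, candidate_labels=labels)
```

Calling `classify("Pass the gravy, please")` should return the labels sorted from highest to lowest score, alongside their scores.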

Zero-shot classification lets you define your own labels and create a classifier that assigns a probability to each label. With Hugging Face you could also perform multi-class classification, where the scores are independent and each falls between zero and one.

This tutorial uses the default option where the pipeline assumes that only one of the candidate labels (basically the classes that the classifier will predict) is true, returning a list of scores for each label that add up to one.
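To make the difference between the two scoring modes concrete, here is a small pure-Python sketch with made-up logits. It mirrors, in simplified form, how the zero-shot pipeline is understood to score labels: a softmax across every label's entailment logit by default, and a per-label softmax over contradiction versus entailment in the independent mode. The function names and numbers are assumptions for illustration, not library code:

```python
import math


def softmax(values):
    """Turn a list of logits into probabilities that sum to one."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def single_label_scores(entailment_logits):
    """Default mode: labels compete, so the scores add up to one."""
    return softmax(entailment_logits)


def independent_scores(logit_pairs):
    """Independent mode: each (contradiction, entailment) pair is scored alone."""
    return [softmax([contra, entail])[1] for contra, entail in logit_pairs]


# Made-up logits for three labels: politics, food, family.
competing = single_label_scores([2.0, 0.5, -1.0])
independent = independent_scores([(-1.0, 2.0), (0.0, 0.5), (1.5, -1.0)])

print(round(sum(competing), 6))  # the competing scores sum to one
print([round(s, 3) for s in independent])  # each lies between zero and one
```

In the default mode a high score for one label pushes the others down, while in the independent mode several labels can score high at once.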

Next, make your Flask application able to handle inbound text messages by defining an /sms endpoint that listens to POST requests and then creating a variable called inb_msg for that message.
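A sketch of what such an endpoint could look like, assuming Flask and the Twilio Python helper library are installed. Twilio posts the message text in a form field named `Body`. The `extract_body` and `create_app` helpers and the `make_reply` parameter are hypothetical names for illustration, not the original code from this post:

```python
def extract_body(form):
    """Pull the inbound SMS text out of the form fields Twilio posts."""
    return form.get("Body", "").strip()


def create_app(make_reply):
    """Build a Flask app whose /sms route replies to inbound messages.

    make_reply is a hypothetical callable: message text in, reply text out.
    """
    # Imported here so the helper above works without Flask or twilio installed.
    from flask import Flask, request
    from twilio.twiml.messaging_response import MessagingResponse

    app = Flask(__name__)

    @app.route("/sms", methods=["POST"])
    def sms():
        inb_msg = extract_body(request.form)
        resp = MessagingResponse()
        resp.message(make_reply(inb_msg))
        return str(resp)  # TwiML (XML) telling Twilio what to text back

    return app
```

For a quick local check, `create_app(lambda text: "Got: " + text).run(port=5000)` would serve the route on the port that ngrok forwards to.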

Now, loop and classify each message, setting multi-class classification to true. Extract the scores associated with the labels and create a dictionary where the key is the label and the value is the corresponding score (the confidence that the input belongs in that category). Then get the key with the maximum score. If the category with the maximum score is "politics", return a message saying you should not say that input message aloud to your family. Otherwise, the message is safe to say at Thanksgiving dinner.
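The decision logic described above can be sketched on its own, without loading a model. This assumes the classifier returns a dictionary with `sequence`, `labels`, and `scores` keys, as the zero-shot pipeline does. The `top_label` and `reply_for` names, the reply wording, and the sample scores are made up for illustration:

```python
def top_label(result):
    """Map each label to its score and return the highest-scoring label."""
    scores_by_label = dict(zip(result["labels"], result["scores"]))
    return max(scores_by_label, key=scores_by_label.get)


def reply_for(result, unsafe_label="politics"):
    """Build the SMS reply based on the winning label."""
    text = result["sequence"]
    if top_label(result) == unsafe_label:
        return f'Maybe keep "{text}" to yourself at Thanksgiving dinner.'
    return f'"{text}" sounds safe to say at Thanksgiving dinner.'


# Made-up classifier output, shaped like the pipeline's return value.
sample = {
    "sequence": "who did you vote for",
    "labels": ["politics", "family", "food"],
    "scores": [0.9, 0.06, 0.04],
}
print(reply_for(sample))
```

Keeping this logic in plain functions makes it easy to change the unsafe label or add more of them later.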

The complete Python file should look like this:

Now run the following commands on your command line to start the Flask app if you're on a Mac. If you're on a Windows machine, replace export with set.

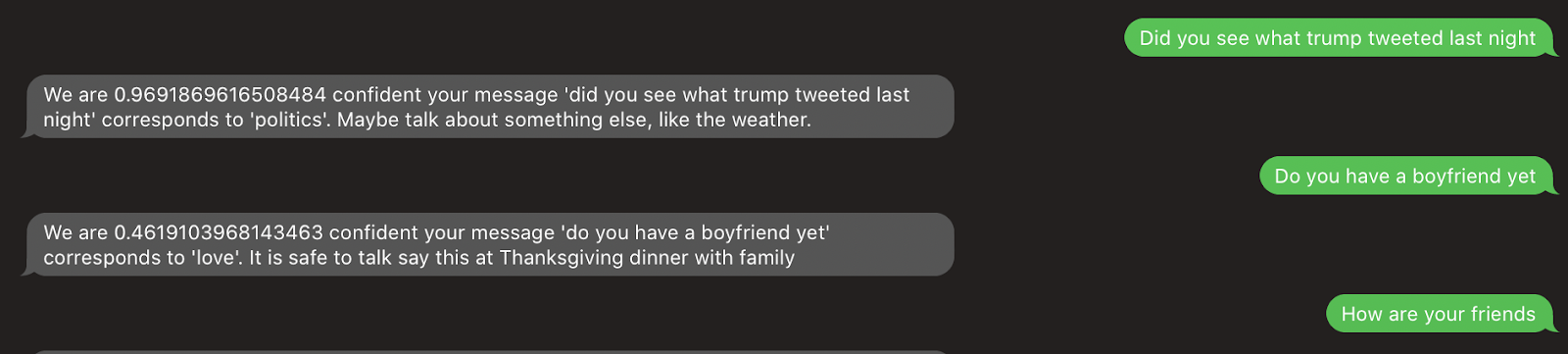

Finally, it’s time to test your app! Text your Twilio number a message you might want to say at Thanksgiving with your family. You should get a response like this:

You can view the complete code on GitHub and an example run through of the code in this Colab notebook.

What will you Classify Next?

This is just the beginning for performing zero-shot classification and developing with Hugging Face. You can take this app to the next level by using other general-purpose architectures (like BERT, GPT-2, RoBERTa, XLM, DistilBert, etc.), using PyTorch (or another tool like TensorFlow) along with Hugging Face to train and fine-tune your model, generating text to respond with using Hugging Face, and more!

Let me know in the comments or online what you're building with machine learning or how you're safely celebrating Thanksgiving.

- Twitter: @lizziepika

- GitHub: elizabethsiegle

- Email: lsiegle@twilio.com
