How to Build a Language Learning Application with Programmable Voice and Speech Recognition


Have you ever wanted to build an interactive language learning assistant that provides real-time feedback on pronunciation and fluency? If so, then you’re in luck! In this tutorial, we’ll walk you through how to create a voice-based language learning assistant using Twilio Programmable Voice, Flask, and Google Cloud Speech-to-Text, among other tools.

By the end of this guide you’ll have a working application that interacts with users via voice, transcribes their speech, and provides feedback on their language skills. Pretty cool, right? Well, let’s dive right in!

Prerequisites

In order to get your assistant up and running, you will need the following:

- A basic understanding of Python.

- A Twilio account (free or paid). Create one for free here if you haven’t already.

- A voice-enabled phone number from Twilio. Get one here.

- Python 3.12 installed on your machine.

- A Google Cloud account and project, with Speech-to-Text and Storage APIs enabled.

- Flask and Twilio Python libraries.

- ngrok

Building the Language Assistant

Once you have all of the above requirements in place, you’re ready to start building your very own language assistant.

Set up the Python environment

First, you’ll need to set up a Python virtual environment. A virtual environment is created on top of an existing Python installation. It isolates each project’s dependencies from the base environment, so every project becomes its own self-contained application. If you’re familiar with Node.js, think of it as similar to the node_modules folder in a Node.js project.

Now that you have a basic understanding of what a Python virtual environment is, go ahead and run the following commands in your terminal to set it up:

If you are following along on a Mac (or using the Git Bash terminal on Windows), enter the following commands instead (in Git Bash, use the python command):

A couple of things to note:

- It is considered good practice to name your virtual environment folder with a leading dot so it is hidden away from the rest of the files in your project directory.

- If you are using Git, be sure to include the name of this folder in a .gitignore file so you do not accidentally commit and upload it to a cloud platform like GitHub.

Next, create your .env file. This is where you’ll store sensitive information like API keys and Auth Tokens, rather than in your code. The .env file should also be included in the .gitignore file. You can create one in your file explorer, or from the command line like so:

In your .env file, add your Twilio Account SID and Auth Token. You can find both on the homepage of the Twilio Console:

Now, install the following packages:

Handle incoming calls with Twilio

To have Twilio respond when someone dials your voice-enabled number, create a file named app.py. This will be the entry point of your, well, app, and will house the main logic required to set up your Flask server. Go ahead and input the following code:

Let’s take a closer look at what each block of code does.

First, we’re importing the packages we installed earlier with the pip command. The dotenv package reads the key-value pairs from the .env file and loads them as environment variables with the load_dotenv() line.

Flask—a powerful and lightweight web framework—provides a quick and easy way to set up a Python web server.

VoiceResponse enables us to set up the voice commands that will guide the user during the call, and Gather allows us to collect numeric and voice input.

In the last line of imports for this file, we’re importing two helper functions: transcribe_audio and provide_feedback from a feedback.py file which we will be creating and setting up later.

The line app = Flask(__name__) creates an instance of the imported Flask class which represents the web application. The __name__ argument helps Flask determine the root path for the application, and this is useful for locating resources.

Next, we use a simple language map to store the initial prompt in different languages. This allows us to speak to the user in the language that they intend to learn. The appropriate message is retrieved based on the user’s selection.

Define the routes

We then define two routes that interact with the Twilio API to handle incoming voice calls.

The first is the /gather route, which handles both HTTP GET and POST requests. In this route, we create a VoiceResponse object and use it to build the TwiML (Twilio Markup Language) response for the voice call.

Gather is a TwiML verb used to collect input from the caller. This configuration collects a single digit and sends it to the /voice URL. The say method specifies the instructions to be spoken to the user in each of the available languages.

Appending the Gather object to the VoiceResponse object includes it in the TwiML response sent to Twilio. If the user does not provide any input, redirect sends the user back to the /gather route, creating a loop until the user makes a selection. Finally, str(response) converts the VoiceResponse object to a string containing the TwiML XML, which is returned as the HTTP response to Twilio.

The /voice route handles the user’s language selection from the previous route. The presence of the Digits parameter in the incoming request indicates that the user entered a digit. The choice variable stores the value of this digit.

If the user entered a valid digit (i.e. it is one of the options found in language_mapping), we retrieve the corresponding language code and prompt text. We then use the say method to ask the user what they would like to talk about, while specifying the language to go with the voice. This approach ensures a more cohesive and user-friendly experience.

After this, we use the record verb to record the caller’s voice input. We limit the recording to 30 seconds, which keeps it well within the one-minute audio limit that Google Speech-to-Text applies to synchronous recognition requests. We then pass the recording to the /handle-recording endpoint along with the language and choice parameters.

At the end of the block, we’re handling an edge case scenario where we redirect the user back to /gather if they entered an invalid choice.

At this point, we’re able to determine what language the user would like to interact in, and prompt the user to record a short message in that language. But we’re not really doing anything with that message right now. So go ahead and add the following code to your app.py file:

A quick rundown of what’s going on inside these two routes:

/handle-recording, as the name implies, handles the recorded message from the user. Twilio passes the RecordingUrl and RecordingSid parameters along with its request to the URL we specified in the action attribute in the /voice route.

We then transcribe the recording using the transcribe_audio helper function, loop through the results to get the transcript, and say the feedback to the user. We then prompt the user to either record again or hang up the call.

Similar to the /voice route, the /record route handles the user’s selection from the previous route. If the caller presses “1,” we initiate a new recording session with a 30-second limit, and then send the recording to /handle-recording. If the caller presses “2,” we thank them and end the call.

Define the helper functions

To keep things neat and organized, we will be making use of helper functions to perform specific tasks.

To do that, we’ll need to install the modules that these functions depend on.

In your terminal, enter the command below:

Next, create a feedback.py file to handle audio transcription and provide language feedback using Google Cloud’s Speech-to-Text API.

As with the app.py file, import the installed modules into feedback.py:

Remember when we talked about environment variables and the .env file? The dotenv module loads those variables into the environment, and the os module lets us read them. These credentials are important because they allow our app to authenticate with Twilio so it can download the recording once it is ready.
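A small sketch of that lookup follows. It assumes your .env file stores the values under TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN, which are hypothetical key names, so match whatever you chose. It also assumes load_dotenv() from python-dotenv has already run. Failing early with a clear error is a design choice on our part, not something the tutorial prescribes.

```python
import os


def get_twilio_credentials():
    """Read the Twilio credentials that load_dotenv() placed in the environment.

    The key names TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN are assumptions.
    """
    account_sid = os.getenv("TWILIO_ACCOUNT_SID")
    auth_token = os.getenv("TWILIO_AUTH_TOKEN")
    if not account_sid or not auth_token:
        # Surface a missing .env entry now instead of as a 401 from Twilio later.
        raise RuntimeError("Twilio credentials are missing; check your .env file.")
    return account_sid, auth_token
```

The returned tuple can be passed directly as the auth argument of an HTTP request that downloads the recording.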

Google Cloud Storage and the Speech-to-Text API

The Google Speech-to-Text API transcribes audio recordings into text using powerful machine learning models. You send your audio data to the API, and it responds with a text transcription of the audio data.

Currently, you can only supply remote audio files to the API via a Google Cloud Storage URI.

Google Cloud Storage (GCS) is a service that allows you to store and access data such as files and images, which are stored in containers called buckets. You can interact with GCS using the web interface, client libraries, or the command line interface (CLI) to perform operations such as downloads, uploads, etc.

To get your Google Cloud account up and running with these two services, follow the straightforward setup guides that the Google Cloud documentation provides for Cloud Storage and for Speech-to-Text.

After you’re done setting up both services, it’s time to transcribe the voice recording. Add the lines of code below to your feedback.py file:

In the helper function upload_to_gcs, we initialize a storage_client which we use to retrieve the bucket from our GCS account. We choose a destination blob name which in this case is the file path where we want to store our blob object. We then create a blob object from the file and it is this blob object that we upload to our bucket on GCS. We can then craft a valid GCS URI for use with the Speech-to-Text API.
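A sketch of upload_to_gcs using the google-cloud-storage client library is shown below. It assumes the recording arrives as raw WAV bytes rather than a file on disk, and that Application Default Credentials are configured for your project. The separate gcs_uri helper is a hypothetical addition. The import inside the function is deferred so the URI logic can be tried without the library installed.

```python
def gcs_uri(bucket_name, blob_name):
    """Build the gs:// URI that Speech-to-Text expects for a stored object."""
    return f"gs://{bucket_name}/{blob_name}"


def upload_to_gcs(audio_bytes, bucket_name, destination_blob_name, content_type="audio/wav"):
    # Deferred import so gcs_uri() is usable without google-cloud-storage.
    from google.cloud import storage

    storage_client = storage.Client()  # uses Application Default Credentials
    bucket = storage_client.bucket(bucket_name)  # bucket handle, no extra API call
    blob = bucket.blob(destination_blob_name)  # destination path inside the bucket
    blob.upload_from_string(audio_bytes, content_type=content_type)
    return gcs_uri(bucket_name, destination_blob_name)
```

For example, upload_to_gcs(data, "my-bucket", "recordings/RE123.wav") would return "gs://my-bucket/recordings/RE123.wav", ready to hand to the recognition request. Here, data stands in for the downloaded WAV bytes, and the bucket and blob names are placeholders.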

In transcribe_audio, we instantiate a SpeechClient from the google.cloud.speech library. Next, we download the audio file from Twilio, authenticating with our Twilio credentials. We upload this audio file to GCS with the previously described upload_to_gcs function, which returns the file’s URI. We use this URI, together with our desired transcription settings, to configure the request. Finally, we call the recognize method on the client with this configuration and return the response we receive from the API.
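The transcription flow above might look roughly like this with the google-cloud-speech and requests libraries. Several details are assumptions: the bucket_name parameter, the recording_media_url helper, the recordings/ blob prefix, and the TWILIO_ACCOUNT_SID/TWILIO_AUTH_TOKEN variable names. The upload step is inlined so the block stands alone. Word-level confidence and time offsets are switched on because the feedback functions depend on them. No sample rate is set because Speech-to-Text reads it from the WAV header.

```python
import os


def recording_media_url(recording_url):
    """Return the URL that serves the Twilio recording as a WAV file."""
    return recording_url if recording_url.endswith(".wav") else recording_url + ".wav"


def transcribe_audio(recording_url, language_code, bucket_name):
    # Deferred imports so recording_media_url() works without these packages.
    import requests
    from google.cloud import speech, storage

    media_url = recording_media_url(recording_url)

    # Download the recording, authenticating with the Twilio credentials.
    download = requests.get(
        media_url,
        auth=(os.environ["TWILIO_ACCOUNT_SID"], os.environ["TWILIO_AUTH_TOKEN"]),
        timeout=30,
    )
    download.raise_for_status()

    # Upload to Cloud Storage so Speech-to-Text can read the audio by URI.
    blob_name = "recordings/" + media_url.rsplit("/", 1)[-1]
    blob = storage.Client().bucket(bucket_name).blob(blob_name)
    blob.upload_from_string(download.content, content_type="audio/wav")
    gcs_uri = f"gs://{bucket_name}/{blob_name}"

    client = speech.SpeechClient()
    config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
        language_code=language_code,
        enable_word_confidence=True,  # per-word confidence for pronunciation feedback
        enable_word_time_offsets=True,  # per-word timings for fluency and pause feedback
    )
    audio = speech.RecognitionAudio(uri=gcs_uri)
    # Synchronous recognition; the caller iterates over response.results.
    return client.recognize(config=config, audio=audio)
```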

Remember, we’re calling transcribe_audio from the /handle-recording route and then looping through the results. It is also in this route that we provide feedback to the user via the provide_feedback helper function. This is the final piece of the puzzle, so go ahead and add these lines to feedback.py:

In analyze_pronunciation we evaluate pronunciation accuracy based on the confidence scores of the words that were provided in the results response. We iterate through the words and collect low-confidence words with a score below 0.8. If there are none, we return a positive feedback message. If there are, we construct a message asking the user to check their pronunciation of those words.
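Below is a minimal sketch of analyze_pronunciation. It assumes the function receives response.results from Speech-to-Text with word confidence enabled. The iter_words helper and the feedback wording are hypothetical, while the 0.8 threshold comes from the text above. The SimpleNamespace objects at the bottom only mimic the shape of real results so you can try the function offline.

```python
from types import SimpleNamespace


def iter_words(results):
    """Yield the word entries of each result's top alternative."""
    for result in results:
        if result.alternatives:
            yield from result.alternatives[0].words


def analyze_pronunciation(results, threshold=0.8):
    # Collect every word the recognizer was less than `threshold` sure about.
    low_confidence = [w.word for w in iter_words(results) if w.confidence < threshold]
    if not low_confidence:
        return "Your pronunciation was clear. Well done!"
    return "Check your pronunciation of: " + ", ".join(low_confidence) + "."


if __name__ == "__main__":
    # Offline stand-in shaped like a Speech-to-Text result.
    words = [
        SimpleNamespace(word="hola", confidence=0.95),
        SimpleNamespace(word="perro", confidence=0.62),
    ]
    print(analyze_pronunciation([SimpleNamespace(alternatives=[SimpleNamespace(words=words)])]))
```

Keep in mind that recognition confidence reflects how sure the model is about each word, so it is a rough proxy for pronunciation rather than a direct measurement of it.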

In the analyze_fluency function, we evaluate speech fluency based on the transcribed audio results. We calculate the total speaking time and word count from the speech segments to compute the speech rate in words per minute. We then suggest improvements if the speech rate is too slow or too fast, and commend the user if it is within the optimal range.
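Here is one way to compute the speaking rate described above. Each segment’s speaking time is measured from its first word’s start to its last word’s end, which assumes word time offsets are enabled. The 100 to 160 words-per-minute target range is a hypothetical placeholder, because the tutorial does not state its thresholds.

```python
from datetime import timedelta
from types import SimpleNamespace

# Hypothetical target range in words per minute; tune it for your learners.
MIN_WPM, MAX_WPM = 100, 160


def analyze_fluency(results):
    total_seconds = 0.0
    word_count = 0
    for result in results:
        words = result.alternatives[0].words if result.alternatives else []
        if not words:
            continue
        # Speaking time of this segment: first word's start to last word's end.
        total_seconds += (words[-1].end_time - words[0].start_time).total_seconds()
        word_count += len(words)

    if total_seconds <= 0:
        return "I couldn't measure your speaking rate. Try speaking a little longer."

    wpm = word_count / (total_seconds / 60)
    if wpm < MIN_WPM:
        return f"You spoke at about {wpm:.0f} words per minute. Try to speak a bit faster."
    if wpm > MAX_WPM:
        return f"You spoke at about {wpm:.0f} words per minute. Try slowing down a little."
    return f"You spoke at about {wpm:.0f} words per minute, a comfortable pace. Nice work!"


if __name__ == "__main__":
    # Offline stand-in: five words spread over 2.4 seconds.
    words = [
        SimpleNamespace(start_time=timedelta(seconds=i * 0.48), end_time=timedelta(seconds=(i + 1) * 0.48))
        for i in range(5)
    ]
    print(analyze_fluency([SimpleNamespace(alternatives=[SimpleNamespace(words=words)])]))
```

With the google-cloud-speech client, word start and end times arrive as datetime.timedelta values. That is why the sketch subtracts them and calls total_seconds().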

In analyze_pauses we check for significant pauses in speech. This enables us to track the time between spoken words so we can identify pauses longer than one second. If we detect pauses, we give the suggestion to reduce them, while noting the longest pause. If no significant pauses are found, we commend the user for maintaining a steady pace.
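The following is a sketch of the pause check, using the one-second threshold from the text. It relies on word time offsets, which Speech-to-Text reports relative to the start of the audio, so gaps can be measured across result segments. The message wording is our own.

```python
from datetime import timedelta
from types import SimpleNamespace


def analyze_pauses(results, min_pause=1.0):
    pauses = []
    previous_end = None
    for result in results:
        if not result.alternatives:
            continue
        for word in result.alternatives[0].words:
            start = word.start_time.total_seconds()
            # A gap longer than min_pause since the previous word counts as a pause.
            if previous_end is not None and start - previous_end > min_pause:
                pauses.append(start - previous_end)
            previous_end = word.end_time.total_seconds()

    if not pauses:
        return "You kept a steady pace without long pauses. Great job!"
    return (
        f"I noticed {len(pauses)} pause(s) longer than {min_pause:g} second(s); "
        f"the longest was {max(pauses):.1f} seconds. Try to reduce them."
    )


if __name__ == "__main__":
    # Offline stand-in: a 1.5-second gap between the second and third words.
    spans = [(0, 0.5), (0.6, 1.0), (2.5, 3.0)]
    words = [SimpleNamespace(start_time=timedelta(seconds=s), end_time=timedelta(seconds=e)) for s, e in spans]
    print(analyze_pauses([SimpleNamespace(alternatives=[SimpleNamespace(words=words)])]))
```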

provide_feedback combines the messages from all three analysis functions and returns them as a single feedback string.

Running the Application

Now that you’ve written all the code required to run your app, there are still a few more steps before it can start to function. First, you’ll need to get your local server running. In the root directory of your project, open a terminal and enter the command:

You should see a message printed to your console that confirms your app is running.

Expose the web server with ngrok

To make your application accessible over the internet, you’ll need to expose your local server with a tool called ngrok. With the quickstart docs, you can be up and running in minutes.

Configure Twilio Webhooks

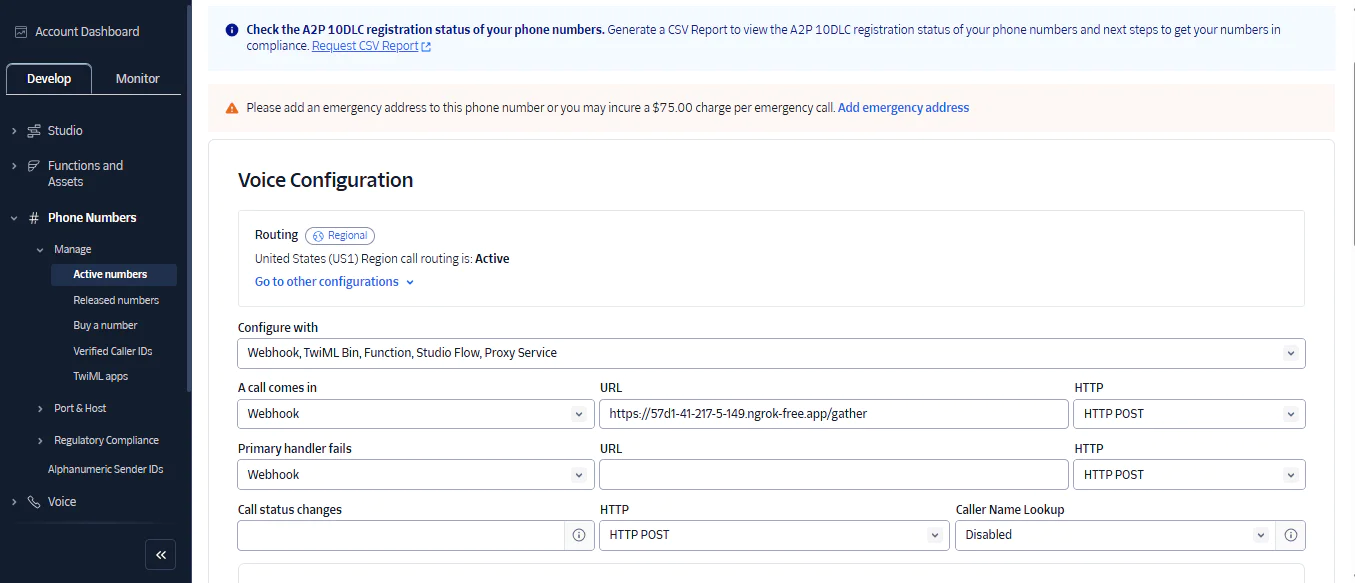

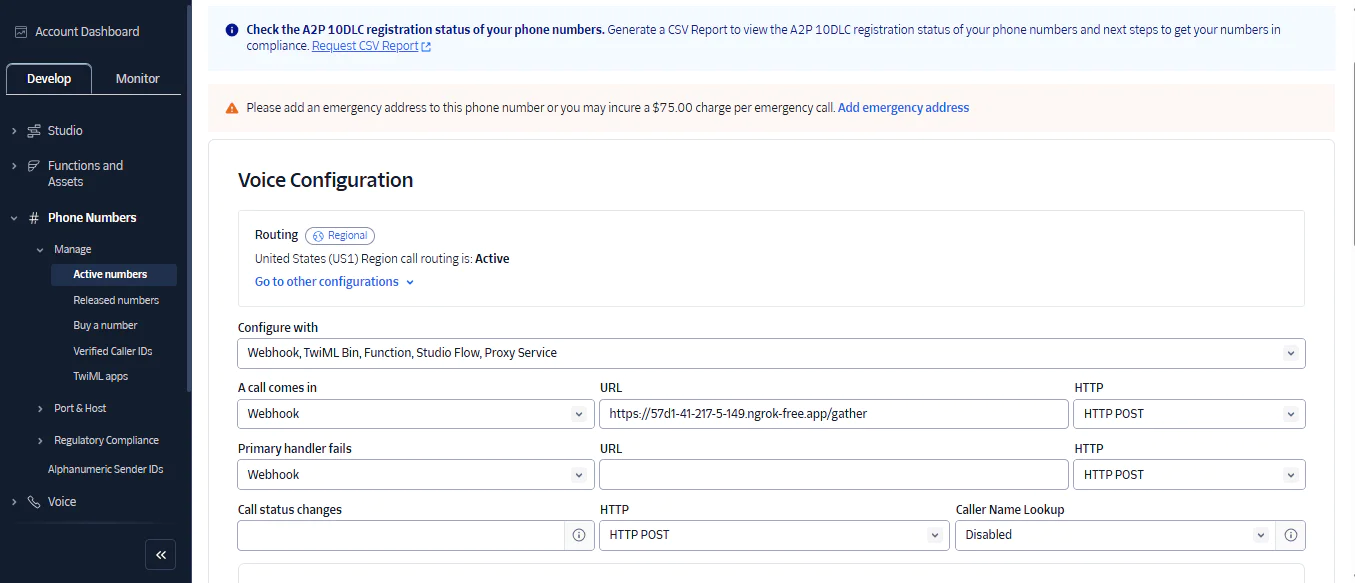

After you’re done setting up ngrok, it’s time to configure the Twilio webhook for your app:

- Log in to your Twilio Console

- Navigate to Phone Numbers under the Develop section

- Click on Manage and then Active numbers

You should see a screen similar to the image below:

- Under Configure with, select “Webhook, TwiML Bin, Function, Studio Flow, Proxy Service”.

- Under A call comes in, select Webhook.

- In the URL input to the right, enter the URL provided by ngrok, making sure to append your app’s entry route (/gather) to it.

- Scroll to the bottom of the page and save your configuration.

That’s it! Your Twilio phone number is now configured to route incoming calls to your local server via a webhook.

Test your app

You can confirm that your app works by placing a call from your personal phone to your Twilio number. You should be greeted with the message from the /gather route.

A couple of pro tips… Always remember to:

- Activate your virtual environment if it ever gets deactivated for any reason

- Append your app’s entry route to your ngrok webhook if you’re not using a static URL

What's next for building Programmable Voice apps?

Congratulations! You have successfully built a Language Learning Assistant that provides speech analysis and evaluates pronunciation accuracy and fluency.

I hope this was as much fun for you as it was for me.

You can improve your app’s functionality by integrating other tools such as the OpenAI completions API and setting up a conversation between the user and AI in their language of choice.

Danny Santino is a Software Development Team Lead at Tommie-Farids Limited. You can find his professional portfolio at dannysantino.com and on GitHub.
