Offline Transcription and TTS using Vosk and Bark


Speech recognition and text-to-speech (TTS) are crucial parts of any phone tree, call center, or telephony app. The traditional ways to build these features have one thing in common: they rely on external API calls that require third-party access and transmit your recording files over the Internet. Don’t get me wrong: this process works great, and Twilio’s secure connections for products such as Voice and AI Assistants are discussed on our subprocessors page.

Out of curiosity though: is it possible to generate TTS and transcribe recordings locally, using AI? In this article, we will look at using Vosk and Bark for offline speech recognition and generation.

Prerequisites

Before starting, be sure to have the following ready:

- A Twilio account

- Python 3.x

- Twilio Python Helper Library

- Vosk

- Vosk Models

- Bark

Recording and Transcribing call audio with Twilio

A Call Recording is an audio file containing part or all of the RTP media transmitted during a Call.

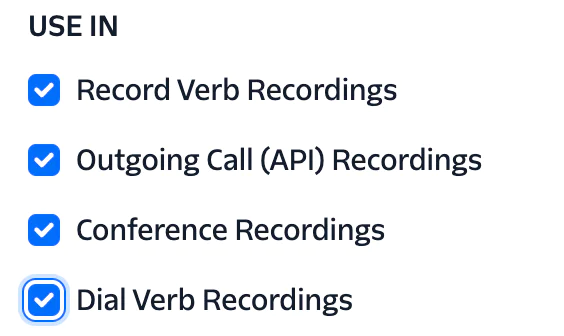

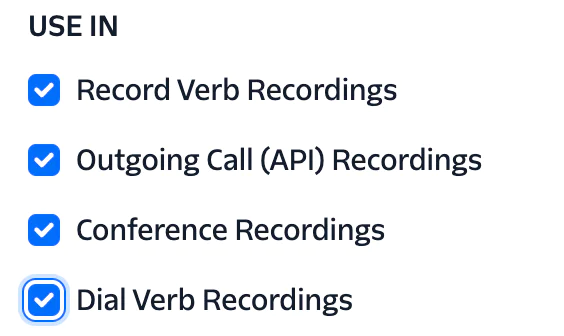

With Twilio, customers can record and transcribe phone calls by setting certain parameters when using <Record>, <Dial>, Conferences, <Transcription>, or Outbound API.

You can also generate dynamic messages and responses on a call with <Say> and <Play>.

Recordings can be modified during an in-progress call, so the recording time and call time may not be the same.

<Record>

Setting the transcribe attribute to True will send the recorded audio to our transcription provider, and the transcription will be returned.

A transcribeCallback URL is optional, but a good idea for tracking the status of the Transcription.

When the transcription has been processed (or if it fails for some reason) the transcribeCallback URL will be hit with a payload containing the text, status, and other related info.
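As a rough sketch, the TwiML for a transcribed recording could be built like this. It uses only Python's standard library so it runs anywhere; the Twilio Python Helper Library can produce equivalent markup. The callback URL and the helper name build_record_twiml are placeholders for illustration, not something from Twilio's docs.

```python
import xml.etree.ElementTree as ET


def build_record_twiml(callback_url: str, max_length: int = 30) -> str:
    """Return TwiML that records the caller and requests a transcription."""
    response = ET.Element("Response")
    ET.SubElement(response, "Say").text = "Please leave a message after the beep."
    ET.SubElement(
        response,
        "Record",
        {
            "transcribe": "true",
            # Hypothetical endpoint that receives the transcription payload.
            "transcribeCallback": callback_url,
            "maxLength": str(max_length),
        },
    )
    return ET.tostring(response, encoding="unicode")


twiml = build_record_twiml("https://example.com/transcription-done")
print(twiml)
```

Your webhook would return this string with a text/xml content type when Twilio requests instructions for the call.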

<Dial>

Outgoing Calls made using <Dial> can be recorded in a few different ways, and the Recording file can be transcribed after completion of the call, using an external service.

Conferences

Conference legs made using <Conference> TwiML can be recorded by setting the record attribute.

Similarly, Conferences made using the Conference Participant API can be recorded in different ways using the record, conferenceRecord, recordTrack, and other attributes. It can get complex, so test various configurations to find one that works for you.

In any case, transcriptions of Conference recordings would need to be made after the fact as well.

Media Streams

From the docs: “Media Streams gives access to the raw audio stream of your Programmable Voice calls by sending the live audio stream of the call to a destination of your choosing using WebSockets.”

A common implementation of real-time call transcription involves Media Streams in conjunction with an external speech recognition provider. This demo code integrates the Google Cloud Speech API into the WebSocket server that receives the forked RTP media from an ongoing call.
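To make the WebSocket side a little more concrete, here is a minimal sketch of how a server might unpack the JSON messages Media Streams sends. It assumes the documented start, media, and stop events, with audio arriving as base64-encoded 8 kHz mu-law; the StreamBuffer class is a hypothetical helper, and the WebSocket server itself is left out.

```python
import base64
import json


class StreamBuffer:
    """Collects the raw audio carried by Media Streams "media" messages."""

    def __init__(self) -> None:
        self.stream_sid = None
        self.audio = bytearray()  # base64-decoded mu-law bytes
        self.closed = False

    def handle(self, raw_message: str) -> None:
        message = json.loads(raw_message)
        event = message.get("event")
        if event == "start":
            self.stream_sid = message.get("streamSid")
        elif event == "media":
            self.audio.extend(base64.b64decode(message["media"]["payload"]))
        elif event == "stop":
            self.closed = True
        # Other events (for example "connected") are ignored in this sketch.


# Simulated messages; 0xFF is silence in mu-law encoding.
buffer = StreamBuffer()
payload = base64.b64encode(b"\xff\xff\xff").decode("ascii")
for msg in [
    json.dumps({"event": "start", "streamSid": "MZ123"}),
    json.dumps({"event": "media", "streamSid": "MZ123", "media": {"payload": payload}}),
    json.dumps({"event": "stop", "streamSid": "MZ123"}),
]:
    buffer.handle(msg)
```

The collected bytes would still need converting from mu-law to 16-bit PCM before a recognizer such as Vosk could consume them.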

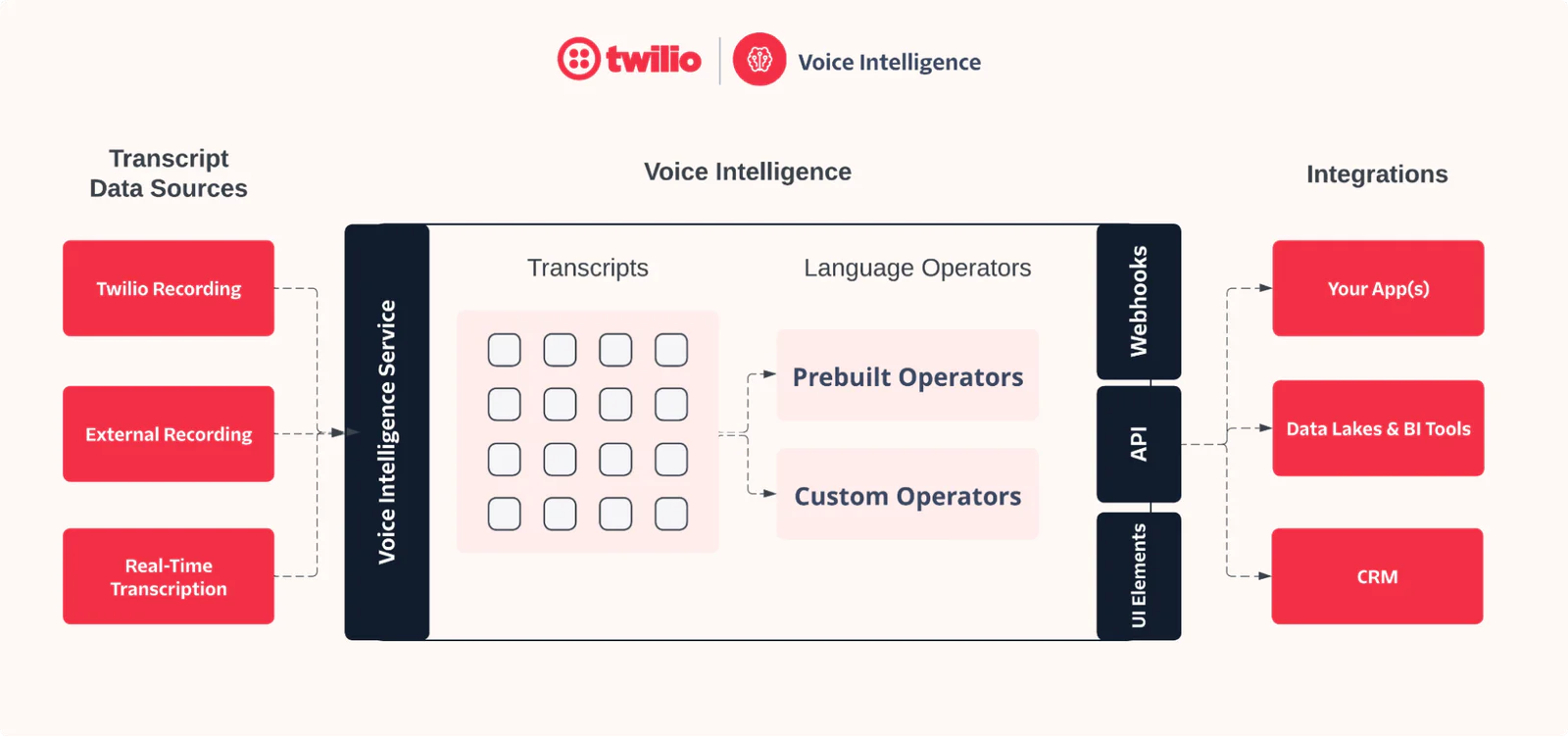

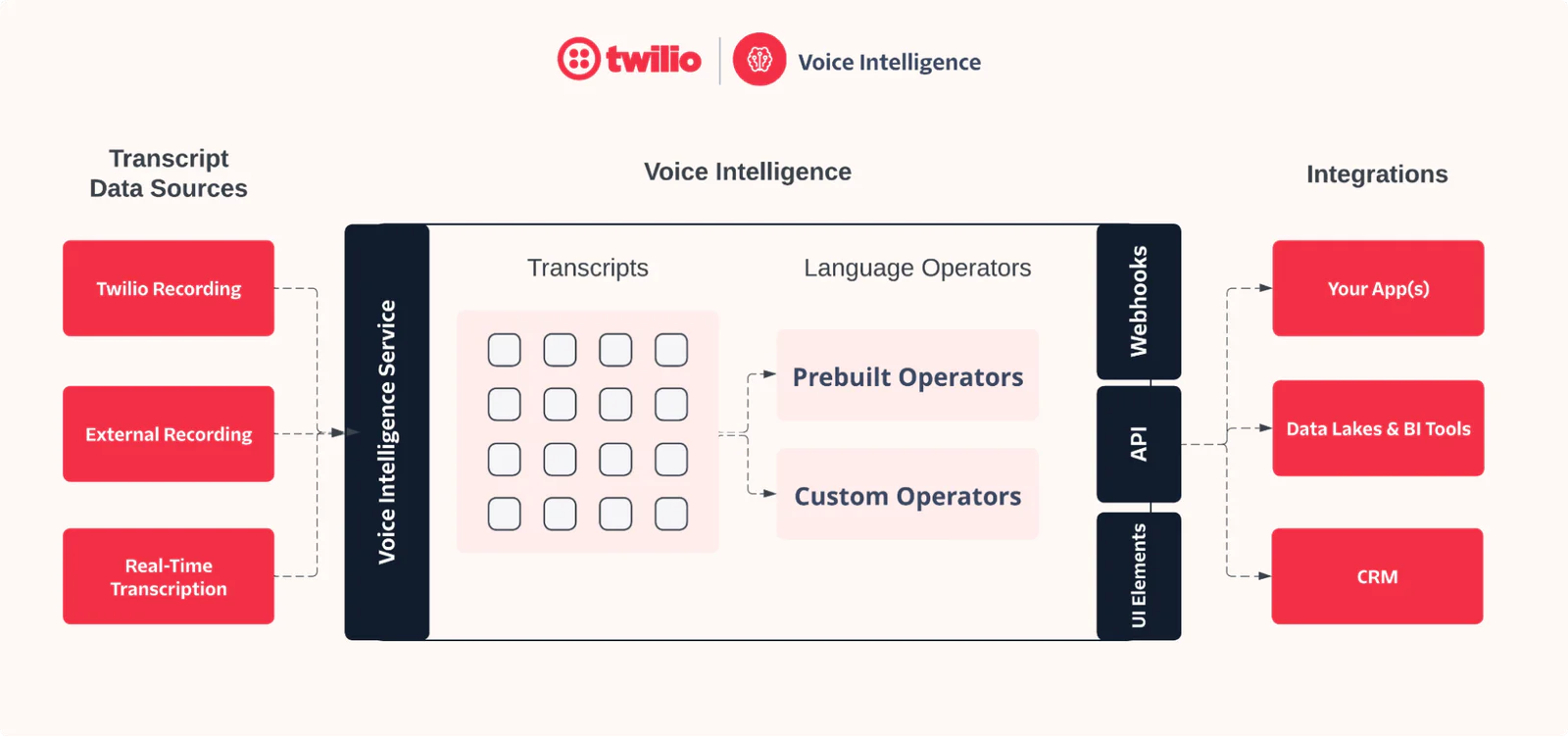

Voice Intelligence Transcripts

Voice Intelligence leverages artificial intelligence and machine learning technologies to transcribe and analyze recorded conversations.

Check out the docs for a deeper dive on Transcripts.

<ConversationRelay>

Twilio customers can now pipe call audio over WebSockets to their own LLM provider for transcription, translation, and much more. The <ConversationRelay> noun, under the <Connect> verb, supports a wide array of languages and voices, and is a great AI-powered enhancement to your call flows.

Check out the <ConversationRelay> Onboarding guide (and some cool blog posts) for more information.

Marketplace

The Marketplace allows customization and flexibility by integrating different external providers and functionality for operations like transcribing calls.

For example, the IBM Watson Speech to Text add-on will transcribe <Dial> and other types of recordings automatically, and hit a status callback URL when done.

TTS with Twilio

<Say>

TTS, or text to speech, is a technology that converts written text into spoken words. <Say> uses TTS to generate audio of given text on the fly, which can be played on a live call.

The Basic voices, man and woman, are free to use.

You can use Google and Amazon Polly voices with the <Say> verb for a small additional cost.

Furthermore, the Standard Voices enable use of Speech Synthesis Markup Language (SSML) to modify speed and volume, or to interpret different strings in different ways.
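For illustration, a <Say> with an SSML prosody tag might be generated like this. The sketch uses the standard library rather than the Twilio helper library, and both the voice name (Polly.Joanna) and the build_say_twiml helper are example choices, so check the voice list for what your account supports.

```python
import xml.etree.ElementTree as ET


def build_say_twiml(text: str, voice: str = "Polly.Joanna", rate: str = "slow") -> str:
    """Return TwiML that speaks `text` with an SSML <prosody> rate applied."""
    response = ET.Element("Response")
    say = ET.SubElement(response, "Say", {"voice": voice})
    # <prosody> is an SSML element; rate controls speaking speed.
    ET.SubElement(say, "prosody", {"rate": rate}).text = text
    return ET.tostring(response, encoding="unicode")


say_twiml = build_say_twiml("Your verification code is 4 2 7 1.")
print(say_twiml)
```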

<Play>

Some organizations opt for recording human speech or including music/sound effects in their phone app to reinforce a seamless customer experience across all interactions. Once the recording is stored somewhere, the <Play> TwiML verb can be invoked to “play” these media files on a live call.

Playing media files like this is less flexible, since the recordings need to be made ahead of time. However, you can prepare prerecorded numbers, letters, and common words to <Play> dynamically for account numbers, addresses, or certain phrases.
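One way to picture the dynamic approach: spell out an account number by chaining one <Play> per character. This is only a sketch, and it assumes you host a clip per letter and digit at a URL pattern like the hypothetical BASE_URL below.

```python
import xml.etree.ElementTree as ET

# Hypothetical location of prerecorded clips named a.mp3, b.mp3, 0.mp3, 1.mp3, ...
BASE_URL = "https://example.com/audio"


def build_spelled_twiml(characters: str, base_url: str = BASE_URL) -> str:
    """Return TwiML with one <Play> per letter or digit, skipping punctuation."""
    response = ET.Element("Response")
    for char in characters:
        if char.isalnum():
            ET.SubElement(response, "Play").text = f"{base_url}/{char.lower()}.mp3"
    return ET.tostring(response, encoding="unicode")


spelled_twiml = build_spelled_twiml("A1-7")
print(spelled_twiml)
```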

Building Local Options

Speech Recognition with Vosk

Vosk is a speech recognition toolkit that incorporates machine learning models trained to convert spoken language into written text. During inference, Vosk processes audio input to extract features like phonemes and matches them against the learned patterns to predict the most likely words and phrases being spoken.

- Vosk is an offline speech recognition library, using various language models that can be downloaded and used locally.

- Vosk has an Asterisk module for more… intense integrations.

- Vosk has been used before to transcribe calls in real time with Twilio Media Streams.

To transcribe Call Recordings locally, we should create a few pieces of logic to handle different stages of the process.

First, we need to save the Recording files locally. When a recording has been processed and is ready to download, the RecordingStatusCallback URL will be hit. We can use this opportunity to download the Call Recording locally, and delete it from Twilio’s stores once the local download has been validated. This keeps your data local, and helps avoid storage costs.

My plumb-bob Node/Express app has a built-in custom RecordingStatusCallback endpoint that takes care of this.

This route is not fit for production use, but it illustrates the desired logic for demonstration purposes.

- This endpoint is hit with the Recording Status payload

- The recording is downloaded locally

- The Recording is deleted from Twilio’s cloud storage
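The steps above can be sketched in Python as well. This is not the plumb-bob implementation, just a minimal illustration under a few assumptions: the callback form carries RecordingSid, RecordingUrl, and RecordingStatus, appending .wav to the URL returns WAV audio, and the download and delete functions are injected so you can plug in your own HTTP client or the Twilio helper library. The function names are hypothetical.

```python
import tempfile
import urllib.request
from pathlib import Path
from typing import Callable, Optional


def fetch_wav(url: str) -> bytes:
    """Download a recording (add HTTP auth here if your account enforces it)."""
    with urllib.request.urlopen(url) as resp:
        return resp.read()


def handle_recording_callback(
    form: dict,
    dest_dir: Path,
    download: Callable[[str], bytes] = fetch_wav,
    delete: Callable[[str], None] = lambda sid: None,
) -> Optional[Path]:
    """Save a completed recording locally, then delete the remote copy."""
    if form.get("RecordingStatus") != "completed":
        return None
    sid = form["RecordingSid"]
    audio = download(form["RecordingUrl"] + ".wav")
    # Minimal validation: WAV files begin with a RIFF header.
    if not audio.startswith(b"RIFF"):
        raise ValueError(f"{sid}: download does not look like a WAV file")
    target = dest_dir / f"{sid}.wav"
    target.write_bytes(audio)
    if target.stat().st_size != len(audio):
        raise OSError(f"{sid}: local file size does not match the download")
    # Only delete from Twilio once the local copy has been checked.
    delete(sid)
    return target


# Dry run with fake download/delete functions instead of real network calls.
fake_wav = b"RIFF" + b"\x00" * 40
deleted = []
with tempfile.TemporaryDirectory() as tmp:
    saved = handle_recording_callback(
        {
            "RecordingStatus": "completed",
            "RecordingSid": "RE123",
            "RecordingUrl": "https://example.com/Recordings/RE123",
        },
        Path(tmp),
        download=lambda url: fake_wav,
        delete=deleted.append,
    )
    saved_size = saved.stat().st_size
```

In a real app, the delete argument could wrap the helper library's recording delete call, and the handler would sit behind whatever web framework receives the webhook.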

Alternatively, recordings can be downloaded in batches by periodically looping through the Recordings Resource, and performing the download/delete functions one by one.

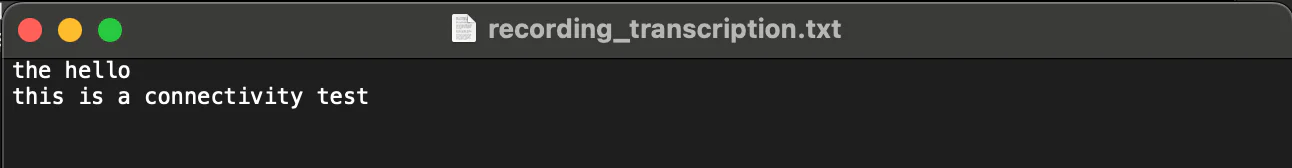

Either way, once you have recordings to transcribe locally, Vosk comes with a simple vosk-transcriber command line tool.

The output file is simple, but gives us what we asked for: the text of the recording.

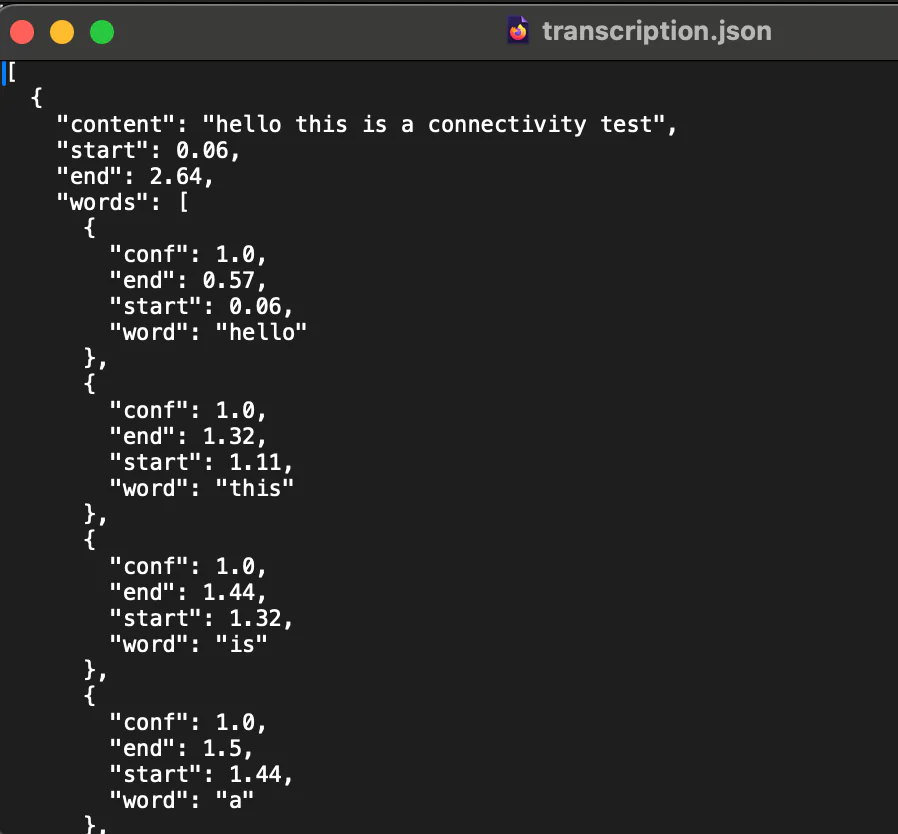

This example is a bit more complex, outputting timestamped JSON.

It begins by loading a pre-trained Vosk model and opening an audio file for reading. The KaldiRecognizer is initialized and the Vosk model is fed the audio data in chunks. If the recognizer accepts the audio chunk, it parses the recognized result into JSON to extract the transcribed text and its word-level timing information. Once the entire audio is processed, the transcription results are structured with each segment's text content, start time, and end time.

Finally, the transcription with all segments is saved in a JSON file.
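A minimal sketch of that flow might look like the following. It assumes a mono 16-bit PCM WAV file and a downloaded Vosk model directory; the function names and the output layout (a top-level "segments" list) are illustrative choices. Vosk is imported inside transcribe so the parsing helper can be tried without it installed.

```python
import json
import wave


def segment_from_result(result_json: str):
    """Turn one Vosk result into a segment dict, or None if no words were found."""
    result = json.loads(result_json)
    words = result.get("result", [])
    if not words:
        return None
    return {
        "text": result.get("text", ""),
        "start": words[0]["start"],  # start time of the first word, in seconds
        "end": words[-1]["end"],  # end time of the last word, in seconds
    }


def transcribe(wav_path: str, model_path: str, out_path: str) -> list:
    """Feed a WAV file to Vosk in chunks and save timestamped segments as JSON."""
    from vosk import KaldiRecognizer, Model

    segments = []
    with wave.open(wav_path, "rb") as wf:
        recognizer = KaldiRecognizer(Model(model_path), wf.getframerate())
        recognizer.SetWords(True)  # include word-level timing in results
        while True:
            chunk = wf.readframes(4000)
            if not chunk:
                break
            if recognizer.AcceptWaveform(chunk):
                segment = segment_from_result(recognizer.Result())
                if segment:
                    segments.append(segment)
        final = segment_from_result(recognizer.FinalResult())
        if final:
            segments.append(final)
    with open(out_path, "w", encoding="utf-8") as fh:
        json.dump({"segments": segments}, fh, indent=2)
    return segments


# Try the parsing helper on a hand-written result in Vosk's word-timing format.
sample = json.dumps({
    "result": [
        {"conf": 1.0, "start": 0.5, "end": 0.9, "word": "hello"},
        {"conf": 0.9, "start": 1.0, "end": 1.4, "word": "world"},
    ],
    "text": "hello world",
})
segment = segment_from_result(sample)
```

Dual-channel recordings would need to be mixed down or split into mono files first.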

N-gram analysis can enhance customer service and improve decision-making processes in telephony applications by providing insights into communication patterns and customer interactions.

- Sentiment Analysis - By examining frequent n-grams (sequences of words) associated with positive or negative sentiments, companies can gauge customer satisfaction and sentiment.

- Keyword Spotting - Identifying common phrases or keywords can help in detecting recurring themes or topics in calls, such as frequently asked questions or common routing issues.

- Fraud Detection - Unusual or suspicious n-grams can be flagged to identify potential fraudulent activities or calls.
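As a small illustration of the keyword-spotting idea, n-grams can be counted across transcripts with nothing more than the standard library. The transcripts below are made up, and the tokenizer is deliberately simple.

```python
import re
from collections import Counter


def ngrams(text: str, n: int = 2) -> Counter:
    """Count the n-word sequences in a piece of text."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))


# Made-up transcripts standing in for real call transcriptions.
transcripts = [
    "I want to reset my password",
    "how do I reset my password",
    "please cancel my account",
]

counts = Counter()
for transcript in transcripts:
    counts.update(ngrams(transcript))

top = counts.most_common(2)
print(top)
```

Here the two most frequent bigrams both relate to password resets, which hints at a topic worth routing or documenting.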

TTS with Bark

From GitHub:

“Bark is a transformer-based text-to-audio model created by Suno.”

A transformer-based model uses transformer architecture, a type of neural network architecture used for processing text and other sequential data.

Bark converts text input into audio output, generating spoken language or other audio forms from written text. A lot of services perform the same function, but Bark works locally, using your machine's resources instead of someone else’s computer in the sky.

This example from the readme ‘just works’.

Upon the initial execution, the models will be downloaded. Subsequent runs will be faster as the models are already in place.
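A sketch along the lines of the README might look like this. The README writes the file with scipy; this version swaps in Python's built-in wave module to avoid the extra dependency, so save_pcm16_wav and speak_to_file are hypothetical helpers rather than part of Bark. The voice preset is just an example, and Bark is imported inside the function so the WAV helper can be tried on its own.

```python
import os
import struct
import tempfile
import wave


def save_pcm16_wav(path: str, samples, sample_rate: int) -> None:
    """Write float samples in [-1.0, 1.0] as a mono 16-bit PCM WAV file."""
    frames = bytearray()
    for sample in samples:
        clipped = max(-1.0, min(1.0, float(sample)))
        frames += struct.pack("<h", int(clipped * 32767))
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(sample_rate)
        wf.writeframes(bytes(frames))


def speak_to_file(text: str, path: str, voice: str = "v2/en_speaker_6") -> None:
    """Generate speech with Bark and save it as a WAV file."""
    from bark import SAMPLE_RATE, generate_audio, preload_models

    preload_models()  # downloads the models on first use
    audio = generate_audio(text, history_prompt=voice)
    save_pcm16_wav(path, audio, SAMPLE_RATE)


# Exercise the WAV helper without Bark, using a few hand-picked samples.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
save_pcm16_wav(demo_path, [0.0, 1.0, -1.0, 2.0], 24000)
with wave.open(demo_path, "rb") as wf:
    demo_rate = wf.getframerate()
    demo_frames = struct.unpack("<4h", wf.readframes(4))
```

With Bark installed, calling speak_to_file("Thanks for calling!", "greeting.wav") would produce a file you could host for <Play>.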

From there, try messing around with different voices and settings. Once the file is generated, you can upload it to Assets or your own storage to be used with <Play>!

Your results may vary in consistency and hilariousness, but fine-tuning is worth a shot.

TTS with Voice Puppet

Voice Puppet (from the same developer as videogrep) uses an audio file of speech to ‘clone’ the voice for use in generating text to speech.

This can come in handy if you want a specific voice but you have too many recordings for a human to reliably provide at scale. For instance, if your phone app is designed to send various announcements or alerts, you may need to constantly make individual recordings yourself. With Voice Puppet, you can use an example recording as a source, and dynamically generate the speech each time.

To get started, grab the code and install with pip.

Depending on your setup, you may need to pin the torch version to something < 2.6, for reasons.

This is okay for the purposes of this demonstration, but please do your own research before using it in a production setting.

In the pyproject.toml file, change line 9 from this:

To this:

Then, make sure you have the build package installed.

To build the package, in your project's root directory (the one containing pyproject.toml), run:

This will generate a dist/ directory containing the wheel and source distribution.

Install with

I tried this out by recording my own voice, and pitch shifting it down for fun.

The resulting file can again be uploaded to the Internet to be used with <Play>.

Conclusion

Twilio offers a wide variety of cutting-edge solutions such as <Say>, Voice Intelligence Transcripts, ConversationRelay, and more for adding speech recognition and text-to-speech (TTS) functionality to your application. That said, in experimenting with Vosk, Bark, and other open source technologies, we've shown that it's possible to transcribe and generate audio locally and effectively as well. The underlying technologies have been around for decades, but recent developments and accelerated research have made AI-powered telephony features more accessible and easier to use than ever.

Please browse these additional resources for more information.

- https://blogs.nvidia.com/blog/what-is-a-transformer-model/

- https://www.twilio.com/docs/voice/twiml/say/text-speech

- https://www.youtube.com/watch?v=clJ6TZSqhF0

- https://www.youtube.com/watch?v=0rAyrmm7vv0

- https://www.youtube.com/watch?v=dI0HErKSz4s

Matt Coser is a Senior Field Security Engineer at Twilio. His focus is on telecom security, and empowering Twilio’s customers to build safely. Contact him on LinkedIn to connect and discuss more.
