Face It: Detecting Emotions in Video Calls with Twilio and AWS Rekognition


Introduction

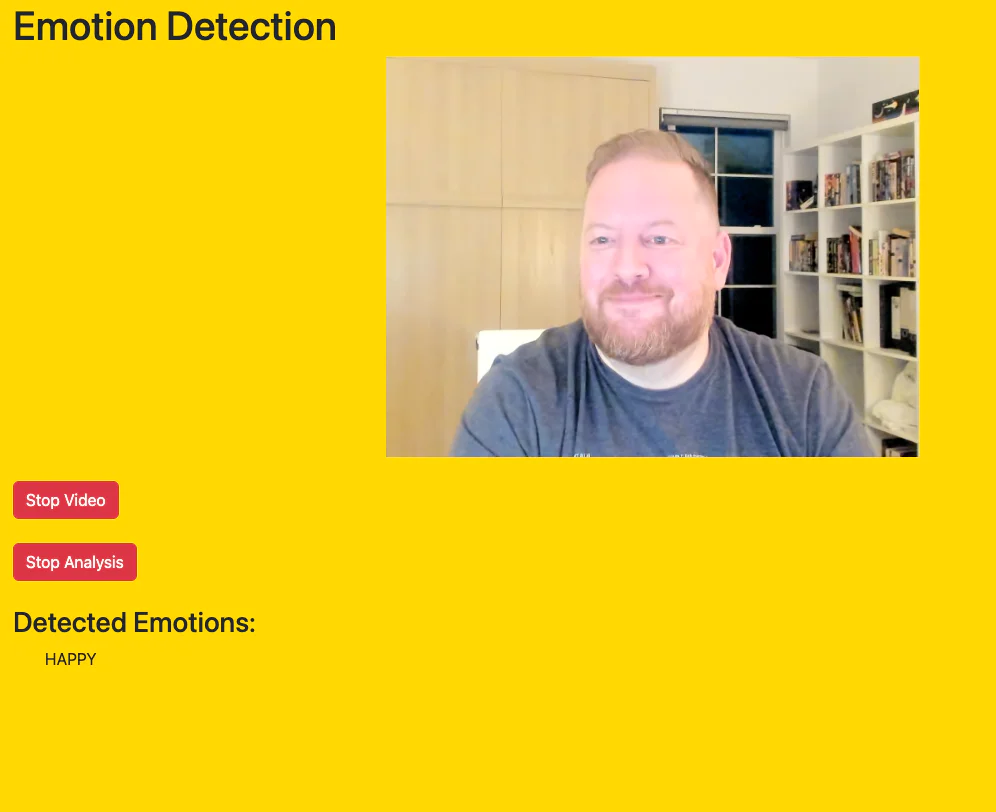

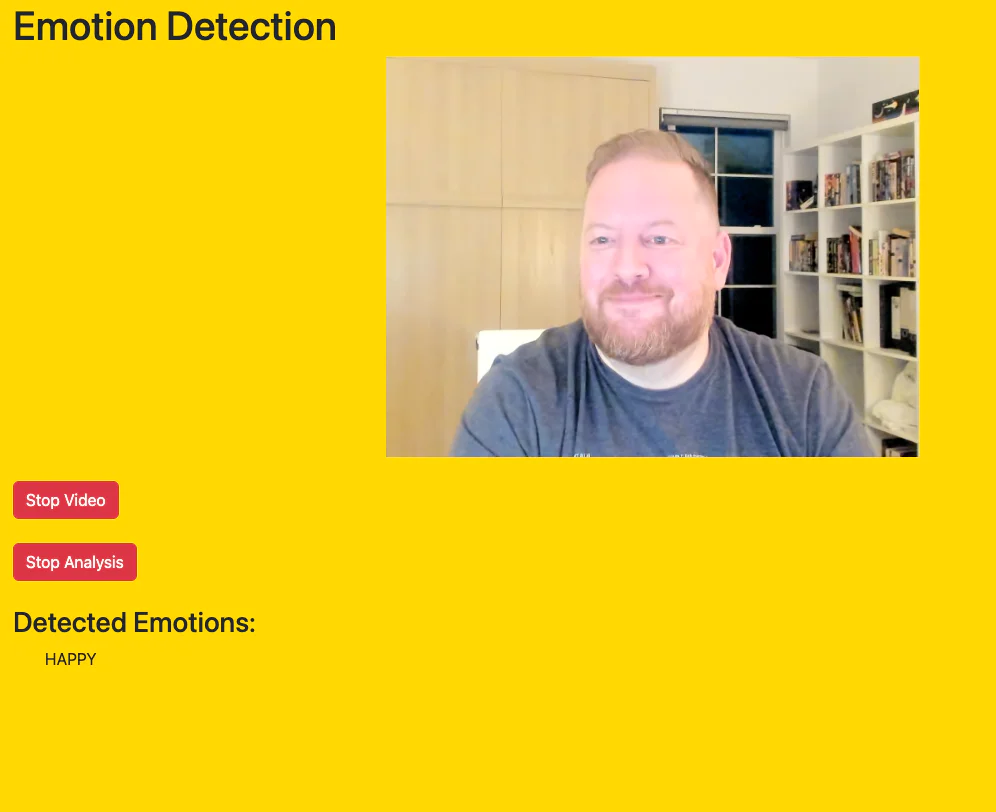

In the world of virtual meetings and video calls, what if we could take things to the next level by actually detecting emotions from Twilio Video in real time? With the power of Twilio Video and AWS Rekognition, we’ll dive into how you can build a system that analyzes facial expressions and gives you insight into how your participants are truly feeling. The Video Emotion Detector app combines real-time video calling via Twilio Video with advanced facial analysis provided by AWS Rekognition. It captures video frames from a participant's webcam, processes them through a canvas element, and sends the frame data to AWS Rekognition to analyze facial expressions. Detected emotions, such as happiness, anger, or sadness, are displayed in real-time, and the app dynamically updates the background color to visually represent the dominant emotion. This seamless integration of technologies provides an interactive and empathetic layer to virtual communication, making video calls more engaging and emotionally aware.

Prerequisites

- A Twilio account with an Account SID, an API Key and an API Secret

- An AWS account with an IAM user that has Rekognition permissions, plus that user's access key ID and secret access key.

- Familiarity with Next.js

- Familiarity with TypeScript

- Familiarity with React

- Familiarity with React-Bootstrap

Building a Real-Time Video Emotion Detection App with Twilio Video and AWS Rekognition

In this guide, we’ll walk through the process of building a video emotion detection application using TypeScript, Next.js, React, React-Bootstrap, Twilio Video, and AWS Rekognition. The goal of this app is to analyze the facial expressions of participants in a Twilio Video room and detect their emotions in real time. By leveraging the capabilities of Twilio Video for handling video calling and AWS Rekognition for emotion analysis, we can create a system that provides meaningful insights into how users are feeling during video calls.

Setting Up the Environment

To begin, you’ll need to set up a new Next.js project with TypeScript and the necessary dependencies. You can easily do this by running the following commands in your terminal:

Setting Up the Twilio API Key

Once the project is set up, make sure your Twilio and AWS accounts are ready. You’ll need a Twilio API Key and Secret to interact with Twilio Video. On the AWS side there is no Rekognition-specific API key: you need an access key ID and secret access key for an IAM user that is allowed to call Rekognition. See creating a Twilio API Key for help in creating your own API Key and Secret.

Setting Up AWS Rekognition

The simplest way to do this is to log in to AWS, navigate to IAM, and create a user with a name like ‘rekognition-user’. Once the user is created:

- Open the Permissions tab and, in the Permission policies section, click Add permission > Create inline policy.

- Select Rekognition under the Service dropdown and tick the All Rekognition actions box to give the user full access to the Rekognition service.

- In the Resources section, select All so the policy applies to all of your resource ARNs.

- Click Next, enter a policy name, and then click Create policy.

- Click Create access key in the user details section to create an access key ID and secret access key, and note both down so you can add them to your environment.

Create a .env file

Create your own .env file in the root of your project. Next.js loads this file automatically, so you do not need to install or call dotenv yourself. On the server side, such as in API routes, you read variables in the normal manner, e.g. process.env.KEY_NAME. Variables that client-side code needs must be prefixed with NEXT_PUBLIC_, e.g. NEXT_PUBLIC_KEY_NAME; Next.js inlines these values into the JavaScript sent to the browser, so anything with that prefix is visible to users of the app. Copy and paste the following into your .env file and replace the placeholders with their respective values:
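The .env contents are not reproduced in this text, so the variable names used below (TWILIO_ACCOUNT_SID, TWILIO_API_KEY, TWILIO_API_SECRET) are assumptions; rename them to match your own file. As a minimal sketch, a small helper like this can make the token route fail fast with a clear message when a server-side variable is missing. It reads from an env-like object, so you can pass process.env in the API route and a plain object in tests.

```typescript
// Hypothetical variable names: rename them to match your own .env file.
const REQUIRED_TWILIO_VARS = ['TWILIO_ACCOUNT_SID', 'TWILIO_API_KEY', 'TWILIO_API_SECRET'] as const

type TwilioVarName = (typeof REQUIRED_TWILIO_VARS)[number]
type TwilioConfig = Record<TwilioVarName, string>

// Reads the Twilio credentials from an env-like object (process.env in an API route)
// and throws a single error that lists every missing or empty variable.
function readTwilioConfig(env: Record<string, string | undefined>): TwilioConfig {
  const missing = REQUIRED_TWILIO_VARS.filter((name) => !env[name])
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`)
  }
  return {
    TWILIO_ACCOUNT_SID: env['TWILIO_ACCOUNT_SID'] as string,
    TWILIO_API_KEY: env['TWILIO_API_KEY'] as string,
    TWILIO_API_SECRET: env['TWILIO_API_SECRET'] as string,
  }
}

// Usage inside pages/api/twilio-token.ts (server side only):
// const { TWILIO_ACCOUNT_SID, TWILIO_API_KEY, TWILIO_API_SECRET } = readTwilioConfig(process.env)
```

Keep a helper like this on the server: Next.js only inlines NEXT_PUBLIC_ values into client code when they are written out literally as process.env.NEXT_PUBLIC_..., so a lookup by name does not work in the browser.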

For this project we are going to use the App Router that ships with the latest version of Next.js (next@latest). If you want to know more about how to set up your project and make sure the layouts and other files are in the right places, take a look at the Next.js Project Structure documentation. In short, we are going to have a src folder that contains everything we need to build this out and take advantage of Next.js features.

Everything related to the app, such as the initial page and the layout, lives within the app folder. We are creating a components folder to hold the VideoEmotionDetector component so that we can reuse it if we wish. Finally, the pages folder contains an api folder for the API routes that the client side calls. Next.js maps each file in pages/api to a route under /api, so a request to /api/twilio-token is handled by pages/api/twilio-token.ts.

React Bootstrap

Navigate to your terminal and install React-Bootstrap together with Bootstrap, which supplies the stylesheet, by running npm install react-bootstrap bootstrap.

Now, open the layout.tsx file within the app folder and add an import of Bootstrap's stylesheet to the top of the file (React-Bootstrap's documentation uses import 'bootstrap/dist/css/bootstrap.min.css'). This styles React-Bootstrap, a well-known React component library that makes building the UI that much easier.

While you are at it, if you want the layout to look exactly like the example here, you might as well replace the default layout function with the following code snippet.

Setting up the Page

The page.tsx file within the app folder needs to be modified so that it displays the VideoEmotionDetector component. The file starts with the ‘use client’ directive, which marks it as a Client Component. Next, we import dynamic from next/dynamic so that we can load the VideoEmotionDetector component dynamically with server-side rendering turned off (ssr: false). Client Components are still pre-rendered on the server by default, and a component that depends on the webcam and other browser-only APIs is a common source of hydration issues, so skipping server rendering for it avoids them. In the App Router, the ssr: false option can only be used inside a Client Component, which is another reason page.tsx needs ‘use client’. I go into more detail on hydration in the Troubleshooting section. Replace the existing code in page.tsx with the following:

Integrating Twilio Video

Twilio Video provides an easy-to-use API for managing video rooms and participants. To get started, we’ll need to create a server-side API route that generates a Twilio access token. This token is essential for authenticating users and participants within a Twilio Video room. You can do this by creating an API route within your Next.js app that uses Twilio's SDK to generate a token for your client-side application. This token will allow your app to connect to a Twilio Video room and participate in video calls.

On the backend, create a new API route at pages/api/twilio-token.ts that authenticates using your Twilio Account SID, API Key, and API Secret. The token generated by this route is used by the client to join the Twilio Video room, where it can send and receive video tracks. Copy the following code into the newly created api/twilio-token.ts file:
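The route's own code is not reproduced in this text. One detail worth getting right in it is reading the caller's identity and room name: in a Pages Router API route, each req.query value can be a string, an array of strings (when a parameter is repeated), or missing. The helper below is a hypothetical sketch that assumes query parameters named identity and room; the resulting values would then go into the AccessToken and VideoGrant classes from the twilio package. A generated fallback identity matters because Twilio disconnects an existing participant when another one joins the same room with the same identity.

```typescript
// Same shape as req.query in a Next.js Pages Router API route.
type Query = Partial<Record<string, string | string[]>>

interface TokenRequest {
  identity: string
  roomName: string
}

// Repeated query parameters arrive as arrays; keep the first value.
function firstValue(value: string | string[] | undefined): string | undefined {
  return Array.isArray(value) ? value[0] : value
}

// Hypothetical parameter names: `identity` and `room`. Blank or missing values
// fall back to a generated identity and a default room name.
function parseTokenRequest(query: Query, defaultRoom: string, makeId: () => string): TokenRequest {
  const identity = firstValue(query['identity'])?.trim() || `user-${makeId()}`
  const roomName = firstValue(query['room'])?.trim() || defaultRoom
  return { identity, roomName }
}

// Usage inside the API route (makeId could be Node's crypto.randomUUID):
// const { identity, roomName } = parseTokenRequest(req.query, 'emotion-room', randomUUID)
```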

Building the Video Emotion Component

Within the components folder, create a new file called videoEmotionDetector.tsx. This file contains all the code that integrates Twilio Video with AWS Rekognition. Copy the following code into the videoEmotionDetector.tsx file:

To start, we create the scaffolding of the component. The ‘use client’ directive tells Next.js that this is a Client Component. Then we import everything we need to make this work. Since we are using React, we can use the useRef, useEffect and useState hooks to hold data and update the UI when it changes. Next, import three things from twilio-video, which we need to create rooms and tracks and to connect participants to rooms. Then import Rekognition from the AWS SDK, which lets us interpret emotions from the video stream. Finally, import the Form and Button components from react-bootstrap, which we use in the user interface later.

The rest of the code in the following sections will be placed inside the VideoEmotionDetector function component.

Create Some Variables

Let's create references to everything we need to tie this together. Begin by declaring refs for the two UI elements: the video element that shows the webcam feed and the canvas used to capture a still image at any particular point in time. Then declare emotions, room and backgroundColor with useState, and streamingRef with useRef. I go into detail about why streamingRef uses useRef instead of useState in the Troubleshooting section. Finally, define the video room name in one place so every part of the component references the same value.

Rather than just outputting text which tells us what the emotion is, how about changing the color of the background screen to a color that makes sense. Here we create an enum that holds hex values for the color of the screen given a particular emotion value which we know we will get back from AWS Rekognition.
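The enum itself is not reproduced here, so the sketch below is one possible version. Yellow for HAPPY and white (#ffffff) as the default follow the behavior described in this article; the exact hex values, and the colors for the other emotions, are placeholders. The keys match the Type values that Rekognition's DetectFaces returns (HAPPY, SAD, ANGRY, CONFUSED, DISGUSTED, SURPRISED, CALM, FEAR, plus UNKNOWN, which falls through to the default).

```typescript
// One possible palette. HAPPY maps to a yellow and DEFAULT is white; the other
// hex values are placeholders you can change freely.
enum EmotionColor {
  HAPPY = '#ffeb3b',
  SAD = '#90caf9',
  ANGRY = '#ef5350',
  CONFUSED = '#ce93d8',
  DISGUSTED = '#a5d6a7',
  SURPRISED = '#ffb74d',
  CALM = '#b2dfdb',
  FEAR = '#b0bec5',
  DEFAULT = '#ffffff',
}

// Maps a Rekognition emotion type to a background color. Unknown or missing
// types (including Rekognition's UNKNOWN) fall back to the default color.
function colorForEmotion(type: string | undefined): string {
  if (type && type !== 'DEFAULT' && Object.prototype.hasOwnProperty.call(EmotionColor, type)) {
    return EmotionColor[type as keyof typeof EmotionColor]
  }
  return EmotionColor.DEFAULT
}
```

The own-property check keeps inherited keys such as toString from being treated as emotions.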

Let's Use an Effect

The useEffect hook is used to clean up and disconnect from the Twilio video room when the component is unmounted or the room state changes.
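The effect's code is not reproduced here. A minimal sketch of the cleanup it describes might look like the following; it relies only on two members that twilio-video's Room has, state and disconnect(), described through a small local interface so the example runs on its own. makeRoomCleanup is a hypothetical helper name.

```typescript
// The two members of twilio-video's Room that the cleanup needs.
interface DisconnectableRoom {
  state: string // 'connected' | 'reconnecting' | 'disconnected'
  disconnect(): unknown
}

// Builds the function a useEffect should return. React runs it when the component
// unmounts and before re-running the effect because `room` changed.
function makeRoomCleanup(room: DisconnectableRoom | null): () => void {
  return () => {
    if (room && room.state !== 'disconnected') {
      room.disconnect()
    }
  }
}

// Usage inside the component:
// useEffect(() => makeRoomCleanup(room), [room])
```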

Start Twilio Video

Inside the startTwilioVideo function, a request is made to an API endpoint (api/twilio-token) to obtain a Twilio token, which is used to connect the client to a Twilio video room. Once connected, the local participant's video track is retrieved, and the video is displayed in a video element using a reference (videoRef).
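Because the startTwilioVideo code is not reproduced here, the sketch below covers only the token request, with fetch passed in so it can run outside a browser. It assumes the route accepts identity and room as query parameters and responds with JSON shaped like { token: string }; adjust both to match your route. The returned token is what you would then pass to connect from twilio-video, for example connect(token, { name: roomName, video: true, audio: false }).

```typescript
// Just enough of the fetch signature for this helper, so it can run without a browser.
type FetchLike = (
  input: string,
  init?: { method?: string; headers?: Record<string, string>; body?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>

// Assumes GET /api/twilio-token?identity=...&room=... returning { "token": "..." }.
async function fetchTwilioToken(fetchImpl: FetchLike, identity: string, roomName: string): Promise<string> {
  const params = new URLSearchParams({ identity, room: roomName })
  const res = await fetchImpl(`/api/twilio-token?${params.toString()}`)
  if (!res.ok) {
    throw new Error(`Token request failed with status ${res.status}`)
  }
  const data = (await res.json()) as { token?: unknown }
  if (typeof data.token !== 'string') {
    throw new Error('Token response did not include a token string')
  }
  return data.token
}

// Usage inside startTwilioVideo:
// const token = await fetchTwilioToken((url, init) => fetch(url, init), identity, roomName)
// const room = await connect(token, { name: roomName, video: true, audio: false })
```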

Stop Twilio Video

The stopTwilioVideo function disconnects from the video room and clears the video reference when the user wants to stop the video feed.
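A sketch of what stopTwilioVideo could do, assuming the local camera track was attached to the element behind videoRef. twilio-video's LocalVideoTrack provides detach() and stop(), and LocalParticipant.videoTracks is a Map of publications with a track property; the interfaces below mirror just those members so the example runs without the SDK. stopLocalVideo is a hypothetical name, and stopping the track explicitly is there to make sure the camera is released regardless of how the track was created.

```typescript
// Only the members of twilio-video's LocalVideoTrack and Room used here.
interface StoppableVideoTrack {
  detach(): unknown // unbinds the track from every element it was attached to
  stop(): unknown // releases the camera
}
interface RoomWithLocalVideo {
  localParticipant: { videoTracks: Map<string, { track: StoppableVideoTrack }> }
  disconnect(): unknown
}
interface VideoElementLike {
  srcObject: unknown
}

// Hypothetical helper: detach and stop local video, leave the room, clear the element.
function stopLocalVideo(room: RoomWithLocalVideo | null, video: VideoElementLike | null): void {
  if (room) {
    room.localParticipant.videoTracks.forEach(({ track }) => {
      track.detach()
      track.stop()
    })
    room.disconnect()
  }
  if (video) {
    video.srcObject = null
  }
}

// Usage inside stopTwilioVideo:
// stopLocalVideo(room, videoRef.current)
// setRoom(null)
```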

Integrating AWS Rekognition for Emotion Detection

AWS Rekognition is a powerful tool for image and video analysis, offering facial recognition and emotion detection features. Once the user’s webcam feed is available through Twilio Video, we can pass video frames to AWS Rekognition to analyze emotions. For each detected face, Rekognition's DetectFaces operation can return a confidence score for each of its emotion types: HAPPY, SAD, ANGRY, CONFUSED, DISGUSTED, SURPRISED, CALM, FEAR and UNKNOWN.

To integrate Rekognition, install the AWS SDK in your project and configure it with your AWS credentials. While a participant’s video feed is displayed, frames are captured from its WebRTC stream in real time: you draw the video element onto an HTML5 canvas (or an OffscreenCanvas) to extract a frame and send it to AWS Rekognition for analysis. Rekognition returns emotion data, which the app then uses to update the UI.
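Two details of Rekognition's DetectFaces operation are easy to miss here: emotions are only included in the response when the request asks for them, for example with Attributes set to ['ALL'], and images sent as raw bytes (rather than from S3) must be JPEG or PNG and at most 5 MB. canvas.toBlob(callback, 'image/jpeg') can produce a suitable JPEG. The sketch below builds the request input under those rules; the local interface mirrors the SDK's field names (Image.Bytes, Attributes), buildDetectFacesInput is a hypothetical helper, and the usage comment assumes AWS SDK for JavaScript v3.

```typescript
// Rekognition limit for images passed as raw bytes rather than from S3.
const MAX_IMAGE_BYTES = 5 * 1024 * 1024

// Local mirror of the DetectFaces request fields used here.
interface DetectFacesInput {
  Image: { Bytes: Uint8Array }
  Attributes: Array<'DEFAULT' | 'ALL'>
}

// Hypothetical helper: validates a captured JPEG/PNG frame and asks for all
// facial attributes, which is what includes Emotions in each FaceDetail.
function buildDetectFacesInput(bytes: Uint8Array): DetectFacesInput {
  if (bytes.length === 0) {
    throw new Error('Captured frame is empty')
  }
  if (bytes.length > MAX_IMAGE_BYTES) {
    throw new Error(`Captured frame is ${bytes.length} bytes; the limit is ${MAX_IMAGE_BYTES}`)
  }
  return { Image: { Bytes: bytes }, Attributes: ['ALL'] }
}

// Usage with @aws-sdk/client-rekognition, once you have the frame as a Blob:
// const bytes = new Uint8Array(await blob.arrayBuffer())
// const result = await client.send(new DetectFacesCommand(buildDetectFacesInput(bytes)))
```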

Analyze Frame

The analyzeFrame function captures the current video frame, draws it onto a canvas, and sends it to AWS Rekognition for emotion analysis. It creates a Blob from the canvas image, converts it into a byte array, and then sends it to AWS Rekognition using the SDK. Rekognition detects faces and their associated emotions, and the app keeps only the emotions with a confidence score above 50 (Rekognition reports confidence on a scale from 0 to 100). The detected emotions are then stored in the component’s state (setEmotions) and used to determine the background color behind the video feed. For example, if a participant's emotion is identified as happy, the background color may change to yellow. If no emotion is detected, a default background color is applied. This allows the app to respond dynamically, in real time, to participants' facial expressions during video calls.
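The filtering step described above can be sketched as a pure function over the DetectFaces response. The interfaces mirror the response fields used (FaceDetails[].Emotions[] with Type and a Confidence from 0 to 100). Pooling emotions across all detected faces and treating the single most confident emotion as the dominant one are assumptions about how the app chooses; the article does not spell that out.

```typescript
// Local mirrors of the DetectFaces response fields used here.
interface Emotion {
  Type?: string
  Confidence?: number // 0 to 100
}
interface FaceDetail {
  Emotions?: Emotion[]
}

// Keeps emotions above the threshold from every detected face, most confident first.
function confidentEmotions(faces: FaceDetail[] | undefined, threshold = 50): Required<Emotion>[] {
  const kept: Required<Emotion>[] = []
  for (const face of faces ?? []) {
    for (const emotion of face.Emotions ?? []) {
      if (emotion.Type && typeof emotion.Confidence === 'number' && emotion.Confidence > threshold) {
        kept.push({ Type: emotion.Type, Confidence: emotion.Confidence })
      }
    }
  }
  return kept.sort((a, b) => b.Confidence - a.Confidence)
}

// Assumption: the dominant emotion is the single most confident one (undefined if none passed).
function dominantEmotion(faces: FaceDetail[] | undefined): string | undefined {
  return confidentEmotions(faces)[0]?.Type
}

// Usage after the DetectFaces call:
// setEmotions(confidentEmotions(result.FaceDetails))
// const dominant = dominantEmotion(result.FaceDetails)
```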

How We Chop Up the Video Stream

The startRealTimeAnalysis function begins the process of continuously analyzing the video stream for emotions at regular intervals. It sets a streamingRef to true, indicating that real-time analysis is active. It then starts an interval that runs every second (1000 milliseconds) to invoke the analyzeFrame function, which captures and processes the video frame for emotion detection. If streamingRef.current is set to false (indicating that analysis should stop), the interval is cleared to stop the repeated execution of analyzeFrame.

The stopRealTimeAnalysis function stops the real-time analysis by setting the streamingRef.current to false, signaling that no further frame analysis should occur. It also clears any detected emotions by resetting the emotions state to an empty array and sets the background color back to its default value (#ffffff), effectively halting the analysis and resetting the UI.
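The start and stop functions described above could be sketched as follows, with the ref modeled as a plain { current } object so the example runs outside React. One addition that the article does not describe: an inFlight flag skips a tick while the previous analyzeFrame call is still waiting on Rekognition, so slow responses cannot pile up. startRealTimeLoop and stopRealTimeLoop are hypothetical names; in the component, stopRealTimeAnalysis would also reset the emotions state and the background color.

```typescript
// Stands in for the object returned by React's useRef.
interface MutableRef<T> {
  current: T
}

// Runs `analyze` every `intervalMs` while streamingRef.current is true.
// A tick is skipped if the previous analysis has not finished yet.
function startRealTimeLoop(
  streamingRef: MutableRef<boolean>,
  analyze: () => Promise<void>,
  intervalMs = 1000,
): ReturnType<typeof setInterval> {
  streamingRef.current = true
  let inFlight = false
  const id = setInterval(() => {
    if (!streamingRef.current) {
      clearInterval(id) // analysis was stopped: end the interval
      return
    }
    if (inFlight) return
    inFlight = true
    analyze().then(
      () => {
        inFlight = false
      },
      (err: unknown) => {
        console.error('Frame analysis failed:', err)
        inFlight = false
      },
    )
  }, intervalMs)
  return id
}

// Signals the loop to stop; the interval clears itself on its next tick.
function stopRealTimeLoop(streamingRef: MutableRef<boolean>): void {
  streamingRef.current = false
}
```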

Handling Emotion Detection and UI Updates

Once the emotion detection is complete, the UI should update in real-time to reflect the emotional state of the participant.
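The JSX that renders the results is not reproduced here. As one hypothetical way to show the detected emotions as text next to the video, a small formatter like this turns Rekognition's upper-case Type values and 0 to 100 confidences into readable labels; formatEmotionList and its fallback text are illustrative choices.

```typescript
interface DetectedEmotion {
  Type: string // e.g. 'HAPPY', as returned by Rekognition
  Confidence: number // 0 to 100
}

// 'HAPPY' with 92.5 becomes "Happy (92.5%)".
function formatEmotion({ Type, Confidence }: DetectedEmotion): string {
  const label = Type.charAt(0) + Type.slice(1).toLowerCase()
  return `${label} (${Confidence.toFixed(1)}%)`
}

// Joins all detected emotions into one line for the UI.
function formatEmotionList(emotions: DetectedEmotion[]): string {
  return emotions.length > 0 ? emotions.map(formatEmotion).join(', ') : 'No emotion detected'
}
```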

Testing

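To test this out, start the development server with npm run dev (for a production build, run npm run build followed by npm start instead). Navigate to http://localhost:3000 and start the video: you should see your own face. Then start the analysis, and if the detected emotions and the background color change with your expression, hooray! It works! Note that browsers only allow webcam access on secure origins; localhost counts as one, but any other host needs HTTPS.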

Troubleshooting

TypeScript can be tricky if you haven’t worked with it before. It is a statically typed language that helps ensure type safety by catching type-related errors at compile time, so it's crucial to thoroughly check and validate your types before deploying to avoid runtime issues and ensure the reliability of your application.

A quirk of React is that state set with useState is not updated immediately: the new value only appears on the next render, and a callback created earlier, such as the function passed to setInterval, keeps seeing the value from the render in which it was created. Here, useRef was used in place of useState because a ref stores a mutable value that persists across renders, can be read right after it is set, and does not cause a re-render when it changes. That makes it ideal for tracking values like video streams or flags (e.g., streamingRef) that need to be updated programmatically but don't need to trigger a component re-render.
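The stale-value problem can be shown without React at all. In the sketch below, createTick is a hypothetical stand-in for the callback an interval holds: streamingState plays the role of a useState value captured in the render where the interval started, while streamingRef is read each time the callback runs.

```typescript
interface Ref<T> {
  current: T
}

// Returns a callback like the one setInterval keeps: the state value is frozen
// in the closure, while the ref is read at call time.
function createTick(streamingState: boolean, streamingRef: Ref<boolean>) {
  return () => ({
    fromState: streamingState,
    fromRef: streamingRef.current,
  })
}

// Usage: the tick was created while streaming was on.
const streamingRef: Ref<boolean> = { current: true }
const tick = createTick(true, streamingRef)
streamingRef.current = false // what stopRealTimeAnalysis does
console.log(tick()) // { fromState: true, fromRef: false }
```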

Common issues with Next.js often arise from misconfigured routing, such as having the pages folder in the wrong place. Other frequent challenges include handling server-side rendering (SSR) with external APIs, managing state between client and server, and performance optimization, especially with large images or complex dependencies that don't optimize well during the build. Oh, hydration issues! Hydration issues in Next.js occur when the HTML rendered on the server does not match what the client renders, so React cannot cleanly "hydrate" (take over) the server-rendered content; the result is usually warnings or unexpected UI behavior. A quick first check is to make sure any component that uses hooks or browser-only APIs declares ‘use client’. This resolves some but not all issues, because Client Components are still pre-rendered on the server. Next, check whether anything you render differs between the server and the client, such as values that depend on the window, the webcam, or the current time; components like that can be loaded with next/dynamic and ssr: false, as page.tsx does here.

You may have noticed something about my code that may have other developers throwing their arms up in extreme disgust. Yes, I don’t use semicolons in my TypeScript code. In my opinion they are redundant, and I will challenge anyone to a semicolon-off to prove me wrong.

Conclusion

The combination of AWS Rekognition and Twilio Video opens up a world of possibilities beyond emotion detection. For instance, Rekognition’s facial analysis capabilities could be used for real-time identity verification in secure video conferencing applications, adding a layer of biometric authentication. Object and scene detection could be integrated to identify items in a participant's background for use cases like virtual education or live event tagging. Additionally, sentiment analysis could be expanded to group dynamics, such as identifying overall mood trends in team meetings. These technologies could also support accessibility features, like detecting and highlighting sign language gestures, or even monitoring for safety concerns by detecting unusual activities or objects in a video stream. The synergy of these tools paves the way for innovative applications in virtual collaboration, education, and security.

My name is Paul Heath and I am a Solutions Architect at Twilio. I specialize in AI and am always thinking about what comes next in that field. My email address is pheath@twilio.com
