Creating a Recipe Recommendation Chatbot with Ollama and Twilio


In this tutorial, you will learn how to build a WhatsApp recipe recommendation chatbot that uses Retrieval-Augmented Generation (RAG) to provide recipe suggestions and computer vision to process images containing cooking ingredients. You'll create a chatbot that can understand recipe requests, analyze ingredient photos, and engage in natural conversations about cooking.

To build this application, you will use the Twilio Programmable Messaging API for handling WhatsApp messages and Ollama, an open-source tool for running large language models (LLMs) locally. You'll implement a RAG system that combines the Nomic embed text model and Chroma for generating and storing recipe embeddings with the Mistral NeMo model for generating contextual responses and the LLaVA model for processing ingredient images.

RAG is a technique that enhances language model responses by retrieving relevant information from a knowledge base before generating answers. This approach improves accuracy and grounds the model's responses in factual data.

Embeddings are numerical representations of text that capture semantic meaning, allowing the system to understand and compare the similarity between recipes and user queries effectively.
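To make "comparing similarity" concrete, here is a minimal sketch of cosine similarity, the measure that the recipes collection is configured to use later in this tutorial. The three-dimensional vectors are made up for illustration; real embeddings from an embedding model have hundreds of dimensions.

```javascript
// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Toy vectors (illustrative values, not real model output).
const query = [1, 0, 1];
const tomatoSoup = [2, 0, 2]; // points in the same direction as the query
const chocolateCake = [0, 3, 0]; // orthogonal to the query

console.log(cosineSimilarity(query, tomatoSoup));
console.log(cosineSimilarity(query, chocolateCake));
```

A score close to 1 means the two texts point in nearly the same direction in embedding space, while a score near 0 means they are unrelated.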

The Twilio Programmable Messaging API is a service that enables developers to programmatically send and receive WhatsApp messages from their applications.

Ollama is a tool that simplifies running and managing different AI models locally.

Chroma serves as a vector database for efficiently storing and retrieving recipe embeddings.

Here's what your chatbot will look like in action by the end of this tutorial:

Tutorial Requirements

To follow this tutorial, you will need the following components:

- A machine with a GPU that has at least 12 GB of VRAM.

- Node.js (v18+) and npm installed.

- Ngrok installed and the auth token set.

- Docker installed.

- A free Twilio account.

- A free Ngrok account.

Creating The Project Directory

In this section, you will create a new project directory for your WhatsApp recipe recommendation chatbot, initialize a new Node project, and install the required packages to interact with Ollama, Twilio, and Chroma.

Open a terminal window and navigate to a suitable location for your project. Run the following commands to create the project directory and navigate into it:

Use the following command to create a directory named images, where the chatbot will store the images sent by the user:

Download this image, save it in the images directory, and name it image.jpg. The image shows an assortment of fruits and vegetables on a wooden table.

Download this recipes.txt file and store it in your project root directory. This file contains a list of recipes that might be suggested to the user.

Run the following command to create a new Node.js project:

Using your preferred text editor, open the package.json file and set the project type to module.

Now, use the following command to install the packages needed to build this application:

With the command above you installed the following packages:

- ollama: a client library that allows you to use JavaScript to interact with the Ollama server API. It will be responsible for handling communication with LLMs and managing response times for longer computations.

- cross-fetch: a package that adds Fetch API support to Node.js, enabling resource fetching, like API requests. It will be used to send HTTP requests to the Ollama server, preventing request timeouts that may occur if an LLM requires extra time to generate a response.

- chromadb: a JavaScript package for working with the Chroma database. It will be used to store and retrieve the embeddings created for the recipes stored in the recipes.txt file.

- twilio: a package that allows you to interact with the Twilio API. It will be used to create the Twilio WhatsApp chatbot.

- express: a minimal and flexible Node.js back-end web application framework that simplifies the creation of web applications and APIs. It will be used to serve the Twilio WhatsApp chatbot.

- body-parser: an Express body-parsing middleware. It will be used to parse the URL-encoded request bodies sent to the Express application.

- dotenv: a Node.js package that allows you to load environment variables from a .env file into process.env. It will be used to retrieve the Twilio API credentials and the Ollama base URL that you will soon store in a .env file.

Setting Up Ollama

In this section, you’ll set up Ollama to interact with LLMs. You’ll download the necessary models and start the Ollama server, which will handle natural language processing tasks for your chatbot.

To get started with Ollama, download the correct installer for your operating system from the official Ollama site.

With Ollama now installed, you can begin interacting with LLMs. Before using any commands, start Ollama by opening the app or running this command in your terminal:

This command will start the Ollama server, which runs on port 11434, making it ready to accept commands. You can confirm it’s running by visiting http://localhost:11434 in a browser.
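If you prefer to check the server from code rather than a browser, the following is a minimal sketch that requests the base URL and reports whether it answered successfully. The function name isOllamaRunning and the injectable fetchImpl parameter are hypothetical helpers introduced here, and the sketch assumes a Node.js version with a global fetch (v18+).

```javascript
// Returns true when the server answers the base URL with a successful status.
// fetchImpl is injectable so the check can also be exercised without a live server.
async function isOllamaRunning(baseUrl = "http://localhost:11434", fetchImpl = fetch) {
  try {
    const response = await fetchImpl(baseUrl);
    return response.ok;
  } catch (error) {
    // Connection refused, DNS failure, and similar network errors end up here.
    return false;
  }
}
```

Calling `isOllamaRunning().then(console.log)` should print true while the server is running and false once it is stopped.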

To download a model without running it, use the ollama pull command. For instance, to pull the Nomic embed text model, run:

This command will download the model, making it available for later use. The Nomic embed text model is a model designed to create text embeddings. You will use this model to generate embeddings for the recipes stored in the recipes.txt file and for the user queries.

You can check all downloaded models by running:

The output will show a list of models, including their sizes and the date they were last modified:

Now use the same command to pull the LLaVA model:

LLaVA (Large Language and Vision Assistant) is a multimodal AI model that integrates natural language processing with computer vision capabilities. It processes and analyzes images to identify objects, their relationships, scene composition, and visual attributes, then generates accurate descriptions using natural language. You will use this model to describe the images sent by the user and enumerate possible ingredients in them.

To see all available models on your device so far, run:

You should see something similar to:

To pull a model and execute it in one step, use the ollama run command. For example, to run the Mistral NeMo large language model, type:

If the model isn’t already on your device, this command will download it and start the model so you can begin querying it right away. The Mistral NeMo model is a versatile conversational LLM that is ideal for dialogue-based interactions, such as chatbots and virtual assistants. You will use this model to answer the user queries.

When executed, your terminal will display:

Try sending a simple prompt to test the model’s capabilities:

Here is the response:

The prompt asks the model to introduce itself. The response may vary but typically includes a brief, friendly introduction about the model’s purpose and functions.

Once you are done testing the model, send the command /bye to end the chat.

Setting Up Chroma With Docker

In this section, you will set up Chroma using Docker to store and retrieve embeddings for your recipes. This step ensures that your chatbot can efficiently search and recommend recipes based on user queries.

To set up Chroma with Docker, run the following command in your terminal from the root directory of your project:

Here is a breakdown of what the command above does:

- docker run: Starts a new Docker container based on the specified image, in this case, chromadb/chroma.

- -d: Runs the container in the background (detached mode), allowing the terminal to be free for other tasks.

- --rm: Automatically removes the container once it stops, keeping your system clean and free of unused containers.

- --name chromadb: Assigns the name "chromadb" to the container, making it easier to refer to in future Docker commands.

- -e IS_PERSISTENT=TRUE: Sets an environment variable that ensures data stored in Chroma is persistent, meaning it will not be lost after the container stops or restarts.

- -e ANONYMIZED_TELEMETRY=TRUE: Enables anonymized telemetry, allowing Chroma to collect usage statistics for improving the product without tracking any personal information.

- -v ./chroma:/chroma/chroma: Mounts a volume from your local ./chroma directory to the /chroma/chroma directory inside the container. This allows Chroma to store data in a location on your local system, which can persist across container restarts.

- -p 8000:8000: Maps port 8000 on your local machine to port 8000 in the container, making Chroma accessible via http://localhost:8000.
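To see how the flags in this breakdown fit together, here is a small sketch that assembles them into one argument list and prints the resulting command line. The flag order is an assumption (Docker only requires that the image name come after the options), so treat the printed command as a reconstruction rather than the exact original.

```javascript
// Flags copied from the breakdown above; the ordering is an assumption.
const dockerArgs = [
  "run",
  "-d",
  "--rm",
  "--name", "chromadb",
  "-e", "IS_PERSISTENT=TRUE",
  "-e", "ANONYMIZED_TELEMETRY=TRUE",
  "-v", "./chroma:/chroma/chroma",
  "-p", "8000:8000",
  "chromadb/chroma", // the image name goes after all options
];

// Print the equivalent one-line command.
const command = ["docker", ...dockerArgs].join(" ");
console.log(command);
```

Keeping the arguments in an array like this also makes it straightforward to launch the container from a Node.js script later, should you want to automate the setup.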

After running this command, Chroma will be set up and running, with its data stored persistently in a subdirectory named chroma located in your project root directory.

To ensure that the Chroma container is running, run the following command:

This command lists all the active containers currently running on your system. You should see output similar to the following:

The docker ps output shows the container's unique CONTAINER ID, the image used (chromadb/chroma), the command it's executing, and how long ago it was created. The STATUS confirms it's running, while PORTS shows that port 8000 is mapped to your local machine.

If you see a similar output, it confirms that the Chroma container is successfully running and accessible on port 8000.

Collecting And Storing Your Credentials

In this section, you will collect and store your Twilio credentials that will allow you to interact with the Twilio API.

Open a new browser tab and log in to your Twilio Console. Once you are on your console, copy the Account SID and Auth Token, create a new file named .env in your project’s root directory, and store these credentials in it:

Now, open the .env file and save the Ollama base URL in it:
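Once the values are in the .env file, the application code can read and validate them at startup. The sketch below shows one way to do that; the variable names TWILIO_ACCOUNT_SID, TWILIO_AUTH_TOKEN, and OLLAMA_BASE_URL and the loadConfig helper are assumptions for illustration, so match them to the keys you actually stored.

```javascript
// Hypothetical variable names; match them to the keys in your own .env file.
const REQUIRED_KEYS = ["TWILIO_ACCOUNT_SID", "TWILIO_AUTH_TOKEN", "OLLAMA_BASE_URL"];

// Validate an environment object (for example process.env) and return a config.
function loadConfig(env) {
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    accountSid: env.TWILIO_ACCOUNT_SID,
    authToken: env.TWILIO_AUTH_TOKEN,
    ollamaBaseUrl: env.OLLAMA_BASE_URL,
  };
}

// Example with placeholder values instead of process.env.
const config = loadConfig({
  TWILIO_ACCOUNT_SID: "ACxxxxxxxx",
  TWILIO_AUTH_TOKEN: "your_auth_token",
  OLLAMA_BASE_URL: "http://localhost:11434",
});
console.log(config.ollamaBaseUrl);
```

Failing fast on a missing key tends to produce a clearer error than a rejected Twilio request later on.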

Setting Up The Database Helper

In this section, you will create database helper functions to manage recipes and query embeddings. These functions will handle the creation, storage, and retrieval of embeddings using Ollama and Chroma.

First, take a look at the content of the recipes.txt file. Open it in your preferred text editor, and you should see something like this:

This file contains several recipes, each formatted with a title, ingredients, and cooking instructions. Each recipe is separated by the marker --+--.

Now, you will create the database helper functions to manage recipe embeddings. First, create a file named dbHelper.js in your project root directory.

Next, add the following code to set up the necessary imports and initialize the Ollama and Chroma clients:

This code initializes the Ollama and Chroma clients. The code uses Ollama for generating embeddings and Chroma as the application's vector database. The collection where the embeddings will be stored is named recipes, and it is configured to use cosine similarity for comparing recipe embeddings.

Next, add the functions for processing the recipe data:

These functions handle the text chunking and embedding generation. The splitIntoChunks function reads the recipes file and places each recipe into its own text chunk, while generateEmbeddings converts these chunks into numerical vectors using Ollama and the Nomic embed text model.
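The chunking step can be sketched as follows. This version takes the file contents as a string instead of reading recipes.txt itself, which is a simplification made here so the example runs on its own; the sample text is made up and only mimics the title, ingredients, and instructions layout.

```javascript
const RECIPE_SEPARATOR = "--+--";

// Split the raw file contents into one trimmed chunk per recipe.
function splitIntoChunks(text) {
  return text
    .split(RECIPE_SEPARATOR)
    .map((chunk) => chunk.trim())
    .filter((chunk) => chunk.length > 0);
}

// Made-up sample standing in for the real recipes.txt contents.
const sample = [
  "Tomato Soup\nIngredients: tomatoes, onion\nInstructions: simmer and blend.",
  "--+--",
  "Cheese Omelette\nIngredients: eggs, cheese\nInstructions: whisk and fry.",
  "--+--",
].join("\n");

const chunks = splitIntoChunks(sample);
console.log(chunks.length);
console.log(chunks[0]);
```

Filtering out empty chunks guards against a trailing separator at the end of the file producing a blank recipe.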

Now, add the function to create and store recipe embeddings:

This function manages the entire embedding creation process. It generates unique identifiers for each recipe chunk and stores them as documents in Chroma along with their embeddings and metadata.
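As a rough illustration of what gets stored, the sketch below builds the object that could be passed to a Chroma collection's add method, which accepts parallel ids, documents, embeddings, and metadatas arrays. The helper name buildAddPayload, the id scheme, and the metadata field are assumptions, not the tutorial's exact code.

```javascript
// Build the arguments for a Chroma collection.add() call.
// The id scheme and the metadata field are assumptions for illustration.
function buildAddPayload(chunks, embeddings) {
  if (chunks.length !== embeddings.length) {
    throw new Error("Each chunk needs exactly one embedding");
  }
  return {
    ids: chunks.map((_, index) => `recipe-${index}`),
    documents: chunks,
    embeddings,
    metadatas: chunks.map(() => ({ source: "recipes.txt" })),
  };
}

const payload = buildAddPayload(
  ["Tomato Soup ...", "Cheese Omelette ..."],
  [[0.1, 0.2], [0.3, 0.4]] // toy vectors, not real model output
);
console.log(payload.ids);
```

The arrays must stay aligned by index, because Chroma pairs the first id with the first document and the first embedding, and so on.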

Finally, add the function to retrieve recipes based on user queries:

The retrieveRecipes function performs a semantic search using embedding similarity. It converts the query into an embedding, finds the most similar recipes in the database, and limits the number of results to the one specified in the numberOfRecipes property found in the args object.
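To show the idea behind this search without a running database, here is a brute-force, in-memory stand-in: it scores every stored item against the query embedding and keeps the best matches. This is not how Chroma works internally (it uses an approximate index), and the store contents, vectors, and function names are invented for the example.

```javascript
// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Rank stored items by similarity to the query embedding and keep the top N.
function retrieveTopRecipes(queryEmbedding, store, numberOfRecipes) {
  return store
    .map((item) => ({ ...item, score: cosineSimilarity(queryEmbedding, item.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, numberOfRecipes)
    .map((item) => item.document);
}

// Toy store: the first vector component loosely stands for "cheesiness".
const store = [
  { document: "Cheese Omelette", embedding: [0.9, 0.1] },
  { document: "Fruit Salad", embedding: [0.1, 0.9] },
  { document: "Mac and Cheese", embedding: [0.8, 0.3] },
];

console.log(retrieveTopRecipes([1, 0], store, 2));
```

With these toy values, the two cheese dishes rank above the fruit salad, which mirrors what the numberOfRecipes limit does to the real query results.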

To ensure that the database helper functions are working correctly, you can add a test function. In dbHelper.js, add the following code:

This test function first calls the createEmbeddings() function to generate and store embeddings for the recipes. It then performs a test query to retrieve recipes that include cheese and limits the number of results to two by calling the retrieveRecipes() function and passing the args object as an argument. The results are logged to the console, allowing you to verify that the embeddings and retrieval functions are working correctly.

Run the following command to test the functions:

You should get an output similar to the following:

The output above shows that the embeddings were created for all the recipes stored in the recipes.txt file, and also shows the result for the test query to retrieve recipes that have cheese as an ingredient.

After you are done testing the functions in the database helper, comment out the test() function call:

Adding Image Processing

In this section, you will add image processing capabilities to your chatbot. This step involves managing images sent by the user, using Ollama and the LLaVA model to describe the images, and identifying ingredients.

In your project root directory, create a new file named handleImages.js to manage image-related functionality. Start with the basic setup:

This code sets up the image processing environment, creating an Ollama client and defining the path where the application will temporarily store uploaded images.

Add the function to handle image storage:

The storeImage function downloads images from Twilio's media URLs and saves them in the images directory under the name image.jpg for processing.
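Depending on your account settings, Twilio may require HTTP Basic authentication (Account SID as the username, Auth Token as the password) when downloading media. Whether the tutorial's storeImage does this is an assumption; the sketch below only shows how such request options could be built, with hypothetical helper names and placeholder credentials.

```javascript
// Build an HTTP Basic Authorization header from the Twilio credentials.
function basicAuthHeader(accountSid, authToken) {
  const encoded = Buffer.from(`${accountSid}:${authToken}`).toString("base64");
  return `Basic ${encoded}`;
}

// Request options that could be passed to fetch(mediaUrl, options).
function mediaRequestOptions(accountSid, authToken) {
  return { headers: { Authorization: basicAuthHeader(accountSid, authToken) } };
}

// Placeholder credentials; use the values from your .env file instead.
const options = mediaRequestOptions("ACxxxxxxxx", "your_auth_token");
console.log(options.headers.Authorization.startsWith("Basic "));
```

If the download returns a 401 status, missing credentials on the media request are a likely cause worth checking first.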

Add the image description function:

This function uses Ollama and the LLaVA model to analyze the image stored in the images directory, provide detailed descriptions, and enumerate visible items.

If there aren’t any errors, the function returns the response generated by the LLaVA model; otherwise, it returns a message stating that the application failed to use the vision model to describe the image.
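The success and failure paths described above can be sketched like this. The chatFn parameter stands in for the Ollama client's chat method so the example runs offline; the prompt text, the fallback wording, and the "llava" model tag are assumptions, while the images array of base64 strings follows the Ollama chat message format.

```javascript
const FALLBACK_MESSAGE = "Failed to use the vision model to describe the image.";

// chatFn stands in for the Ollama client's chat method so the flow can run offline.
async function describeImage(chatFn, imageBase64) {
  try {
    const response = await chatFn({
      model: "llava", // assumed model tag
      messages: [
        {
          role: "user",
          content: "Describe this image and list the cooking ingredients you can see.", // example prompt
          images: [imageBase64],
        },
      ],
    });
    return response.message.content;
  } catch (error) {
    // Any failure while querying the model falls back to a fixed message.
    return FALLBACK_MESSAGE;
  }
}

// Stub that mimics the shape of a chat response.
const fakeChat = async () => ({ message: { content: "Tomatoes, carrots, and onions." } });
describeImage(fakeChat, "aGVsbG8=").then((text) => console.log(text));
```

Returning a message instead of throwing means the caller can always send something back to the user, even when the model is unavailable.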

To ensure that the image processing functions are working correctly, you can add a test function.

Add the following code at the bottom of the handleImages.js:

This test function calls the describeImage() function to generate the sample image description and logs the result to the console. This function allows you to verify that the image processing functions are working correctly.

Run the following command to test the functions:

You should get an output similar to the following:

The output above shows that the LLaVA model was able to make a decent description of the image contents.

After you are done testing the functions in the handleImages.js file, comment out the test() function call:

Implementing The Chat Functionality

In this section, you will create a new file called chat.js to handle all of your chatbot's conversational logic. This file will manage the interaction between the user and the chatbot, using Ollama for interacting with the Mistral NeMo LLM.

Create a new file named chat.js in your project root directory. In the chat.js file, start by importing the required dependencies and setting up the Ollama client:

This initial setup establishes the connection to Ollama and imports necessary modules.

Next, define the message history array and the retrieval tool for recipe searches:

Here you create a messages array to maintain the conversation history and define a retrieval tool that follows OpenAI's function calling format. This tool specification tells the LLM how to structure recipe search requests. When the LLM uses the tool, it extracts the ingredient names and the desired number of recipes from the user query and passes them as the RAGQuery and numberOfRecipes function parameters, respectively.
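For reference, a tool specification in this format might look like the sketch below. The RAGQuery and numberOfRecipes parameter names come from the description above; the tool name and the description strings are illustrative assumptions.

```javascript
// Tool name and descriptions are illustrative; the parameter names come from the text.
const retrievalTool = {
  type: "function",
  function: {
    name: "retrieveRecipes",
    description: "Search the recipe collection for recipes matching the given ingredients.",
    parameters: {
      type: "object",
      properties: {
        RAGQuery: {
          type: "string",
          description: "Ingredient names extracted from the user query.",
        },
        numberOfRecipes: {
          type: "number",
          description: "How many recipes the user asked for.",
        },
      },
      required: ["RAGQuery", "numberOfRecipes"],
    },
  },
};

// Conversation history, filled as the chat progresses.
const messages = [];

console.log(Object.keys(retrievalTool.function.parameters.properties));
```

The descriptions matter more than they might seem: the LLM relies on them to decide when the tool applies and how to fill in each parameter.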

Add the function to manage chat history:

This simple yet crucial function maintains the conversation context by adding each message exchange to the history array. This context helps the LLM provide more coherent and relevant responses throughout the conversation.
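A history helper of this kind can be very small. In the sketch below, the function name addToHistory is hypothetical; the role and content fields follow the common chat message shape.

```javascript
const messages = [];

// Append one turn to the history; role is "user", "assistant", or "system".
function addToHistory(role, content) {
  messages.push({ role, content });
  return messages.length;
}

addToHistory("user", "Suggest a recipe with tomatoes.");
addToHistory("assistant", "How about a tomato soup?");
addToHistory("user", "Tell me more about the first one.");

console.log(messages.length);
```

Because the whole array is sent with each request, the follow-up question about "the first one" can be resolved against the earlier assistant reply.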

Now, implement the main chat function with initial query processing:

This section of the chat function initializes the conversation. It sets the model to Mistral NeMo and creates and executes an initial prompt that helps the LLM understand whether to use the tool defined earlier to search for recipes or provide a direct answer.

If the LLM decides not to use the tool, the code returns the direct answer to the query. However, if an error occurs while querying the LLM, the code returns a message stating that the chatbot failed to use an LLM to answer the query.
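The branching between a tool call and a direct answer can be sketched as a small pure function over the chat response. It assumes the Ollama response shape, where message.tool_calls holds any requested calls and each call carries function.name and function.arguments; the interpretResponse name and the sample responses are made up.

```javascript
// Decide whether the model asked for the tool or answered directly.
function interpretResponse(response) {
  const toolCalls = response.message.tool_calls;
  if (Array.isArray(toolCalls) && toolCalls.length > 0) {
    const call = toolCalls[0];
    return { useTool: true, name: call.function.name, args: call.function.arguments };
  }
  return { useTool: false, answer: response.message.content };
}

// Hand-written responses that mimic the two cases.
const toolResponse = {
  message: {
    content: "",
    tool_calls: [
      { function: { name: "retrieveRecipes", arguments: { RAGQuery: "tomatoes", numberOfRecipes: 2 } } },
    ],
  },
};
const directResponse = { message: { content: "Hello! Ask me about recipes." } };

console.log(interpretResponse(toolResponse));
console.log(interpretResponse(directResponse));
```

Keeping this decision separate from the network call makes it easy to test both paths without a running model.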

Add the recipe retrieval processing:

This section handles the actual recipe retrieval. When the LLM decides to search for recipes, it calls the retrieveRecipes function (located in the dbHelper.js), passing the RAGQuery and numberOfRecipes as arguments. It then processes the results, joining multiple recipes into a single string.
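Joining the results can be sketched as follows, assuming retrieveRecipes returns a Chroma query result, in which documents is a nested array with one inner list per query. The joinRecipes helper and the blank-line separator are choices made for this example.

```javascript
// Flatten the documents for the first (and only) query into one string.
function joinRecipes(queryResult) {
  const documents = (queryResult.documents && queryResult.documents[0]) || [];
  return documents.filter((doc) => doc !== null).join("\n\n");
}

// Hand-written result in the shape of a Chroma query response.
const queryResult = {
  ids: [["recipe-0", "recipe-3"]],
  documents: [["Tomato Soup\nIngredients: tomatoes", "Bruschetta\nIngredients: tomatoes, bread"]],
};

console.log(joinRecipes(queryResult));
```

Returning an empty string when nothing matches lets the caller detect the "no recipes found" case with a simple check.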

Finally, add the response generation:

This final section generates the response to the user. It creates a RAG prompt that includes the retrieved recipes and asks the LLM to formulate a helpful response, limiting the number of recipes to prevent overwhelming the user.
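A RAG prompt of this kind is mostly string assembly. The sketch below shows one possible layout; the wording, the buildRagPrompt name, and the default limit of three recipes are assumptions rather than the tutorial's exact prompt.

```javascript
// The wording of the prompt is illustrative, not the tutorial's exact text.
function buildRagPrompt(userQuery, recipesText, maxRecipes = 3) {
  return [
    "You are a helpful cooking assistant.",
    `Using only the recipes below, answer the user's request. Suggest at most ${maxRecipes} recipes.`,
    "",
    "Recipes:",
    recipesText,
    "",
    `User request: ${userQuery}`,
  ].join("\n");
}

const prompt = buildRagPrompt(
  "Something with tomatoes",
  "Tomato Soup\nIngredients: tomatoes, onion",
  2
);
console.log(prompt);
```

Telling the model to use only the supplied recipes is what keeps the answer grounded in the retrieved data instead of the model's general knowledge.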

To ensure that the chat functionality is working correctly, you can add a test function.

Add the following code at the bottom of the chat.js file:

This test function performs two queries: one to suggest recipes with tomatoes and another to provide more details about the first recipe retrieved in the first query. The results are logged to the console, allowing you to verify that the chat functionality is working correctly.

Run the following command to test the chat functionality:

The output for the first query should look similar to the following:

The LLM analyzes the first query and decides to use the tool to retrieve recipes that have tomatoes as an ingredient. Then the code adds the recipes found to the RAGPrompt and sends the prompt to the LLM to generate a final response.

Here is the output for the second query in the test() function:

The LLM analyzes the second query, decides not to use the tool, and instead uses the context provided in the message history to answer the query directly.

After you are done testing the chat functionality, comment out the test() function call.

Setting Up The Server

In this section, you will create a new file called server.js to handle web server setup and Twilio integration. This file will manage incoming messages, process them through the chatbot, and send responses back to the user.

Create the server.js file and start with the necessary imports:

This brings in all required dependencies, including Express for your web server and Twilio for WhatsApp messaging.

Set up the Express server and Twilio client:

This code initializes your Express application and configures middleware for parsing incoming requests. It also sets up the Twilio client using the credentials stored in the environment variables.

Add the message splitting utility:

This function handles Twilio's message length limit by splitting long messages into smaller parts. It preserves readability by splitting at newline boundaries so that no part exceeds 1600 characters.
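One way to implement this kind of splitting is sketched below. It accumulates lines until adding the next one would pass the limit, and hard-splits any single line that is longer than the limit on its own; that last behavior, along with the splitMessage name, is an assumption added for robustness.

```javascript
const MAX_LENGTH = 1600;

// Split text into parts of at most `limit` characters, breaking at newlines.
function splitMessage(text, limit = MAX_LENGTH) {
  const parts = [];
  let current = "";
  for (const line of text.split("\n")) {
    let remaining = line;
    // Hard-split any single line that is longer than the limit on its own.
    while (remaining.length > limit) {
      if (current) {
        parts.push(current);
        current = "";
      }
      parts.push(remaining.slice(0, limit));
      remaining = remaining.slice(limit);
    }
    const candidate = current ? `${current}\n${remaining}` : remaining;
    if (candidate.length > limit) {
      // The line does not fit: close the current part and start a new one.
      parts.push(current);
      current = remaining;
    } else {
      current = candidate;
    }
  }
  if (current) parts.push(current);
  return parts;
}

// 100 lines of 50 characters each, far more than one message can hold.
const longText = Array.from({ length: 100 }, () => "x".repeat(50)).join("\n");
const parts = splitMessage(longText);
console.log(parts.map((part) => part.length));
```

Splitting at newlines keeps each recipe step or ingredient line intact rather than cutting words in half.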

Implement the message sending function:

This function sends messages through Twilio's WhatsApp API, handling single and multi-part responses when messages exceed Twilio's length limit.
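Sending the parts one after another can be sketched as below. The client argument is expected to expose messages.create({ from, to, body }) like the Twilio Node.js client; here a stub replaces it so the example runs offline, and the sendInParts name and the phone numbers are placeholders.

```javascript
// client is expected to look like a Twilio REST client:
// client.messages.create({ from, to, body }) resolves to a message with a sid.
async function sendInParts(client, from, to, parts) {
  const sids = [];
  for (const body of parts) {
    // Await each send so the parts are submitted in order.
    const message = await client.messages.create({ from, to, body });
    sids.push(message.sid);
  }
  return sids;
}

// Offline stand-in for the Twilio client that records what would be sent.
const sent = [];
const fakeClient = {
  messages: {
    create: async (payload) => {
      sent.push(payload);
      return { sid: `SM${sent.length}` };
    },
  },
};

sendInParts(fakeClient, "whatsapp:+10000000000", "whatsapp:+10000000001", ["Part 1", "Part 2"])
  .then((sids) => console.log(sids.length));
```

Awaiting inside the loop is slower than sending everything at once, but it avoids submitting part two before part one.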

Add the image processing route:

This code defines the /incomingMessage endpoint which handles incoming text and media messages.

When an image is attached to a message, the application saves the image and generates a detailed description. Both the generated description and the prompt used to describe the image are stored in the chat history. The application then sends the image description back to the sender.
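Deciding between the image path and the text path comes down to inspecting the webhook body. The sketch below relies on the NumMedia, MediaUrl0, MediaContentType0, Body, and From fields that Twilio includes in incoming message webhooks; the parseIncomingMessage helper and the sample values are made up.

```javascript
// Extract the fields the chatbot needs from a Twilio webhook request body.
function parseIncomingMessage(body) {
  const numMedia = parseInt(body.NumMedia || "0", 10);
  const hasImage = numMedia > 0 && (body.MediaContentType0 || "").startsWith("image/");
  return {
    from: body.From,
    text: body.Body || "",
    hasImage,
    mediaUrl: hasImage ? body.MediaUrl0 : null,
  };
}

// Sample bodies with placeholder values.
const imageMessage = parseIncomingMessage({
  From: "whatsapp:+10000000001",
  Body: "",
  NumMedia: "1",
  MediaUrl0: "https://example.com/media/abc",
  MediaContentType0: "image/jpeg",
});
const textMessage = parseIncomingMessage({
  From: "whatsapp:+10000000001",
  Body: "Any recipes with cheese?",
  NumMedia: "0",
});

console.log(imageMessage.hasImage, textMessage.hasImage);
```

Checking the content type as well as the media count keeps audio or document attachments from being sent to the vision model.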

Finally, add the text message handling:

The code you just added completes the endpoint by handling text messages. When the application receives a text message, it processes it through the chat() function (located in the chat.js file) and sends the generated response back to the sender.

Finally, the code starts the server and prints a message saying that the server is running on port 3000.

Go back to your terminal and run the following command to start serving the chatbot application:

Open another tab in the terminal and run the following command to expose the application:

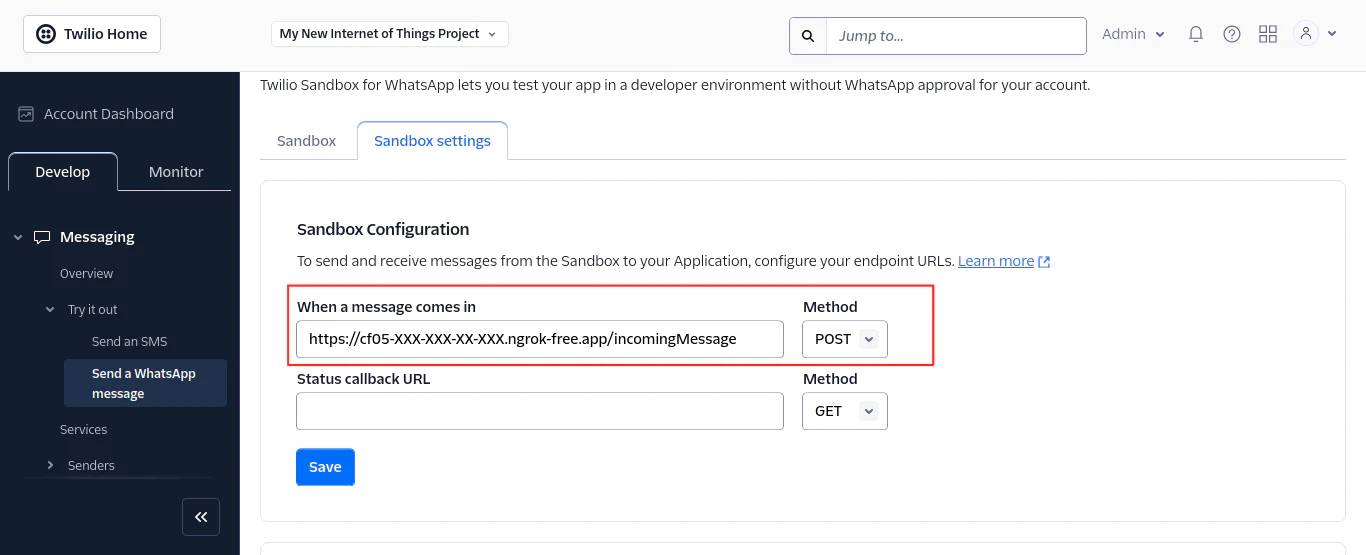

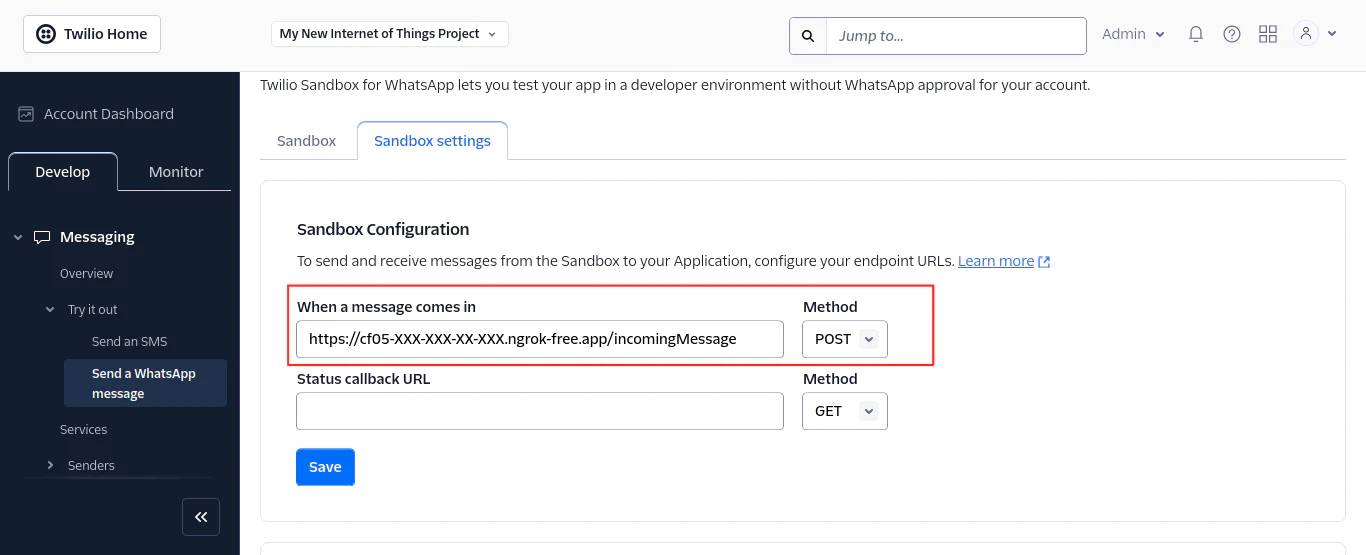

Copy the https Forwarding URL provided by Ngrok. Return to your Twilio Console homepage, select the Develop tab, navigate to Messaging, select Try it out, and choose Send a WhatsApp message to access the WhatsApp Sandbox section.

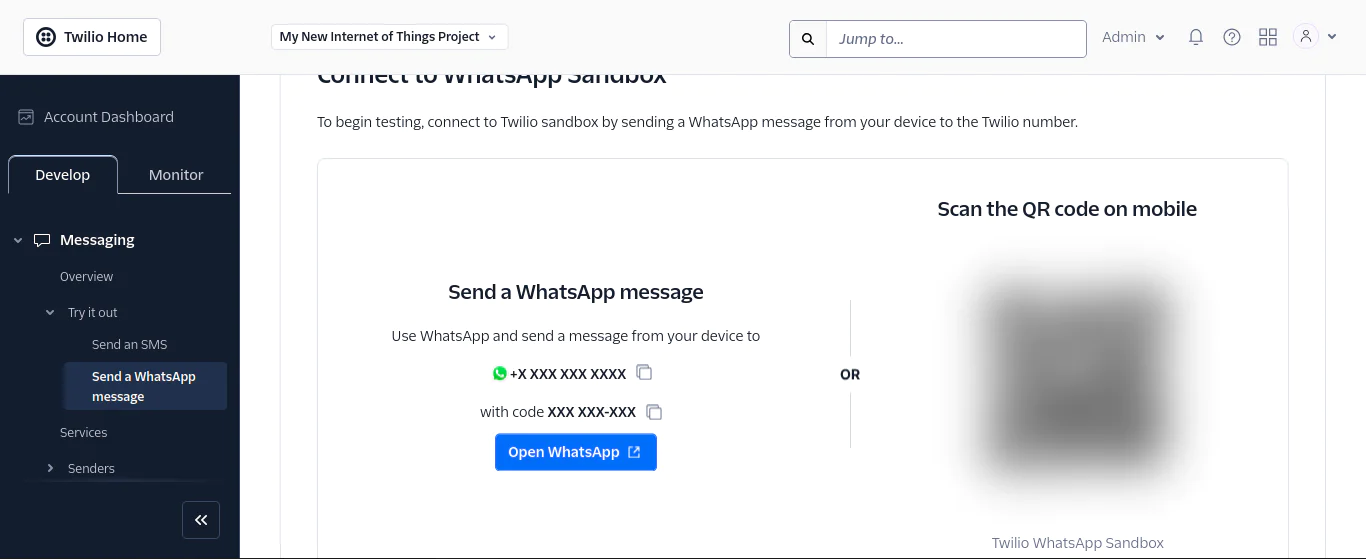

On reaching the Sandbox page, scroll to find the connection instructions and follow them to connect to the Twilio sandbox. You'll need to send a specified message to the provided Twilio Sandbox WhatsApp Number.

Once connected, return to the top of the page and click the Sandbox settings button to view the WhatsApp Sandbox configuration. In the settings, input your ngrok https URL into the "When a message comes in" field, adding /incomingMessage at the end. Choose POST as your method, save your changes, and your WhatsApp bot will be ready to process incoming messages. Verify that your URL matches the format shown below.

Open a WhatsApp client, send a message containing an image of cooking ingredients and the chatbot will respond with a detailed image description containing the ingredients that it was able to identify.

Next, send a message asking for a recipe containing a specific ingredient found in the image, and the chatbot will respond with recipe recommendations.

Conclusion

In this tutorial, you learned how to create a WhatsApp recipe recommendation chatbot that combines computer vision with retrieval-augmented generation (RAG). You used the Twilio Programmable Messaging API to handle WhatsApp messages, integrated Ollama to run AI models locally, and implemented a RAG system using Chroma for efficient recipe storage and retrieval.

You've learned how to combine multiple AI models for different tasks: the Nomic embed text model for generating recipe embeddings, the Mistral NeMo model for natural conversation, and the LLaVA model for image analysis. Through this process, you've built a chatbot that not only understands recipe requests but can also analyze images of ingredients and maintain contextual conversations about cooking.

The code for the entire application is available in the following repository: https://github.com/CSFM93/twilio-recipes-chatbot
