Creating a Voice AI Assistant using Twilio, Meta LLaMA 3 with Together.ai and Flask


Introduction

Twilio Programmable Voice lets you build scalable voice experiences with the Voice API and SDKs, which connect millions of people around the world. It offers extensive customization options and makes it convenient to automate telephone workflows.

In this article, we will explore how to use Twilio, Meta LLaMA 3 with together.ai, and Flask to create an AI assistant that answers customer queries over a voice call, tailored to your use case.

Meta Llama 3 is the latest generation of open-source large language models (LLMs) from Meta (formerly Facebook). The model is available in 8B and 70B parameter sizes, each with a base and an instruction-tuned variant. Together.ai provides the computational resources and infrastructure needed to run and deploy Meta LLaMA 3 efficiently, which makes it accessible to users without extensive hardware or technical expertise.

Prerequisites

This tutorial was written for Windows, but Mac and Linux users can follow it as well; the main difference is how the virtual environment is created and activated. Refer to this link for instructions. To proceed with the tutorial, make sure you meet the following prerequisites:

- Python 3.6 or a more recent version. If your system doesn't come with a Python interpreter, you can download an installer from python.org.

- A Twilio account. If you don’t have one, you can register for a trial account here.

- A phone that can make calls, to test the project. Alternatively, you can use the Twilio Dev Phone for this tutorial.

- ngrok, installed and authenticated. You can download ngrok from here.

Building the app

Setting up a together.ai account

First, create a new account on together.ai. The platform hosts pre-configured instances of popular models such as LLaMA, Gemma and Mixtral, which you can use with nothing more than your personal API key. You can find the full list of available models here: https://docs.together.ai/docs/inference-models

You can get started with a free account, which gives you $1 of credit for your development work. See the Pricing page for more details: https://www.together.ai/pricing

Once the account is created, you'll be redirected to the homepage, where you'll find your personal API key. Copy it for later use, and never share your API keys with anyone.
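Rather than pasting the key directly into app.py, you may prefer to keep it in an environment variable so it never ends up in version control. The sketch below assumes a variable named TOGETHER_API_KEY, which is a naming choice for this example. It fails early with a clear message if that variable is missing.

```python
import os


def get_together_api_key(env_var: str = "TOGETHER_API_KEY") -> str:
    """Return the Together.ai API key stored in an environment variable.

    The variable name TOGETHER_API_KEY is an assumption made for this sketch.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with an actionable message instead of a confusing auth error later.
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Together.ai API key."
        )
    return key
```

In the Windows Command Prompt you can set the variable for the current session with set TOGETHER_API_KEY=your-key. On Mac or Linux, use export TOGETHER_API_KEY=your-key.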

Setting up your development environment

Before building the AI assistant, you need to set up your development environment. First, create a new folder named twilio-meta-ai and move into it by running mkdir twilio-meta-ai followed by cd twilio-meta-ai.

Create a virtual environment by running python -m venv venv.

This command creates a venv folder inside your project. That folder holds a private Python interpreter and every package you install into the environment.

Activate the virtual environment. On Windows, run venv\Scripts\activate; on Mac and Linux, run source venv/bin/activate.

When activated, your shell prompt should change to show (venv), indicating you’re now using the virtual environment.

Create a src folder for your Python code by running mkdir src.

The src folder is where you'll keep your project's Python files.

If you're using Git, you'll typically want to keep the venv folder out of version control. From the root of your project, twilio-meta-ai, create a .gitignore file containing the line venv/.
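If you prefer one cross-platform script to typing the folder, venv, src and .gitignore steps by hand, the following sketch does the same work with Python's standard venv module. The function name scaffold_project is hypothetical. Activation still has to happen in your shell, because a Python script cannot change the state of the terminal that launched it.

```python
import venv
from pathlib import Path


def scaffold_project(root: Path, with_pip: bool = True) -> Path:
    """Create the project layout: a venv folder, a src folder and a .gitignore."""
    root = Path(root)
    root.mkdir(parents=True, exist_ok=True)

    # Same effect as running `python -m venv venv` inside the project folder.
    venv.EnvBuilder(with_pip=with_pip).create(root / "venv")

    # Folder for the application code (app.py will live here).
    (root / "src").mkdir(exist_ok=True)

    # Keep the virtual environment out of version control.
    gitignore = root / ".gitignore"
    if not gitignore.exists():
        gitignore.write_text("venv/\n")
    return root
```

For example, scaffold_project(Path("twilio-meta-ai")) creates the twilio-meta-ai folder and everything inside it. Passing with_pip=False skips installing pip into the environment, which is only useful for quick experiments.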

Now you are ready to install Flask and the other dependencies. Inside your working directory twilio-meta-ai, create a file called requirements.txt to list them, and add the package names twilio, flask and together to it, one per line.

Here is a breakdown of these dependencies:

- twilio - Twilio's Python helper library, which lets you interact with the Twilio API and generate TwiML responses.

- flask - Flask is a lightweight web application framework for Python, designed to make it easy to build web applications quickly.

- together - The official Python client for the Together.ai API platform, providing a convenient way to interact with different open-source AI models.

Then install all the packages with pip by running pip install -r requirements.txt from your terminal, with the virtual environment activated.
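To confirm that pip installed everything into the active environment, you can ask the standard importlib.metadata module (Python 3.8 or newer) for each package's version. The helper below is a hypothetical convenience function, not part of any of the three libraries.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Not installed in the interpreter that is running this code.
            versions[name] = None
    return versions
```

Running installed_versions(["twilio", "flask", "together"]) from the activated environment should return a version string for each name. A None value points to a package that pip did not install there.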

Create a Flask Server

Let us set up a Flask server. In your source code directory src, create a file app.py and paste the code snippet given below:

The code begins by importing the necessary dependencies. It then creates a Flask application and initializes a client for the Together.ai API using the together module, passing in an API key for authentication. Make sure to replace API_KEY with your Together.ai API key.

To manage the conversation history of each session, the code initializes a dictionary named sessions. This dictionary stores the context of every ongoing conversation between a caller and the chat assistant.
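One possible shape for that dictionary is sketched below. It maps each call's identifier to a list of chat messages in the role/content format that Together.ai's chat completions endpoint accepts. The helper names get_session and add_turn are hypothetical. Because the store is a plain in-memory dictionary, all history is lost whenever the Flask process restarts.

```python
from typing import Dict, List

Message = Dict[str, str]

# In-memory store: call identifier -> list of chat messages for that call.
sessions: Dict[str, List[Message]] = {}


def get_session(call_sid: str, system_prompt: str) -> List[Message]:
    """Return the history for this call, creating it with the system prompt if new."""
    if call_sid not in sessions:
        sessions[call_sid] = [{"role": "system", "content": system_prompt}]
    return sessions[call_sid]


def add_turn(call_sid: str, role: str, content: str) -> None:
    """Append one user or assistant message to the call's history."""
    sessions[call_sid].append({"role": role, "content": content})
```

Keying the store by call identifier means two simultaneous callers never see each other's history. The whole list is sent to the model on every turn, so the model can refer back to earlier questions.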

Design the prompt

To give your chat assistant context, create an initial prompt based on your use case. The following prompt is an example for an assistant that answers product-related queries; adapt it to your own needs. Paste the following code into your app.py file, below the code you already added.
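If you want to generate the prompt from your own data rather than hard-coding it, a small builder like the one below is one option. The business name, product entries and function names are hypothetical. The wording asks for short, plain answers because the reply is spoken to the caller rather than displayed.

```python
from typing import Dict, List


def build_system_prompt(business: str, products: List[str]) -> str:
    """Describe the assistant's role, knowledge and speaking style in one prompt."""
    catalog = "\n".join(f"- {item}" for item in products)
    return (
        f"You are a friendly phone assistant for {business}. "
        "Answer customer questions about the products listed below. "
        "Replies are read aloud, so keep them to two short sentences "
        "and avoid lists, emoji and markdown. "
        "If you don't know the answer, say so politely.\n"
        f"Products:\n{catalog}"
    )


def initial_history(business: str, products: List[str]) -> List[Dict[str, str]]:
    """Start a conversation with the system prompt as its first message."""
    return [{"role": "system", "content": build_system_prompt(business, products)}]
```

For example, initial_history("Doms", ["Wireless earbuds: $49", "Phone case: $15"]) returns a one-element list that can seed a new call's session.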

Create an incoming voice handler

To enable users to interact with the chatbot via voice calls, you will create a route named /voiceHandler. Copy and paste the following code into the app.py file to implement the /voiceHandler route.

The /voiceHandler endpoint handles your incoming voice calls. It first extracts the unique call identifier (CallSid) from the incoming request. It then uses this identifier to retrieve the call's session from the sessions dictionary, or to create one if none exists. For a new session, it sets up a default conversation history to start the interaction. Next, it uses Twilio's VoiceResponse to build a reply for the call, which speaks a message to the caller and waits for their input.

Once the caller's input is gathered, it is added to the conversation history stored in the session. The app then sends the accumulated history to the Together.ai model to generate the assistant's response and appends that response to the history as well.

Finally, the app.run(port="3000") line starts the Flask web server on port 3000. The server exposes the /voiceHandler endpoint, which handles incoming voice call requests.

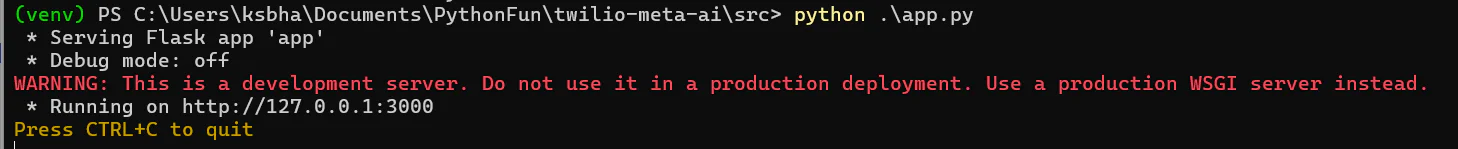

Set Up the Server

Now start your server so that it can handle incoming calls. Navigate to the source code directory src and start your Flask server by running python app.py:

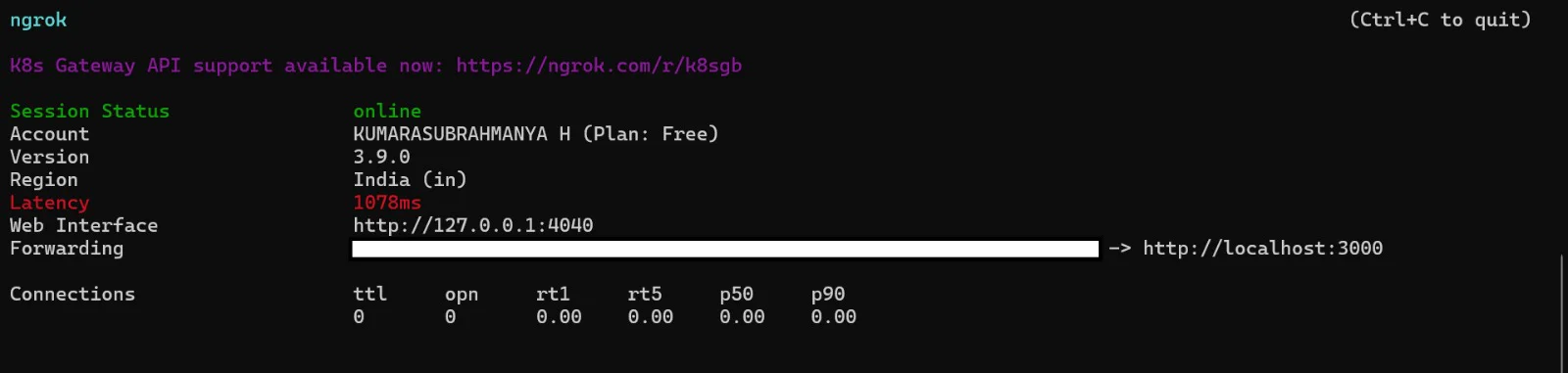

With the port set to 3000, your server is accessible at http://localhost:3000. However, Twilio cannot reach a URL on your local machine: for Twilio to access it, your app must be hosted on a server that is publicly accessible. For testing purposes, you can use ngrok to expose your local server. If you're unfamiliar with ngrok, you can consult this blog post for guidance on creating an account. To start the ngrok tunnel, run ngrok http 3000 in a separate terminal tab.

This command connects your local server running on port 3000 to a public URL generated by ngrok. Once it runs, you'll see output similar to the response displayed below. For security reasons, the ngrok domain in the screenshot is struck through and shown in white.

Now, our application is accessible via the ngrok URL. Copy the URL provided by ngrok, as you'll need it in the next steps.
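Instead of copying the URL by hand, you can also read it from the ngrok agent's local API. By default, the agent lists its active tunnels as JSON at http://127.0.0.1:4040/api/tunnels. The sketch below assumes that default address and picks the first HTTPS tunnel. It then appends /voiceHandler to build the webhook URL used in the next step. The function names are hypothetical.

```python
import json
from urllib.request import urlopen

NGROK_API = "http://127.0.0.1:4040/api/tunnels"  # ngrok agent's default local API


def pick_public_url(tunnels_payload: dict) -> str:
    """Return the first HTTPS public URL from an /api/tunnels response."""
    for tunnel in tunnels_payload.get("tunnels", []):
        url = tunnel.get("public_url", "")
        if url.startswith("https://"):
            return url
    raise LookupError("No HTTPS tunnel found; is ngrok http 3000 running?")


def webhook_url(public_url: str, path: str = "/voiceHandler") -> str:
    """Append the Flask route to the ngrok base URL, avoiding a double slash."""
    return public_url.rstrip("/") + path


def fetch_public_url(api_url: str = NGROK_API) -> str:
    """Ask the running ngrok agent for its tunnels and pick the HTTPS one."""
    with urlopen(api_url, timeout=5) as resp:
        return pick_public_url(json.load(resp))
```

With ngrok running, webhook_url(fetch_public_url()) returns the full URL to paste into the Twilio Console.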

Configuring the Twilio webhook

In the Twilio Console, open Explore Products in the left-hand menu, then select Phone Numbers → Manage → Active numbers to see the list of available numbers.

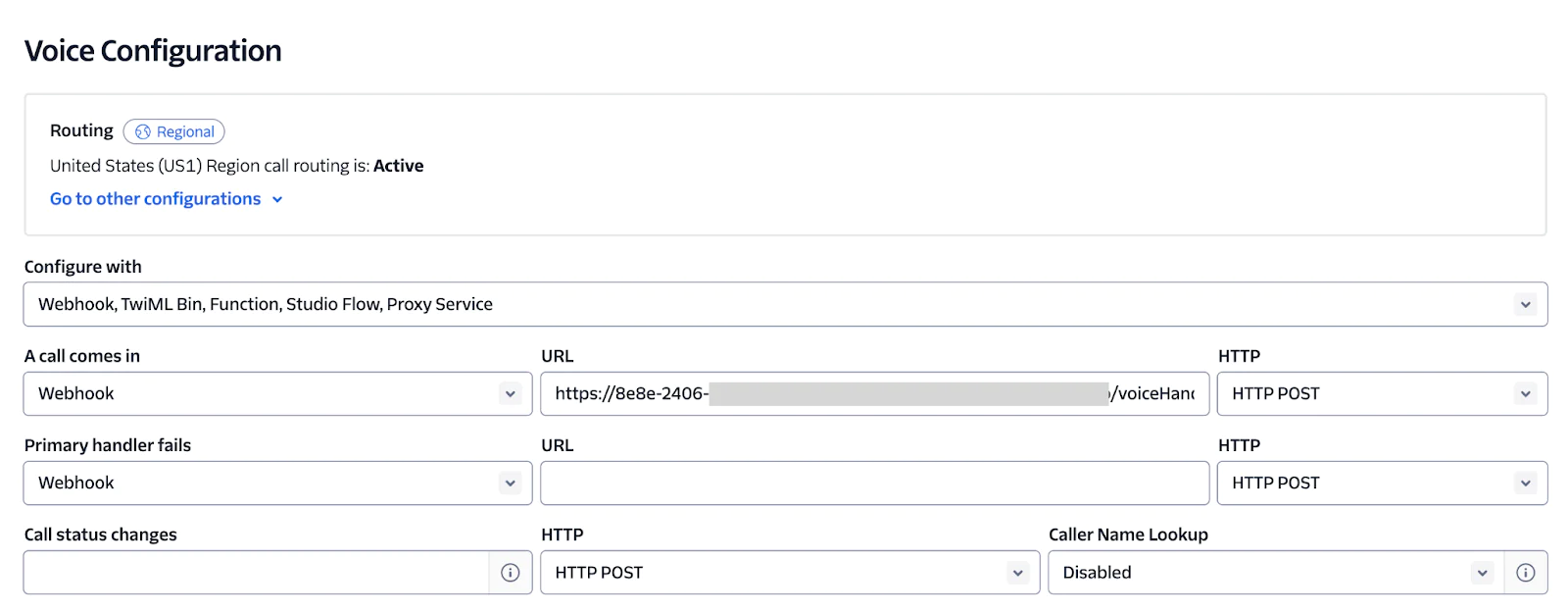

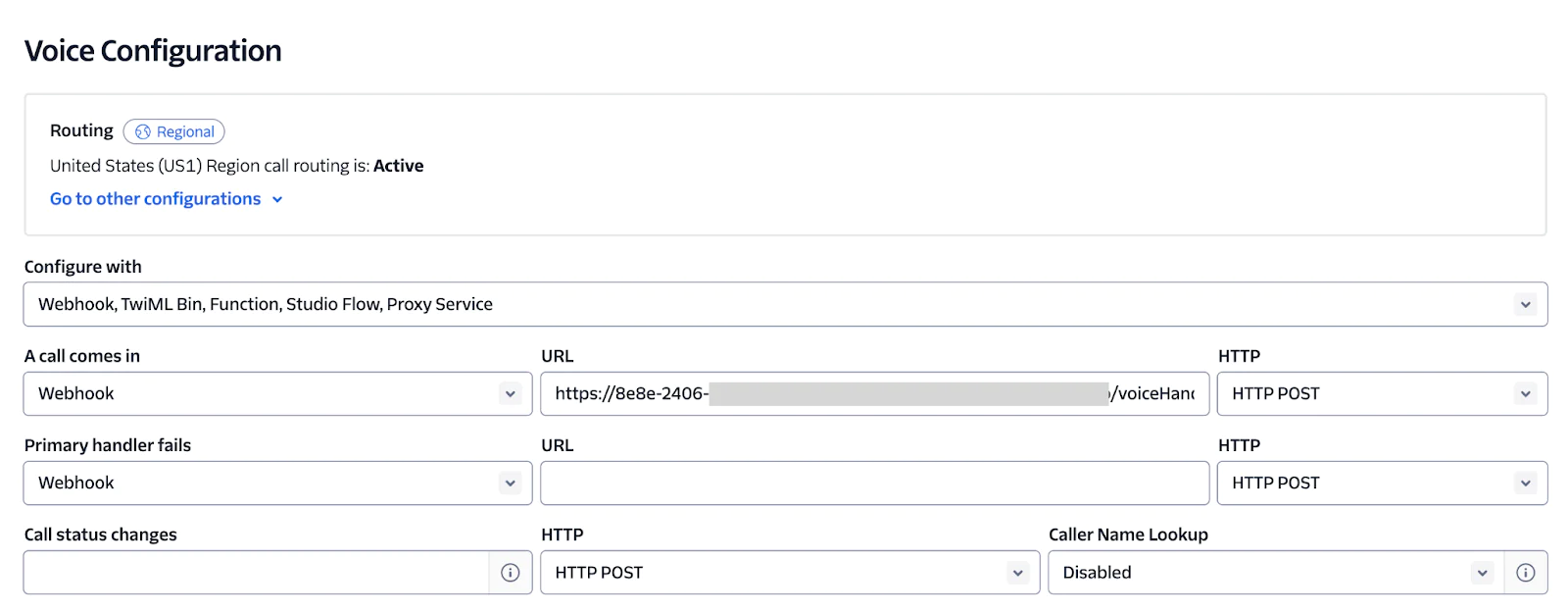

Next, click any available Twilio number and open the Configure tab. Under Voice Configuration, set the incoming call webhook to the URL provided by ngrok. Remember to append '/voiceHandler' to the base URL so it matches the endpoint on your server, and make sure the HTTP method is set to POST.

Once configured, click the Save configuration button at the bottom to save your changes. Kudos! You're now all set to perform your first test.
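Because the ngrok URL changes each time you start a new tunnel on a free plan, you may want to update the webhook from code instead of through the Console. The sketch below uses the Twilio helper library's incoming phone numbers resource. It looks the number up with list(phone_number=...) and then calls update(voice_url=..., voice_method="POST"). The function name is hypothetical. The client is passed in so that the function can be tried with a stand-in object.

```python
def point_number_at_webhook(client, phone_number: str, url: str):
    """Set the voice webhook of a number you own to `url`, using HTTP POST.

    `client` is expected to be a twilio.rest.Client instance.
    """
    matches = client.incoming_phone_numbers.list(phone_number=phone_number, limit=1)
    if not matches:
        raise LookupError(f"{phone_number} is not a number on this account")
    # Equivalent to editing the incoming call webhook on the Configure tab.
    return matches[0].update(voice_url=url, voice_method="POST")


# Example (requires the twilio package and your account credentials):
#   from twilio.rest import Client
#   client = Client(os.environ["TWILIO_ACCOUNT_SID"], os.environ["TWILIO_AUTH_TOKEN"])
#   point_number_at_webhook(client, "+15555550100", "https://<your-ngrok-host>/voiceHandler")
```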

Test your application

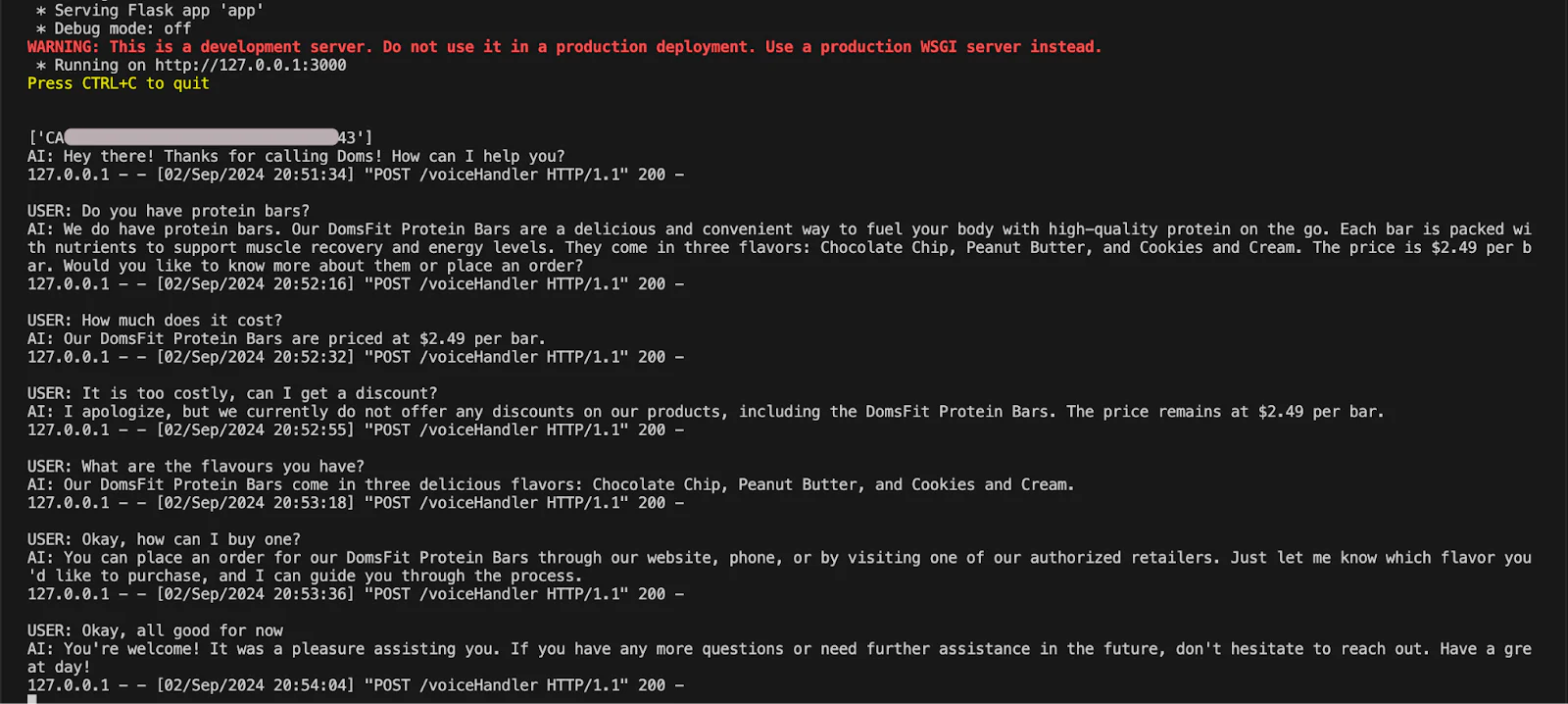

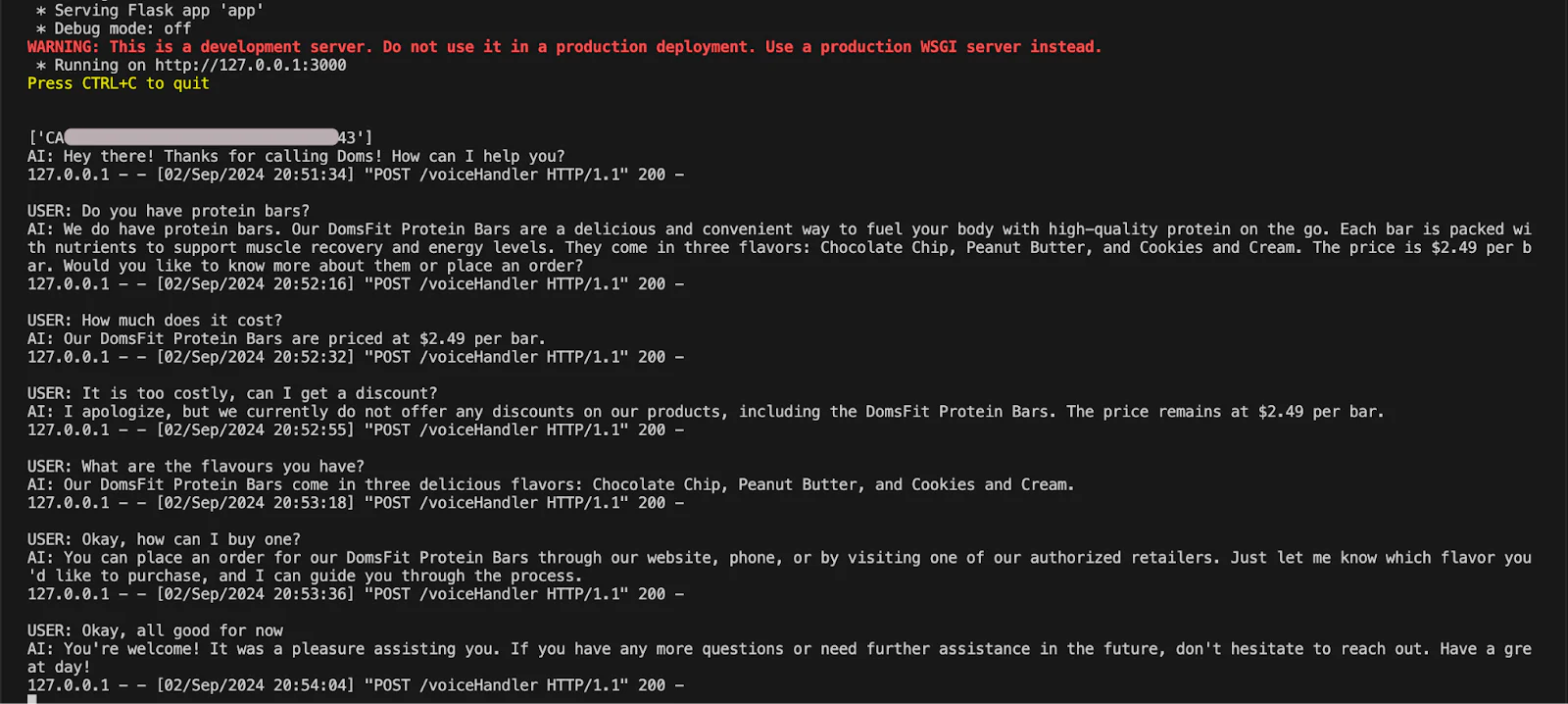

Now that the incoming call webhook is configured for your Twilio phone number, call the Twilio number from your mobile phone. You should see an HTTP request in your ngrok console. Your Flask app will then process the incoming request and respond with your initial TwiML message: 'Hey there! Thanks for calling Doms! How can I help you?' Ask the AI assistant any question, and it will respond.

For testing purposes, you can also use the Twilio Dev Phone, a developer tool for testing SMS and Voice applications. Please follow the instructions here to set up the Dev Phone locally. Once setup is complete, you can start the Dev Phone by running twilio dev-phone.

This command opens the Dev Phone in your browser. Dial your configured Twilio number to start interacting with the chatbot. Your interactions will be displayed in your terminal, similar to the screenshot below.
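If you want to exercise the endpoint without placing a real call, for example while iterating on the prompt, you can send a hand-built POST to the local server. The sketch below makes two assumptions. First, the server is running at http://localhost:3000. Second, the handler reads CallSid and, for speech input, SpeechResult, which is the parameter Twilio uses for speech results from a Gather. Real Twilio webhooks carry many more parameters, so treat this as a rough stand-in rather than a faithful replay. The function names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def fake_twilio_request(call_sid, speech=None, url="http://localhost:3000/voiceHandler"):
    """Build a POST carrying the form fields the voice handler reads."""
    fields = {"CallSid": call_sid}
    if speech is not None:
        fields["SpeechResult"] = speech
    return Request(
        url,
        data=urlencode(fields).encode("utf-8"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def send(req):
    """Send the request to the running Flask server and return the TwiML text."""
    with urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8")
```

Calling send(fake_twilio_request("CA-local-test")) should return the greeting TwiML. A follow-up with a speech argument and the same call identifier triggers a real Together.ai request, which uses your credits.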

Let's Keep the Conversation Going!

You have successfully built a virtual AI assistant using Meta LLaMA 3 with together.ai and Twilio Programmable Voice. Callers can now ask the assistant questions directly over the phone and receive relevant answers right away.

To personalize your new bot, try changing the language in the bot's prompt to something relevant to you, your business, or your interests. Happy building!

Kumarasubrahmanya Hosamane is a software developer from San Jose, the IT hub of the United States, who is keenly interested in exploring the latest technologies. He can be reached at Kumarasubrahmanya Hosamane.
