Collect Survey Responses with Twilio Voice, Airtable and OpenAI


This tutorial walks you through building an engaging phone survey by combining Twilio Programmable Voice with the Airtable API. An optional final section uses OpenAI to make the prompts sound more natural and to clean up the answers before they are stored.

This application handles incoming calls from users who dial in to participate in surveys. After receiving a call, the system prompts the user to choose a survey, retrieves the survey's fields from Airtable, and then guides the caller through them one question at a time. The responses are transcribed and automatically saved as a new row in the survey's Airtable table.

Prerequisites

Before starting the tutorial, make sure you have the following:

- Node.js v16+ installed on your machine.

- A preferred IDE such as VS Code.

- An active Twilio account (sign up for a free account if you don't have one).

- The ngrok CLI for testing purposes.

- (Optional) An OpenAI account for the "Spicing it up" section.

- An Airtable account.

- A Twilio phone number with phone call support.

Refer to the GitHub Repository for the final code.

Setting up the server

To initiate your project, follow these commands in your terminal:
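A minimal sketch of these commands, assuming a project directory named twilio-survey (the name is arbitrary):

```shell
mkdir twilio-survey
cd twilio-survey
npm init -y          # create package.json with default values
npm install express  # web framework used for the webhook endpoints
touch index.js       # entry point for the server
```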

This sequence of commands creates a new directory for the project, initializes a Node.js project, and installs the necessary dependencies for a basic Express server. Following this, a new file, index.js, is created with the boilerplate code for a basic server:
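The boilerplate could look roughly like the sketch below. Port 3000 and the root route are assumptions made for illustration, not requirements of Twilio or Express.

```javascript
// index.js: minimal Express server (port 3000 is an assumption)
const express = require("express");

const app = express();
const PORT = process.env.PORT || 3000;

// Simple health-check route to confirm the server is reachable.
app.get("/", (req, res) => {
  res.send("Survey server is running");
});

app.listen(PORT, () => {
  console.log(`Server listening on port ${PORT}`);
});
```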

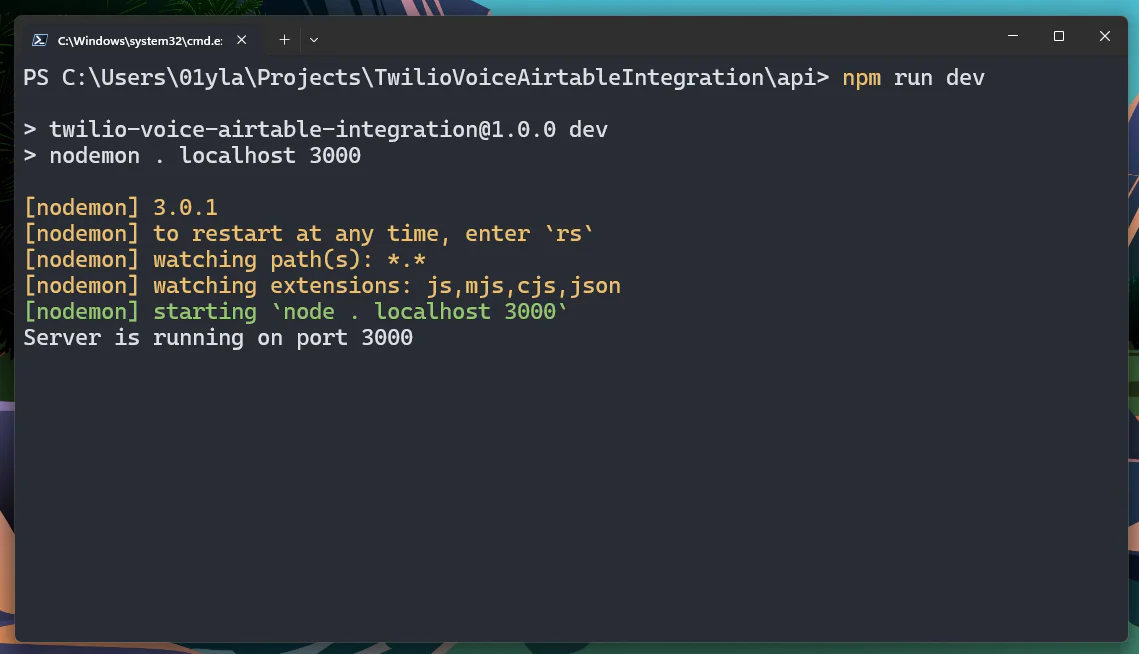

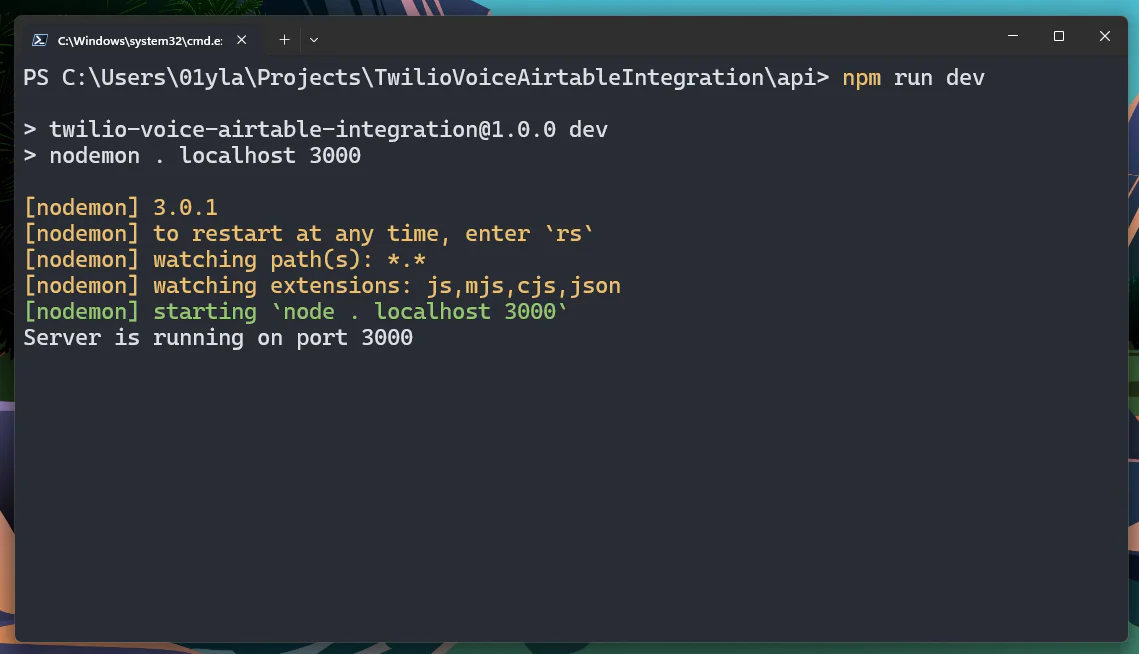

Start your server with the command node ./index.js or use nodemon for automatic restarts.

Add the following script to your package.json:
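One possible script entry, assuming you call it dev and run the server through nodemon:

```json
"scripts": {
  "dev": "nodemon index.js"
}
```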

Run it from your terminal:
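Assuming a script named dev that starts nodemon, as in the sketch above, this comes down to:

```shell
npm install --save-dev nodemon  # one-time install of nodemon
npm run dev                     # start the server with automatic restarts
```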

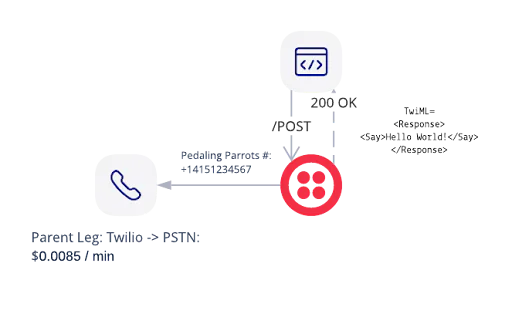

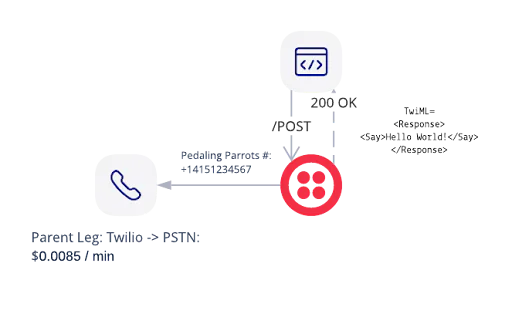

Handling incoming calls

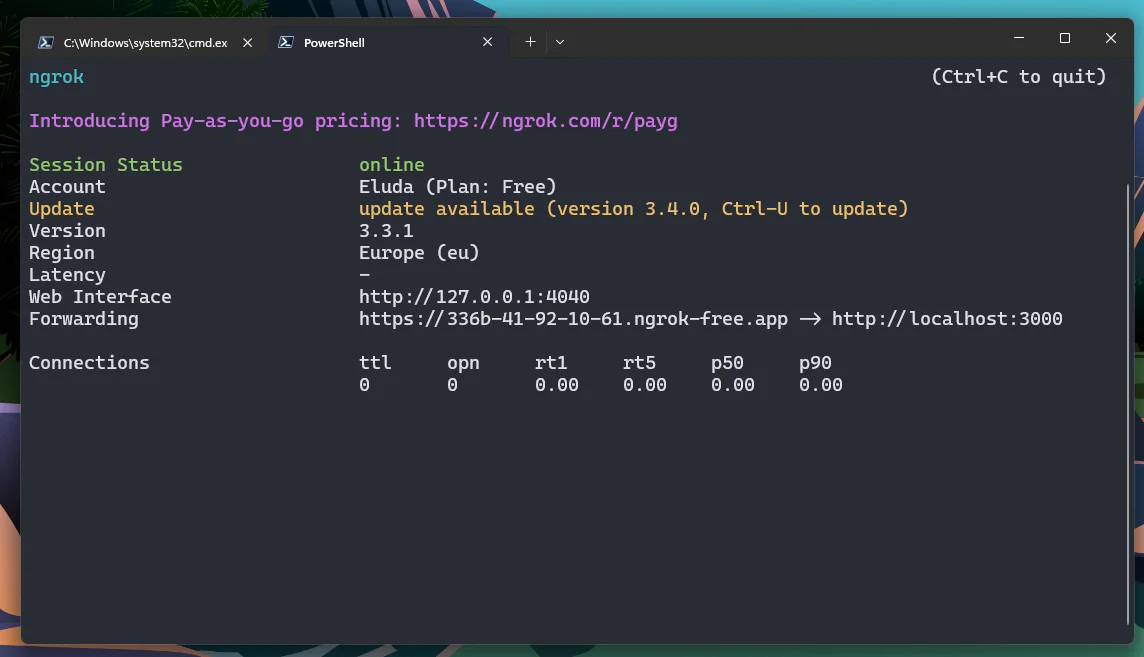

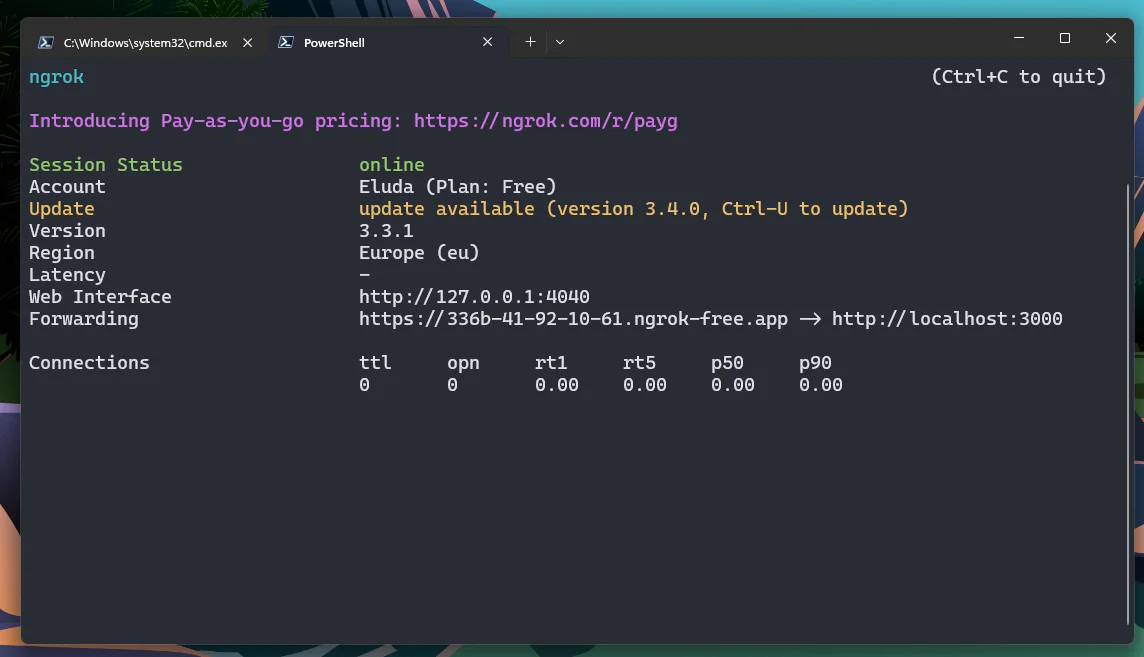

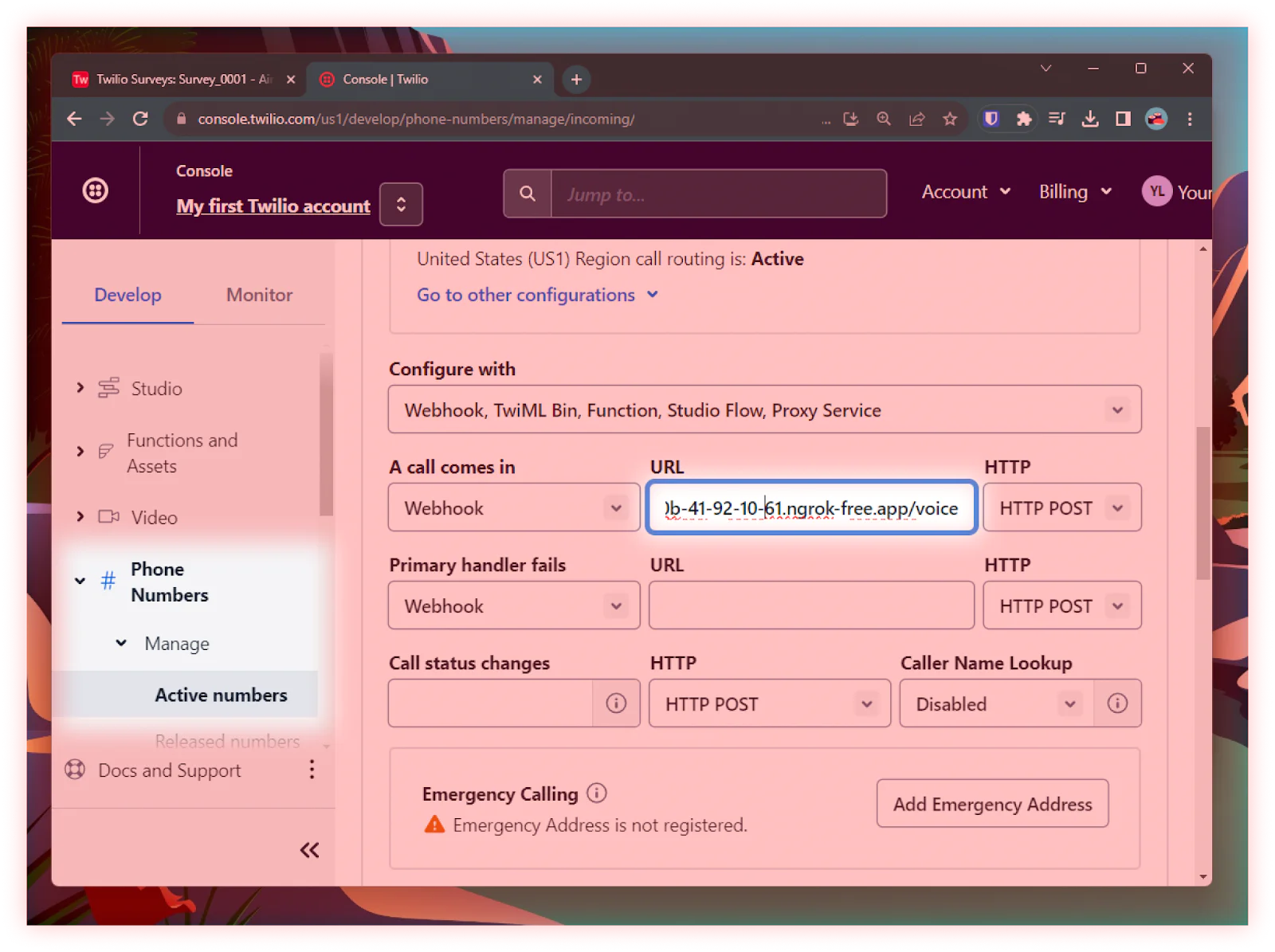

When a user calls your Twilio phone number, a webhook should call your server with necessary logic. Expose your app using ngrok for development:
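Assuming the server listens on port 3000, the ngrok command is:

```shell
ngrok http 3000
```

ngrok prints a public forwarding URL that tunnels to your local server.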

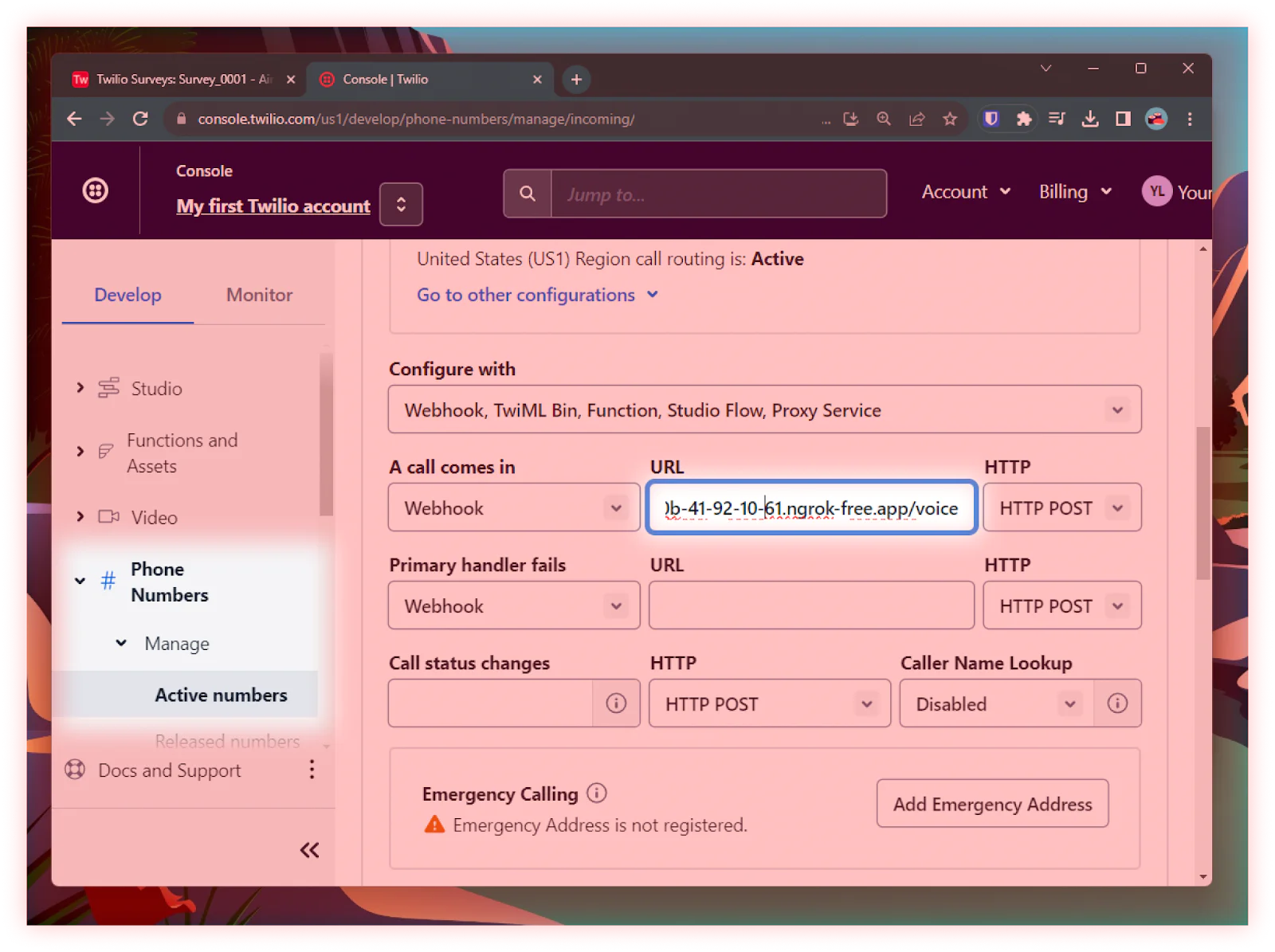

Copy the forwarding URL and paste it into your Twilio dashboard under Phone Numbers > Manage > Active Numbers > your_number > Configure > Voice Configuration > A call comes in > paste_your_url + /voice.

Let’s implement the POST endpoint /voice to handle incoming calls. While you can write TwiML, Twilio's XML-based markup language, by hand, it’s much more pleasant to generate it with Twilio’s Node package:
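The package is published on npm as twilio:

```shell
npm install twilio
```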

Import the package, create an instance of twiml.VoiceResponse, and use it to define the desired response:
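A minimal sketch of that response follows. The spoken wording is an assumption, and VoiceResponse is taken as a parameter (defaulting to the real Twilio class) only so the function can be exercised without the package installed.

```javascript
// Builds the TwiML for POST /voice.
function buildVoiceTwiml(
  VoiceResponse = require("twilio").twiml.VoiceResponse
) {
  const response = new VoiceResponse();
  // Collect exactly four keypad digits, then POST them to /handle-survey-id.
  const gather = response.gather({
    input: "dtmf",
    numDigits: 4,
    action: "/handle-survey-id",
    method: "POST",
  });
  gather.say("Welcome. Please enter the four digit ID of your survey.");
  // Reached only if the caller enters nothing before the gather times out.
  response.say("We did not receive any input. Goodbye.");
  return response.toString();
}

// Wiring in index.js (assumes the Express `app` created earlier):
// app.post("/voice", (req, res) => {
//   res.type("text/xml").send(buildVoiceTwiml());
// });
```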

When a user calls, Twilio will prompt them to enter a four-digit code using DTMF (Dual-Tone Multi-Frequency) input, meaning the tones produced by the phone's keypad. This relies on the <Gather> verb, which collects user input during a call:

<Gather>: This TwiML verb allows you to collect digits or speech from the caller. In this case, it's set up to collect a four-digit code using DTMF input.

The action attribute specifies the endpoint where Twilio will send the collected input for further processing.

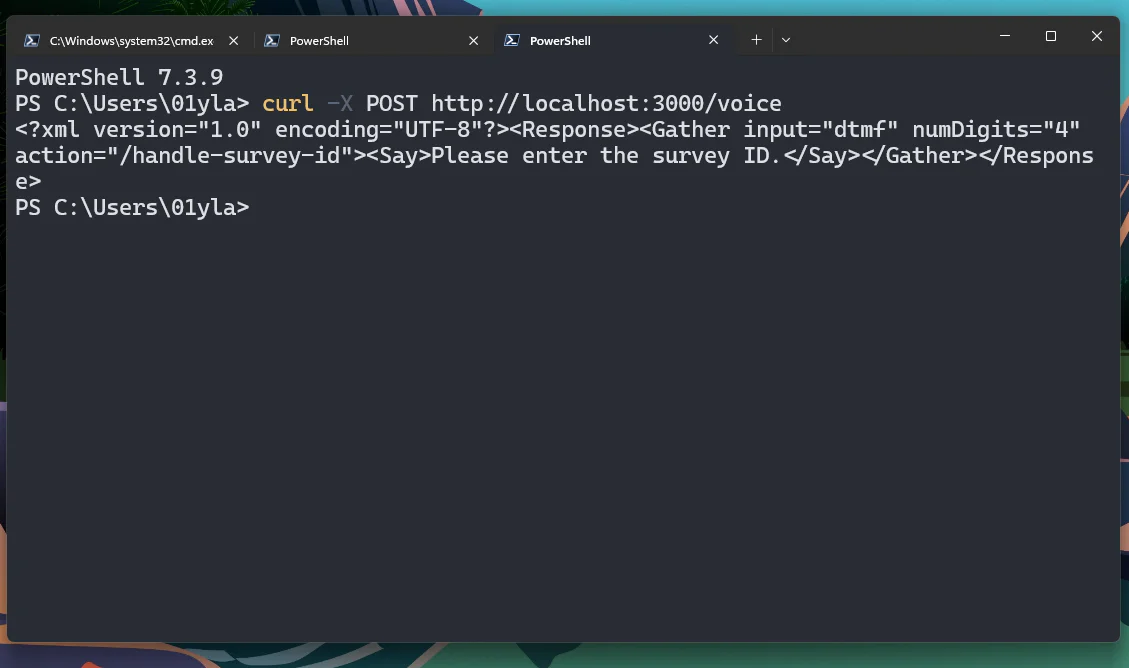

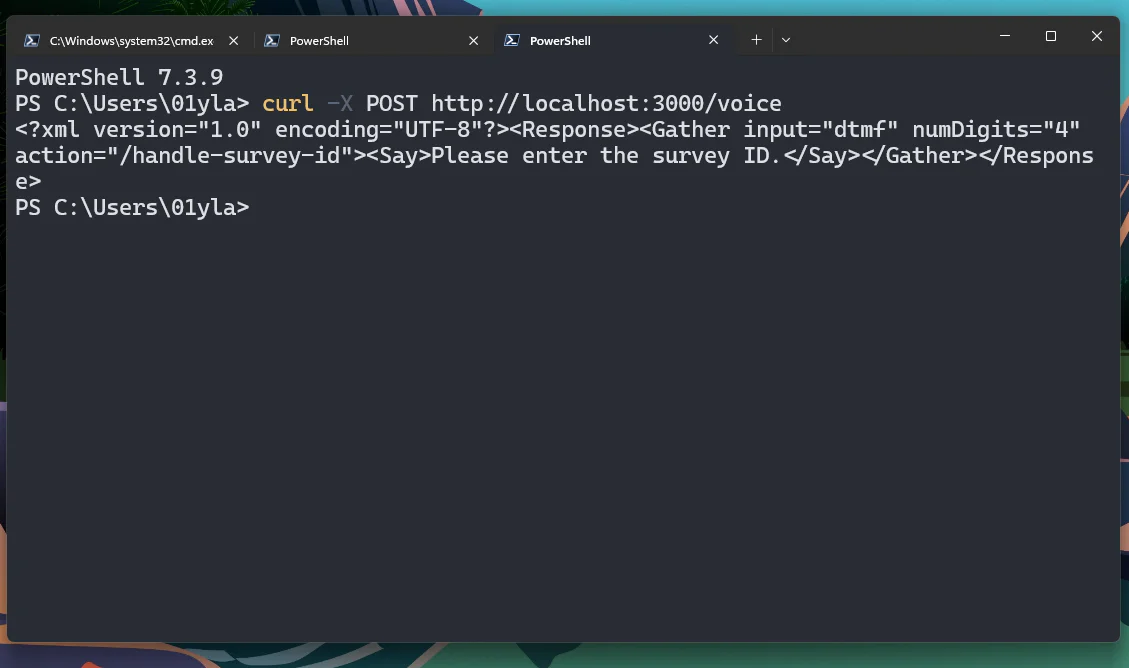

Try this out! In your console, use curl to make a POST request to the /voice URL:
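Assuming the server runs locally on port 3000:

```shell
curl -X POST http://localhost:3000/voice
```

The response body should be the TwiML document your endpoint generated.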

Processing gather digits

After the user inputs four digits, Twilio calls the /handle-survey-id URL, providing you with the digits in the request’s body.
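To read that body in Express, body-parsing middleware has to be registered before the routes. A sketch, shown with its imports so it stands alone (in index.js only the two app.use lines are new):

```javascript
const express = require("express");
const app = express();

// Parse JSON request bodies.
app.use(express.json());
// Parse URL-encoded bodies, the format Twilio uses for webhook parameters
// such as Digits and SpeechResult.
app.use(express.urlencoded({ extended: true }));
```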

These lines of code configure Express to parse request bodies, both JSON and URL-encoded. The URL-encoded parser is the one Twilio needs, since it sends webhook parameters as form data.

We’ll now attempt to create a new record in the table named Survey_{ID}, where {ID} is the four digits the caller entered. If this fails, we assume the table doesn’t exist and treat the survey ID as invalid, in which case we should inform the user and end the call.

If the record is successfully created, then we should begin the flow of asking the user to fill out each field of that row. You can do that by redirecting the user to another URL, which we’ll implement in the next section:
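One way to sketch this handler is shown below. The query parameter names tableName and recordId are assumptions, as is the spoken wording; base is the configured Airtable base, and creating a record with no fields is assumed to be accepted by Airtable.

```javascript
// Returns the TwiML for POST /handle-survey-id.
async function handleSurveyId(
  digits,
  base,
  VoiceResponse = require("twilio").twiml.VoiceResponse
) {
  const response = new VoiceResponse();
  const tableName = `Survey_${digits}`;
  try {
    // Creating an empty row doubles as the existence check for the table.
    const record = await base(tableName).create({});
    const query = new URLSearchParams({ tableName, recordId: record.id });
    response.redirect({ method: "POST" }, `/handle-response?${query}`);
  } catch (err) {
    // Any failure (missing table, bad token, network error) lands here and
    // is treated as an invalid survey ID.
    response.say("Sorry, that survey ID is not valid. Goodbye.");
    response.hangup();
  }
  return response.toString();
}

// Wiring in index.js:
// app.post("/handle-survey-id", async (req, res) => {
//   res.type("text/xml").send(await handleSurveyId(req.body.Digits, base));
// });
```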

Using speech recognition to fill out the form’s fields

Using Airtable’s Metadata API, you can gather all tables that are in your database, find the survey in question, and return the field names:

Similar to gathering the survey ID using the dtmf input format, you can use the speech format to tell Twilio that it should gather the user’s speech, transcribe it, and feed it back to your server:
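A sketch of that speech gather, assuming the field names have already been fetched and that actionUrl points back at the same endpoint:

```javascript
// Asks for the first field of the survey by voice, or hangs up if there is none.
function askFirstField(
  fieldNames,
  actionUrl,
  VoiceResponse = require("twilio").twiml.VoiceResponse
) {
  const response = new VoiceResponse();
  if (fieldNames.length === 0) {
    response.say("This survey has no questions. Goodbye.");
    response.hangup();
    return response.toString();
  }
  // input: "speech" makes Twilio transcribe the answer and POST it to actionUrl.
  const gather = response.gather({
    input: "speech",
    speechTimeout: "auto",
    action: actionUrl,
    method: "POST",
  });
  gather.say(`Please enter the value for ${fieldNames[0]}.`);
  return response.toString();
}
```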

This will immediately end the call if there are no fields in the current survey. Otherwise, it will ask the user to fill out the first column, and then redirect back to the same URL.

To keep this flow running, so that after processing one field the app asks for the next until none are left, we can use a query parameter, call it remainingFields, and keep truncating it on each request until it is empty.
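The truncation can be sketched with two small helpers. Encoding the list as a JSON array is an assumption made here so that field names containing commas or question marks survive the round trip.

```javascript
// Splits the remainingFields parameter into the field to handle now and the
// fields still to come. Accepts the decoded JSON string, or undefined when the
// parameter is absent.
function takeNextField(remainingFieldsParam) {
  const fields = remainingFieldsParam ? JSON.parse(remainingFieldsParam) : [];
  const [current = null, ...rest] = fields;
  return { current, rest };
}

// Builds the URL for the next round trip, carrying the shortened list.
function buildNextUrl(path, rest) {
  const query = new URLSearchParams({ remainingFields: JSON.stringify(rest) });
  return `${path}?${query}`;
}
```

In an Express handler, req.query.remainingFields is already URL-decoded, so it can be passed to takeNextField directly.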

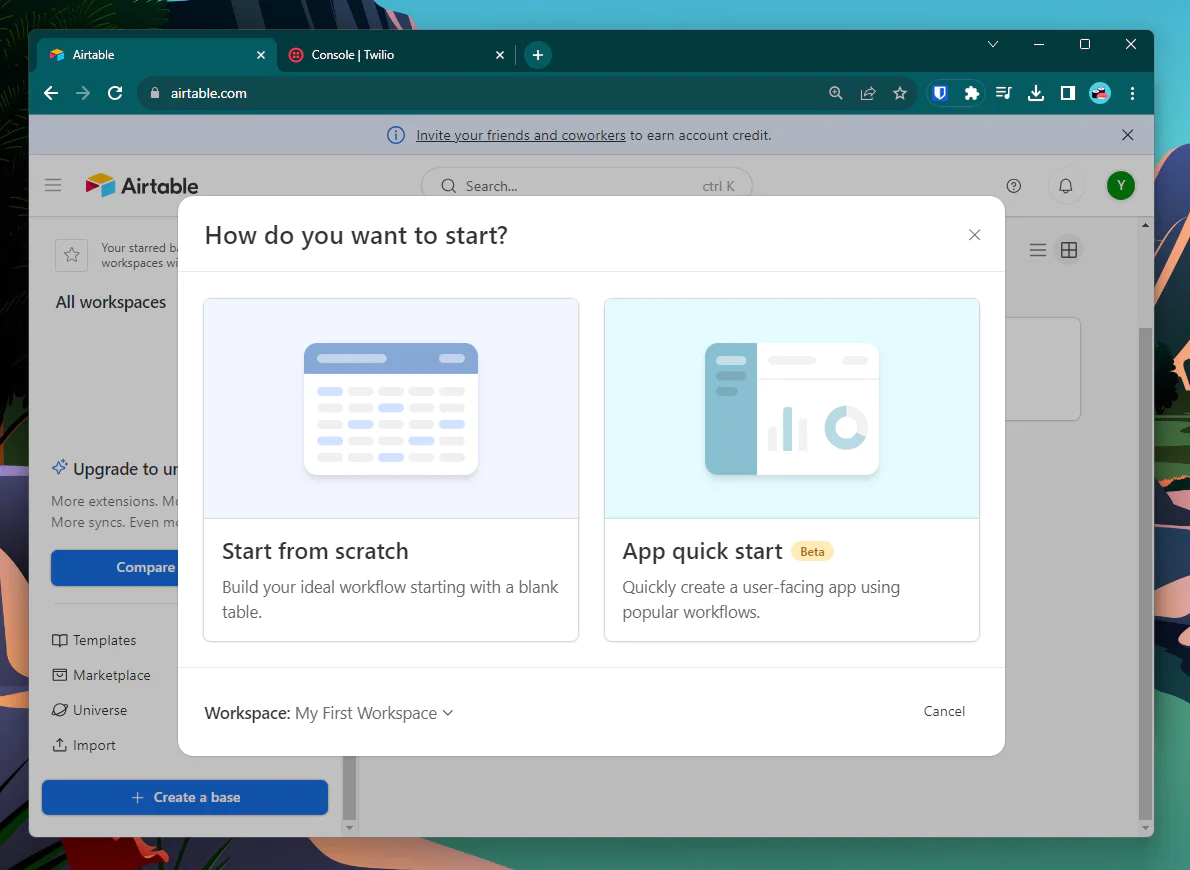

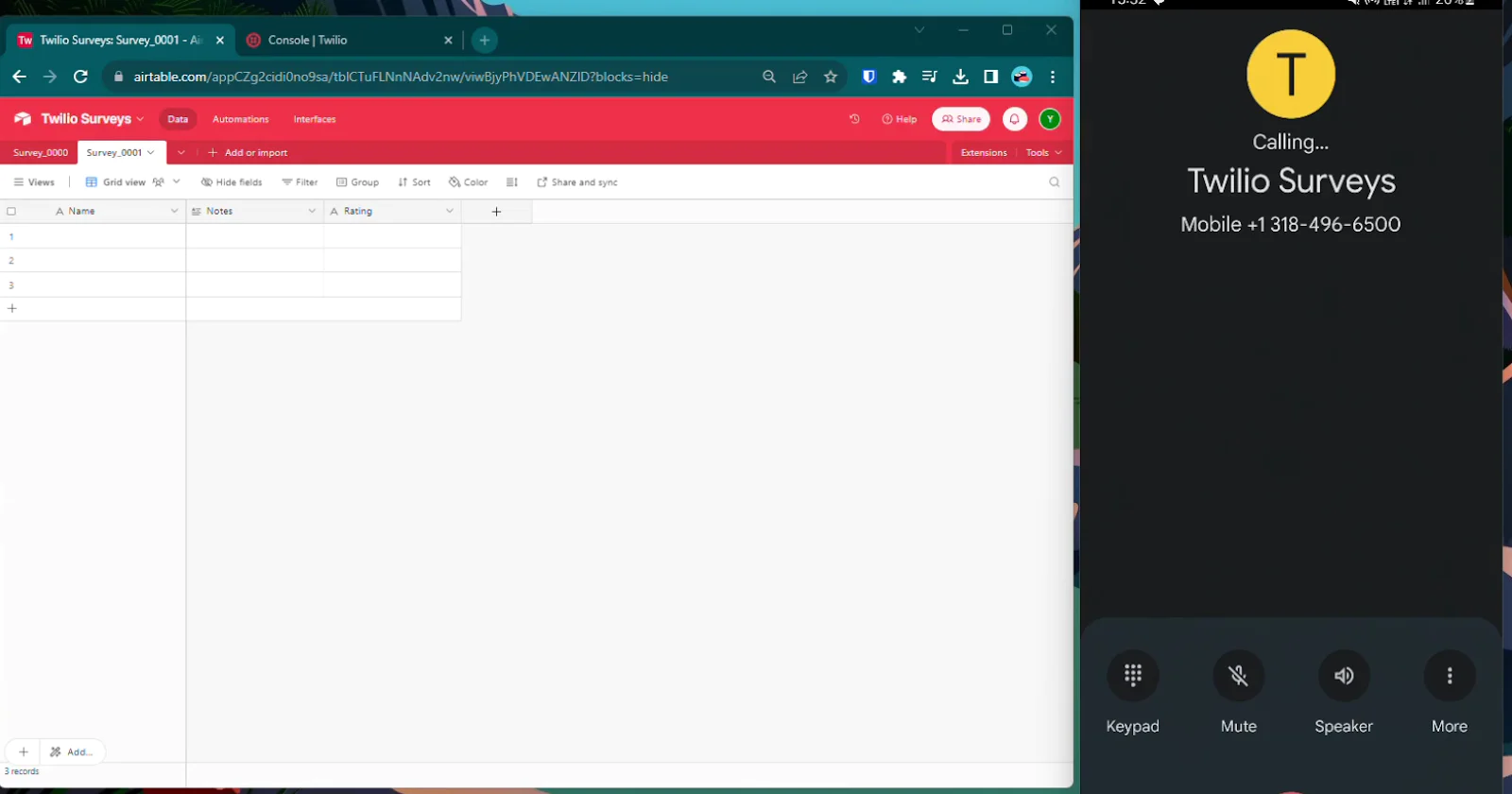

Setting up Airtable

After creating your Airtable account, create a new base, select "Start from Scratch", and name it whatever you want. You can then call the first table Survey_0000 because that’s the format that your application expects: Survey_{ID}.

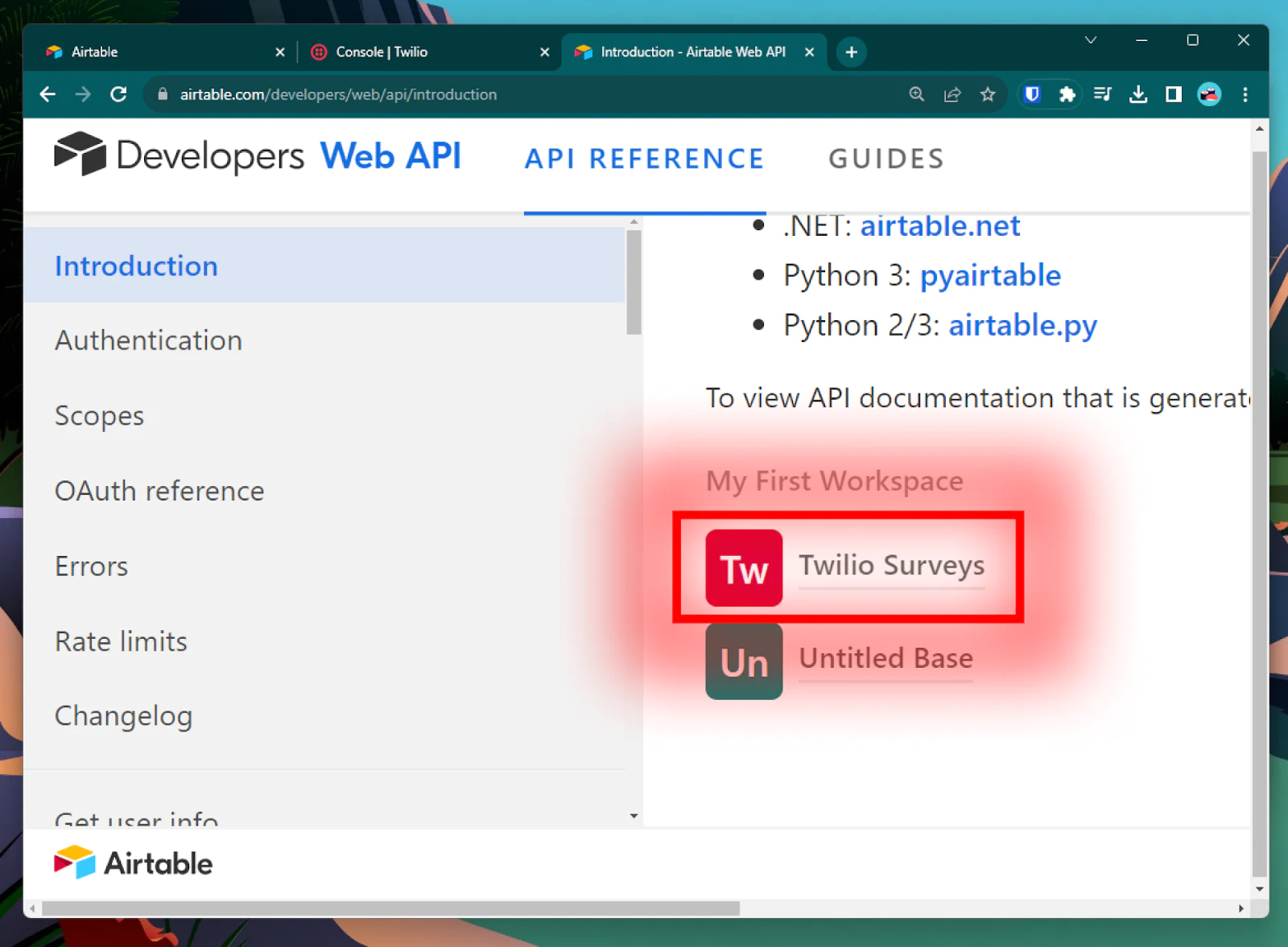

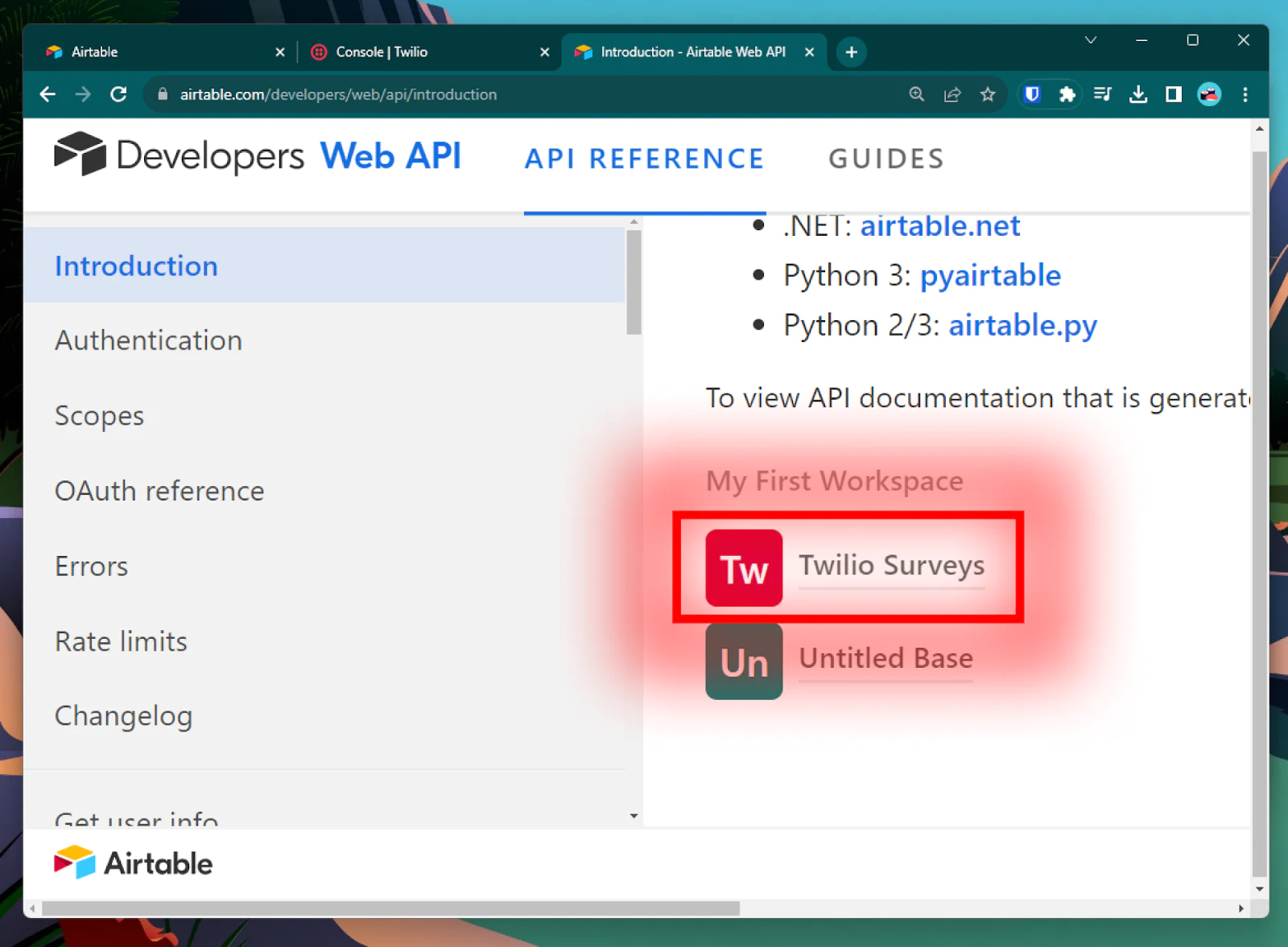

After that, you should head to the Airtable developers page, find your base, and open its API documentation. It will redirect you to a URL that follows this format:

https://airtable.com/appXXXXXXXXX/api/docs

Copy the segment between the hostname and the /api path: appXXXXXXXXX. This is your base ID.

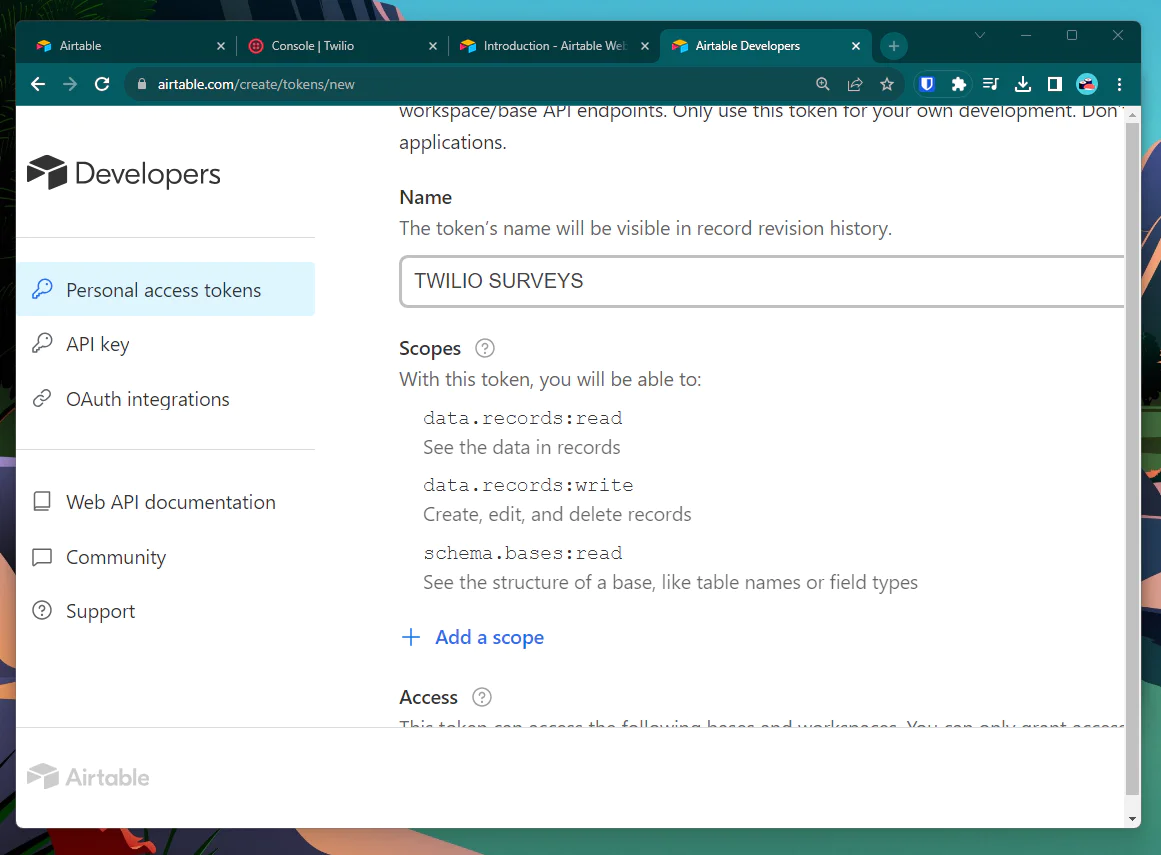

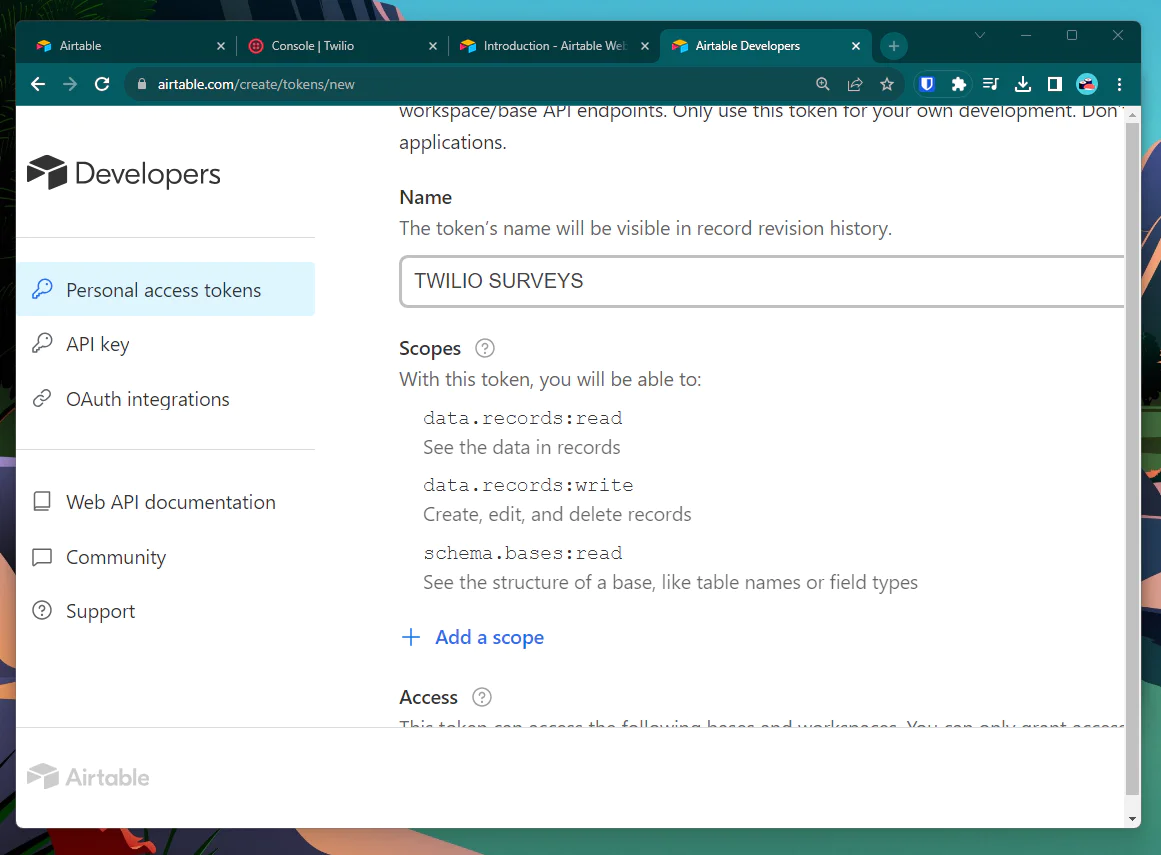

After that, head over to the API access page and create a new personal access token. You can call it whatever you want, but you should grant it the following scopes:

- data.records:read

- data.records:write

- schema.bases:read

This will allow your application to read and write the Survey table and fetch metadata, which we’ll be using at the end of this guide.

Now, create a .env file at the root of your project, and paste your personal access token and base ID into the AIRTABLE_KEY and AIRTABLE_BASE_ID variables:
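The file holds one variable per line; the values below are placeholders:

```
AIRTABLE_KEY=your_personal_access_token
AIRTABLE_BASE_ID=appXXXXXXXXX
```

Keep this file out of version control, since it contains a secret.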

And to use your keys in the application, install the dotenv module, which loads the .env file. This is also the time to download the airtable Node module:
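Both packages install with a single command:

```shell
npm install dotenv airtable
```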

And finally configure your app to use Airtable:
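A minimal configuration sketch, assuming the variable names from the .env file above:

```javascript
// Load AIRTABLE_KEY and AIRTABLE_BASE_ID from .env into process.env.
require("dotenv").config();
const Airtable = require("airtable");

// The personal access token is passed through the apiKey option.
const base = new Airtable({ apiKey: process.env.AIRTABLE_KEY }).base(
  process.env.AIRTABLE_BASE_ID
);
```

After this, base("Survey_0000") gives you a handle to that table for creating and updating records.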

Saving the responses to Airtable

From the second call to /handle-response onward, that is, whenever the remainingFields query parameter is present, the request carries the user's answer to the question just asked. We should grab that answer and write it to the user's row in the table.

For speech inputs, this is encoded in the request body as SpeechResult.

There’s also a Confidence parameter that scores the transcription’s accuracy, but we won’t be using it in this application.
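Writing the answer could look like the sketch below. It assumes tableName and recordId arrive as query parameters (hypothetical names) and that base is the configured Airtable base.

```javascript
// Writes one transcribed answer into the row created when the call started.
async function saveAnswer(base, { tableName, recordId, field, speechResult }) {
  // Nothing was asked or heard yet, so there is nothing to store.
  if (!field || !speechResult) return null;
  return base(tableName).update(recordId, { [field]: speechResult });
}

// Wiring inside POST /handle-response, where `current` is the field that was
// just asked:
// await saveAnswer(base, {
//   tableName: req.query.tableName,
//   recordId: req.query.recordId,
//   field: current,
//   speechResult: req.body.SpeechResult,
// });
```

Note that the transcription arrives as text, so this works most directly with text-typed fields.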

Spicing it up: Using ChatGPT to communicate with the user and parse responses

Our Twilio app, as it stands, sounds a bit robotic, consistently prompting users with the same line: "Please enter the value for field X." While efficient, real surveys are conducted with a human touch for better communication. Fortunately, leveraging Large Language Models (LLMs), such as ChatGPT, enables our automated survey app to mimic human-like interactions.

Moreover, we can enhance our system by not merely copying the user's exact response into our Airtable database. Instead, we can process responses as natural language, extracting precisely what we need for a production-ready table.

Generating humanized prompts

To infuse a human touch into our automated prompts, let's extract additional information from the database, specifically its schema. We'll feed this information to GPT-4 to help it communicate business goals more effectively.

Begin by installing axios, as the Airtable node module doesn't implement the Metadata API. Use the following command:
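```shell
npm install axios
```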

Now, add the following code to your project:
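A sketch of such a function, assuming the Metadata API's table-listing endpoint and the environment variable names used earlier. The http parameter defaults to axios and exists only so the function can be exercised without network access.

```javascript
// Fetches the base schema from Airtable's Metadata API and returns the field
// names of the given table, or an empty list if the table is not found.
async function getTableFieldNames(tableName, http = require("axios")) {
  const baseId = process.env.AIRTABLE_BASE_ID;
  const url = `https://api.airtable.com/v0/meta/bases/${baseId}/tables`;
  const { data } = await http.get(url, {
    headers: { Authorization: `Bearer ${process.env.AIRTABLE_KEY}` },
  });
  const table = data.tables.find((t) => t.name === tableName);
  return table ? table.fields.map((f) => f.name) : [];
}
```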

This function, getTableFieldNames, fetches the schema of the specified table from Airtable and extracts the field names.

To humanize our prompts further, we want our app to react to previous responses, saying things like "Great response." To achieve this, save the last responses in a query parameter:
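One way to carry the history, assuming a query parameter named previousResponses (a hypothetical name) that holds a JSON array:

```javascript
// Appends the latest answer to the history carried in the query string.
// Accepts the decoded JSON string, or undefined on the first question.
function appendResponse(previousParam, field, answer) {
  const history = previousParam ? JSON.parse(previousParam) : [];
  return JSON.stringify([...history, { field, answer }]);
}
```

The returned string can be placed in the next action URL with URLSearchParams, the same way as any other query parameter.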

Now, when prompting the user, you can reference previous responses for a more context-aware interaction.

Setting up OpenAI for dynamic prompts

To introduce dynamic prompts using ChatGPT, install the openai package:
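```shell
npm install openai
```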

Configure OpenAI by importing the package and authenticating with your API key (store it in the .env file as OPENAI_KEY):
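A configuration sketch, assuming version 4 or later of the openai package (earlier versions use a different client setup):

```javascript
// Load OPENAI_KEY from .env into process.env.
require("dotenv").config();
const OpenAI = require("openai");

const openai = new OpenAI({ apiKey: process.env.OPENAI_KEY });
```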

Now, utilize the following prompt template to generate a dynamic response:
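The template below is an illustrative sketch rather than the original prompt. The wording, the function names, and the choice of the gpt-4 model are assumptions; openai is a configured client from the openai package (version 4 or later).

```javascript
// Builds the chat messages that describe the surveyor's role and the field
// the caller should be asked about next.
function buildPromptMessages({ tableName, fieldNames, currentField, previousResponses }) {
  return [
    {
      role: "system",
      content:
        "You are a friendly phone surveyor. Reply with one or two short " +
        "sentences that will be read aloud to the caller.",
    },
    {
      role: "user",
      content:
        `Survey table: ${tableName}\n` +
        `All fields: ${fieldNames.join(", ")}\n` +
        `Previous answers: ${JSON.stringify(previousResponses)}\n` +
        `Briefly react to the last answer, if any, then ask for: ${currentField}`,
    },
  ];
}

// Sends the messages to the chat completions endpoint and returns the text.
async function generatePrompt(openai, context) {
  const completion = await openai.chat.completions.create({
    model: "gpt-4",
    messages: buildPromptMessages(context),
  });
  return completion.choices[0].message.content.trim();
}
```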

In this code, ChatGPT is given a user prompt with context about the surveyor's role and current form field. The generated response is then used to humanize the interaction with the user.

Reading dynamic prompts to the user

Lastly, incorporate the generated dynamic prompt into your TwiML response to be read out to the user:
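A sketch of that step, assuming promptText is the text returned by the model and actionUrl points back at /handle-response:

```javascript
// Reads the generated prompt to the caller and gathers a spoken answer.
function askWithPrompt(
  promptText,
  actionUrl,
  VoiceResponse = require("twilio").twiml.VoiceResponse
) {
  const response = new VoiceResponse();
  const gather = response.gather({
    input: "speech",
    speechTimeout: "auto",
    action: actionUrl,
    method: "POST",
  });
  gather.say(promptText);
  return response.toString();
}
```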

Now, when the user interacts with the system, they'll experience prompts that are not only context-aware but also dynamically generated, mimicking a more natural and engaging conversation.

Parsing user inputs using NLP

Users might provide responses in a conversational manner, such as saying, "My phone number is X." To extract the relevant information, we'll use ChatGPT with context about the table schema, the user's current response, and prompt it to extract the useful part:
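The extraction step might be sketched as follows. The prompt wording, function names, and model choice are assumptions, and openai is a configured client from the openai package (version 4 or later).

```javascript
// Builds the chat messages asking the model to reduce a spoken answer to the
// bare value for one field.
function buildExtractionMessages(field, fieldNames, speechResult) {
  return [
    {
      role: "system",
      content:
        "You extract form values from spoken survey answers. " +
        "Reply with the value only, without any extra words.",
    },
    {
      role: "user",
      content:
        `Table fields: ${fieldNames.join(", ")}\n` +
        `Current field: ${field}\n` +
        `Caller said: "${speechResult}"`,
    },
  ];
}

// Returns the cleaned-up value to store in Airtable.
async function extractAnswer(openai, field, fieldNames, speechResult) {
  const completion = await openai.chat.completions.create({
    model: "gpt-4",
    temperature: 0, // keep the extraction as deterministic as possible
    messages: buildExtractionMessages(field, fieldNames, speechResult),
  });
  return completion.choices[0].message.content.trim();
}
```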

This code snippet integrates ChatGPT to extract useful information from user responses, ensuring that the system understands and captures the intended data.

Trying it out: real-world example

Let's set up an Airtable database for collecting user feedback about an imaginary game developed by a firm. The fields include:

- Your name

- Your phone number

- Star rating: from one to five stars

- Explanation: describe your feedback in words

- Can we reach out to you? Yes or No

Follow the instructions in the article to call your Twilio number, input the correct survey ID, and witness the magic unfold.

Conclusion

In conclusion, our revamped Twilio-powered survey app now boasts a more engaging and human-like interaction, thanks to the integration of GPT-4. By employing dynamic prompts, our system responds contextually, creating a personalized user experience. The use of NLP enhances data extraction from user responses, contributing to a more seamless and efficient survey process. This integration showcases the potential of combining technologies to elevate automated interactions, emphasizing user engagement and adaptability.
