Build a Multilingual AI Support Agent with SendGrid and LangChain.js

Time to read: 10 minutes

Introduction

Timely and effective customer support is essential to maintaining customer satisfaction. As a business's online customer base grows and becomes more diverse, it gets harder for human customer service agents to respond to everyone promptly and effectively. This is where a multilingual AI support assistant can complement human agents.

In this guide, you will learn how to build a multilingual AI support assistant using Twilio SendGrid for email communication, LangChain.js for text processing and document analysis, and OpenAI for natural language generation. The AI assistant will handle user queries and escalate a query to a human agent when it does not know the answer.

Prerequisites

To comfortably follow through with this article, you need to have:

- A basic understanding of JavaScript.

- Node.js installed on your computer.

- A basic understanding of LangChain.js.

- A free Twilio SendGrid account.

Setting up Your Environment

Before you start writing code, you will need to prepare your environment by installing the necessary libraries. To achieve this, create a new directory in your preferred location and give it a name of your choice. Then open the directory with your preferred IDE. Once the IDE is open, proceed to the terminal and run the following command, which installs every library listed below:

npm install @langchain/core @langchain/openai @sendgrid/mail cors dotenv express axios langchain pdf-parse

Here is a breakdown of what you will use each library for:

- @langchain/core: You will use this library to create prompts, define runnable sequences, and parse output from OpenAI models.

- @langchain/openai: You will use it to interact with OpenAI's API and generate human-like email responses based on user input.

- @sendgrid/mail: You will use it to send emails generated by the app to customers.

- cors: You will use it to enable cross-origin resource sharing (CORS) in your Express.js server. This lets browsers call your API from pages hosted on a different origin.

- dotenv: You will use it to load environment variables from a .env file. The file will contain sensitive information such as API keys for Twilio SendGrid and OpenAI.

- express: You will use it to create a server for handling HTTP requests from clients and serving your AI support assistant.

- axios: You will use it for making HTTP requests to your app’s API endpoint when demonstrating a practical use case.

- langchain: You will use it to preprocess and analyze support documents in multiple languages.

- pdf-parse: You will use it to extract text content from PDF files for further processing.

Once the libraries are installed, your environment is set up and you are ready to start coding. In your project’s root directory, create a new file and name it assistant-core.mjs. The .mjs extension tells Node.js to treat the file as an ECMAScript module, which lets you use import and export statements and arrange your code into reusable modules. Then, proceed to import the required libraries.

Importing the Required Modules

To use the functions and methods provided by the libraries you installed, you need to import the necessary modules into your code.

The above code imports the modules needed to create your support assistant. What each imported module is used for will become clear as you move on with the tutorial.

Configuring the Environment Variables

After importing the necessary modules, you need to configure the Twilio SendGrid and OpenAI variables. These environment variables store the API keys for both services, because you need to authenticate before you can use them.

To achieve this, create a file in your project’s root directory and name it .env. Then proceed to the OpenAI website and obtain an API key if you don’t have one. Also, proceed to the Twilio SendGrid website, create an account, and obtain your SendGrid API key. The SendGrid free tier allows you to send up to 100 free emails daily.

Now that you have both API keys, open your .env file and store them in the following format:

Then, load the environment variables into your code.
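As a hedged sketch of what this step protects against, the check below assumes the .env file defines one variable per service, named OPENAI_API_KEY and SENDGRID_API_KEY. Both names and the requireEnv helper are assumptions made for illustration, not the tutorial's own listing. In the real app, dotenv would populate process.env before this check runs.

```javascript
// Hypothetical helper: verify that the expected keys exist before using them.
// The variable names below are assumptions, not confirmed by the tutorial.
const REQUIRED_KEYS = ["OPENAI_API_KEY", "SENDGRID_API_KEY"];

function requireEnv(env = process.env, keys = REQUIRED_KEYS) {
  // Treat undefined and blank values the same way: as missing.
  const missing = keys.filter((key) => !env[key] || env[key].trim() === "");
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  // Return only the keys the app needs, so callers do not read process.env directly.
  return Object.fromEntries(keys.map((key) => [key, env[key]]));
}
```

Failing fast at startup like this tends to give a clearer error than a rejected API call later in the request flow.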

Building the AI Assistant

Since you have imported the necessary libraries and configured the environmental variables, it’s time to create the logic the AI support assistant will follow.

Loading and Preprocessing Documents

Start by defining a function that will load the documents that will act as the knowledge base for the assistant.

The function reads and splits documents in PDF or TXT format. It uses the PDFLoader and TextLoader classes, respectively, to load the documents from a specified folder. If your documents are in another format, consider using online tools to convert them to PDF or TXT first. It then splits the loaded documents into chunks. Splitting the documents makes them more manageable and allows for faster and more efficient processing.

The function uses the RecursiveCharacterTextSplitter because it prioritizes keeping the meaning and context within a document. It works by trying a list of separators in order, starting with paragraph breaks, then line breaks, then spaces, and only falling back to a finer separator when a piece is still larger than the chunk size. This keeps paragraphs and sentences together as much as possible and avoids splitting words in half.
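To make the idea concrete, here is a simplified, dependency-free sketch of recursive character splitting. It is not LangChain's implementation: it ignores chunk overlap, and the recursiveSplit name and its parameters are hypothetical. It only illustrates the "try coarse separators first, then finer ones" strategy.

```javascript
// Simplified illustration of recursive character splitting (no chunk overlap).
function recursiveSplit(text, chunkSize, separators = ["\n\n", "\n", " ", ""]) {
  if (text.length <= chunkSize) return text.length > 0 ? [text] : [];

  const [separator, ...finerSeparators] = separators;

  // Last resort: no separator left, so cut at fixed character positions.
  if (separator === "" || separator === undefined) {
    const pieces = [];
    for (let i = 0; i < text.length; i += chunkSize) {
      pieces.push(text.slice(i, i + chunkSize));
    }
    return pieces;
  }

  const chunks = [];
  let current = "";
  for (const part of text.split(separator)) {
    const candidate = current === "" ? part : current + separator + part;
    if (candidate.length <= chunkSize) {
      current = candidate; // the part still fits, keep merging
      continue;
    }
    if (current !== "") chunks.push(current);
    if (part.length <= chunkSize) {
      current = part; // start a new chunk with this part
    } else {
      // The part alone is too big: split it again with finer separators.
      chunks.push(...recursiveSplit(part, chunkSize, finerSeparators));
      current = "";
    }
  }
  if (current !== "") chunks.push(current);
  return chunks;
}
```

With a chunk size of 40, a short paragraph stays whole, while a longer paragraph is broken at word boundaries instead of in the middle of a word.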

Initializing the Vector Store with Documents

After splitting the documents, the next step involves generating the document embeddings and storing them in a vector store. A vector store allows for easy storing and retrieval of semantically related embeddings. Before you use the vector store for retrieval, you need to initialize it with documents.

The function above takes a list of preprocessed documents as input. It then initializes a vector store that uses OpenAI embeddings to represent the documents. Embeddings are numerical vector representations of text: the closer two embedding vectors are to each other, the more semantically related the underlying documents are. The function uses the MemoryVectorStore class from LangChain.js to create an in-memory vector store. You will later perform retrieval operations on this vector store.
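The notion of "closeness" between embeddings can be sketched with cosine similarity, a common similarity measure for embedding vectors. The example below is an illustration only: the two-dimensional vectors are made up for readability (real embeddings have many more dimensions), and the cosineSimilarity and topKSimilar helpers are hypothetical names, not part of LangChain.js.

```javascript
// Cosine similarity: 1 means same direction, 0 means unrelated (orthogonal).
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Toy in-memory search: score every stored entry and keep the k best matches.
function topKSimilar(queryVector, entries, k) {
  return entries
    .map((entry) => ({ ...entry, score: cosineSimilarity(queryVector, entry.vector) }))
    .sort((left, right) => right.score - left.score)
    .slice(0, k);
}
```

An in-memory store that scans every entry like this is fine for a small knowledge base, which is why it suits a tutorial-sized project.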

Retrieving Related Documents From the Vector Store

After storing the documents and their embeddings in the vector store, you need a way to retrieve the documents relevant to a user query.

Start by creating a rephraseQuestionChainPrompt function. The function takes a user's question or issue description and uses GPT-3.5 Turbo to rephrase it into a clear, concise, standalone version. This helps standardize the format of questions and instructions, making them easier to process and understand.

The function starts by specifying the system's objective and instructing the LLM to rephrase the provided question or instruction. It then passes the LLM’s response through a StringOutputParser, which extracts the text of the response as a plain string. As a result, the next step receives the standalone question directly instead of a chat message object.

Since you now have the standalone question, use it to retrieve related documents from the vector store.

The function creates a runnable sequence that takes the standalone question as input and uses the specified retriever to fetch related documents. It then joins the text of the retrieved chunks into a single string, so that it can be inserted into the prompt as context.
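The conversion step could look roughly like the sketch below. It assumes each retrieved chunk exposes its text as pageContent, as LangChain Document objects do. The convertDocsToString name and the doc tag format are illustrative choices, not necessarily the ones used in the original code.

```javascript
// Join the text of retrieved chunks into one context string for the prompt.
function convertDocsToString(documents) {
  return documents
    // Wrap each chunk in numbered tags so the model can tell the chunks apart.
    .map((doc, index) => `<doc id="${index + 1}">\n${doc.pageContent}\n</doc>`)
    .join("\n");
}
```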

Creating the Answer Generation Chain and Making the Assistant Multilingual

At this point, you have retrieved the documents related to a user query. You need a way to pass them to GPT-3.5 Turbo so that the LLM can use them as context for answering the query.

Start by creating the prompt template that will contain the relevant documents as the context to a user query. It should also contain the instructions the LLM will follow while giving the response. This includes passing the user’s preferred language.

In the prompt instructions, tell GPT-3.5 Turbo to converse and write the email response in the user’s selected language. This enables your support assistant to respond in any language supported by the LLM, making it multilingual.

Then, create the answerGenerationChainPrompt that incorporates the system template. It should then prompt the language model to answer the user's standalone question based on the provided context.
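As a rough illustration of how the context and the preferred language end up in the prompt, the sketch below fills placeholders in a template string. The template wording, the ANSWER_SYSTEM_TEMPLATE constant, and the fillTemplate helper are all hypothetical. In the actual app, a LangChain.js prompt template would play this role. The fallback sentence is the one this tutorial checks for when deciding whether to escalate.

```javascript
// Illustrative system template; the exact wording is an assumption.
const ANSWER_SYSTEM_TEMPLATE = [
  "You are a customer support assistant who writes email replies.",
  "Answer using only the context between the <context> tags.",
  "Write the entire reply in this language: {language}.",
  "If the context does not contain the answer, reply exactly with:",
  "I don't have that information.",
  "<context>",
  "{context}",
  "</context>",
].join("\n");

// Replace every {name} placeholder with the matching value.
function fillTemplate(template, values) {
  return template.replace(/\{(\w+)\}/g, (match, name) => {
    if (!(name in values)) throw new Error(`Missing template value: ${name}`);
    return String(values[name]);
  });
}
```

Because the language is just another placeholder, supporting a new language requires no code change, only a different value in the request.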

You now have all the functions needed to use Retrieval Augmented Generation and GPT-3.5 Turbo to create an email response to a user query using your knowledge base. The next step is to define how your app will use these functions. You achieve this using a conversational retrieval chain.

Creating the Conversational Retrieval Chain

Create a function that will provide the logic flow of your app.

The function combines the rephrase question chain, document retrieval chain, and answer generation chain. It first rephrases the user's question to make it standalone, then retrieves relevant documents from the vector store, and finally generates an answer based on the provided context and the standalone question.
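The flow described above can be sketched without any library as three async steps run in order. This is a stand-in for the real chain, not the tutorial's implementation: createSupportChain and the rephrase, retrieve, and answer parameters are hypothetical, and in the actual app each step would wrap an LLM call or a vector store lookup.

```javascript
// Hypothetical stand-in for the conversational retrieval chain.
function createSupportChain({ rephrase, retrieve, answer }) {
  return async function invoke({ question, language }) {
    // 1. Turn the raw input into a standalone question.
    const standaloneQuestion = await rephrase(question);
    // 2. Fetch context relevant to the standalone question.
    const context = await retrieve(standaloneQuestion);
    // 3. Generate the reply from the question, the context, and the language.
    return answer({ standaloneQuestion, context, language });
  };
}
```

Keeping the steps as injected functions makes it possible to test the flow with simple stubs before wiring in the model and the retriever.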

Sending Email Response Using SendGrid

Having generated an email with an answer to the user's question or issue description, you need a way to respond to the user. Here is where Twilio SendGrid comes in. It allows you to send emails programmatically with minimal code.

The function uses the SendGrid API key to authenticate the app with the SendGrid API. It then extracts the content element from the LLM's response, which is the element that contains the actual email text. Finally, it sends the email via SendGrid to the address submitted by the user. Don’t forget to replace the sender's email with your own.
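The message-building part of that function might look like the sketch below. It only constructs the message object, which the app would then pass to sgMail.send after calling sgMail.setApiKey. The buildSupportEmail name, its parameters, and the subject line are assumptions made for illustration.

```javascript
// Build the message object that would be handed to sgMail.send().
function buildSupportEmail({ customerEmail, senderEmail, llmResponse }) {
  // Chat model responses carry the generated text in `content`;
  // accept a plain string as well, in case an output parser was applied.
  const body = typeof llmResponse === "string" ? llmResponse : llmResponse.content;
  return {
    to: customerEmail,
    from: senderEmail, // must be a sender identity verified in SendGrid
    subject: "Response to your support request", // assumed subject line
    text: body,
  };
}
```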

Handling Support Request

Create a main function that handles a support request. It should take the customer's email, the issue description or question, and the preferred language as input. The language can be any language the OpenAI model supports, given either as its full name or as its ISO 639 language code. For example, a user can enter English or en to receive their support email in English. Also, create a docs folder at the root of your project and place your documents there. This tutorial uses the HP Troubleshooting Guide to test the app later. Download it and place the PDF file in the docs folder you just created.

The function loads and splits the documents from the ./docs folder using the loadAndSplitChunks function. It then initializes the vector store with the split documents using initializeVectorstoreWithDocuments. Then, it creates a retriever from the vector store using vectorstore.asRetriever(). It continues to create the conversational retrieval chain using createConversationalRetrievalChain with the retriever.

It then invokes the conversational retrieval chain with the issue description, the selected document content, and the language. If the response is "I don't have that information.", it escalates the issue to a human-monitored email by calling sendEmailToHumanSupport. Otherwise, it sends the email response to the customer using sendEmailResponse.
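The branching at the end of the function boils down to one comparison, sketched below. The chooseRoute helper and the "escalate" and "reply" labels are hypothetical. The fallback sentence is the one quoted above.

```javascript
const FALLBACK_ANSWER = "I don't have that information.";

// Decide whether the generated answer goes to the customer or to a human.
function chooseRoute(answerText) {
  // Trim first so a trailing newline from the model does not defeat the match.
  return answerText.trim() === FALLBACK_ANSWER ? "escalate" : "reply";
}
```

An exact string match only works if the prompt tells the model to return the fallback sentence verbatim, so the prompt wording and this constant need to stay in sync.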

Handling Scenarios with Unknown Answers

Sometimes the AI will not find an answer to a user query. In this case, you need to forward the issue to a human-monitored email.

The function is used by the handleSupportRequest function to forward the issue to a human assistant. It does this by using Twilio SendGrid to send the issue to an email monitored by a human.

You have completed developing the core functionality of the AI support assistant.

Serving Your App Through Express

The next step is to make the app available to users. You can achieve this by creating an Express server that serves the app. Create a file named server.js in the root directory of your app and paste the following server code.

The server code sets up an Express.js server that serves static files, handles POST requests to the /support endpoint by invoking the handleSupportRequest function from ./assistant-core.mjs, and provides a global error handler. It also sets up middleware for CORS and parsing JSON request payloads.

To start the server, run node server.js in the terminal.

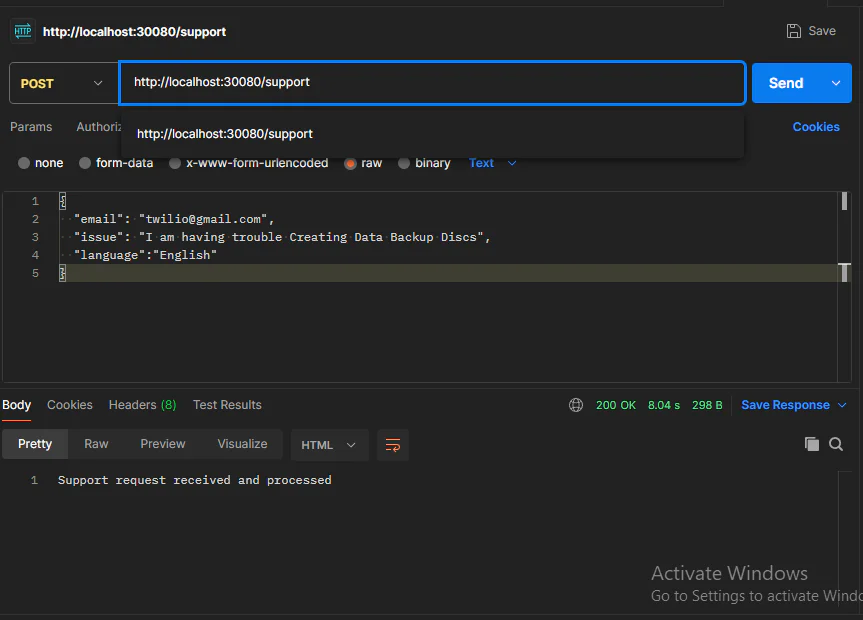

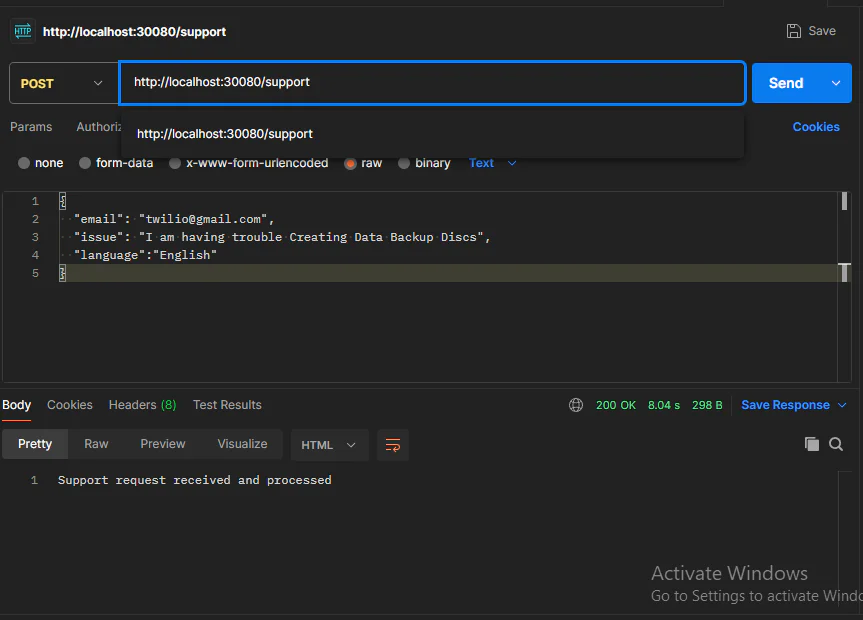

Then, proceed to Postman and make a POST request to the server using this payload structure.

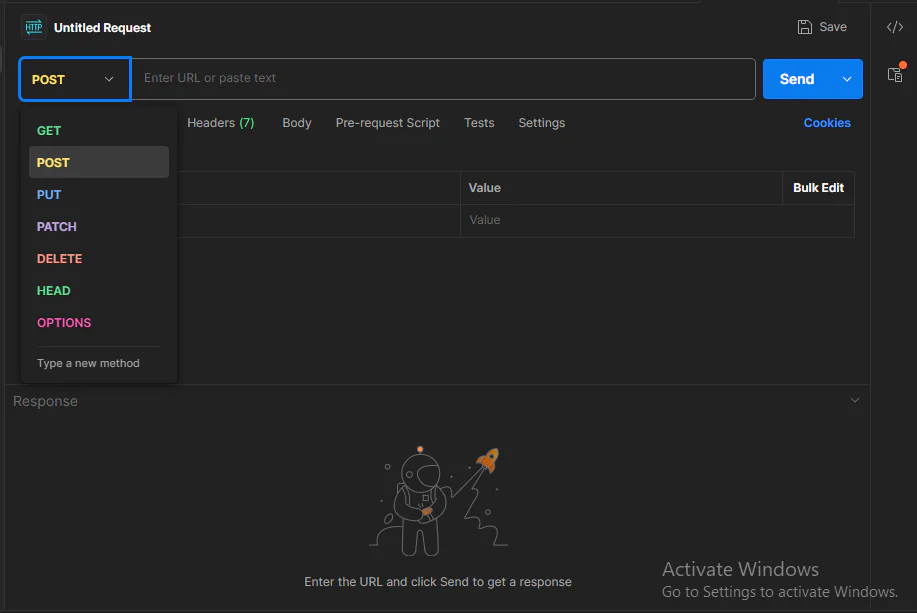

Start by selecting the POST request option in Postman. This indicates you want to send data to your Express server.

Then, enter the URL of your /support endpoint, which will be http://localhost:30080/support. Afterward, proceed to the Body section, select the raw option, and pass the payload from above. Remember to use a real email and fill out the issue and language fields. Navigate to the Headers tab and set the Content-Type to application/json so that your Express application is able to read the incoming raw body.
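Since the request body consists of the email, issue, and language fields, the server could check it before starting any LLM work. The sketch below is one possible check, not part of the original server code: the validateSupportPayload name is hypothetical, and the email pattern is a deliberately loose sanity check, not a full address validator.

```javascript
// Example payload: { "email": "customer@example.com", "issue": "...", "language": "en" }
function validateSupportPayload(body) {
  const errors = [];
  const { email, issue, language } = body ?? {};
  // Loose shape check: something@something.something, with no spaces.
  if (typeof email !== "string" || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) {
    errors.push("email must be a valid address");
  }
  if (typeof issue !== "string" || issue.trim() === "") {
    errors.push("issue must be a non-empty string");
  }
  if (typeof language !== "string" || language.trim() === "") {
    errors.push("language must be a non-empty string");
  }
  return { valid: errors.length === 0, errors };
}
```

Rejecting a malformed payload early avoids spending an OpenAI request and a SendGrid email on input that cannot produce a useful reply.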

When you submit the request, the AI assistant will email the user an answer to their question or a guide to their issue description.

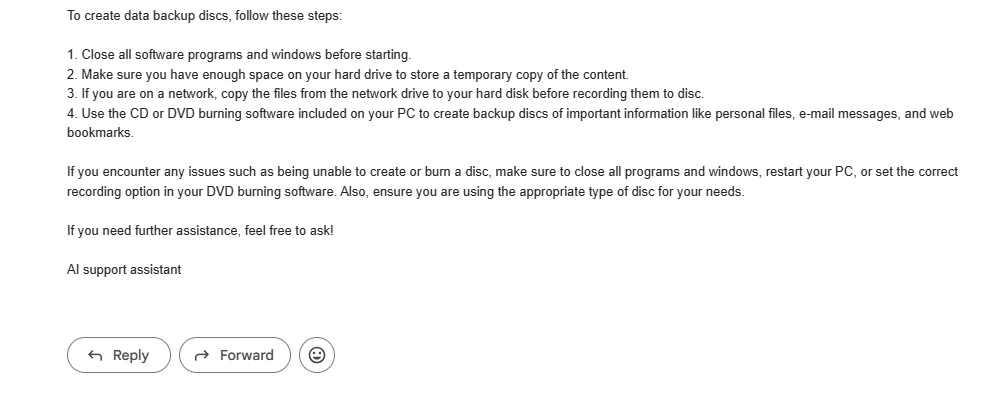

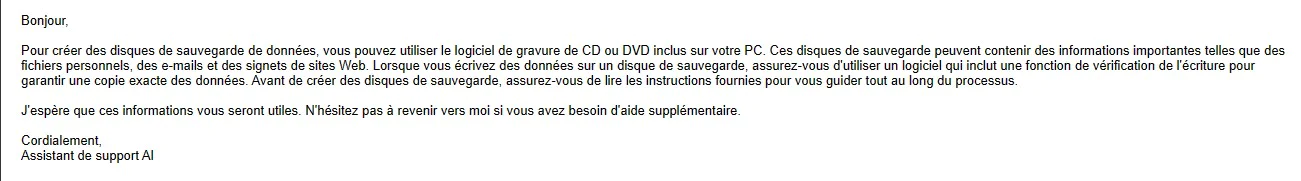

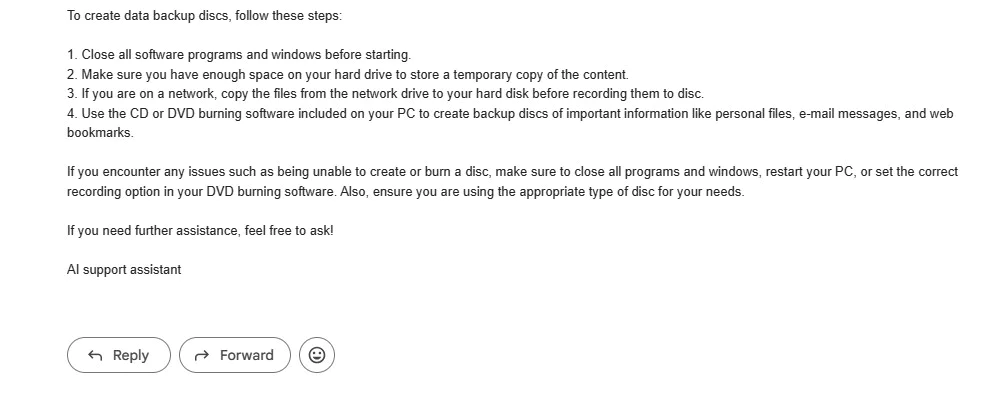

Here is a screenshot of a sample email sent when the issue "I am having trouble Creating Data Backup Discs" is submitted and the HP troubleshooting guide is passed to the assistant.

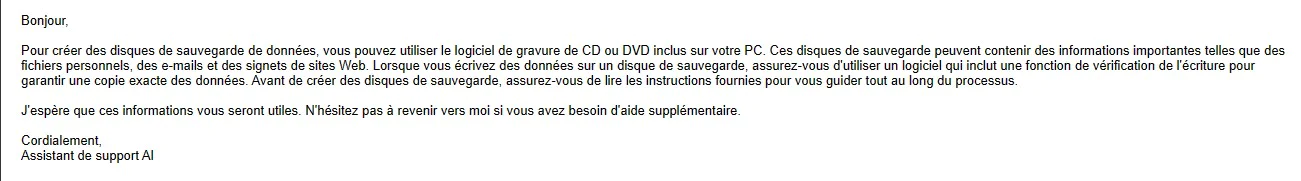

When the language is set to French in the payload, the response from the app is as shown below.

When asked "How do I reach HP headquarters", the assistant escalated the issue and forwarded it to the human assistant.

The results show you that the multilingual support assistant is working as expected.

Creating a Frontend Form

The final step in creating a multilingual support assistant is creating a simple user interface. Proceed to the root directory of your project and create a folder named build. Then, create a file named index.html inside the folder. The server will serve this file at the default route. Paste the following code into the index.html file.

The code renders a simple web page with a form for submitting support requests. The form includes input fields for the user's email, issue description, and preferred language. When the user submits the form, JavaScript code is executed to prevent the default form submission behavior, gather the form data, and send a POST request to the server endpoint /support.
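The request-building part of the submit handler can be sketched as a small pure function. The buildSupportRequest name is hypothetical, and the original page may gather and send the data differently. The endpoint, HTTP method, content type, and field names come from this tutorial.

```javascript
// Turn the form values into the arguments for a fetch() call.
function buildSupportRequest(formValues) {
  const { email, issue, language } = formValues;
  return {
    url: "/support",
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ email, issue, language }),
    },
  };
}

// In the page's submit handler, after calling event.preventDefault():
//   const { url, options } = buildSupportRequest(values);
//   fetch(url, options);
```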

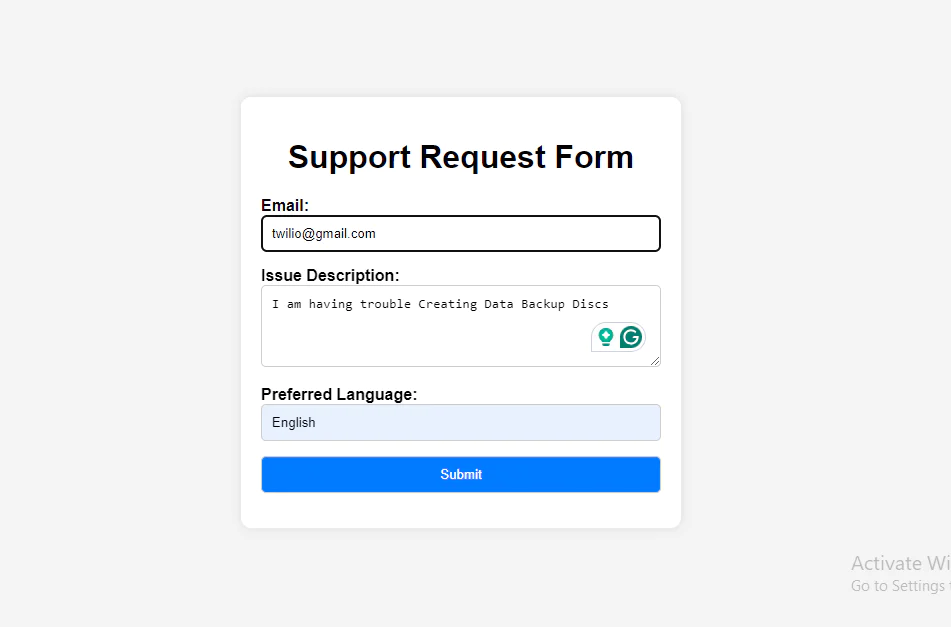

The user interface looks like this:

If you do not want to use Postman to interact with the assistant, you can use the above form.

Testing the App

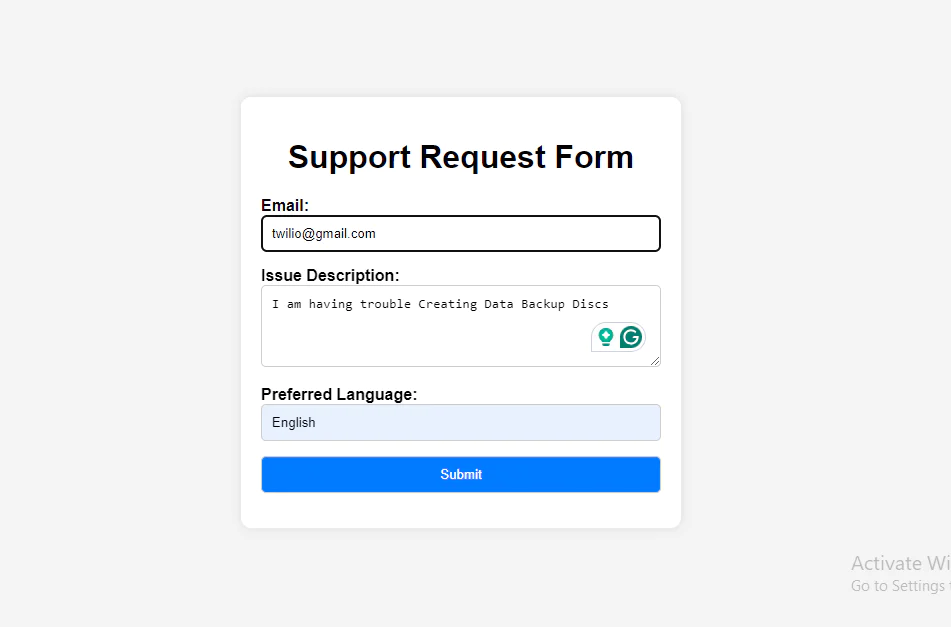

Proceed to the http://localhost:30080/ URL in your browser to access the user interface. Then fill in the form fields and click submit.

When you click submit, the assistant will process your query and send an email response. Here is a screenshot of the received email for the issue "I am having trouble Creating Data Backup Discs".

The email received is identical to the one you got through Postman for the same query. From the email contents, it is evident the assistant understood the issue and provided a correct response.

Conclusion

You have learned how to create a multilingual AI support assistant using Twilio SendGrid, OpenAI, and LangChain.js. Combining artificial intelligence with Twilio products opens up many possibilities for the AI products you can create. As a next step, consider extending the assistant to use Twilio Programmable Voice to call an agent when an issue is escalated, in addition to sending the email.

Happy coding!

Denis works as a machine learning engineer who enjoys writing guides to help other developers. He has a bachelor's in computer science. He loves hiking and exploring the world.

https://www.linkedin.com/in/denis-kuria-869769218/
