Build an Image Editing WhatsApp Chatbot with Twilio and AI

In this tutorial, you will learn how to build an AI-powered WhatsApp chatbot capable of removing and replacing backgrounds in images.

To build this application, you will use the Twilio Programmable Messaging API for sending and receiving WhatsApp messages. You will use the Transformers.js library to load pre-trained models capable of classifying intents in messages and removing backgrounds in images. You will also use the Sharp module to perform the image manipulation tasks that come with background removal and replacement.

The Twilio Programmable Messaging API is a service that allows developers to programmatically send and receive SMS, MMS, and WhatsApp messages from their applications.

The Transformers.js library is designed to mirror the functionality of Hugging Face's transformers Python library, allowing you to run the same pre-trained models with a highly similar API.

The Sharp module is a high-performance image processing library for Node.js, enabling developers to perform image manipulation tasks such as resizing, cropping, and format conversions with ease.

By the end of this tutorial, you will have a chatbot that allows you to remove and replace the background of any given image:

Tutorial Requirements

To follow this tutorial, you will need the following components:

- Node.js (v18.18.1+) and npm installed.

- Ngrok installed and the auth token set.

- A free Twilio account.

- A free Ngrok account.

Setting up the environment

In this section, you will create the project directory, initialize a Node.js application, and install the required packages.

Open a terminal window and navigate to a suitable location for your project. Run the following commands to create the project directory and navigate into it:

Use the following command to create a directory named images, where the chatbot will store the images that will be edited:

Run the following command to create a new Node.js project:

Now, use the following command to install the packages needed to build this application:

With the command above you installed the following packages:

- express: a minimal and flexible Node.js back-end web application framework that simplifies the creation of web applications and APIs. It will be used to serve the Twilio WhatsApp chatbot.

- body-parser: an Express body-parsing middleware. It will be used to parse the URL-encoded request bodies sent to the Express application.

- dotenv: a Node.js package that allows you to load environment variables from a .env file into `process.env`. It will be used to retrieve the Twilio API credentials that you will soon store in a .env file.

- node-dev: a development tool for Node.js that automatically restarts the server upon detecting changes in the source code.

- twilio: a package that allows you to interact with the Twilio API. It will be used to send WhatsApp messages to the user.

- @xenova/transformers: a library that allows you to use pre-trained transformer models. It will be used to load and apply models for user intent classification and image background removal.

- sharp: a high-performance image processing library for Node.js. It will be used for resizing, manipulating, and converting images in various formats.

Collecting and storing your credentials

In this section, you will collect and store your Twilio credentials that will allow you to interact with the Twilio API.

Open a new browser tab and log in to your Twilio Console. Once you are on the console, copy the Account SID and Auth Token. Then create a new file named .env in your project’s root directory and store these credentials in it:

Server Setup

In this section, you will set up and expose an Express server to handle incoming WhatsApp messages, process image uploads, and manage AI-driven image processing tasks.

In the project’s root directory, create a file named server.js and add the following code to it:

The code begins by importing necessary modules: express for creating the server, bodyParser for parsing request bodies, and twilio for interacting with the Twilio API. Additionally, it loads environment variables using dotenv/config.

Next, it initializes an Express application and sets the port to 3000. The Twilio account SID, auth token, and ngrok base URL are retrieved from environment variables, and a Twilio client is created using the account SID and auth token. After creating the Twilio client, the code declares variables to store the detected user intent and image URLs.

The code then sets up middleware to parse JSON and URL-encoded request bodies and to serve static files from the 'images' directory. Finally, the server is set to listen on port 3000, and a log message confirms that the Express server is running and ready to handle requests.
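The configuration step described above can be sketched without any dependencies. This is a minimal illustration, not the tutorial's server code: the helper name loadConfig() and the variable names TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN are assumptions, and only NGROK_BASE_URL is named in this tutorial.

```javascript
// Hypothetical helper: reads the settings the server needs from an
// environment-like object and fails fast when one of them is missing.
// TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN are assumed variable names.
function loadConfig(env) {
  const required = ["TWILIO_ACCOUNT_SID", "TWILIO_AUTH_TOKEN", "NGROK_BASE_URL"];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    accountSid: env.TWILIO_ACCOUNT_SID,
    authToken: env.TWILIO_AUTH_TOKEN,
    // Strip trailing slashes so URLs can later be built consistently.
    ngrokBaseUrl: env.NGROK_BASE_URL.replace(/\/+$/, ""),
    port: 3000,
  };
}
```

In a real server you would likely call it as loadConfig(process.env) once the .env file has been loaded, so that a missing value is reported at startup rather than on the first incoming message.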

Go back to your terminal, open a new tab, and run the following command to expose the application:

Copy the https Forwarding URL provided by Ngrok. Go back to your .env file and store the URL as the value for NGROK_BASE_URL:

You will use this NGROK_BASE_URL not only to expose your WhatsApp chatbot application but also to send the edited images back to the user, because the Twilio API requires a publicly accessible URL for sending media files.

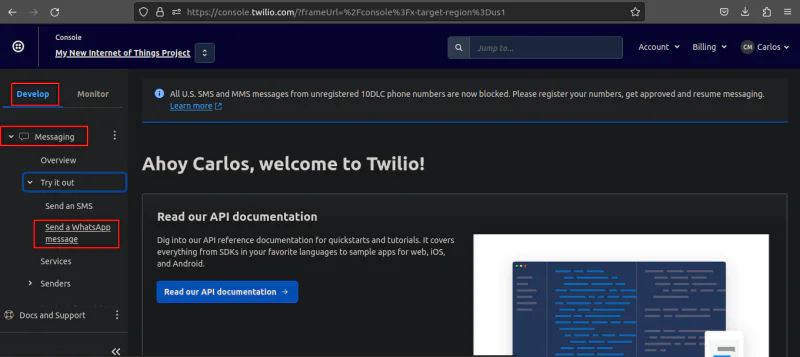

Go back to your Twilio Console main page, click on the Develop tab button, click on Messaging, click on Try it out, then click on Send a WhatsApp message to navigate to the WhatsApp Sandbox page.

Once you are on the Sandbox page, scroll down and follow the instructions to connect to the Twilio sandbox. The instructions will ask you to send a specific message to a Twilio Sandbox WhatsApp Number.

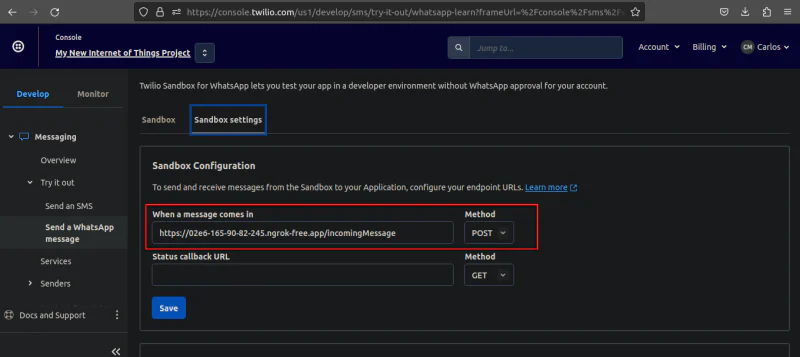

After following the connect instructions, scroll back up and click on the Sandbox settings button to navigate to the WhatsApp Sandbox settings page. Once there, paste the Ngrok https URL followed by /incomingMessage in the “When a message comes in” field, set the method to POST, and click on the Save button. Your WhatsApp bot should now be able to receive messages. Ensure your URL looks like the one below:

Open the package.json file in your project root directory and edit it so that the type and scripts properties look like the following:

Here, you set the "type" to "module", which tells Node.js to treat the code as an ES module, allowing you to use import/export syntax. Additionally, you added a start script that, when called, will use the node-dev module to start the server.

Run the following command to start the server and then proceed to the next section:

Handling incoming messages

In this section, you will configure the AI manager, and add the function responsible for classifying the intent in incoming messages. Then, you will integrate this manager with your server.js file to handle WhatsApp messages and send appropriate responses.

Create a file named AIManager.js in your project root directory, and add the following line of code to it:

This line of code imports several modules from the Transformers.js package and Sharp package. The AutoModel, AutoProcessor, pipeline, and RawImage will be used to load pre-trained models, pre-process image inputs, run inference, and convert images sent by the user to their raw pixel form respectively. The sharp module will be used to perform image manipulation.

Add the following code to the bottom of the AIManager.js file:

These lines declare three variables, classifierModel, bgRemovalModel, and bgRemovalProcessor, and a function named progressCallback() that logs model loading progress. The classifierModel variable will store the pipeline object of the model that determines the intent in incoming messages, while bgRemovalModel and bgRemovalProcessor will store the model and processor objects used to remove image backgrounds.
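A progress logger of this kind might look like the sketch below. It assumes the loading callback receives an object with status, file, and progress fields (progress as a percentage); that shape and the helper formatProgress() are assumptions made for illustration, so check them against the events your version of the library actually emits.

```javascript
// Hypothetical formatter for model loading events.
// Assumed event shape: { status, file, progress } with progress in percent.
function formatProgress(event) {
  if (event.status === "progress" && typeof event.progress === "number") {
    return `${event.file}: ${event.progress.toFixed(1)}%`;
  }
  // Other statuses are logged as-is.
  return `${event.file ?? "model"}: ${event.status}`;
}

// Logs one line per loading event.
function progressCallback(event) {
  console.log(formatProgress(event));
}
```

Keeping the formatting in its own function makes the output easy to check without loading a model.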

Add the following code below the progressCallback() function:

This code declares a function named getIntent(), which determines the intent behind a given message.

Within this function, the code first checks if the classifier model has been loaded. If it hasn’t, the code loads a pre-trained model named Xenova/mobilebert-uncased-mnli for zero-shot classification and passes the progressCallback() function as an argument to log the model loading progress.

Next, it uses this model to classify the message into one of three categories: greet, remove, or replace, and then returns the predicted intent.

Zero-shot classification is a machine learning technique in which a model assigns inputs to classes it was never explicitly trained on. Using it allows the chatbot to understand user intents without hard-coded commands, which makes interactions more natural because users don't need to memorize chatbot commands.
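The final step of getIntent(), turning the classifier output into a single intent, can be sketched as follows. The sketch assumes the classifier returns an object with parallel labels and scores arrays; the helper name pickIntent() is hypothetical.

```javascript
// Hypothetical helper: returns the label with the highest score.
// Assumed result shape: { labels: string[], scores: number[] }.
function pickIntent(result) {
  let best = 0;
  for (let i = 1; i < result.scores.length; i++) {
    if (result.scores[i] > result.scores[best]) {
      best = i;
    }
  }
  return result.labels[best];
}
```

Scanning for the maximum, rather than taking the first label, keeps the helper correct even if the results are not sorted by score.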

Go to your server.js file and add the following line of code at the very top to import the getIntent() function:

Add the following code below the middleware setup block:

The code declares a function named sendMessage() that takes four parameters: the message body, sender, recipient, and any associated media URL. Within this function, the code creates a payload object based on whether a media URL is provided and then sends the message using the Twilio client's messages.create() method.
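The payload logic described above can be illustrated on its own. This is a minimal sketch; the helper name buildPayload() is hypothetical, while body, from, to, and mediaUrl are the fields accepted by messages.create().

```javascript
// Hypothetical helper: builds the object passed to messages.create().
// mediaUrl is only included when a media URL was provided, and Twilio
// expects it as an array of URLs.
function buildPayload(body, from, to, mediaUrl) {
  const payload = { body, from, to };
  if (mediaUrl) {
    payload.mediaUrl = [mediaUrl];
  }
  return payload;
}
```

A sendMessage() function could then pass the result of buildPayload() straight to the Twilio client.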

Add the following code below the sendMessage() function:

Here, the code declares a function named handleIncomingMessage(), responsible for processing incoming messages. It takes a request object as its parameter.

Inside the function, it extracts the recipient, body, and sender from the request body. It then initializes an empty message and outputImageURL variable.

The function then calls the getIntent function to determine the intent of the incoming message based on its body. Depending on the intent (remove, replace, or greet), the function sets the message variable to an appropriate response message.

Finally, the function returns an object containing the message and outputImageURL, which will be used to send a response back to the user.
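The mapping from intent to response can be sketched as a lookup table. The response texts and the helper name buildResponse() are placeholders made up for this illustration, not the wording used by the chatbot in this tutorial.

```javascript
// Placeholder response texts, one per intent (assumed wording).
const RESPONSES = {
  greet: "Hello! Send me an image and tell me what to do with its background.",
  remove: "Removing the background from your image.",
  replace: "Please send the new background image.",
};

// Hypothetical helper: returns the object shape described above.
// Unknown intents fall back to the greeting.
function buildResponse(intent) {
  const known = Object.hasOwn(RESPONSES, intent);
  return {
    message: known ? RESPONSES[intent] : RESPONSES.greet,
    outputImageURL: "",
  };
}
```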

Add the following code below the handleIncomingMessage() function:

This code sets up an Express.js route at '/incomingMessage' to handle incoming HTTP POST requests. This route is responsible for receiving the WhatsApp messages sent by the user, distinguishing between text messages and media uploads, and generating appropriate response messages.

First, the code extracts the message details like recipient, body, sender, and media URL from the request.

If there's no media URL, it processes the message using the handleIncomingMessage() function, sets the response message based on the intent, and sends it back to the user using the sendMessage() function.

If there's a media URL, it checks the intent to determine the response. If the intent is not replace, the code informs the user that the image has been received and prompts them for further action. If the intent is replace, the code asks the user to wait while the image background is being replaced.
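The branching described above can be sketched with two small functions. To, From, Body, and MediaUrl0 are the parameter names Twilio posts to a messaging webhook; the helper names extractMessage() and chooseBranch() and the branch labels are hypothetical.

```javascript
// Maps the form fields Twilio posts to the webhook onto short names.
function extractMessage(requestBody) {
  return {
    to: requestBody.To,
    from: requestBody.From,
    body: requestBody.Body ?? "",
    mediaURL: requestBody.MediaUrl0 ?? null,
  };
}

// Hypothetical helper: decides which branch of the route should run.
// "text": no media, classify the message body.
// "new-image": media received, wait for the user's instruction.
// "new-background": media received while the intent is replace.
function chooseBranch(message, currentIntent) {
  if (!message.mediaURL) {
    return "text";
  }
  return currentIntent === "replace" ? "new-background" : "new-image";
}
```

When replying, the roles swap: the response is sent from the number in To back to the number in From.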

Go back to your preferred WhatsApp client, send a greeting message to the chatbot, wait for a response, and then send separate messages requesting the chatbot to remove and replace a background.

Implementing the background removal feature

In this section, you will enhance your AI manager by adding a function to remove the background from an image provided via a WhatsApp media URL. Next, you will integrate this functionality into your server to notify users of the background removal process and provide a URL to the modified image.

Go back to the AIManager.js file and add the following code to the bottom:

This code defines an asynchronous function named loadBGRemovalModel, which loads the background removal model and its processor.

It first checks if the background removal model hasn’t been loaded. If so, it loads a model named briaai/RMBG-1.4 using the AutoModel.from_pretrained() method with custom configurations and a progress callback. Next, it loads the model’s processor with the AutoProcessor.from_pretrained() method, setting parameters like normalization, resizing, and image properties.

Add the following code below the loadBGRemovalModel() function:

The code defines a function named removeBackground(), responsible for removing the background from an image provided via a URL.

The code begins by loading the background removal model and its processor, then fetches the image from the provided URL.

The image is preprocessed to obtain pixel values, which the model uses to separate the foreground from the background and generate an alpha matte, a mask that marks how much of each pixel belongs to the foreground. This mask is then resized to match the original image's dimensions.

Add the following code below the mask constant:

The code added begins by extracting raw pixel data from both the image and the mask.

Next, it creates a new RGBA image data array, copying the RGB values from the original image and setting the alpha values from the mask. Using Sharp, the code creates a new image from this RGBA data array.

Finally, the code saves the new image as a PNG, with the background removed, in the images directory as a file named bg-removed.png.
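The step that merges the image with the mask can be sketched in plain JavaScript. This is a minimal illustration with a hypothetical helper named applyAlphaMask(); it assumes the image data is stored pixel by pixel with `channels` values per pixel and the mask holds one value per pixel.

```javascript
// Hypothetical helper: combines RGB(A) pixel data with a one-channel mask
// into RGBA data. The mask value becomes the alpha of each pixel.
function applyAlphaMask(imageData, maskData, width, height, channels = 3) {
  const pixelCount = width * height;
  if (maskData.length !== pixelCount) {
    throw new RangeError("Mask size does not match the image dimensions");
  }
  if (imageData.length !== pixelCount * channels) {
    throw new RangeError("Image data size does not match the image dimensions");
  }
  const rgba = new Uint8ClampedArray(pixelCount * 4);
  for (let i = 0; i < pixelCount; i++) {
    rgba[i * 4] = imageData[i * channels]; // red
    rgba[i * 4 + 1] = imageData[i * channels + 1]; // green
    rgba[i * 4 + 2] = imageData[i * channels + 2]; // blue
    rgba[i * 4 + 3] = maskData[i]; // alpha taken from the mask
  }
  return rgba;
}
```

An array of this shape can be handed to Sharp as raw input by describing its width, height, and four channels, after which it can be written out as a PNG.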

Now, go to the server.js file and import the removeBackground() function:

Next, navigate to the handleIncomingMessage() function and replace the code inside the remove intent block with the following:

In this updated remove intent block, the code first sends a notification to the user indicating that the background removal process is in progress.

Next, it calls the removeBackground() function to remove the background from the image and generates a URL for the modified image.

Lastly, the modified image’s URL is used to update the message response, which is then returned as the result of the function.

Because the modified image is a PNG with a transparent background, you must send its URL back to the user in the message text instead of attaching the image as media. This is necessary because Twilio converts PNG files to JPG by default, which would cause a loss of transparency.
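Building that URL can be sketched as follows. The helper name buildImageUrl() is hypothetical, and the sketch assumes the images directory is served from the root of the server, so a file saved as bg-removed.png is reachable directly under the base URL.

```javascript
// Hypothetical helper: joins the public base URL and a file name.
// Assumes static files are served from the root path of the server.
function buildImageUrl(baseUrl, fileName) {
  const base = baseUrl.endsWith("/") ? baseUrl : `${baseUrl}/`;
  // encodeURIComponent keeps unusual characters in file names from
  // breaking the URL.
  return new URL(encodeURIComponent(fileName), base).toString();
}
```

For example, calling it with the value of NGROK_BASE_URL and "bg-removed.png" yields a link the user can open in a browser to download the transparent PNG.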

Go back to your preferred WhatsApp client, send a greeting message to the chatbot, send the image you want to edit, and then send another message asking the chatbot to remove the background.

Implementing the background replacement feature

In this section, you will extend the functionality of your chatbot by adding a background replacement function to the AI manager. You will also update the /incomingMessage endpoint in your server to handle image replacement requests.

Go back to the AIManager.js file and add the following code below the removeBackground() function:

The code defines a function named replaceBackground, responsible for replacing an image background by overlaying the image foreground on top of another background image.

The code first loads the background image (base image) from the provided URL and initializes a Sharp instance with the image's width, height, and channels properties. It also loads the foreground image (overlay image), which is stored in the images directory under the name bg-removed.png, and retrieves its width and height.

The background image is then resized to match the dimensions of the foreground image using the resize method with the `fit: sharp.fit.fill` option, which stretches the base image to exactly those dimensions, ignoring its aspect ratio, so that it fully covers the overlay image.
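The effect of a fill resize can be illustrated with a little arithmetic. This sketch only computes the scale factors involved and does not call Sharp; the helper names fillScale() and isDistorted() are hypothetical.

```javascript
// Hypothetical helper: scale factors applied by a fill-style resize,
// where width and height are scaled independently.
function fillScale(source, target) {
  return {
    x: target.width / source.width,
    y: target.height / source.height,
  };
}

// The image is stretched unevenly when the two factors differ.
function isDistorted(source, target) {
  const scale = fillScale(source, target);
  return Math.abs(scale.x - scale.y) > 1e-9;
}
```

If the background and foreground have different aspect ratios, the background will look stretched, which is worth keeping in mind when choosing a background image.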

Add the following code below the line where the code resizes the background image:

The added code first converts the foreground image to a buffer.

Next, it composites the overlay foreground onto the background image using the composite() method with the gravity: sharp.gravity.centre option to center the overlay.

Finally, the resulting image is saved to a file named bg-replaced.png in the images directory.
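Conceptually, compositing a foreground with transparency over a background blends each pixel according to the foreground's alpha value. The sketch below shows that idea in plain JavaScript for images of equal size. It is an illustration of "over" blending under that assumption, not Sharp's implementation, and the helper name compositeOver() is hypothetical.

```javascript
// Hypothetical helper: blends RGBA foreground pixels over RGB background
// pixels of the same dimensions and returns RGB data.
function compositeOver(foreground, background, pixelCount) {
  const out = new Uint8ClampedArray(pixelCount * 3);
  for (let i = 0; i < pixelCount; i++) {
    const alpha = foreground[i * 4 + 3] / 255; // 0 = transparent, 1 = opaque
    for (let c = 0; c < 3; c++) {
      const fg = foreground[i * 4 + c];
      const bg = background[i * 3 + c];
      out[i * 3 + c] = Math.round(fg * alpha + bg * (1 - alpha));
    }
  }
  return out;
}
```

Pixels where the mask removed the background (alpha 0) show the new background, fully opaque pixels keep the foreground, and soft edges are a mix of both.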

Go back to the server.js file and import the replaceBackground() function:

Now, navigate to the /incomingMessage endpoint and replace the code in the else block with the following:

In this updated else block, which is triggered when the request contains media, the code begins by setting a message based on the chatbot's current intent.

If the intent is not replace, the code informs the user that the image has been received and prompts them for further action.

If the intent is replace, the code informs the user that the background image has been received and initiates the background replacement process by calling the replaceBackground() function and passing the media URL extracted from the request body as an argument. After the background is replaced, the code sends a message confirming the replacement and returns an HTTP status code of 200.

Go back to your chatbot conversation, and send a message to the chatbot asking it to replace the background of the previously edited image. When the chatbot prompts you to send a new background image, provide the image you wish to use.

Conclusion

In this tutorial, you've learned how to create an AI-powered WhatsApp chatbot capable of removing and replacing backgrounds in images. You've used the Twilio Programmable Messaging API for message handling, the Transformers.js library for classifying intents and removing backgrounds using pre-trained models, and the Sharp module for image manipulation.
