A Proof of Concept to Analyze and Process Inbound MMS from Twilio using OpenAI and AWS

Let me get this out of the way, first: no, this is not a blog post about chatbots! Chatbots are great, but let’s get visual – what if customers could send in photos from their mobile devices instead of typing out text? The old adage that a “picture is worth a thousand words” still rings true, and new AI capabilities promise to supercharge how customers interact with businesses.

New AI models such as OpenAI’s GPT-4 with Vision can take images as input. The capability is still emerging, but it is clearly powerful and has many potential business applications.

In this blog post, we will spin up a proof of concept using Twilio, OpenAI, and AWS. Twilio’s Messaging platform with MMS is the natural choice to enable your customers to interact with images. The OpenAI Vision model is exciting and gives your business the ability to review, classify, and understand images programmatically. AWS provides the infrastructure to coordinate events and data.

This blog post is split into two parts:

- Multiple examples of how businesses could use this capability.

- Instructions to configure and deploy this proof of concept.

The first part is the more interesting of the two, so we will start there to get your gears spinning on how your business can programmatically understand customer images with computer vision.

Part 1: How can businesses use this capability with Twilio?

This proof of concept is straightforward to use. Just take any MMS-enabled device and send images to your Twilio number. You can include a keyword along with your image to trigger different kinds of analysis.

For example, I can send an image of a damaged automobile along with the word “insurance” and that would trigger a response back with an assessment of the damage. Or, imagine a customer sending a screenshot of an application error and receiving a response telling them how to fix it. Or, perhaps, a customer could send a picture of a damaged product to kick off a return process. Using images to trigger helpful solutions could resolve customer inquiries much more quickly than we can today.

While you are going through the examples, be sure to remember that the results from the AI image analysis can be handled programmatically and trigger additional events (actions) and replies based on the image and the specific state of the customer.

Learning by video can be effective. Please feel free to reference this video at any part of this exercise:

Picture and keyword examples

In the next section, I will show you some example uses for the solution we’ll build in this post. In each, I’ll include the keyword for context, explain the scenario, show the prompt to OpenAI, and show the response I received while testing.

Keyword: screenshot

Imagine you are stuck in a software application. Instead of calling or chatting with a bot or an agent, you start by sending in a screenshot. Analysis of that screenshot could determine where in the application you are stuck and pull out any errors or warnings. That understanding would give a support agent valuable context to begin a conversation and, in some cases, might trigger a fix that solves the issue instantly without any human agent involvement.

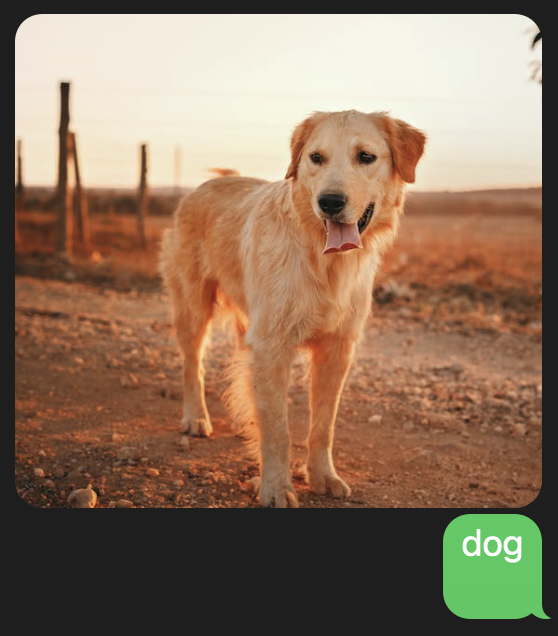

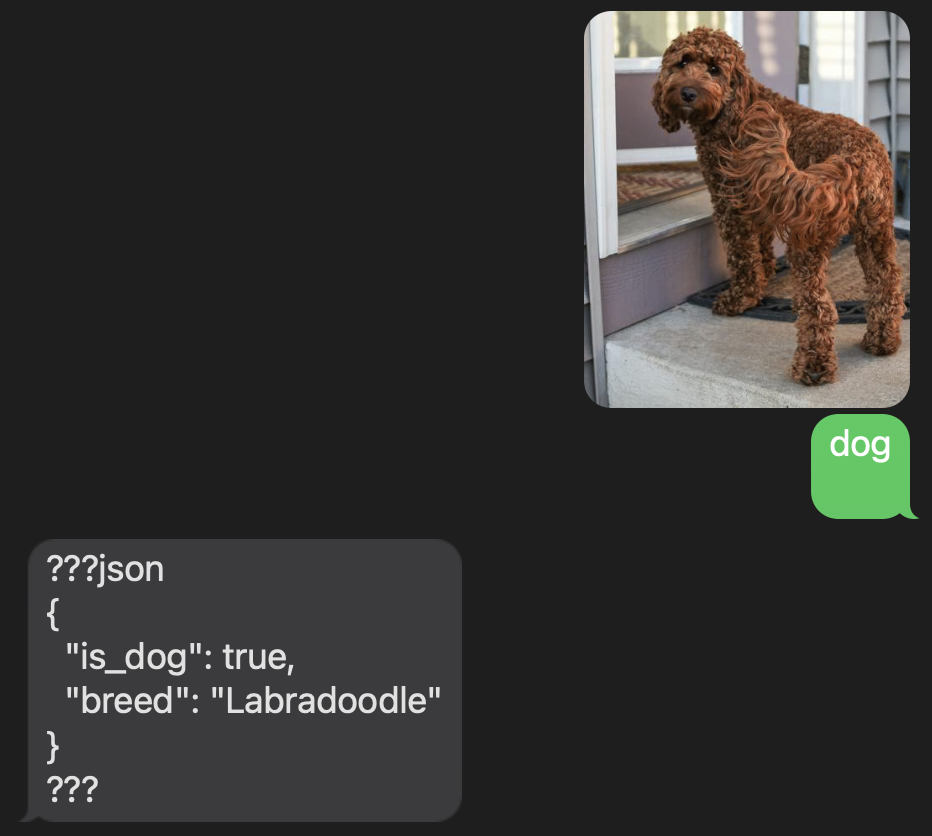

Keyword: dog

Next, let’s look at a simple analysis: send in a photo and determine if there is a dog in the photo. If there is, identify the breed. Return the response in JSON format so a developer can use your API to identify dogs and dog breeds. Throughout all of these examples, imagine the response from OpenAI being handled programmatically and triggering additional steps in your business systems.
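To handle a response like this programmatically, the reply text has to be parsed before it can drive any further steps. Here is a minimal sketch of that idea. It assumes the model returns the is_dog and breed properties named in the prompt, and that the reply may arrive either as bare JSON or wrapped in a Markdown code fence. The helper names parseModelJson and describeDogResult are hypothetical and are not taken from the repo.

```javascript
// Hypothetical helpers, not taken from the repo.
// Assumes the reply is either bare JSON or JSON wrapped in a Markdown code fence.
const FENCE = "`".repeat(3);
const FENCED_JSON = new RegExp(FENCE + "(?:json)?\\s*([\\s\\S]*?)" + FENCE);

// Pull a JSON object out of a model reply, or return null if none can be parsed.
function parseModelJson(replyText) {
  const text = String(replyText || "");
  const match = FENCED_JSON.exec(text);
  const candidate = (match ? match[1] : text).trim();
  try {
    return JSON.parse(candidate);
  } catch (err) {
    return null; // Unparseable reply: let the caller decide what to do.
  }
}

// Branch on the parsed result, using the property names from the prompt.
function describeDogResult(replyText) {
  const result = parseModelJson(replyText);
  if (!result || typeof result.is_dog !== "boolean") return "unknown";
  return result.is_dog ? "dog:" + (result.breed || "unspecified") : "no-dog";
}
```

Returning a distinct "unknown" value keeps free-form replies from being mistaken for a negative answer, which matters once the result starts triggering business logic.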

Keyword: people

How could this be used? For numerous reasons, it could be helpful to know whether an image contains people – or, really, anything else. Depending on the answer, your system can process accordingly. For example, if there are people in the photo, do not process it further. Conversely, your business rules may require “something” to be present in order to proceed – the AI model can make this determination.

Going further, your business may want to screen or prevent images with certain objects or characteristics from additional processing. Your prompt could check for these forbidden objects and then PASS or FAIL.

Keyword: retail

How could this be used? The applications in retail are numerous and span from returns, to sales, to upsells, to brand awareness and more. To start, what could you do for a customer after identifying and classifying an item they send in?

Keyword: recommend

How could this be used? OK, we saw a basic retail prompt. What about a more advanced scenario, being able to recommend products? Remember, a production implementation would include a trained model to recommend from a specific catalog! What if you could recommend something based on a color or a theme in the submitted image?

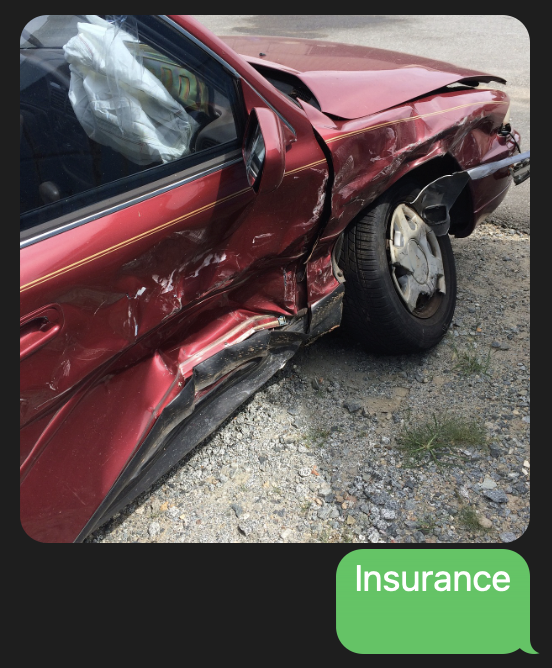

Keyword: insurance

How could this be used? Shifting gears, could computer vision be helpful in analyzing insurance claims? How about starting a claims interaction by sending in photos of the damage?

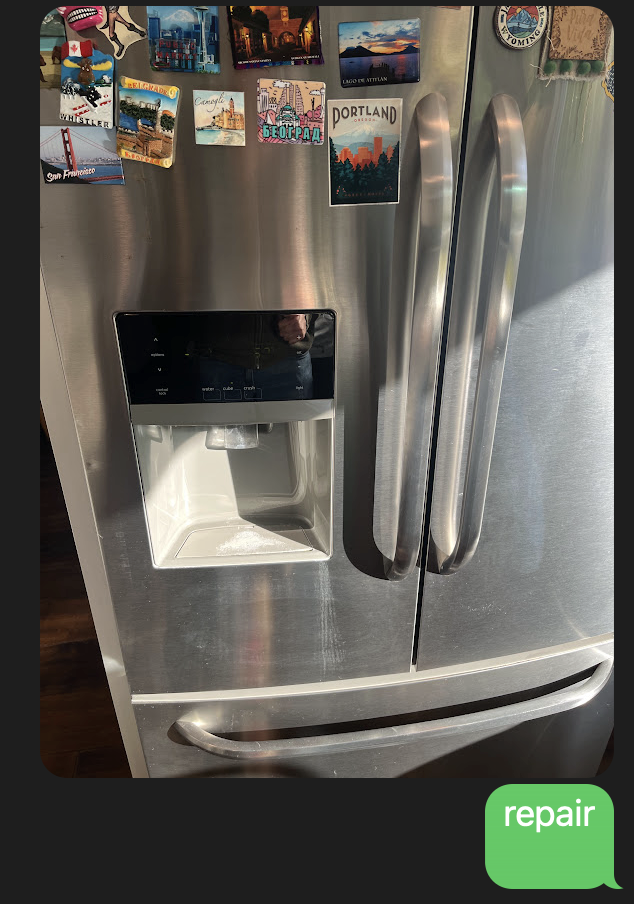

Keyword: repair

How could this be used? How about household repairs? Sending in an image might enable self-help, quickly route requests to a specialist, or could potentially identify a dangerous situation.

Keyword: tool

How could this be used? Ever get stuck trying to assemble or fix something?

Keyword: returns

How could this be used? Handling returns and complaints about consumer products is another area with huge potential. Submitted images could trigger a response or actions which quickly address customer concerns while providing powerful feedback.

Keyword: ingredients

How could this be used? Enjoyed your meal? Want to know the ingredients?

Keyword: category

How could this be used? Lastly, how about categorizing the submitted image and providing a description?

Wow! Building this proof of concept and working with OpenAI’s Vision model has been eye-opening for me, and hopefully these examples have inspired some use cases for you. Using images submitted by your customers truly has tremendous potential to supercharge how you engage.

Go to part 2 to see how you can spin up this proof of concept application using Twilio, OpenAI, and AWS!

Part 2: Configure and Deploy

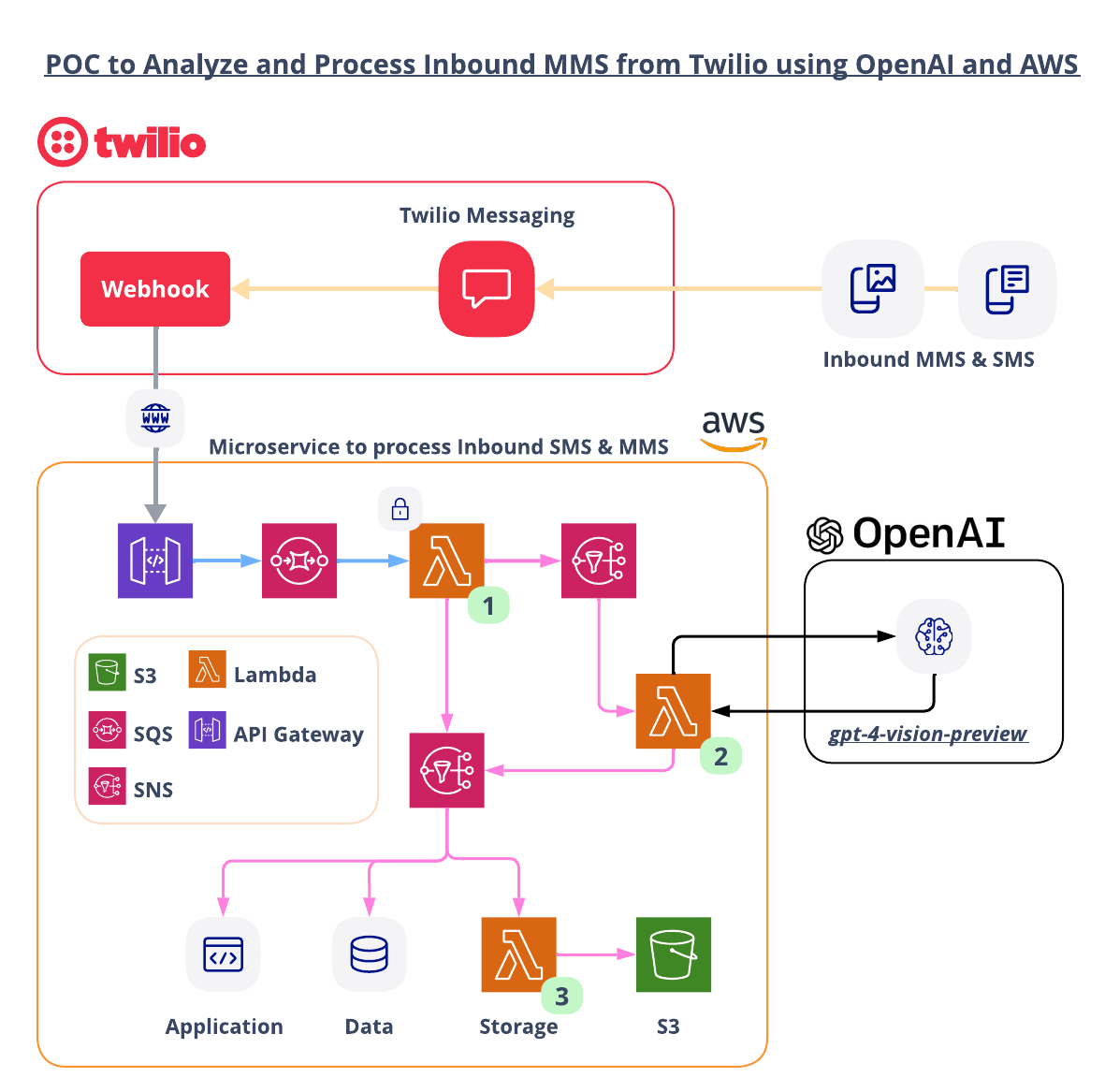

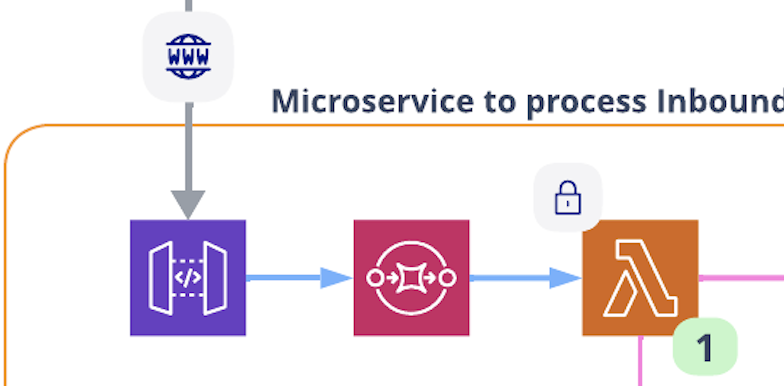

Here is a blueprint of the proof of concept with details of each section below:

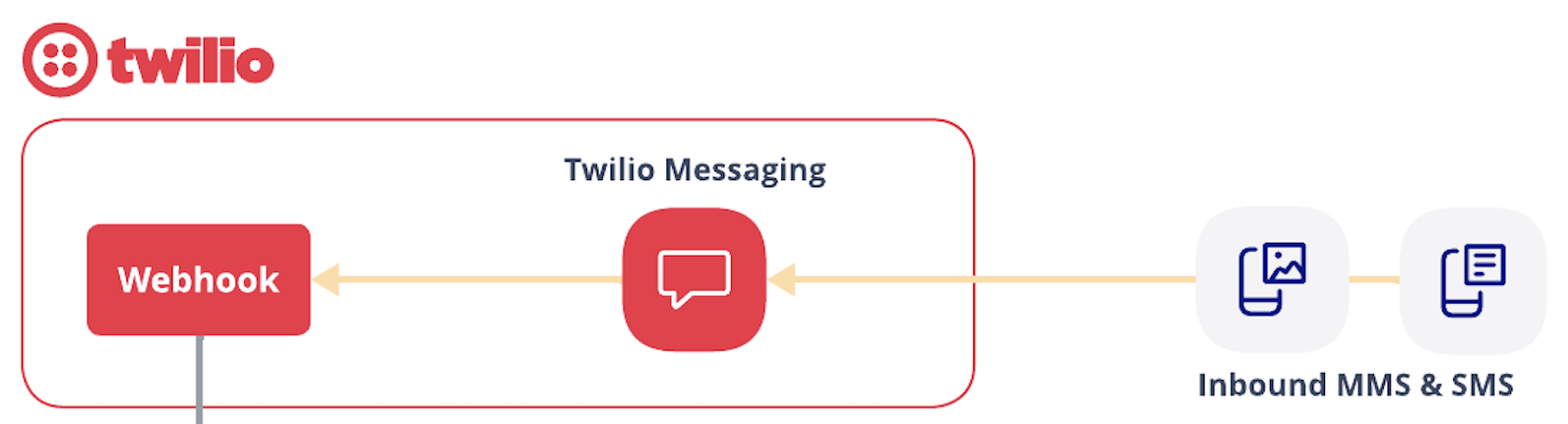

Twilio handles inbound MMS and SMS messages (WhatsApp messages are handled the same way), and routes them to AWS. AWS handles the event-based system which includes calling OpenAI’s gpt-4-vision-preview model for image analysis.

1. Inbound MMS and SMS

As a Leader in CPaaS according to Gartner’s 2023 Magic Quadrant, Twilio is an excellent choice for your enterprise messaging. Your Messaging Senders (Long Codes, Toll Free Numbers, Short Codes, WhatsApp) in Twilio can be configured to route inbound messages to a Webhook of your choice. Once a webhook is set, messages will be posted to your endpoint in real time.

2. Queue, Security Check and Initial Processing

AWS API Gateway will allow you to create an endpoint to receive webhooks from Twilio. To handle spiky demand, this proof of concept has a direct integration between API Gateway and AWS SQS (Simple Queue Service). In addition to protecting your system from demand spikes, a queue could also be used to control how quickly you send images for analysis.

An AWS Lambda function will pull messages from the queue at a set rate. This Lambda will first check to make sure that the message actually came from your Twilio Account. Next, the function sends the entire webhook message to an AWS SNS topic for additional processing, while also pulling out media files and sending each one individually to a different SNS topic for analysis.
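The security check and media extraction described above can be sketched roughly as follows. This is a sketch under assumptions, not the repo’s code. It relies on Twilio’s documented request signing for form-encoded webhooks (an HMAC-SHA1 of the request URL plus the sorted POST parameters, keyed with your Auth Token and compared to the X-Twilio-Signature header) and on the NumMedia, MediaUrl0, and MediaContentType0 style webhook parameters. It also assumes the signature header and the original URL were carried through the queue with the message. The function names are hypothetical, and the repo may well use Twilio’s helper library for the same check.

```javascript
const nodeCrypto = require("crypto");

// Compute the signature Twilio would send for a form-encoded webhook:
// HMAC-SHA1 over the URL followed by each parameter name and value
// (sorted by name, no delimiters), Base64-encoded.
function expectedTwilioSignature(authToken, url, params) {
  const data = Object.keys(params)
    .sort()
    .reduce((acc, key) => acc + key + params[key], url);
  return nodeCrypto
    .createHmac("sha1", authToken)
    .update(Buffer.from(data, "utf-8"))
    .digest("base64");
}

// Compare the received header against the expected value in constant time.
function isValidTwilioRequest(authToken, signatureHeader, url, params) {
  const expected = Buffer.from(expectedTwilioSignature(authToken, url, params));
  const received = Buffer.from(signatureHeader || "");
  return (
    expected.length === received.length &&
    nodeCrypto.timingSafeEqual(expected, received)
  );
}

// Collect one entry per attached media file from the webhook parameters.
function extractMedia(params) {
  const count = parseInt(params.NumMedia || "0", 10);
  const media = [];
  for (let i = 0; i < count; i++) {
    media.push({
      url: params["MediaUrl" + i],
      contentType: params["MediaContentType" + i],
    });
  }
  return media;
}
```

Each entry returned by extractMedia could then be published as its own message, which matches the one-image-per-analysis flow described above.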

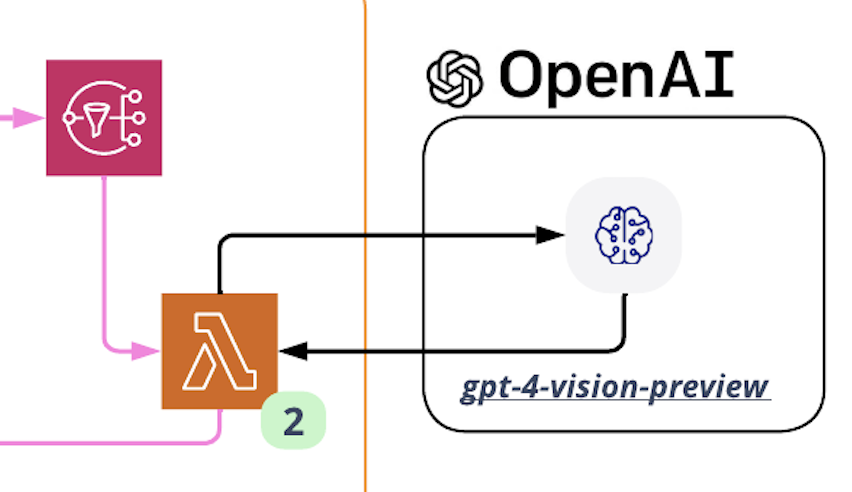

The Lambda marked with #2 is responsible for formatting the prompt that is sent along with the image. This proof of concept has several examples, but you will certainly want to engineer your own prompts to meet your needs. This Lambda uses a Layer to hold the OpenAI libraries.
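To make the prompt formatting concrete, here is a rough sketch of a request that pairs a text prompt with an image URL. The repo uses the OpenAI libraries from a Lambda Layer. This sketch swaps in a plain HTTPS call to the Chat Completions endpoint so that it stays self-contained. The function names, the max_tokens value of 300, and the use of the global fetch (Node 18 or later) are assumptions for illustration, and the image URL is assumed to be reachable by OpenAI.

```javascript
// Build a Chat Completions request body that pairs a text prompt with an image URL.
// The maxTokens default is an illustrative assumption, not a value from the repo.
function buildVisionRequest(prompt, imageUrl, maxTokens = 300) {
  return {
    model: "gpt-4-vision-preview",
    max_tokens: maxTokens,
    messages: [
      {
        role: "user",
        content: [
          { type: "text", text: prompt },
          { type: "image_url", image_url: { url: imageUrl } },
        ],
      },
    ],
  };
}

// Send the request and return the text of the first choice.
// Assumes Node 18+ (global fetch) and an API key supplied by the caller.
async function analyzeImage(apiKey, prompt, imageUrl) {
  const response = await fetch("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      Authorization: "Bearer " + apiKey,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(buildVisionRequest(prompt, imageUrl)),
  });
  if (!response.ok) {
    throw new Error("OpenAI request failed with status " + response.status);
  }
  const data = await response.json();
  return data.choices[0].message.content;
}
```

Keeping the payload builder separate from the network call makes it easy to unit test your prompts without spending tokens.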

Architects may protest that this Lambda function will have to wait for a response from OpenAI, and that could be wasteful. I completely agree, but this is just a proof of concept. A production solution will likely want to use a different compute solution to call OpenAI. AWS Bedrock could be an option.

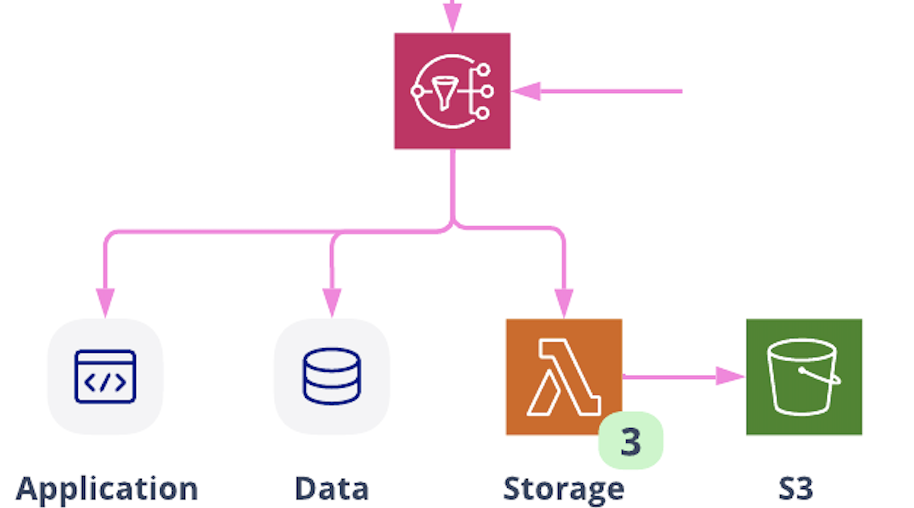

4. Additional Processing

This proof of concept has two processors. The Lambda marked with #3 reads in the message and then saves it to an S3 bucket. An additional Lambda reads in responses from OpenAI and calls the Twilio API to send an SMS containing the OpenAI response back to the “customer”. You can think of that Lambda as occupying the “Application” box above.
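The reply step can be sketched as a single call to Twilio’s Messages resource. This is an illustration under assumptions rather than the repo’s implementation, which may use Twilio’s helper library instead of a raw HTTPS request. The function names are hypothetical, and the global fetch requires Node 18 or later.

```javascript
// Build the HTTP request for Twilio's Messages resource.
// Credentials go in a Basic auth header and the message fields are form-encoded.
function buildSmsRequest(accountSid, authToken, from, to, body) {
  const url =
    "https://api.twilio.com/2010-04-01/Accounts/" + accountSid + "/Messages.json";
  const form = new URLSearchParams({ From: from, To: to, Body: body });
  const auth = Buffer.from(accountSid + ":" + authToken).toString("base64");
  return {
    url,
    options: {
      method: "POST",
      headers: {
        Authorization: "Basic " + auth,
        "Content-Type": "application/x-www-form-urlencoded",
      },
      body: form.toString(),
    },
  };
}

// Send the OpenAI result back to the sender of the original message.
// Assumes Node 18+ for the global fetch.
async function sendSmsReply(accountSid, authToken, from, to, body) {
  const { url, options } = buildSmsRequest(accountSid, authToken, from, to, body);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error("Twilio API returned status " + response.status);
  }
  return response.json();
}
```

In this flow, the From value would be your Twilio number and the To value would be the sender of the inbound MMS.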

For real use cases, you will tie in your own applications and data systems and build interactivity. Images sent into this system could trigger additional events based on the understanding of those images which, in turn, trigger other system events.

Prerequisites

This is not a beginner-level build! You need to have some knowledge of Twilio, AWS, and OpenAI to complete this tutorial.

- Twilio Account. If you don’t yet have one, you can sign up for a free account here.

- An SMS- and MMS-enabled phone number in your Twilio Account. Note that different countries have different registration requirements for phone numbers. You’ll need to fulfill the requirements for the number you purchase before continuing with the demo.

- OpenAI Account and an API Key with access to the gpt-4-vision-preview model, or some model (or future model after this post is published) that has the ability to analyze images.

- AWS Account with permissions to provision Lambdas, step functions, S3 buckets, IAM Roles & Policies, an SQS queue, SNS topics, and a custom EventBus. You can sign up for an account here.

- AWS SAM CLI installed

Let’s Build it!

Here are the basic steps of our serverless multichannel build today.

- Download the code and enter your API Keys

- Deploy the stack

- Set the Twilio Webhook

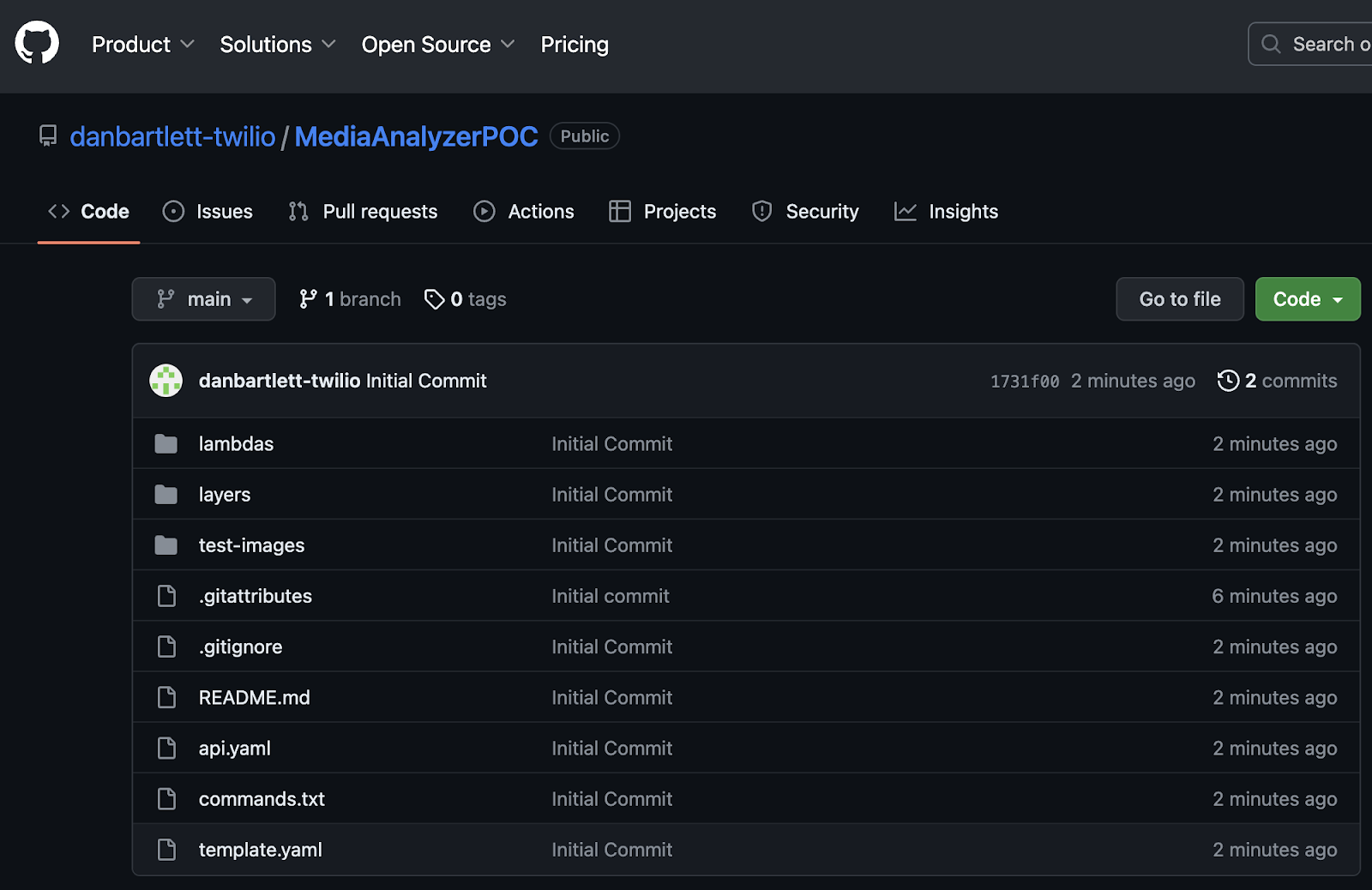

1. Download the Code for this Application

Download the code from this repo, and then open up the folder in your preferred development environment.

The repo contains all you need to spin up an AWS CloudFormation stack.

First, we need to install a couple of node packages. From the parent directory, cd into the two directories listed below and install the packages. Here are the commands:

Next, open up the file template.yaml in the parent directory in your favorite code editor. This yaml file contains the instructions needed to provision the AWS resources.

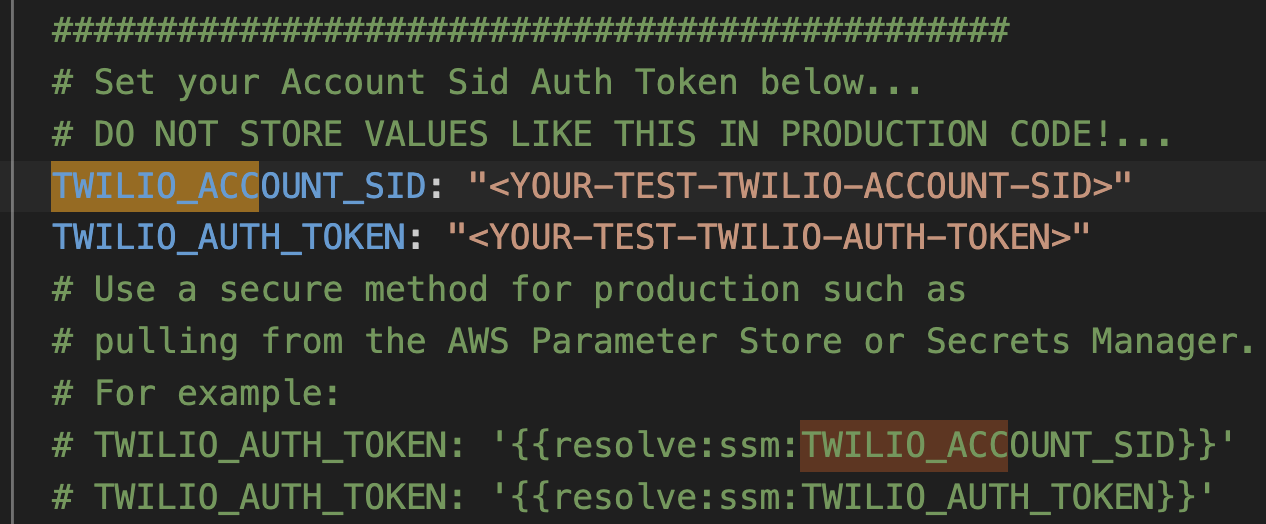

For this step you will need your OpenAI API Key, your Twilio Account SID and your Twilio Auth Token.

Use the FIND function and search for TWILIO_ACCOUNT_SID and replace the placeholder value with your value.

Use the FIND function and search for TWILIO_AUTH_TOKEN and replace the placeholder value with your value.

Use the FIND function and search for OPENAI_API_KEY and replace the placeholder value with your value.

Here is an example of what it will look like in template.yaml for the TWILIO_ACCOUNT_SID:

2. Deploy the Stack

With those settings in place, we are ready to deploy! From a terminal window, go into the parent directory and run:

This command goes through the yaml file template.yaml and prepares the stack to be deployed.

In order to deploy the SAM application, you need to be sure that you have the proper AWS credentials configured. Having the AWS CLI also installed makes it easier, but here are some instructions.

Once you have authenticated into your AWS account, you can run:

This will start an interactive command prompt session to set basic configurations and then deploy all of your resources via a stack in CloudFormation. Here are the answers to enter after running that command (except, substitute your AWS Region of choice):

After answering the last questions, SAM will create a changeset that lists all of the resources that will be deployed. Answer “y” to the last question to have AWS actually start to create the resources.

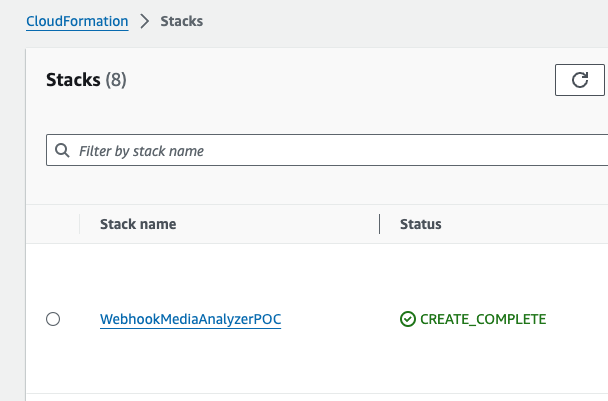

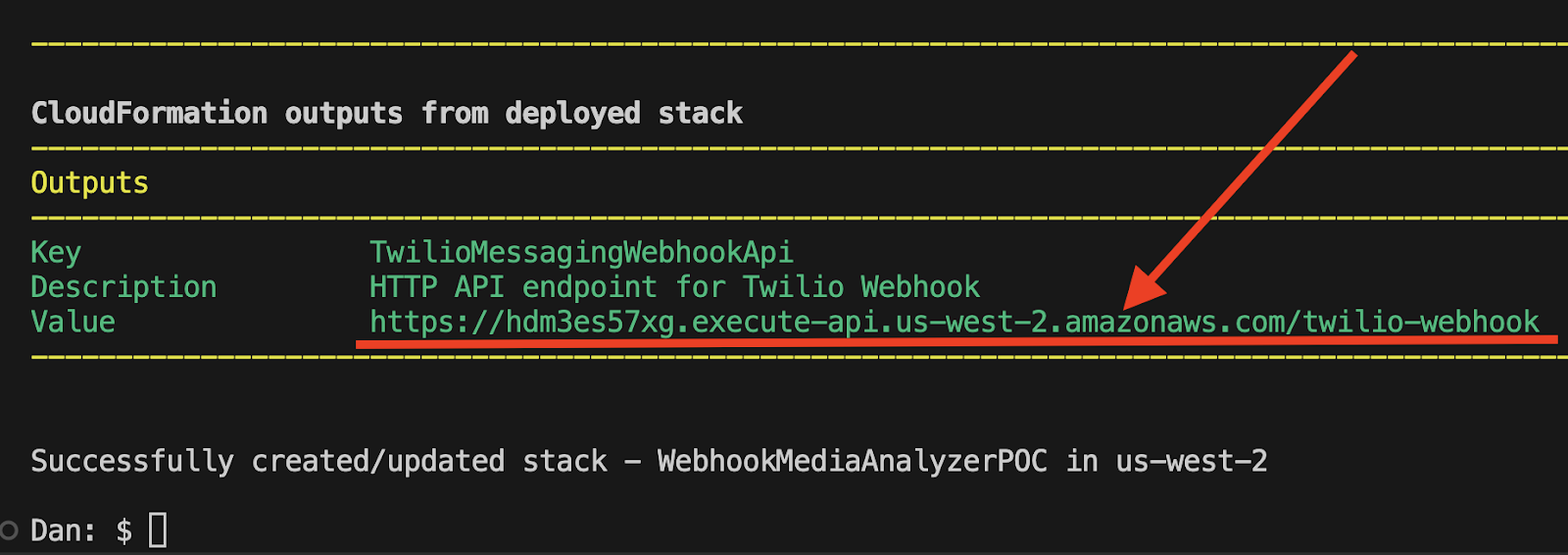

The SAM command prompt will let you know when it has finished deploying all of the resources. You can then go to CloudFormation in your AWS Console and browse through the new stack you just created. The Lambdas, Lambda Layers, S3 buckets, IAM Roles, SQS queues, and SNS topics are all created automatically. (IaC – Infrastructure as Code – is awesome!)

Also note that the first time you run the deploy command, SAM will create a samconfig.toml file to save your answers for subsequent deployments. After you deploy the first time, you can drop the --guided parameter of sam deploy for future deployments.

Back in your terminal window that you used to deploy this stack, the last output will contain the endpoint that you will need to enter in your Twilio Console to direct the webhook to the system you just spun up. Copy the endpoint in your terminal window as shown in the example below:

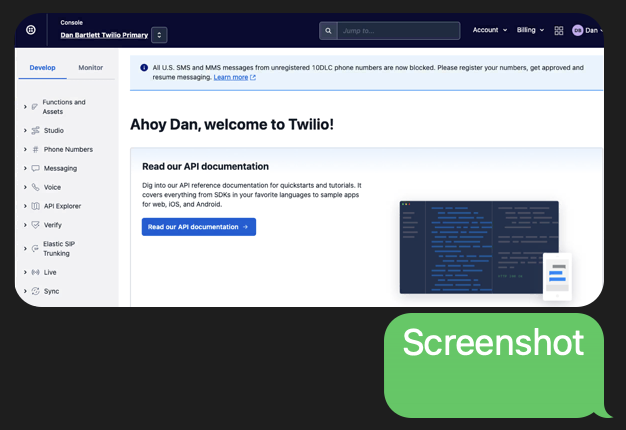

3. Set the Twilio Webhook

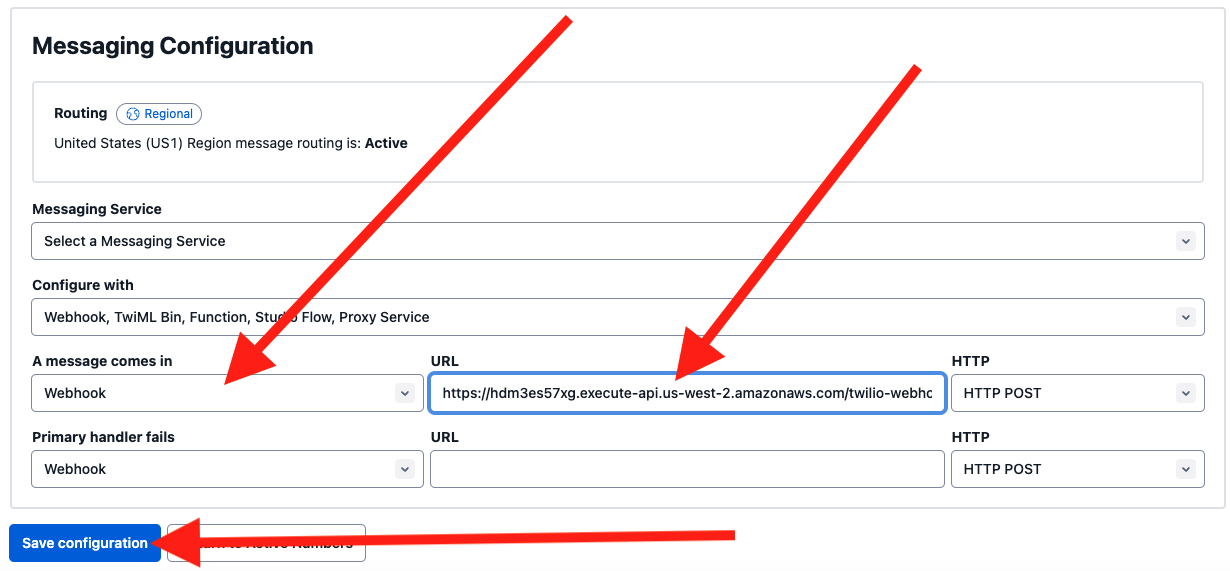

Now you just need to assign that endpoint to one of your Twilio Phone Numbers. From the Twilio Console, go to Phone Numbers >> Active Numbers and select the number you want to use. On the Configure tab for that phone number, scroll down to Messaging Configuration.

For A message comes in, select Webhook and paste in your endpoint from above into the URL field. Save your changes, and you are good to go!

To test it out, you can send any image without text to that phone number and it will use the default prompt, which will return a caption for the image.

If I send in the Twilio logo, it should look something like this:

Or, I can send in my favorite photo of my dog and get this:

Things get more interesting when you send in text along with the images as that will initiate more complex prompts. The prompts I’ve added in the demo were covered thoroughly in part 1, so scroll back up to review the sample prompts with images!

To recap, just send a text prompt along with your image. For example:

…and that will return:

Here are the available prompts:

- dog => Is there a dog in this image? If yes, determine the breed. Give your response in JSON where the is_dog variable declares whether a dog is present and the breed variable is your determination of breed.

- screenshot => Is this image a screenshot? If yes, is there a warning message? Respond with a yes or no regarding if there are warning messages. Summarize the messages in less than 15 words.

- category => Can this image be categorized as a photograph, a cartoon, a drawing, or a screenshot? Give your response in JSON where the category goes in the category variable and then add a description variable and give a concise description of the image. Respond with a JSON object with category and description properties.

- text => Is there any text in this image? If yes, what are the first few words?

- insurance => Does this image show damage to a vehicle? If yes, where is the damage and what type of vehicle?

- retail => Are the clothing items in this image from the Men’s or Women's Department? What type of clothing is it?

- recommend => Please recommend some products that go with the product in this image.

- tool => What type of tool should I use for this screw or bolt?

- repair => Is there any appliance in this image and if yes, what type of appliance is in the image? Is there any damage to the appliance?

- people => Are there people in this image? If yes, how many?

- ingredients => Please identify the ingredients in this meal.

- returns => What type of product is in the image? Does there appear to be any damage to the product in the image? Give a concise response.

- default => Write a caption for this image that is less than 15 words.
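The keyword-to-prompt lookup can be pictured as a simple map with a fallback. This is a minimal sketch, not the repo’s code. It assumes the message body consists only of the keyword and that matching is case-insensitive. Only a few of the prompts listed above are included for brevity, and the names PROMPTS and selectPrompt are hypothetical.

```javascript
// A subset of the prompts listed above, keyed by keyword.
const PROMPTS = {
  people: "Are there people in this image? If yes, how many?",
  ingredients: "Please identify the ingredients in this meal.",
  tool: "What type of tool should I use for this screw or bolt?",
  default: "Write a caption for this image that is less than 15 words.",
};

// Pick the prompt for an inbound message body, falling back to the default
// prompt when the body is empty or is not a known keyword.
function selectPrompt(messageBody) {
  const keyword = String(messageBody || "").trim().toLowerCase();
  return Object.prototype.hasOwnProperty.call(PROMPTS, keyword)
    ? PROMPTS[keyword]
    : PROMPTS.default;
}
```

Using hasOwnProperty for the lookup means an unexpected body such as "constructor" falls through to the default prompt rather than matching an inherited object property.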

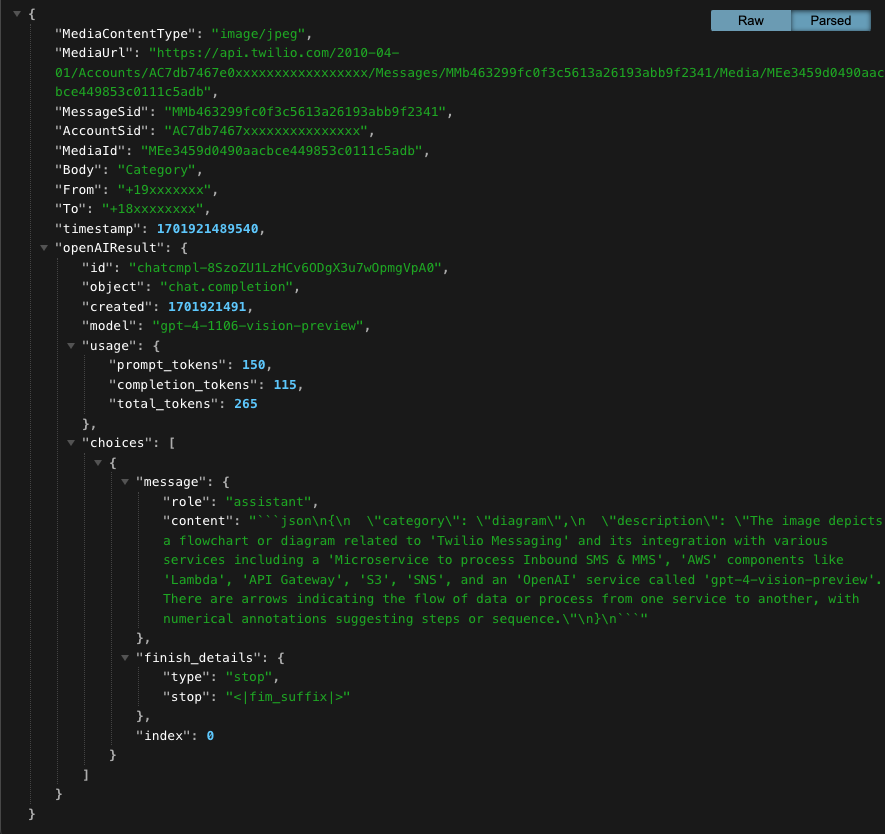

The most user-friendly way to engage with this proof of concept is the request and response flow, where the system receives an MMS message and returns an SMS reply with the results from OpenAI. It is important to point out, though, that all of the JSON objects are stored in an S3 bucket. As you try this out in your own environment, be sure to view the JSON objects in the S3 bucket so that you can visualize how to consume these events in your system and turn them into actionable events and key data points for your customer personas.

Here is a sample JSON object:

Conclusion

In this post, you learned how to spin up an AI image analysis-and-understanding proof of concept using Twilio Messaging, OpenAI, and AWS. The goal of this blog post was to get you excited about the possibilities of using images, AI, and Twilio Communications Channels. This post focused on MMS, but all of this could be used with Twilio’s WhatsApp Business API or email using Twilio SendGrid (here is another blog post about SendGrid’s Inbound Parse).

The examples in this post cover many use cases and industries, and hopefully they have sparked ideas for your own business. This proof of concept should be straightforward to spin up in your own AWS environment, so your company can start experimenting with the computer vision capabilities of AI. You can then turn those results into events that build into delightful, personalized experiences based on images instead of text.

I want to emphasize that last point. This proof of concept shows an image being submitted via MMS and a response being returned via SMS. The analysis of these customer-submitted images can be turned into a deeper understanding of what your customer actually wants. The amount of information in an image dwarfs what your customers can convey in a text chat conversation. With an increase in data from your customers, your organization can reply with much more considered responses and, ultimately, superior engagement.

Note that GPT Vision is currently in preview and has limitations and costs. Be sure to do your due diligence to determine the best model to meet your needs.

Exciting times! Happy building!

Dan Bartlett has been building software applications since the first dotcom wave. The core principles from those days remain the same but these days you can build cooler things faster. He can be reached at dbartlett [at] twilio.com.
