AI Agent for Sending Emails Through WhatsApp Voice Notes Using Twilio, Sendgrid, .NET


Imagine leaving a voice note on WhatsApp and having it transcribed and delivered as a well-structured email, with no typing required. Voice messages capture tone, intent, and urgency in a way that text alone often fails to convey. What if you could turn them into professional emails effortlessly?

By combining Twilio's WhatsApp API, .NET, AssemblyAI for speech recognition, OpenAI for text enhancement, and SendGrid for email delivery, this system converts WhatsApp voice notes into text, refines them, and sends them by email. It bridges the gap between casual voice messaging and formal business communication. Whether for customer support, internal updates, or professional correspondence, it saves typing time and makes email more accessible.

Let's dive into the technical bit.

Prerequisites

To follow this tutorial, you will need the following.

Install the following:

- .NET SDK (includes the .NET CLI)

- Visual Studio Code

Sign up for the following services to obtain the credentials your application needs:

- Twilio Free Account (to handle WhatsApp messaging)

- OpenAI Account (for email enhancement; the ContentService in this tutorial uses the o3-mini model)

- SendGrid Account (for email delivery)

- AssemblyAI Account (for transcription)

- Ngrok (to expose your local URL for webhooks)

You should also have basic familiarity with C#.

With these in place, let's get started.

Create the .NET application

In this section, you'll set up your project structure using the .NET CLI. This structure forms the foundation of your application, separating concerns between the API and the core business logic.

Set Up the Project Structure

Open Visual Studio Code and open the integrated terminal. Run the following commands to create a main directory, initialize a solution, and set up two projects: one for your API and the other for your core business logic.

This set of commands initializes your solution with two projects. The API project handles HTTP requests and controllers, while the Core project will contain models and interfaces used across the application. The required packages are installed to support OpenAI, SendGrid, AssemblyAI, and Entity Framework for data persistence.

With the project structure ready, move on to create your core models and interfaces.

Create Core Models and Interfaces

In the VoiceToEmail.Core folder, create a new Models folder and add the file VoiceMessage.cs. This model defines properties such as the sender and recipient email addresses, audio URL, transcribed text, enhanced content, timestamp, and status, and it is used to track each voice message's journey from transcription to email delivery. Update it with the code below.

This model encapsulates all the necessary data for a voice message, including unique identifiers, timestamps, and various stages of processing, that is, transcription and content enhancement.
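For orientation, here is a minimal sketch of what VoiceMessage.cs might contain, assuming plain auto-properties. The property names (SenderEmail, AudioUrl, Status, and so on) and the default status value are inferred from the description above and are hypothetical; the tutorial's own file may differ.

```csharp
using System;

// Hypothetical sketch of the VoiceMessage model (namespace omitted).
// Property names are illustrative, not the tutorial's original code.
public class VoiceMessage
{
    // Unique identifier for the voice message.
    public Guid Id { get; set; } = Guid.NewGuid();

    public string SenderEmail { get; set; } = string.Empty;
    public string RecipientEmail { get; set; } = string.Empty;

    // Location of the audio file, for example a Twilio media URL.
    public string AudioUrl { get; set; } = string.Empty;

    // Filled in after transcription and AI enhancement, respectively.
    public string? TranscribedText { get; set; }
    public string? EnhancedContent { get; set; }

    public DateTime CreatedAt { get; set; } = DateTime.UtcNow;

    // Processing stage, for example "Received", "Transcribed", or "Sent".
    public string Status { get; set; } = "Received";
}
```

Nullable TranscribedText and EnhancedContent make it explicit which processing stages have not run yet.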

Next, create a new file in the same Models folder called WhatsAppMessage.cs to define the WhatsApp message model. Update it with the code below:

The WhatsAppMessage class represents an incoming WhatsApp message, capturing essential details like the sender's phone number, message body, number of media attachments, and media URLs. The ConversationState class helps manage the conversation flow and track pending actions for each user interaction.
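A minimal sketch of these two classes follows, assuming the message fields mirror the parameters Twilio posts to a messaging webhook (From, To, Body, NumMedia, MediaUrl0 and onward). The ConversationState members, including PendingAction and PendingVoiceNoteUrl, are hypothetical names chosen for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch (namespace omitted). Property names mirror Twilio's
// incoming-message webhook fields: From, To, Body, NumMedia, MediaUrl0..N.
public class WhatsAppMessage
{
    public string From { get; set; } = string.Empty;   // e.g. "whatsapp:+15551234567"
    public string To { get; set; } = string.Empty;
    public string Body { get; set; } = string.Empty;
    public int NumMedia { get; set; }
    public List<string> MediaUrls { get; set; } = new();
}

// Tracks what the bot is waiting for from a given sender.
public class ConversationState
{
    public string PhoneNumber { get; set; } = string.Empty;

    // For example "AwaitingEmail" after a voice note arrives without an address.
    public string? PendingAction { get; set; }

    // Media URL of the voice note waiting for a recipient (hypothetical field).
    public string? PendingVoiceNoteUrl { get; set; }

    public DateTime LastUpdated { get; set; } = DateTime.UtcNow;
}
```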

Create Service Interfaces

Next, you will define the interfaces that outline the responsibilities of various services in your application. These include transcription, content enhancement, email sending, and handling WhatsApp messages. Still in the VoiceToEmail.Core directory, create an Interfaces folder to add service interfaces. Create a new file and name it ITranscriptionService.cs. Update it with the code below.

These interfaces decouple the implementation details from the rest of your codebase, allowing you to easily swap out service implementations if needed.
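The method names and parameters below come from the service descriptions later in this tutorial (TranscribeAudioAsync, EnhanceContentAsync, SendEmailAsync). The return types are assumptions, so treat this as a sketch of the contracts rather than the original file.

```csharp
using System.Threading.Tasks;

// Sketch of the service contracts (namespace omitted).
// Return types are assumptions; method names follow the tutorial text.
public interface ITranscriptionService
{
    // Returns the transcribed text for the given audio bytes.
    Task<string> TranscribeAudioAsync(byte[] audioData);
}

public interface IContentService
{
    // Returns the transcription rewritten as a professional email body.
    Task<string> EnhanceContentAsync(string transcribedText);
}

public interface IEmailService
{
    // Sends the content to a single recipient.
    Task SendEmailAsync(string to, string subject, string content);
}
```

Because controllers and other services depend only on these interfaces, a test can substitute a fake ITranscriptionService that returns canned text instead of calling AssemblyAI.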

Next, add another file named IWhatsAppService.cs and update it with the code below.

The IWhatsAppService interface defines how incoming WhatsApp messages will be processed. This abstraction lets you focus on handling the specifics of message parsing and response generation.
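A minimal sketch of this interface follows, assuming the handler returns the reply text that the controller later wraps in TwiML. The string return type is an assumption, and a trimmed-down WhatsAppMessage is repeated so the snippet compiles on its own.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Minimal stand-in for the WhatsAppMessage model so this sketch compiles alone.
public class WhatsAppMessage
{
    public string From { get; set; } = string.Empty;
    public string Body { get; set; } = string.Empty;
    public List<string> MediaUrls { get; set; } = new();
}

// Sketch: returns the reply text sent back to the WhatsApp user.
// The string return type is an assumption.
public interface IWhatsAppService
{
    Task<string> HandleIncomingMessageAsync(WhatsAppMessage message);
}
```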

Implement the Services

With your interfaces in place, it's time to implement the actual business logic. In this section, you'll create the service classes that interact with external APIs: AssemblyAI for voice transcription, OpenAI for refining the transcribed text into an email, and SendGrid for sending the emails.

WhatsAppService

In VoiceToEmail.API, create a Services folder to hold the service implementations. Start by creating the WhatsAppService.cs file. This service parses and processes incoming WhatsApp messages. Update it with the code below.

During initialization, the constructor retrieves Twilio credentials from the configuration and initializes the Twilio client. If the credentials are missing, an exception is thrown. It also sets up authentication headers for the HTTP client, allowing secure media downloads from Twilio.
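Twilio's REST API uses HTTP Basic authentication, with the Account SID as the username and the Auth Token as the password. The sketch below shows one way to build that header for the media-download HttpClient, assuming the credentials have already been read from configuration. The TwilioMediaAuth class name is hypothetical; in the real service, TwilioClient.Init(accountSid, authToken) from the Twilio package would also be called.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Hypothetical helper: builds the Basic auth header used to download
// media from Twilio with the account's SID and Auth Token.
public static class TwilioMediaAuth
{
    public static AuthenticationHeaderValue CreateBasicAuthHeader(string accountSid, string authToken)
    {
        // Fail fast, mirroring the constructor's check for missing credentials.
        if (string.IsNullOrWhiteSpace(accountSid) || string.IsNullOrWhiteSpace(authToken))
            throw new InvalidOperationException("Twilio credentials are not configured.");

        var raw = Encoding.ASCII.GetBytes($"{accountSid}:{authToken}");
        return new AuthenticationHeaderValue("Basic", Convert.ToBase64String(raw));
    }

    // Attaches the header to every request made by this HttpClient.
    public static void Configure(HttpClient client, string accountSid, string authToken) =>
        client.DefaultRequestHeaders.Authorization = CreateBasicAuthHeader(accountSid, authToken);
}
```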

The main method, HandleIncomingMessageAsync(), processes incoming WhatsApp messages. It first checks whether the user is expected to provide an email address; if so, the message is handled as an email input. If the message contains a voice note, the voice note is downloaded, transcribed, and processed. If neither condition is met, the service prompts the user to send a voice note or an email address.

If a voice note is detected, the service downloads the media from Twilio, transcribes the voice note, and attempts to extract an email address from the transcription. If an email address is found, the content is enhanced using IContentService before being sent to the detected email. If no email is found, the system stores the voice note and waits for the user to manually provide an email address. The conversation state is updated to ensure that when the email is received, the system knows which voice note to associate it with.

When a user provides an email address after sending a voice note, the service validates it using a regular expression. If the address is valid, the pending voice note is retrieved, transcribed, enhanced, and sent to that address. If no pending voice note exists, the user is informed and asked to resend it.
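The pending-voice-note behaviour can be sketched as a small store keyed by the sender's WhatsApp number. This is an illustrative assumption, not the tutorial's implementation: PendingVoiceNoteStore is a hypothetical name, and an in-memory dictionary is lost on restart, so a real deployment would need persistent storage. It would also need to be registered as a singleton so state survives between webhook requests.

```csharp
using System.Collections.Concurrent;

// Hypothetical in-memory store for voice notes that arrived without an
// email address, keyed by the sender's WhatsApp number.
public class PendingVoiceNoteStore
{
    private readonly ConcurrentDictionary<string, string> _pending = new();

    // Remember the media URL of a voice note that had no email address in it.
    public void Save(string sender, string mediaUrl) => _pending[sender] = mediaUrl;

    // True while the bot is waiting for this sender to provide an address.
    public bool IsAwaitingEmail(string sender) => _pending.ContainsKey(sender);

    // Retrieve and clear the pending voice note, if there is one.
    public bool TryTake(string sender, out string? mediaUrl)
    {
        var found = _pending.TryRemove(sender, out var url);
        mediaUrl = url;
        return found;
    }
}
```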

The email extraction process uses a regular expression to find valid email patterns in messages. If an invalid email address is provided, the service responds with an error message and prompts the user to enter a valid one. Finally, the service returns a confirmation message, which is sent back to the user through Twilio WhatsApp.
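A sketch of regex-based extraction and validation follows. The pattern is a pragmatic approximation chosen for illustration, not a full RFC 5322 validator and not necessarily the tutorial's exact expression. EmailExtractor is a hypothetical name.

```csharp
using System.Text.RegularExpressions;

// Sketch of regex-based email extraction (pattern is an approximation).
public static class EmailExtractor
{
    private const string Pattern = @"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}";

    private static readonly Regex Finder = new(Pattern, RegexOptions.Compiled);
    private static readonly Regex Whole = new("^" + Pattern + "$", RegexOptions.Compiled);

    // Returns the first email-like token in the text, or null if none is found.
    public static string? Extract(string? text)
    {
        if (string.IsNullOrWhiteSpace(text)) return null;
        var match = Finder.Match(text);
        return match.Success ? match.Value : null;
    }

    // True when the whole input, ignoring surrounding spaces, is one address.
    public static bool IsValid(string input) => Whole.IsMatch(input.Trim());
}
```

Speech-to-text output may spell an address out in words (for example "at" and "dot"), which this pattern would not match; that is one reason the fallback of typing the address as text is useful.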

TranscriptionService

This service handles audio transcription by uploading the audio data to AssemblyAI, creating a transcription request, and polling until the transcription is complete. Go ahead and create a TranscriptionService.cs file. Update it with the code below.

The TranscriptionService class is responsible for handling voice note transcription using AssemblyAI. It takes an audio file as a byte array, uploads it to AssemblyAI, requests a transcription, and continuously polls until the transcription is completed. Once transcribed, it returns the text output. If any step fails, the service logs the error and throws an exception. This service is essential for converting WhatsApp voice messages into email-friendly text before being sent.

The class implements the ITranscriptionService interface, ensuring it follows a contract for transcription-related functionality. It uses dependency injection to receive an HttpClient for making API requests and an ILogger for logging, and it loads the AssemblyAI API key from the configuration. If the key is missing, an exception is thrown so the service fails immediately instead of sending unauthenticated requests.

The core functionality is implemented in TranscribeAudioAsync(byte[] audioData), which follows three key steps. First, it uploads the audio file to AssemblyAI, receiving a unique upload URL in response. If the upload fails, it logs the error and throws an exception. Second, it creates a transcription request using the upload URL and submits it to AssemblyAI. If the request is unsuccessful, it logs an error and stops execution. Third, the service enters a polling loop where it checks the transcription status every second for up to 60 seconds. If the transcription is completed, it returns the extracted text. If an error occurs, it logs the issue and throws an exception. If the timeout limit is reached, it throws a timeout exception.
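The polling step can be isolated into a small, testable helper. In this sketch the status strings are the values described above (queued, processing, completed, error); passing the status check in as a delegate is an illustrative design choice, and TranscriptPoller and TranscriptStatus are hypothetical names.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Minimal status shape used by the poller (hypothetical).
public record TranscriptStatus(string Status, string? Text, string? Error);

public static class TranscriptPoller
{
    // Calls getStatus until the transcript is completed, fails, or attempts run out.
    public static async Task<string> PollAsync(
        Func<CancellationToken, Task<TranscriptStatus>> getStatus,
        TimeSpan interval,
        int maxAttempts,
        CancellationToken ct = default)
    {
        for (var attempt = 0; attempt < maxAttempts; attempt++)
        {
            var result = await getStatus(ct);

            if (result.Status == "completed")
                return result.Text ?? string.Empty;

            if (result.Status == "error")
                throw new InvalidOperationException($"Transcription failed: {result.Error}");

            // Still "queued" or "processing": wait before checking again.
            await Task.Delay(interval, ct);
        }

        throw new TimeoutException("Transcription did not complete in time.");
    }
}
```

In the real service, the delegate would wrap a GET request to https://api.assemblyai.com/v2/transcript/{id}, and calling PollAsync with TimeSpan.FromSeconds(1) and 60 attempts reproduces the one-second interval and 60-second limit described above.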

To support this process, the service defines helper classes to structure JSON responses from AssemblyAI. The UploadResponse class stores the upload URL returned after an audio file is uploaded. The TranscriptionRequest class defines the JSON structure for transcription requests, including the audio_url and an option for language_detection. The TranscriptionResponse class stores the transcription ID, status (queued, processing, completed, or error), the final transcribed text, and any error messages.
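These helper classes map AssemblyAI's snake_case JSON fields onto C# properties. The sketch below assumes System.Text.Json; only the fields mentioned above are declared, and the tutorial's classes may use a different serializer or attribute style.

```csharp
using System.Text.Json.Serialization;

// Sketch of DTOs for AssemblyAI's JSON payloads (System.Text.Json).
public class UploadResponse
{
    [JsonPropertyName("upload_url")]
    public string UploadUrl { get; set; } = string.Empty;
}

public class TranscriptionRequest
{
    [JsonPropertyName("audio_url")]
    public string AudioUrl { get; set; } = string.Empty;

    [JsonPropertyName("language_detection")]
    public bool LanguageDetection { get; set; }
}

public class TranscriptionResponse
{
    [JsonPropertyName("id")]
    public string Id { get; set; } = string.Empty;

    // "queued", "processing", "completed", or "error"
    [JsonPropertyName("status")]
    public string Status { get; set; } = string.Empty;

    [JsonPropertyName("text")]
    public string? Text { get; set; }

    [JsonPropertyName("error")]
    public string? Error { get; set; }
}
```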

This service ensures seamless voice-to-text conversion and integrates efficiently with the WhatsApp message handling workflow.

Next, create ContentService.cs.

ContentService

The ContentService class is responsible for enhancing transcribed text by transforming it into a professional email format. It integrates with OpenAI's API to generate well-structured and formal emails from voice transcriptions. This service ensures that voice-to-text conversions are polished and properly formatted before being sent via email. Update it with the code below.

The class implements the IContentService interface, establishing a structured approach to processing content. It is initialized in the constructor by retrieving an API key from the configuration settings and using it to create a new instance of ChatClient with a specified model ("o3-mini-2025-01-31"). This setup readies the service for interacting with OpenAI's chat-based API.

The primary work is performed in the EnhanceContentAsync(string transcribedText) method. Here, a list of chat messages is created with two key components:

- A system message that instructs the AI to transform the given message into a professional email. It directs the AI to keep the core content intact while formalizing the structure and adding a proper greeting and closing.

- A user message that carries the original transcribed text.

The ChatClient then processes these messages asynchronously by calling CompleteChatAsync(messages). Finally, the method retrieves the last message from the AI's response, trims any extra whitespace, and returns this polished version of the transcription as a professional email.
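A sketch of this flow follows, assuming the official OpenAI .NET library (the OpenAI NuGet package, version 2.x), which provides ChatClient, SystemChatMessage, and UserChatMessage. The class name ContentServiceSketch and the exact prompt wording are illustrative, not the tutorial's original code.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using OpenAI.Chat;

// Sketch using the OpenAI .NET library v2.x; prompt wording is illustrative.
public class ContentServiceSketch
{
    private readonly ChatClient _client;

    public ContentServiceSketch(string apiKey) =>
        _client = new ChatClient(model: "o3-mini-2025-01-31", apiKey: apiKey);

    // Builds the system + user message pair sent to the model.
    public static List<ChatMessage> BuildMessages(string transcribedText) => new()
    {
        new SystemChatMessage(
            "Transform the following message into a professional email. " +
            "Keep the core content intact, formalize the structure, " +
            "and add a proper greeting and closing."),
        new UserChatMessage(transcribedText)
    };

    public async Task<string> EnhanceContentAsync(string transcribedText)
    {
        ChatCompletion completion = await _client.CompleteChatAsync(BuildMessages(transcribedText));

        // Take the last content part of the reply and trim surrounding whitespace.
        return completion.Content.Last().Text.Trim();
    }
}
```

Keeping BuildMessages static makes the prompt easy to inspect or tweak without calling the API.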

Proceed to create the EmailService.cs file.

EmailService

The EmailService class is responsible for sending emails using SendGrid, ensuring that transcriptions and enhanced content reach the intended recipients. Update it with the code below.

It implements IEmailService, enforcing a contract for email-sending functionality. The service is initialized with a SendGridClient, which requires an API key, and it retrieves the sender's email address and name from the configuration settings. If any of these configurations are missing, the constructor throws an exception to prevent misconfigured email sending.

The primary method, SendEmailAsync(string to, string subject, string content), handles email dispatch. It first constructs the sender and recipient email addresses using SendGrid’s EmailAddress class. Then, it uses MailHelper.CreateSingleEmail() to create an email message, formatting the content with HTML styling for better readability. The email is then sent via _client.SendEmailAsync(msg), and if the response indicates failure, an exception is thrown to signal the issue.
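The sketch below shows the SendGrid calls described above using the SendGrid NuGet package (SendGridClient, EmailAddress, MailHelper.CreateSingleEmail). The ToHtml helper is a hypothetical example of "HTML styling": it HTML-encodes the text and turns blank-line-separated blocks into paragraphs.

```csharp
using System;
using System.Linq;
using System.Net;
using System.Threading.Tasks;
using SendGrid;
using SendGrid.Helpers.Mail;

// Sketch of a SendGrid-based email service; ToHtml is a hypothetical helper.
public class EmailServiceSketch
{
    private readonly SendGridClient _client;
    private readonly EmailAddress _from;

    public EmailServiceSketch(string apiKey, string fromEmail, string fromName)
    {
        if (string.IsNullOrWhiteSpace(apiKey) || string.IsNullOrWhiteSpace(fromEmail))
            throw new InvalidOperationException("SendGrid settings are missing.");
        _client = new SendGridClient(apiKey);
        _from = new EmailAddress(fromEmail, fromName);
    }

    // Encodes the text and wraps blank-line-separated blocks in <p> elements.
    public static string ToHtml(string content)
    {
        var paragraphs = content
            .Replace("\r\n", "\n")
            .Split("\n\n", StringSplitOptions.RemoveEmptyEntries)
            .Select(p => $"<p>{WebUtility.HtmlEncode(p.Trim()).Replace("\n", "<br>")}</p>");
        return $"<div style=\"font-family: Arial, sans-serif; line-height: 1.5;\">{string.Concat(paragraphs)}</div>";
    }

    public async Task SendEmailAsync(string to, string subject, string content)
    {
        var msg = MailHelper.CreateSingleEmail(_from, new EmailAddress(to), subject, content, ToHtml(content));
        var response = await _client.SendEmailAsync(msg);

        // Any non-2xx status is treated as a failure.
        if ((int)response.StatusCode >= 300)
            throw new InvalidOperationException($"SendGrid returned {(int)response.StatusCode}.");
    }
}
```

Sending both plain text and HTML lets mail clients that block HTML still show a readable message.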

This service ensures reliable email delivery, making it an essential component for sending professionally formatted transcriptions via email.

Move forward to the controllers.

Implement the Controller

The MessageController class handles HTTP requests for processing voice messages and converting them into text-based emails. Create a Controllers folder in VoiceToEmail.API and add a file named MessageController.cs. Update it with the code below.

This code uses dependency injection to access ITranscriptionService, IContentService, and IEmailService. The controller exposes a POST endpoint that accepts an audio file and a recipient's email address as input.

When a request is received, the controller first validates the uploaded audio file to ensure it exists and has content. It then reads the audio data into memory and calls TranscribeAudioAsync() to obtain a text-based transcription. The transcribed text is further enhanced using EnhanceContentAsync(), which formats it into a professional email. Finally, SendEmailAsync() is called to send the processed transcription to the specified recipient.

Throughout the process, the controller logs key steps, ensuring issues can be traced and debugged effectively. If any errors occur, they are logged, and the API responds with a 500 Internal Server Error. On success, the response includes the original transcription, enhanced content, recipient email, and a status message.
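Here is a sketch of that flow as an ASP.NET Core controller action. The route, form field names (audioFile, recipientEmail), email subject, and response shape are assumptions for illustration, and the three service interfaces are repeated so the snippet compiles on its own.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Logging;

// Service contracts repeated here so the sketch compiles on its own.
public interface ITranscriptionService { Task<string> TranscribeAudioAsync(byte[] audioData); }
public interface IContentService { Task<string> EnhanceContentAsync(string transcribedText); }
public interface IEmailService { Task SendEmailAsync(string to, string subject, string content); }

// Sketch only: route, field names, and subject line are assumptions.
[ApiController]
[Route("api/[controller]")]
public class MessageController : ControllerBase
{
    private readonly ITranscriptionService _transcription;
    private readonly IContentService _content;
    private readonly IEmailService _email;
    private readonly ILogger<MessageController> _logger;

    public MessageController(ITranscriptionService transcription, IContentService content,
        IEmailService email, ILogger<MessageController> logger)
    {
        _transcription = transcription;
        _content = content;
        _email = email;
        _logger = logger;
    }

    [HttpPost]
    public async Task<IActionResult> Post(IFormFile? audioFile, [FromForm] string recipientEmail)
    {
        // Reject missing or empty uploads before calling any external service.
        if (audioFile is null || audioFile.Length == 0)
            return BadRequest("An audio file is required.");

        try
        {
            using var buffer = new MemoryStream();
            await audioFile.CopyToAsync(buffer);

            _logger.LogInformation("Transcribing {Bytes} bytes of audio", buffer.Length);
            var transcription = await _transcription.TranscribeAudioAsync(buffer.ToArray());

            var enhanced = await _content.EnhanceContentAsync(transcription);
            await _email.SendEmailAsync(recipientEmail, "Voice message", enhanced);

            return Ok(new { transcription, enhancedContent = enhanced, recipientEmail, status = "Email sent" });
        }
        catch (Exception ex)
        {
            _logger.LogError(ex, "Failed to process voice message");
            return StatusCode(500, "An error occurred while processing the voice message.");
        }
    }
}
```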

Next, create the WhatsAppController, which connects the application to the Twilio webhook.

WhatsAppController

The WhatsAppController class serves as the webhook endpoint for Twilio's WhatsApp API, allowing WhatsApp messages to be processed in real time. Create a WhatsAppController.cs file and update it with the code below.

The controller is initialized with IWhatsAppService, which handles incoming messages, and an ILogger for logging.

A GET request to the controller verifies that the WhatsApp webhook is accessible, responding with a basic success message. The primary functionality, however, lies in the POST webhook endpoint, which Twilio calls when a message is received. This method extracts form data from the incoming request, logs the details, and constructs a WhatsAppMessage object containing metadata such as the message sender, recipient, text body, and any media attachments.

If media, such as a voice note, is present, the controller iterates through the media URLs and adds them to the message object. The fully constructed message is then passed to HandleIncomingMessageAsync(), which determines the appropriate response based on the message content. The response is formatted into TwiML (Twilio Markup Language) and returned to Twilio, ensuring the sender receives an appropriate reply.
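Two pieces of this controller are easy to isolate: collecting MediaUrl0 through MediaUrl{NumMedia-1} from Twilio's form fields, and wrapping a reply in a TwiML Response/Message document. The helper names below are hypothetical; the form field names are the ones Twilio posts to messaging webhooks.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Xml.Linq;

// Hypothetical helpers for a Twilio WhatsApp webhook controller.
public static class TwilioWebhookHelpers
{
    // Reads NumMedia, then collects MediaUrl0..MediaUrl{NumMedia-1}.
    public static List<string> GetMediaUrls(IReadOnlyDictionary<string, string> form)
    {
        var urls = new List<string>();
        if (!form.TryGetValue("NumMedia", out var raw) ||
            !int.TryParse(raw, NumberStyles.Integer, CultureInfo.InvariantCulture, out var count))
            return urls;

        for (var i = 0; i < count; i++)
        {
            if (form.TryGetValue($"MediaUrl{i}", out var url) && !string.IsNullOrEmpty(url))
                urls.Add(url);
        }
        return urls;
    }

    // XElement escapes characters such as & and < so user text cannot break the XML.
    public static string ToTwiml(string reply) =>
        new XElement("Response", new XElement("Message", reply))
            .ToString(SaveOptions.DisableFormatting);
}
```

Inside the controller, Request.Form could be converted with Request.Form.ToDictionary(f => f.Key, f => f.Value.ToString()), and the reply returned with Content(twiml, "application/xml").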

If any errors occur during processing, the controller logs them and returns a fallback TwiML response, apologizing for the issue. This controller is essential for seamlessly handling WhatsApp voice messages, allowing them to be processed, transcribed, and sent as emails via Twilio’s messaging system.

Configure Application

The JSON configuration file defines essential API keys, logging settings, and service credentials required for the VoiceToEmail API to function properly. It provides structured environment settings for external services such as OpenAI, SendGrid, Twilio, and AssemblyAI. Update the appsettings.json as shown below. Remember to replace the placeholders with real credential values.

The Prerequisites section lists the accounts you need to sign up for to obtain these API keys and service credentials (OpenAI, SendGrid, Twilio, and AssemblyAI).

The OpenAI section contains the ApiKey, which is used in ContentService to enhance transcribed text by converting it into a professionally formatted email. The SendGrid section includes an ApiKey for authentication, along with FromEmail and FromName, which define the sender’s email address and display name when sending emails. These settings allow EmailService to manage email delivery reliably.

For logging, the configuration specifies different logging levels. By default, general logs are set to "Information", ensuring key events are recorded, while ASP.NET Core framework logs are filtered to "Warning" to prevent excessive log noise. This improves monitoring and debugging by focusing on important events.

The Twilio section holds credentials for handling WhatsApp messages, including the AccountSid and AuthToken, which authenticate API requests. Additionally, WhatsAppNumber specifies the Twilio WhatsApp sender number used for messaging, allowing seamless integration with WhatsAppService. You can use the phone number of a phone that you have access to with WhatsApp installed. If you want instructions on setting up a registered sender for WhatsApp, review the documentation.

The AssemblyAI section contains an ApiKey required for speech-to-text transcription, enabling TranscriptionService to process voice messages and convert them into text-based content. This API plays a crucial role in ensuring accurate transcriptions before they are formatted and sent via email.

Finally, the AllowedHosts setting is configured as * to permit requests from any domain, which is useful during development. However, in production, this setting may need to be restricted for security reasons.
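In ASP.NET Core configuration, nested JSON sections are addressed with a colon, so a value under "Twilio": { "AccountSid": ... } is read as Twilio:AccountSid. The sketch below uses Microsoft.Extensions.Configuration with an in-memory collection standing in for appsettings.json; the placeholder values and the ConfigDemo and Require names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Configuration;

// Sketch: reading the settings described above; values are placeholders.
public static class ConfigDemo
{
    // Returns the value or throws, mirroring the services' fail-fast checks.
    public static string Require(IConfiguration config, string key) =>
        config[key] is { Length: > 0 } value
            ? value
            : throw new InvalidOperationException($"Missing configuration value '{key}'.");

    // In-memory stand-in for appsettings.json so the sketch runs alone.
    public static IConfiguration BuildSample() =>
        new ConfigurationBuilder()
            .AddInMemoryCollection(new Dictionary<string, string?>
            {
                ["OpenAI:ApiKey"] = "sk-placeholder",
                ["SendGrid:ApiKey"] = "SG.placeholder",
                ["SendGrid:FromEmail"] = "sender@example.com",
                ["SendGrid:FromName"] = "Voice to Email",
                ["Twilio:AccountSid"] = "ACxxxxxxxx",
                ["Twilio:AuthToken"] = "placeholder",
                ["Twilio:WhatsAppNumber"] = "+15551234567",
                ["AssemblyAI:ApiKey"] = "placeholder"
            })
            .Build();
}
```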

Next, update the Program.cs with the code below to bundle everything in our application together and bring it to life.

The Program.cs file is responsible for configuring and starting the VoiceToEmail API application. It initializes dependency injection, configures services, sets up HTTP request handling, and enables logging and debugging features.

The application is built with ASP.NET Core using the minimal hosting model, in which services and middleware are configured directly in Program.cs. It begins by creating a WebApplicationBuilder, which is used to configure services and middleware. The AddControllers() method is called to enable API controller functionality, including support for XML serialization. Additionally, AddEndpointsApiExplorer() is registered to expose endpoint metadata to tools like Swagger.

To improve the developer experience, the application integrates Swagger for API documentation. AddSwaggerGen() registers the Swagger document generator, and the Swagger UI middleware then lets developers visually explore the API endpoints. The application also configures CORS (Cross-Origin Resource Sharing) to permit requests from any origin during development, making it easier to test the API from different front-end clients. It then builds the app and configures the request pipeline, enabling routing, authorization, and endpoint mapping for API controllers.
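A sketch of a Program.cs that follows these steps is below, assuming the Swashbuckle.AspNetCore package for Swagger. The service registrations are assumptions about how the tutorial's classes could be wired up, so they are left as comments to keep the sketch compilable on its own.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using Microsoft.Extensions.Logging;

var builder = WebApplication.CreateBuilder(args);

// Controllers with XML formatters, endpoint metadata, and Swagger generation.
builder.Services.AddControllers().AddXmlSerializerFormatters();
builder.Services.AddEndpointsApiExplorer();
builder.Services.AddSwaggerGen();

// Permissive CORS policy intended for development only.
builder.Services.AddCors(options =>
    options.AddDefaultPolicy(policy =>
        policy.AllowAnyOrigin().AllowAnyHeader().AllowAnyMethod()));

// Assumed registrations for the tutorial's services (uncomment in the real project):
// builder.Services.AddHttpClient<ITranscriptionService, TranscriptionService>();
// builder.Services.AddScoped<IContentService, ContentService>();
// builder.Services.AddScoped<IEmailService, EmailService>();
// builder.Services.AddHttpClient<IWhatsAppService, WhatsAppService>();

var app = builder.Build();

if (app.Environment.IsDevelopment())
{
    app.UseSwagger();
    app.UseSwaggerUI();
}

app.UseCors();
app.UseAuthorization();
app.MapControllers();

app.Logger.LogInformation("Starting VoiceToEmail API in {Environment}", app.Environment.EnvironmentName);
app.Run();
```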

Finally, logging is enabled using ILogger, ensuring that important events such as application startup and environment configuration are recorded. The application then runs the server, making the API available for handling requests. This file acts as the entry point for the entire system, bringing together all the services and middleware required to process voice messages and convert them into emails.

Test the Application

To verify that the WhatsApp voice-to-email API is working correctly, follow these steps:

Navigate to the VoiceToEmail.API project directory, then build and run the application with the dotnet run command.

This starts the server and displays the URL it is listening on, for example http://localhost:5168. Your port may differ; you can change it in Properties/launchSettings.json.

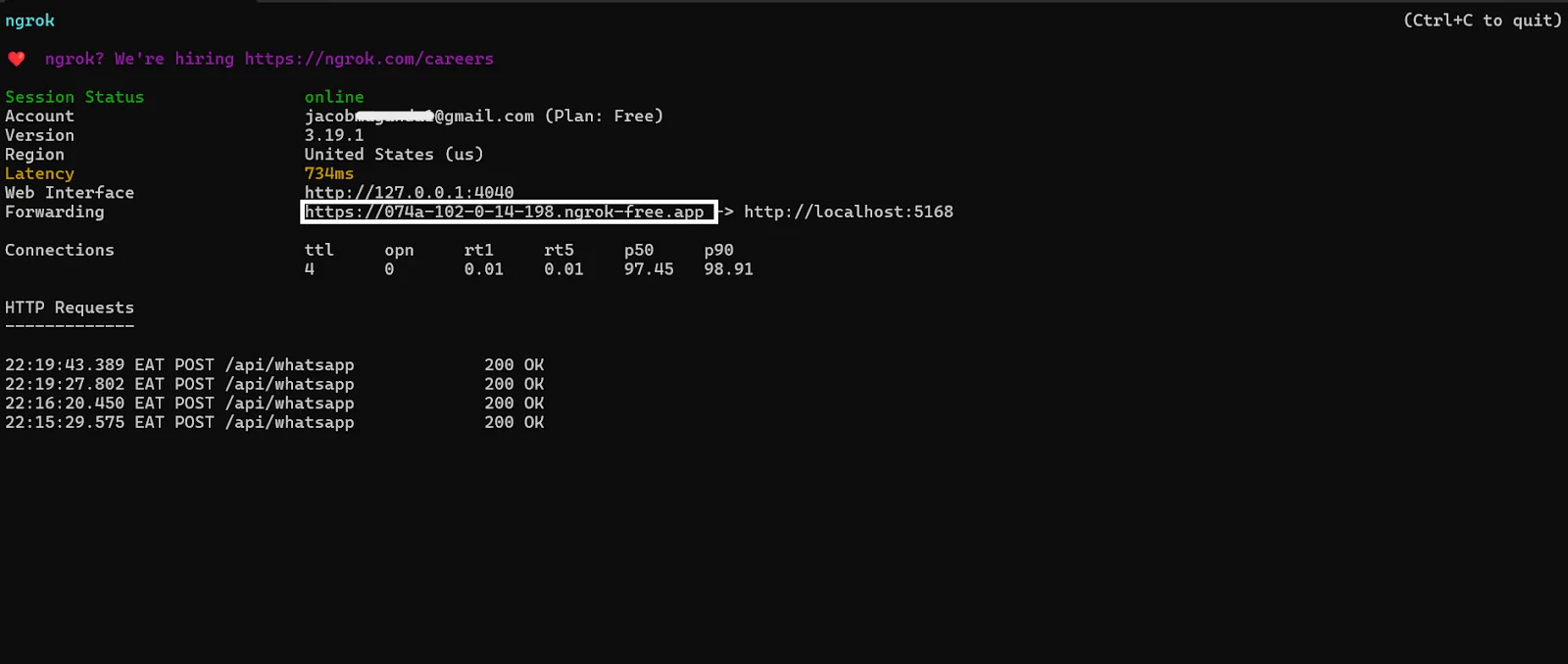

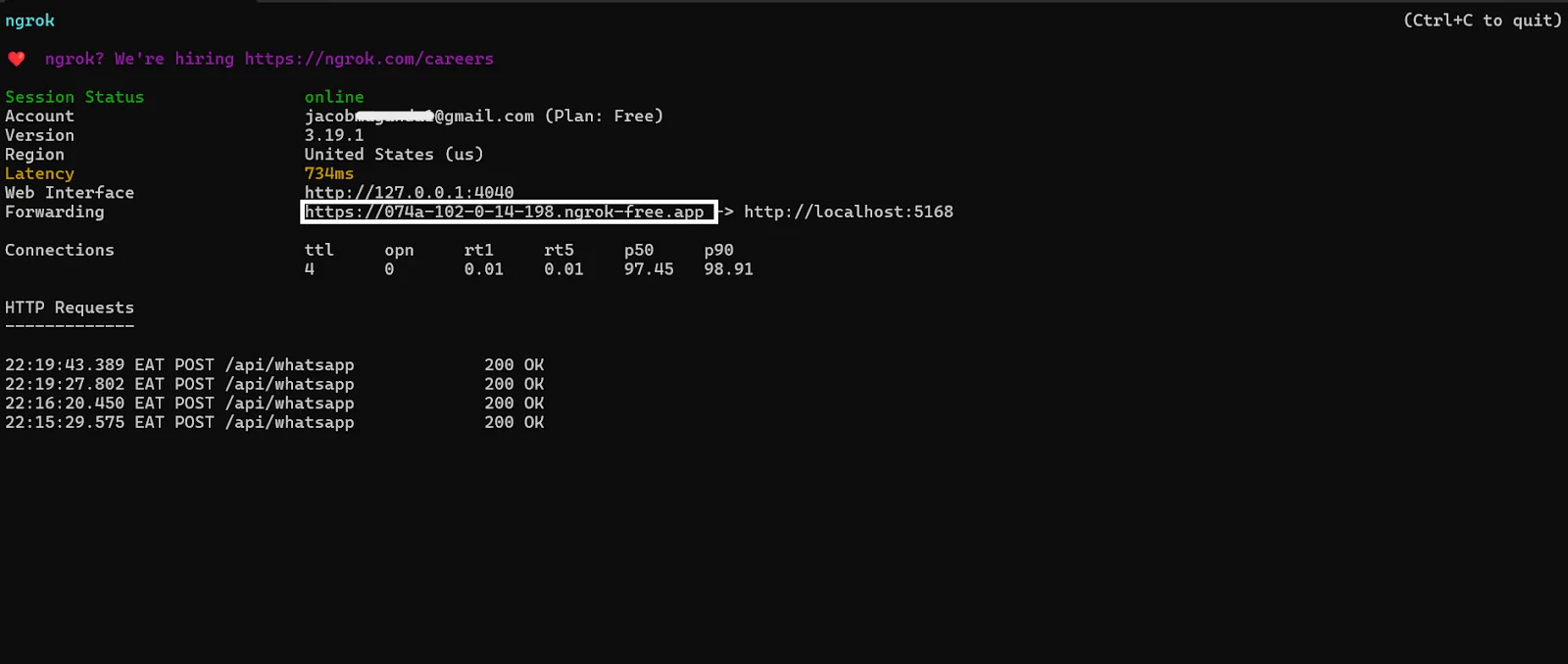

Because Twilio needs a publicly accessible URL for its WhatsApp webhook, use ngrok to expose your local server to the internet. Start ngrok by running ngrok http 5168, replacing 5168 with your application's port if it differs.

Once ngrok is running, it displays a public forwarding URL, such as https://randomstring.ngrok.io, as shown in the screenshot below.

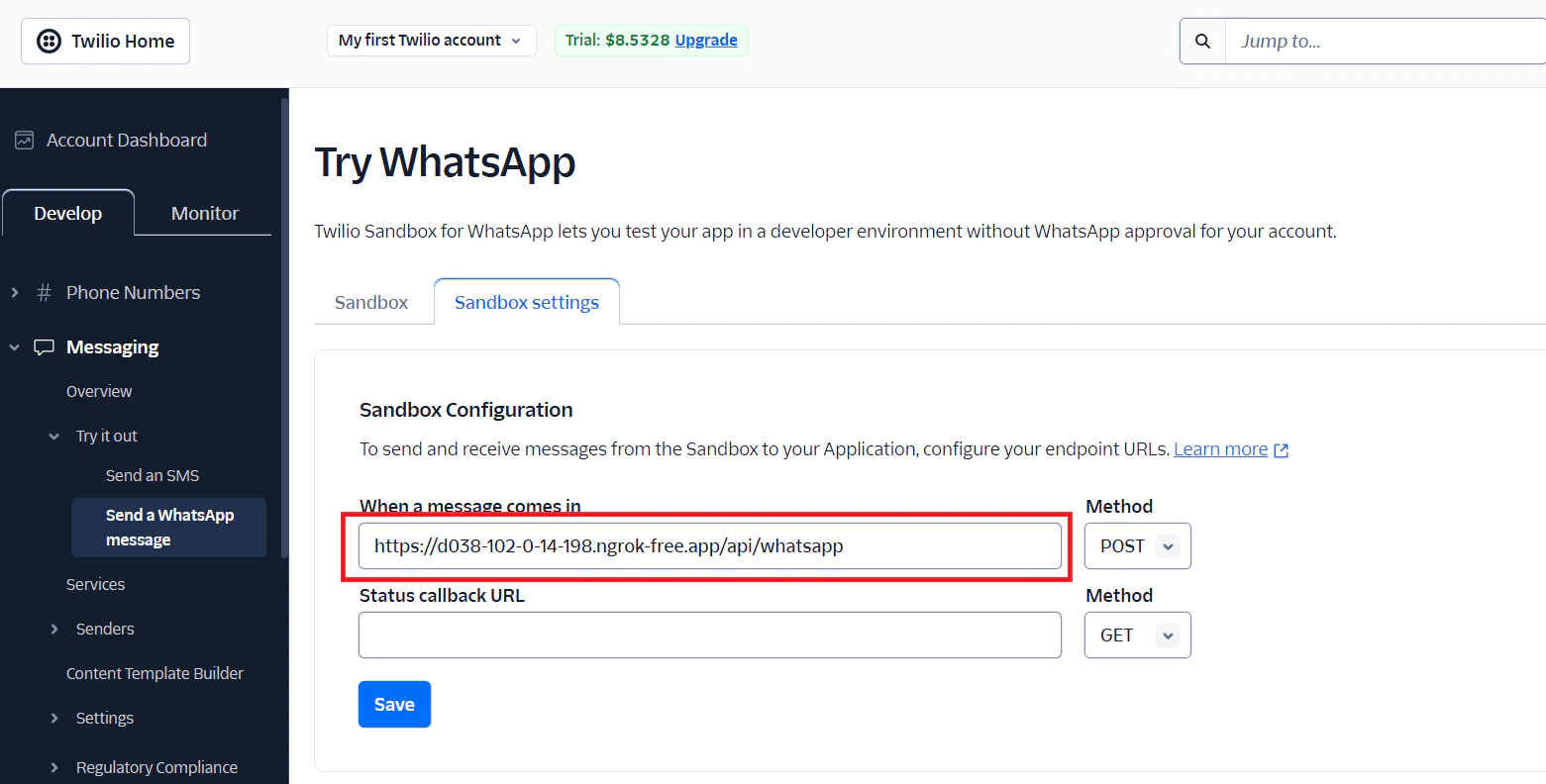

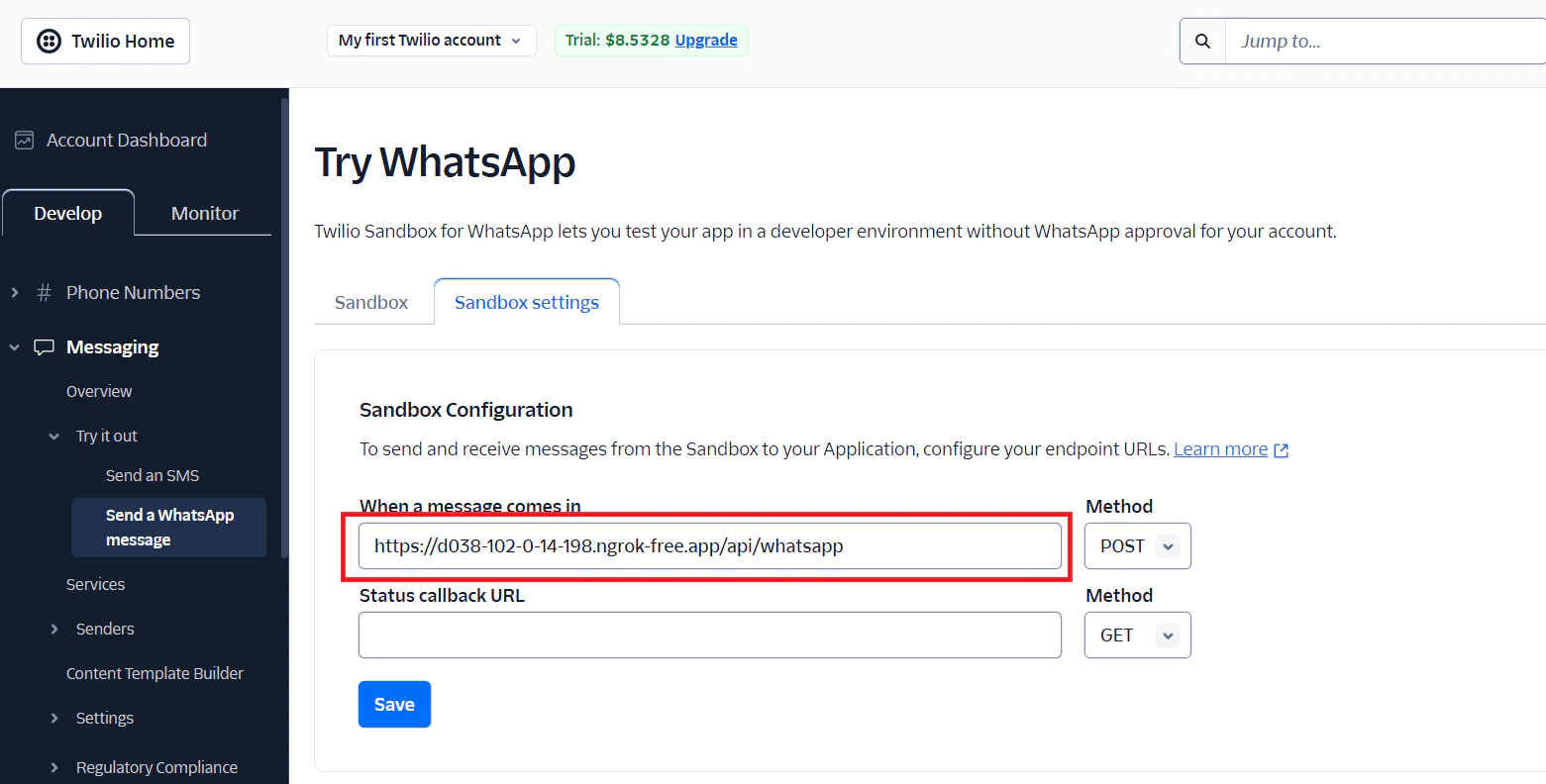

Head to the Twilio Try WhatsApp page. Make sure the phone number you listed in the settings file is connected to the WhatsApp sandbox by sending the join code shown on the page to the provided number. Then click the Sandbox Settings tab and configure the sandbox as follows:

- In the When a message comes in field, paste the ngrok forwarding URL and append /api/whatsapp to it, since that is where the webhook requests are processed.

- Set the Method to POST.

Click the Save button to confirm the configuration. Confirm you are on the right track as depicted in the screenshot below.

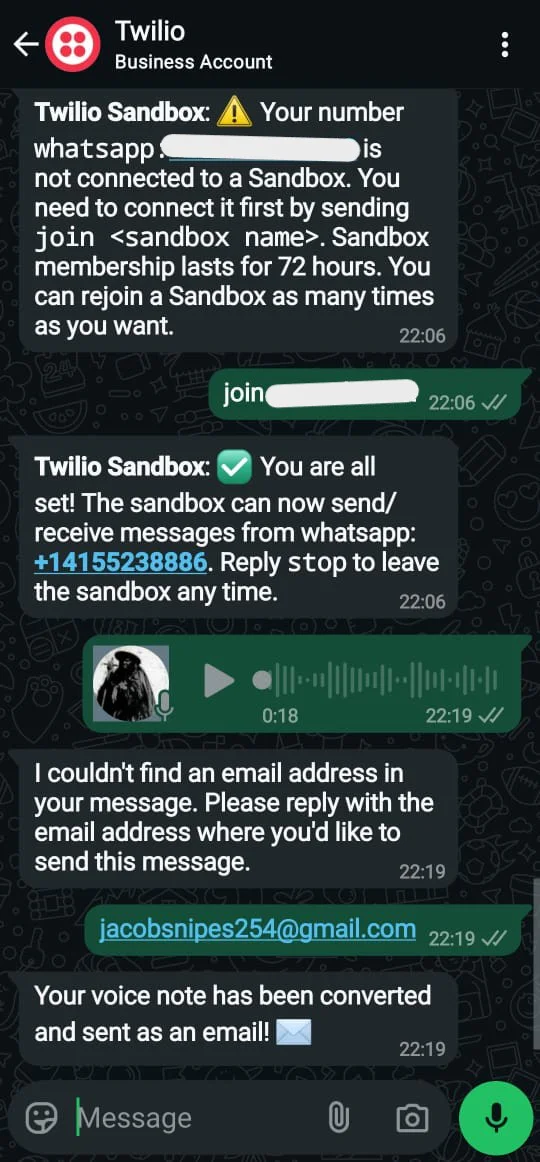

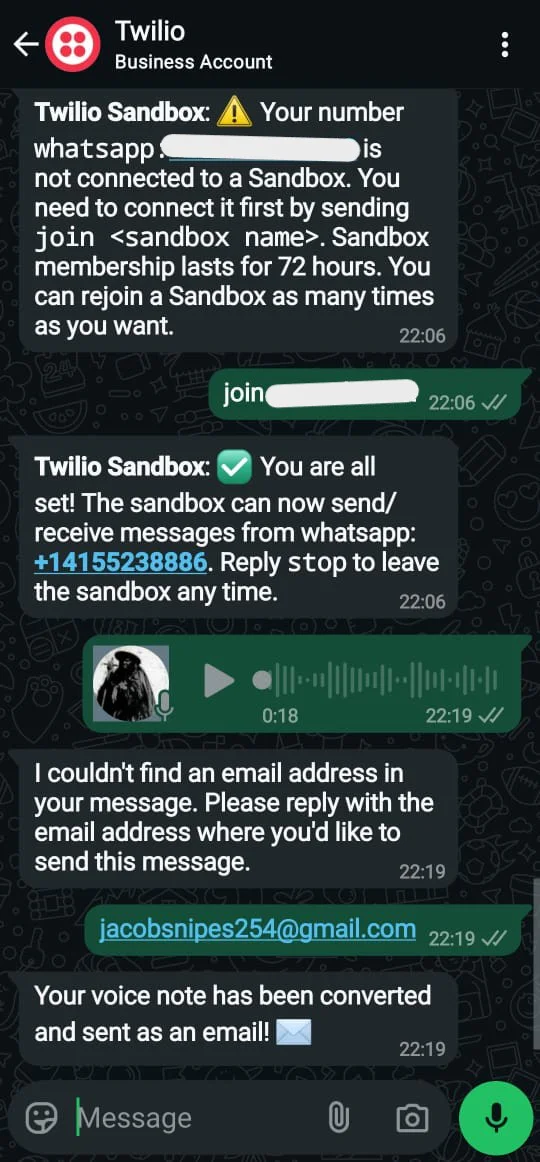

Your application is now connected to the sandbox. Send a voice note to test the transcription, personalization, and email delivery process. If an email address is not detected in the voice note, you can either send another recording that contains the email address or send the email address as text. The screenshots below show the application flow.

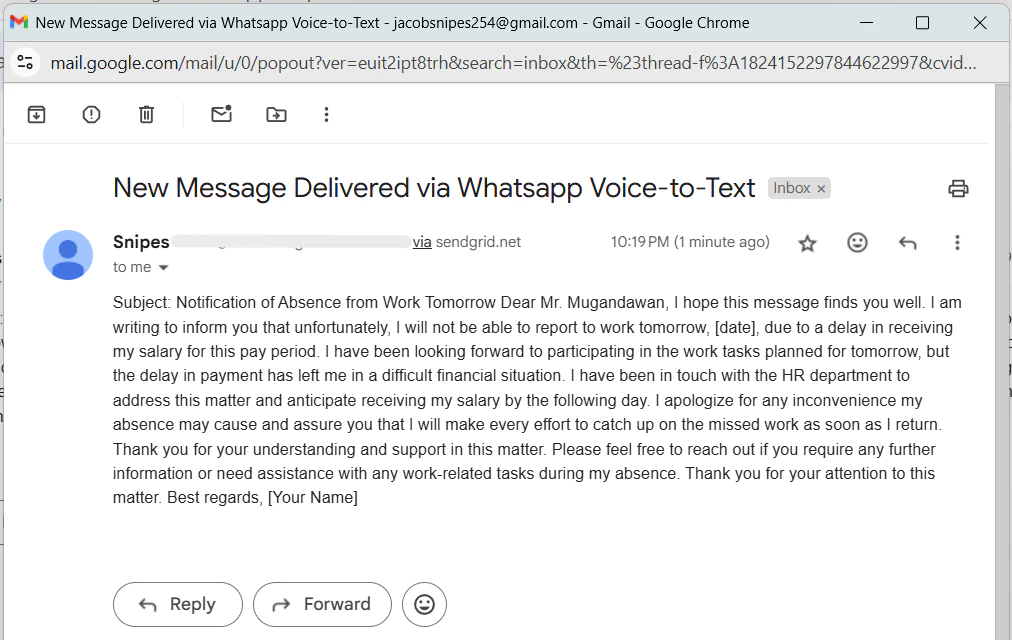

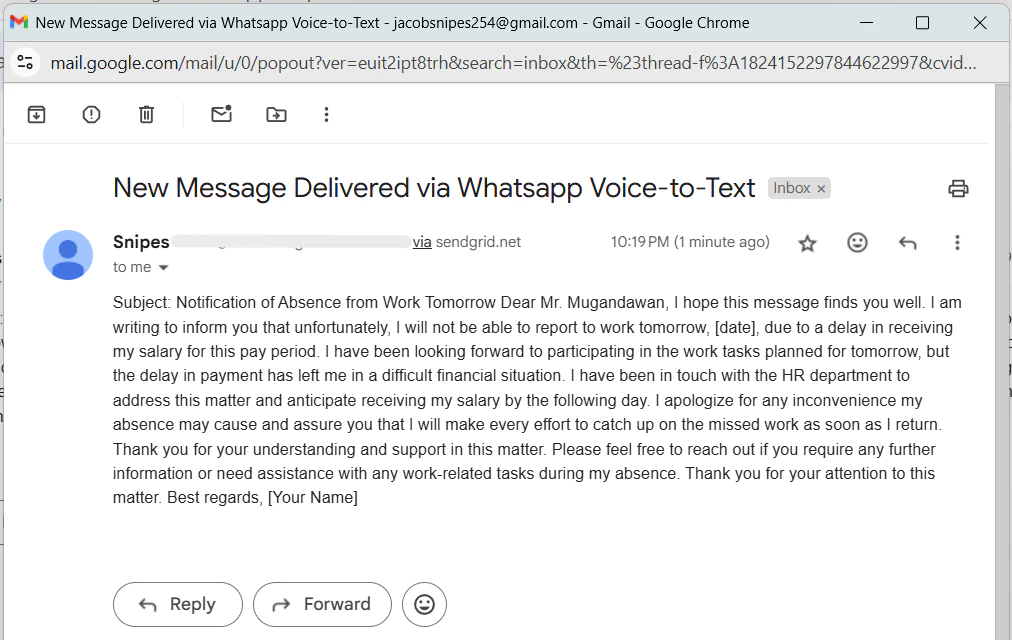

After sending a voice note, you should receive an email from Twilio SendGrid. As the screenshot shows, the received email contains a personalized version of the voice message's transcription. Remember that in EnhanceContentAsync you instructed the AI to rewrite your voice note with a proper greeting, closing, and professional language, so the email will not match what you said word for word. If you are happy with the results, verify the rest of the output. If not, you can adjust the AI prompt to change how the email is written.

Verifying the Output

- Check the Email – Ensure the email was delivered successfully to the intended recipient.

- Confirm the Transcription Accuracy – Compare the email's text with the original voice note. Minor errors may occur due to background noise or unclear speech.

- Ensure Proper Formatting – The email should have a clear subject, structured content, and proper paragraph formatting.

- Check the Sender Details – Verify that the email is sent from the configured SendGrid email address.

Troubleshooting tips

- If the transcription is inaccurate, feel free to try other transcription tools.

- If the email was not received, verify your SendGrid API key and email configuration, and confirm that the FromEmail address is a verified sender in your SendGrid account.

At this point, the application has successfully processed and transcribed the voice note and personalized it into an email.

What's Next for Your WhatsApp Voice-to-Email System?

You've built an AI agent-powered WhatsApp voice-to-email application that:

- Receives voice notes via Twilio WhatsApp and processes them in real time.

- Transcribes audio using AssemblyAI for speech-to-text conversion.

- Enhances transcriptions with OpenAI to create well-structured, professional emails.

- Delivers the resulting emails via SendGrid.

Possible extensions and use cases:

AI for the Visually Impaired – Develop voice-driven systems that transcribe, summarize, and deliver emails, helping visually impaired users navigate digital communication more efficiently.

CRM and Business Integration – Connect with customer management systems (CRM) to log voice interactions and automate business workflows.

Automated Response System – Implement AI-driven auto-replies for WhatsApp messages, providing instant feedback or call-to-action responses.

Multi-Language Support – Expand the transcription service to detect and process multiple languages, enabling global accessibility.

Further reading to deepen your skills:

- Sound Intelligence with Audio Identification and Recognition using Vector Embeddings via Twilio WhatsApp

- Enable Real Time Human-to-Human Voice Translation with Twilio ConversationRelay

Jacob automates applications using Twilio for communication, OpenAI for brain-like processing, and vector databases for intelligent search. He loves seeing real-world problems solved with AI and the agentic wave. Check out more of his work on GitHub.
