A/B Testing Twilio with Eppo


Do you have an idea to improve your customers’ experience, but aren’t quite certain if it will work? Have you wondered if you could test your idea with some users before you pitch it to your manager?

Well, you can, with A/B testing. An A/B test is an experiment in which you randomly assign users one of two (or more) versions of a product or feature to determine which one performs better.

Let’s work through an example. Say you send SMS reminders to your customers asking them to confirm attendance for an upcoming appointment, but only 10% of users respond on average. You have the idea of a nifty new message that could lead to a much larger percentage of users replying.

In this tutorial, you will learn to run an A/B test to identify the potential difference in user response rates between the current and new SMS messages using Eppo, an end-to-end experimentation platform.

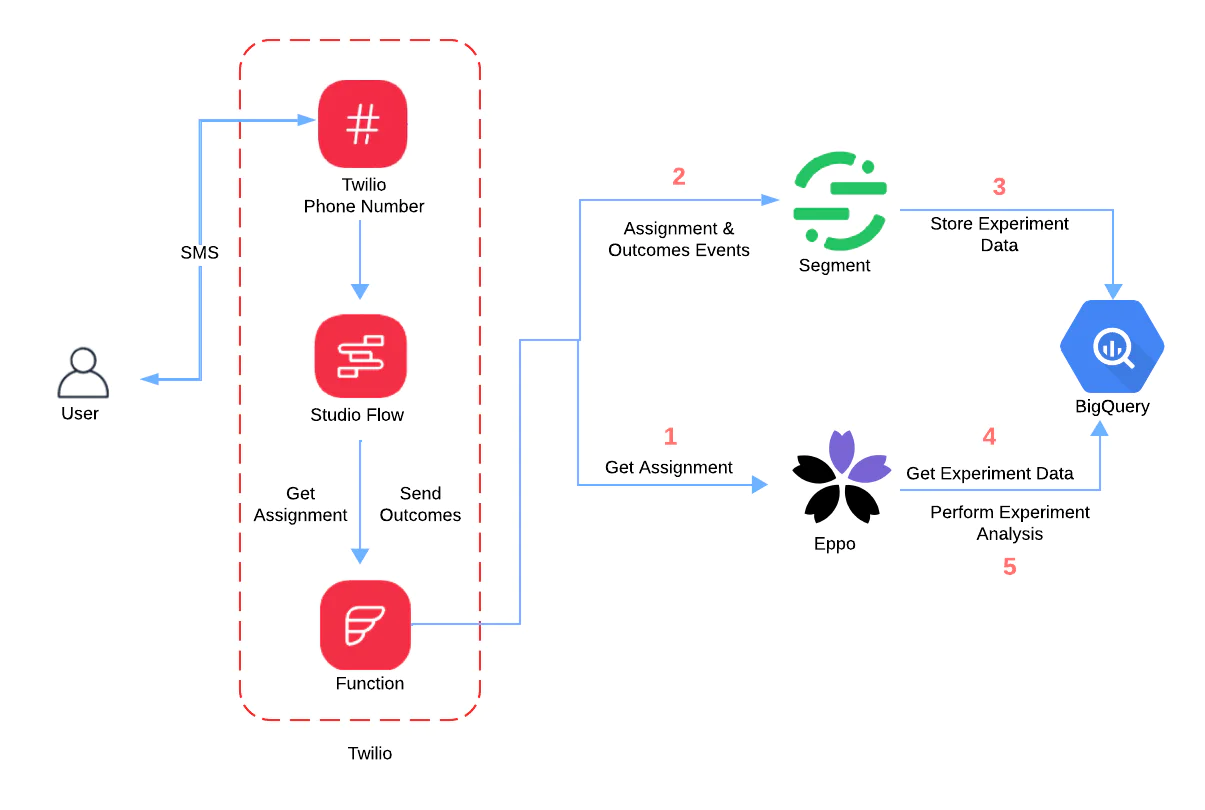

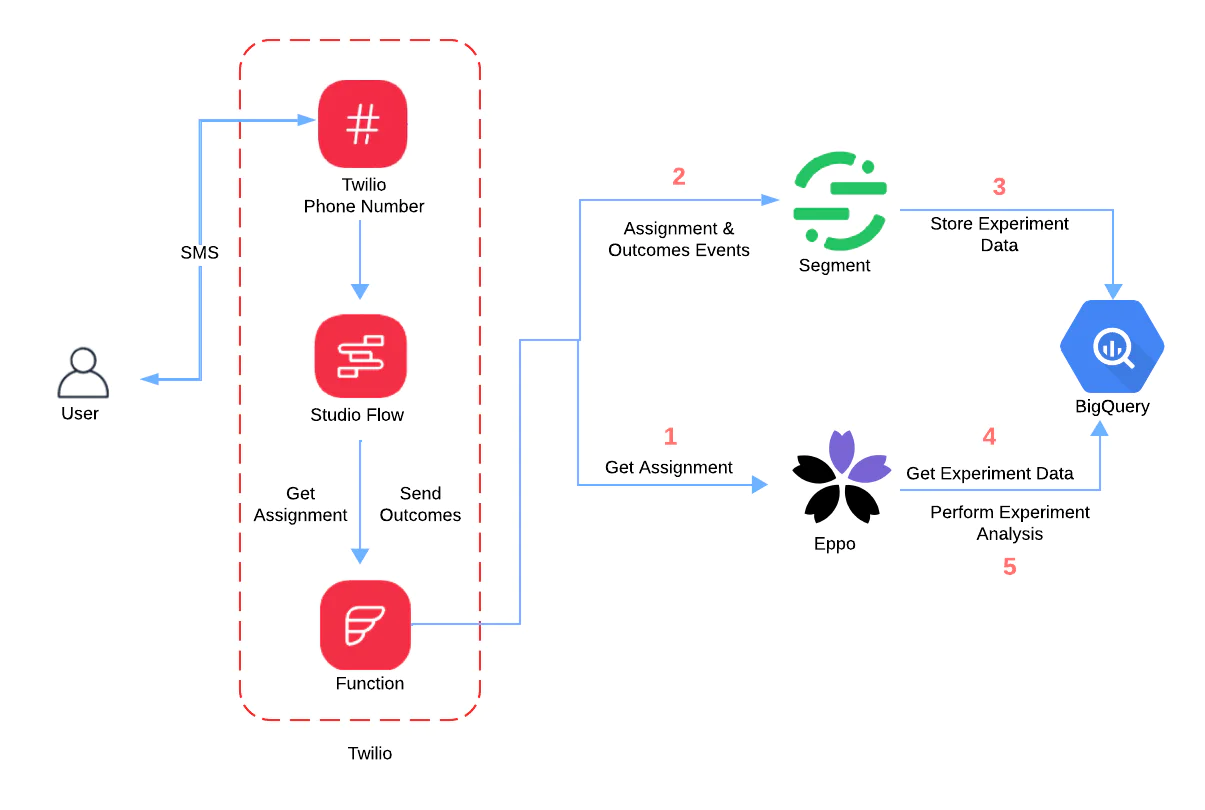

Architecture

Before you learn to implement the solution, let’s understand the architecture.

Components and Prerequisites

These are the key components of this solution:

- A Twilio Phone Number lets you send and receive messages. If you don’t have a Twilio account, you can sign up for a free trial account and follow these instructions to buy a number. Note that you will need to fulfill the requirements to register and use the number of your choice before you can complete the tutorial.

- Twilio Studio, a drag-and-drop tool to build voice and messaging applications. It will be included in your Twilio account.

- Twilio Functions, which is a serverless environment to host your code, and is also included in your Twilio account.

- Eppo, a warehouse-native A/B experimentation platform. If you don’t have an account, you can request one here.

- Segment, a Customer Data Platform tool that can collect data and send it to a data warehouse. You can sign up for a free account.

- BigQuery, an enterprise data warehouse from Google. You can sign up for a free Sandbox instance but you are also welcome to use your current data warehouse in this project (more on that later).

Workflow

These are the major steps involved in A/B testing your SMS messages, which you’ll be building throughout this tutorial:

- When your Studio Flow is executed, it starts by invoking a Function (get-assignment), which connects to Eppo.

- Eppo will determine which of the two SMS messages, current or new, a user should receive from Twilio. This is called an assignment.

- Before returning the assignment to your Studio Flow, the Function will also log the assignment details as an event in Segment.

- Once the Studio Flow receives a response from the get-assignment Function, it sends the assigned message in an SMS to the user via a Twilio phone number and then waits for a response.

- When the user responds, or if 4 hours have gone by without a response, the Studio Flow will invoke another Function (log-outcome). This Function will log the user’s response (replied or not replied) as an event in Segment.

- Segment will periodically (usually every hour) push the data collected into two BigQuery tables - Assignments & Experiment Outcomes.

- Eppo will read the two tables periodically (usually once a day) and perform some SQL computations in BigQuery to analyze the experiment data and provide a report to you.

Implementation Steps

Let’s go through the steps to implement this project, starting with reviewing the prerequisites.

Additional Prerequisites

In addition to getting yourself access to the accounts and number listed in the Components and Prerequisites section earlier, you will need the following.

- Node version 18 or greater

- An IDE such as VS Code

- Git or another tool to clone a repository from GitHub

- Twilio CLI to deploy Twilio functions

Configure BigQuery

Your data warehouse will be your source of truth for this experiment so Eppo can accurately measure the outcome using the business metrics you’ve already defined in your enterprise data warehouse.

This tutorial will use BigQuery but you will also find instructions to configure a warehouse of your choice.

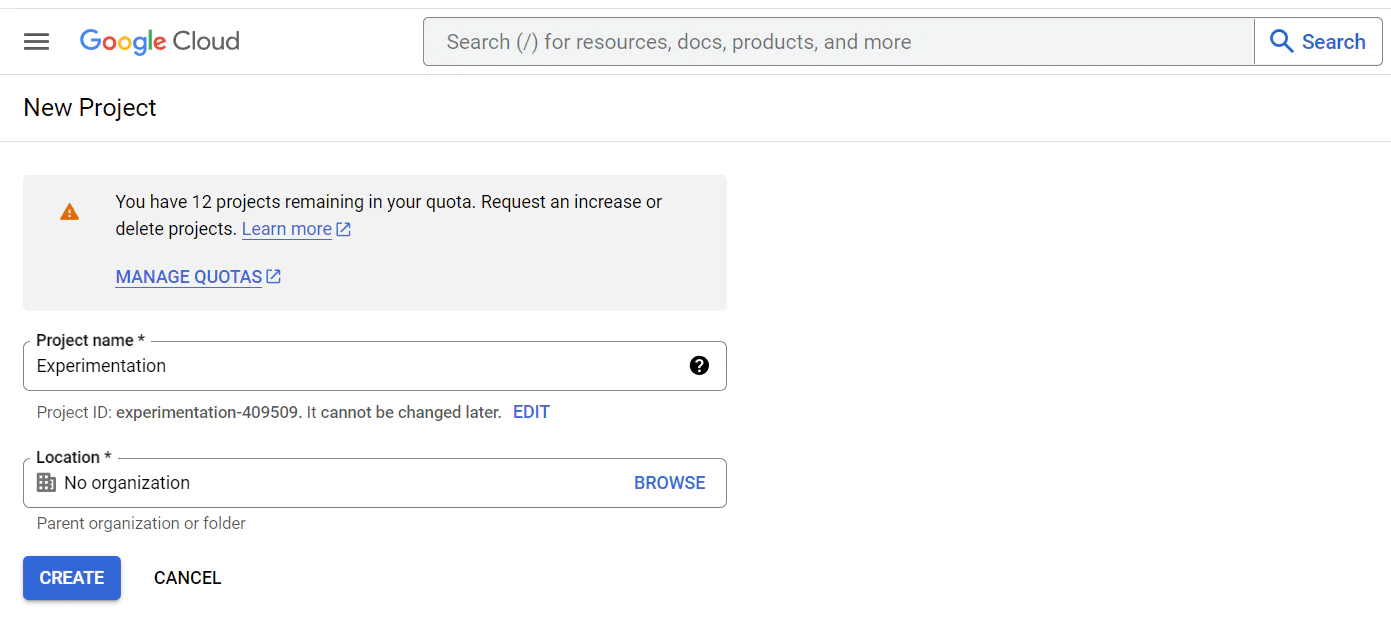

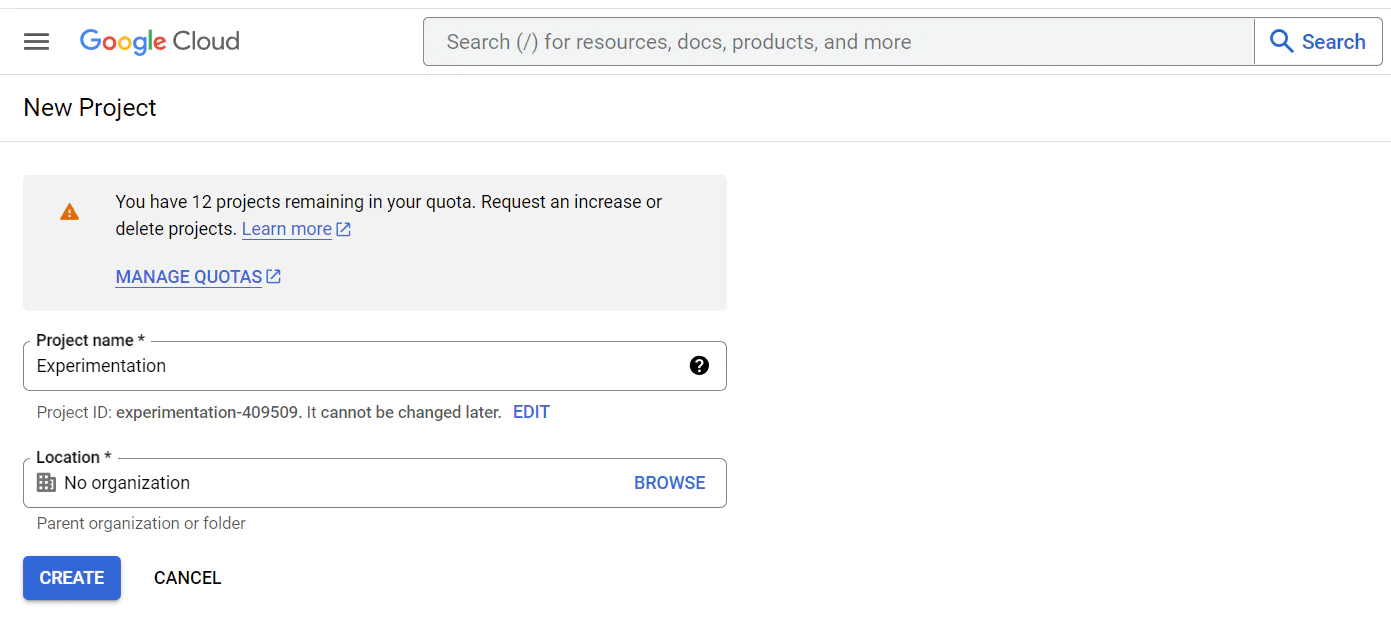

Create Project

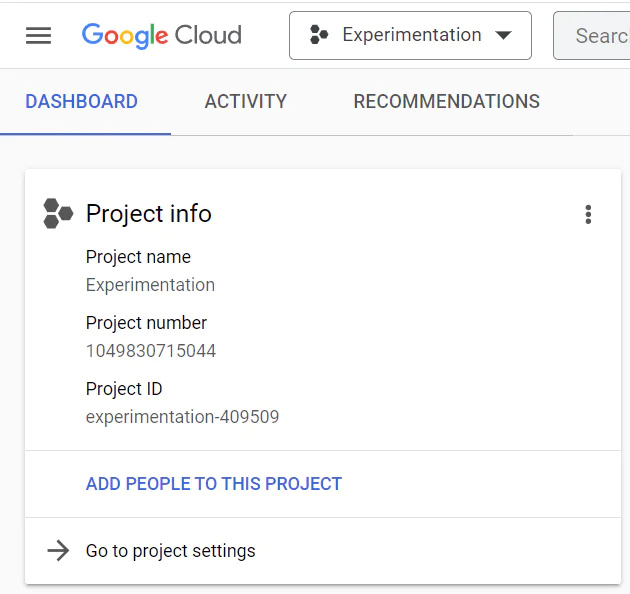

A project is a container for BigQuery datasets.

1. If you don’t already have a Project in your Google Cloud account, create one by going here. Give it a name of your choice and click Create.

2. From the Projects Dashboard, note down the Project ID for later use. Note that the Project ID (experimentation-409509, in my screenshot below) is different from the Project name and Project number.

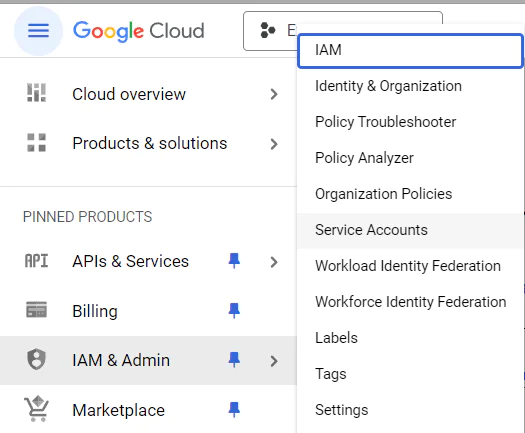

Create Service Accounts

You have to create two Service Accounts, which will grant Segment and Eppo the ability to read from or write to your BigQuery datasets.

1. In the left navigation panel, go to IAM & Admin > Service Accounts.

2. Click on Create Service Account.

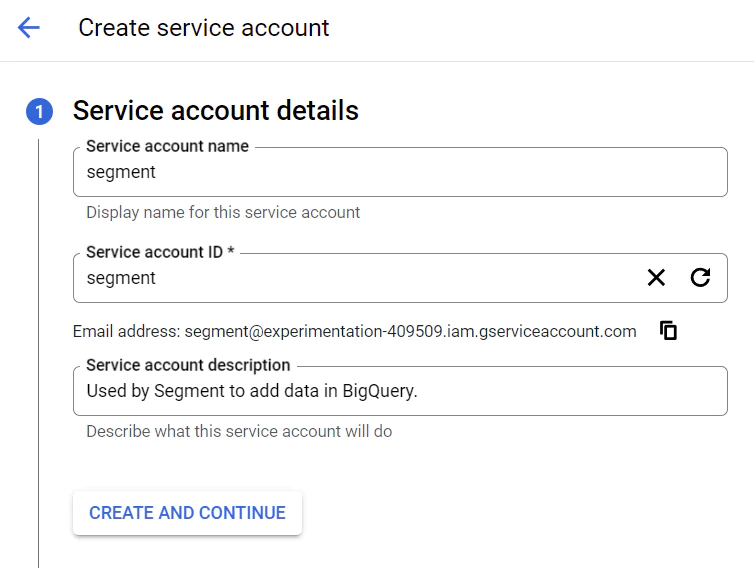

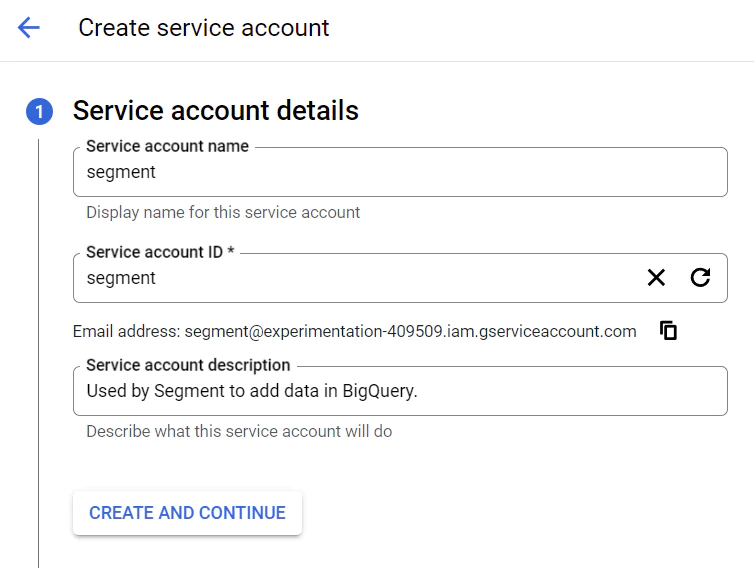

3. Enter segment as the Service Account Name and optionally enter a description. The Service Account ID is auto-generated so you don’t need to edit it. Then click Create and Continue.

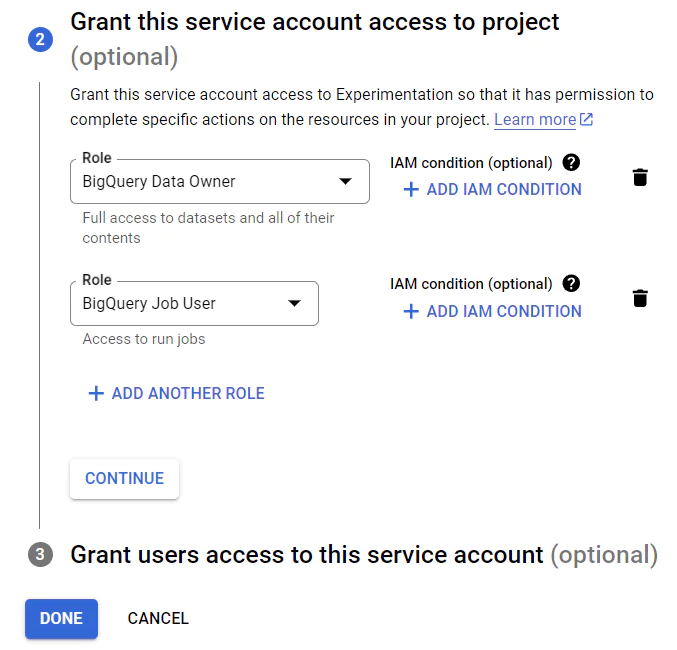

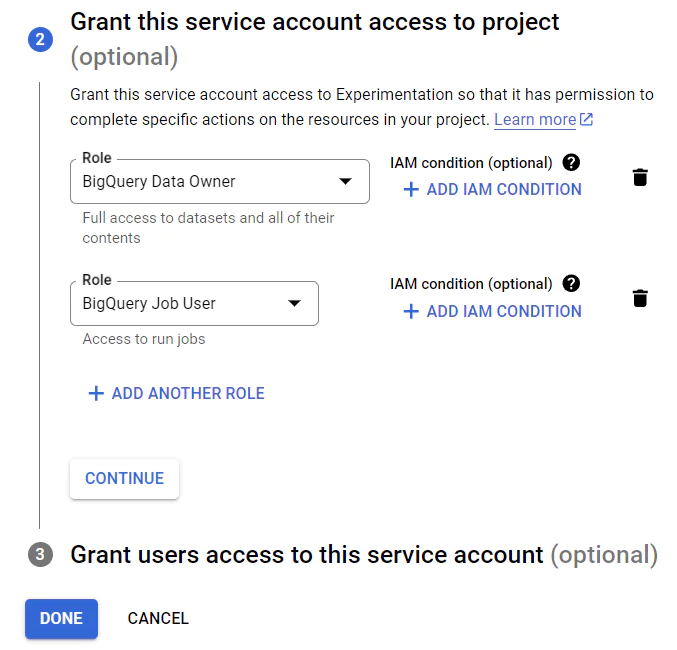

4. Add two roles - BigQuery Data Owner and BigQuery Job User (if you are creating this service account for Eppo, then add the BigQuery Data Viewer role too). Then click on Done.

Your Service Account is now created!

5. Next, click on the Service Account, navigate to Keys > Add Key > Create New Key > Create. Download, and save the JSON file for later use.

6. Now, repeat steps 1 through 5 to create another Service Account for Eppo with an exception in step 4, where you must remember to add one additional role - BigQuery Data Viewer.

Your Service Accounts are now created!
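Since you will paste these JSON key files into Segment and Eppo later, it can help to sanity-check them first. Here is a minimal sketch, assuming the usual fields found in a Google service account key file (type, project_id, client_email, private_key). The helper name checkServiceAccountKey and the sample values are made up for illustration.

```javascript
// Sanity-check a downloaded service account key before pasting it elsewhere.
// Returns a list of problems; an empty list means nothing obvious is wrong.
function checkServiceAccountKey(jsonText, expectedProjectId) {
  let key;
  try {
    key = JSON.parse(jsonText);
  } catch (err) {
    return ['file is not valid JSON'];
  }
  if (key === null || typeof key !== 'object') {
    return ['file is not a JSON object'];
  }

  const problems = [];
  for (const field of ['type', 'project_id', 'client_email', 'private_key']) {
    if (!key[field]) problems.push(`missing field: ${field}`);
  }
  if (key.type && key.type !== 'service_account') {
    problems.push(`unexpected type: ${key.type}`);
  }
  // The Project ID you noted earlier should match the one inside the key.
  if (key.project_id && key.project_id !== expectedProjectId) {
    problems.push(`project_id is ${key.project_id}, expected ${expectedProjectId}`);
  }
  return problems;
}

// Example with a made-up key (not a real credential).
const sampleKey = JSON.stringify({
  type: 'service_account',
  project_id: 'my-project-id',
  client_email: 'segment@my-project-id.iam.gserviceaccount.com',
  private_key: '-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n',
});
console.log(checkServiceAccountKey(sampleKey, 'my-project-id')); // prints []
```

If the list is not empty, re-download the key or double-check that you are using the Project ID rather than the Project name or number.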

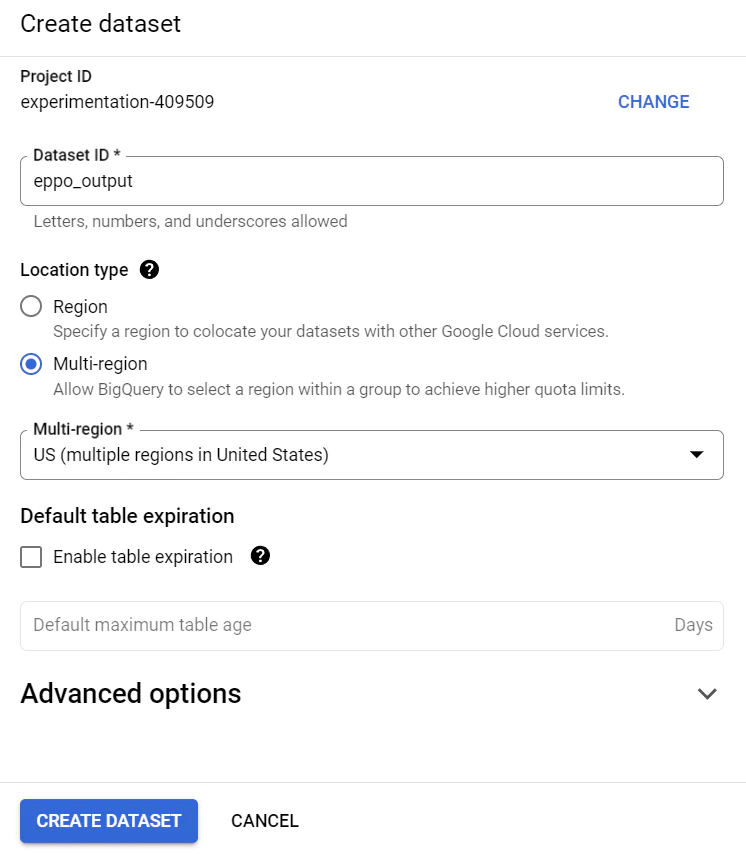

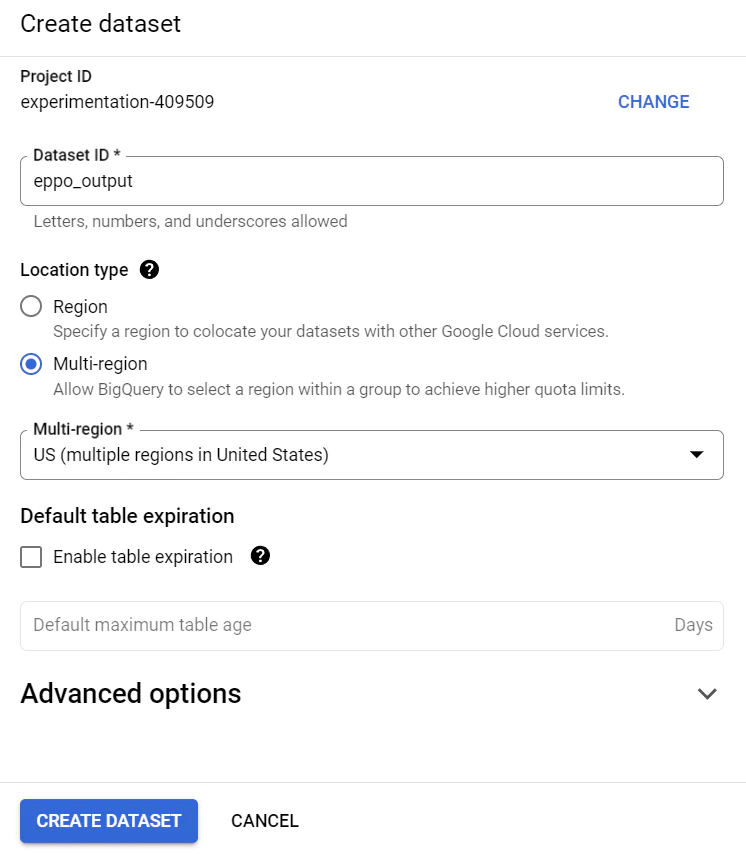

Create a Dataset for Eppo

Eppo requires a Dataset in which it will create intermediary tables to analyze and compute the results of experiments. So, go ahead and create one now.

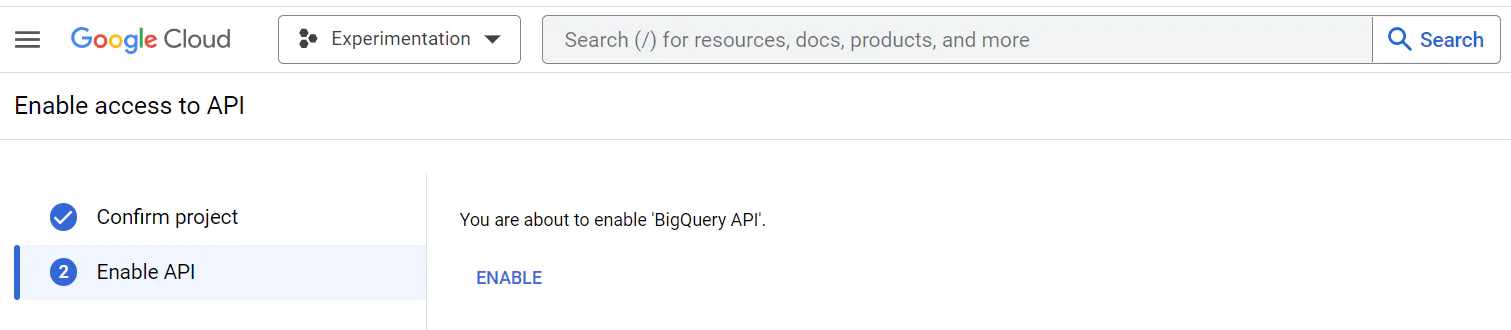

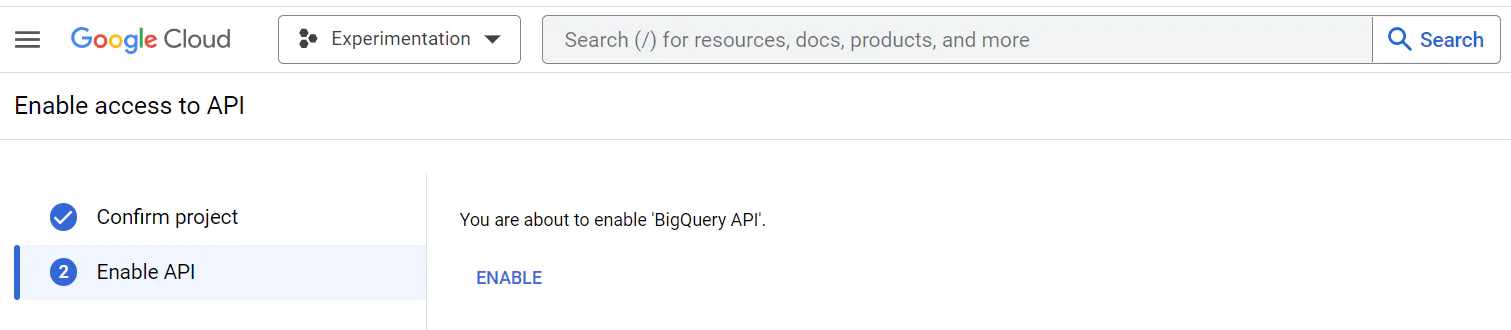

1. First go here to enable the BigQuery API.

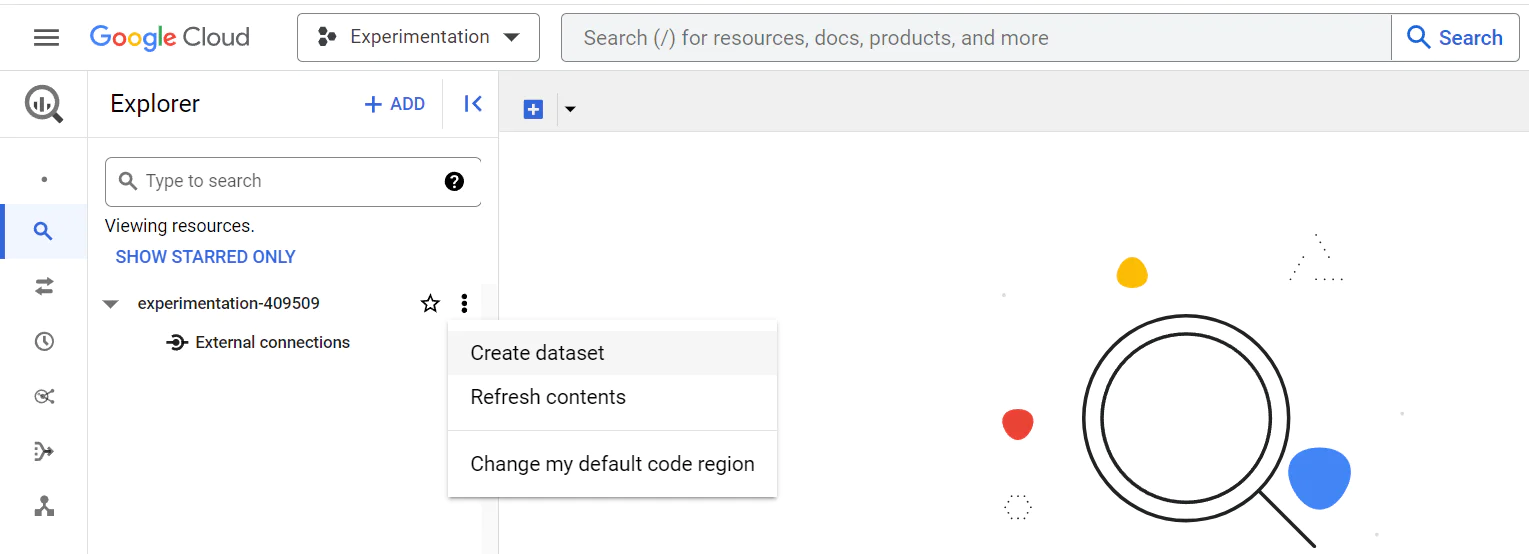

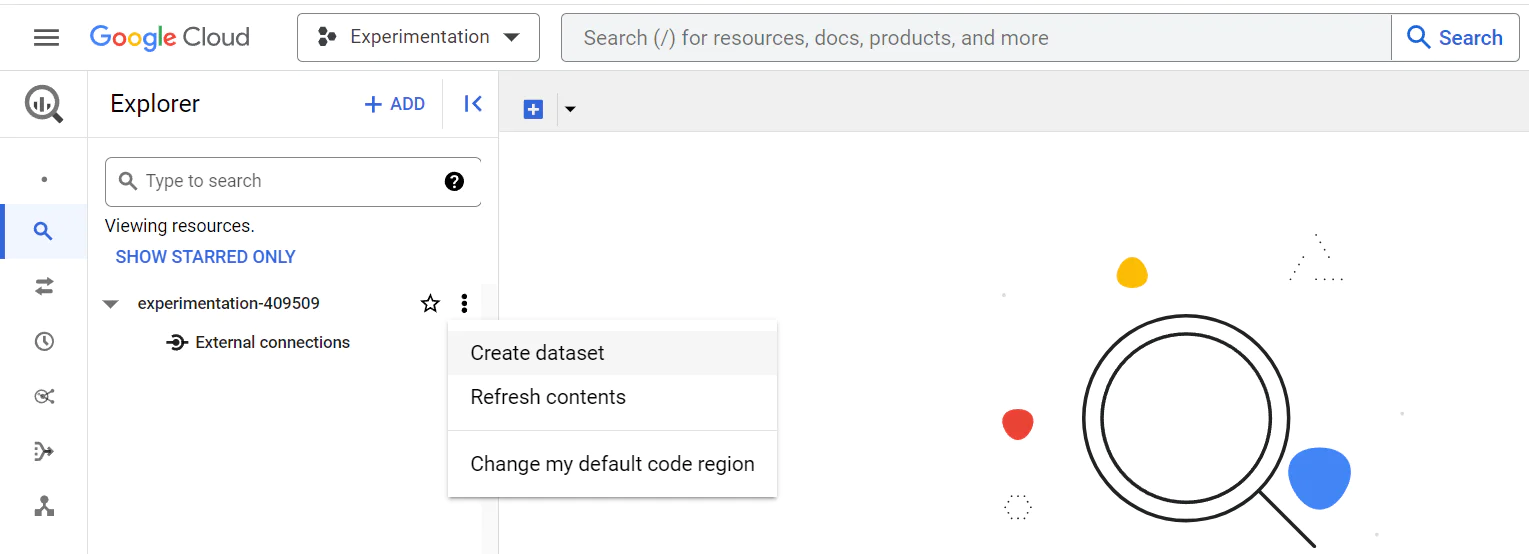

2. From the main navigation panel on the left, navigate to BigQuery. Find your project and click on the actions link (three dots on the right) and then on Create dataset.

3. Name the dataset eppo_output and click Create Dataset.

Your BigQuery configuration is now completed!

Set up Segment

There are two key components in Segment - Source and Destination. A Source allows Segment to collect data from external applications, which in our case is our Studio Flow. A Destination is an application to which Segment forwards your data – which in our case is BigQuery. Here’s how you would set them up.

Add Sources

In this project, you will create two Sources - one for collecting the assignment a user was given (old vs new message) and another for collecting the outcome (did the user reply or not).

Follow these steps to create your first Source.

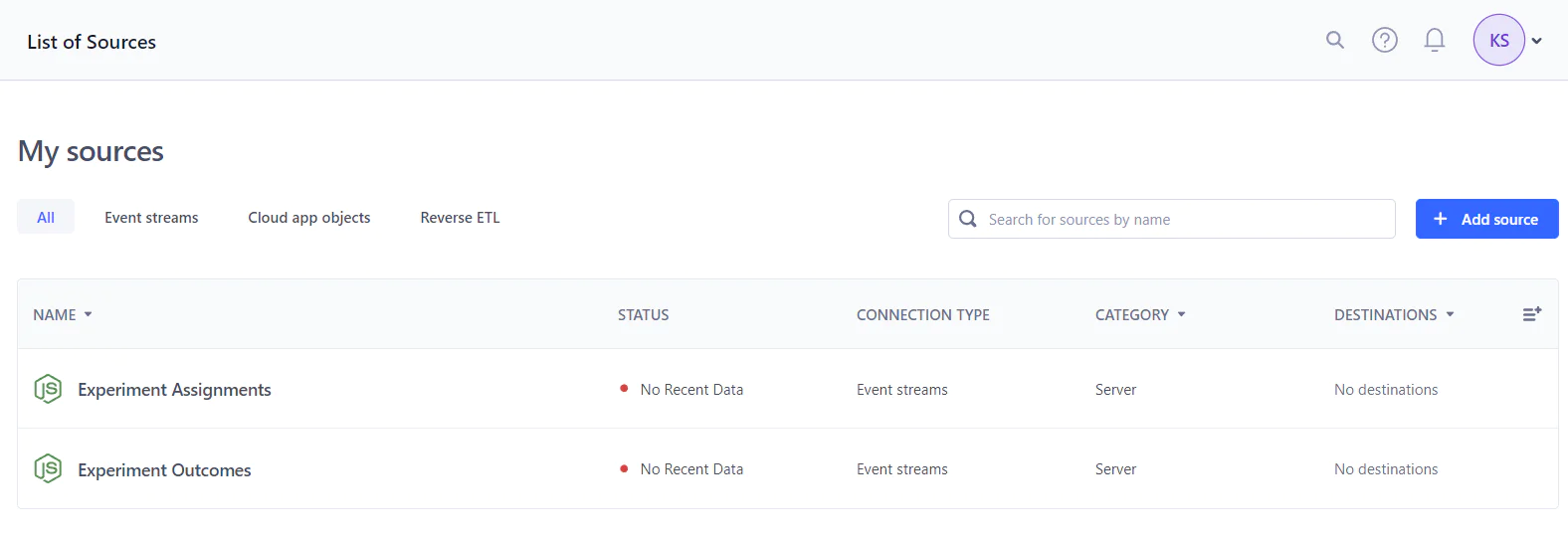

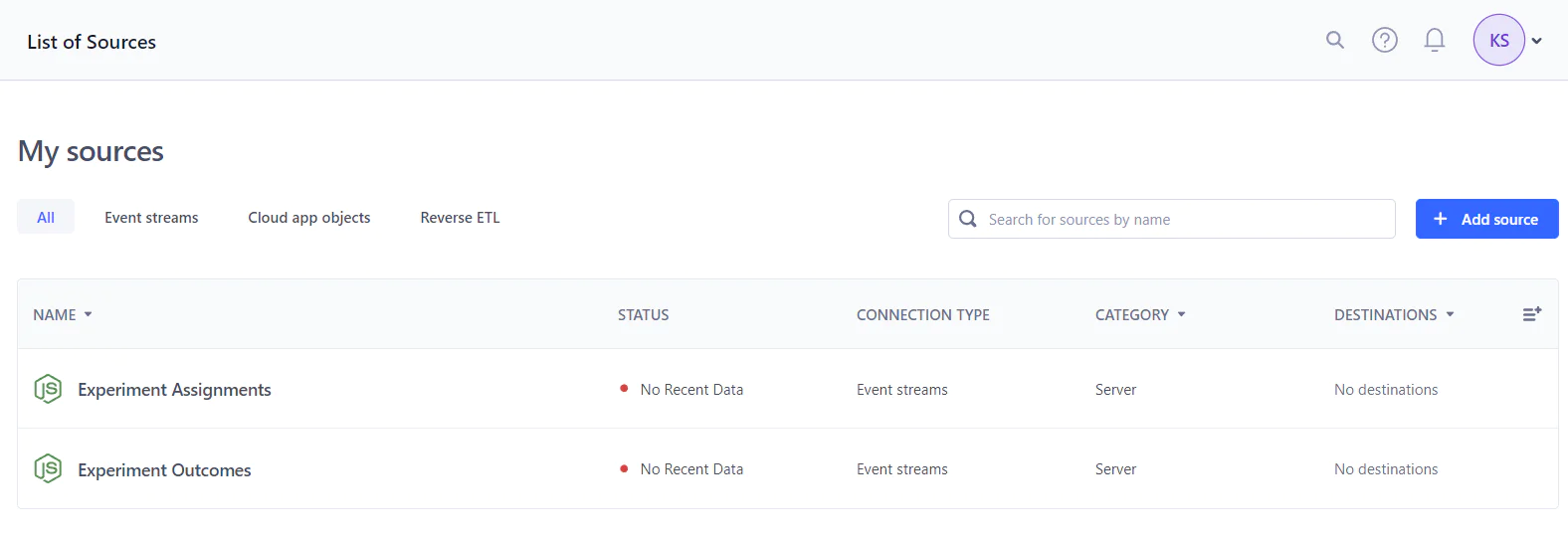

1. Log in to your Segment Workspace and click on Connections > Sources on the left panel.

2. From your List of Sources, click the Add Source button.

3. From the Catalog of Sources, search for and select the Node.js source.

4. Click on Add Source.

5. Name the source Experiment Assignments. Leave all other fields blank, and click on the Add Source button at the bottom of the page.

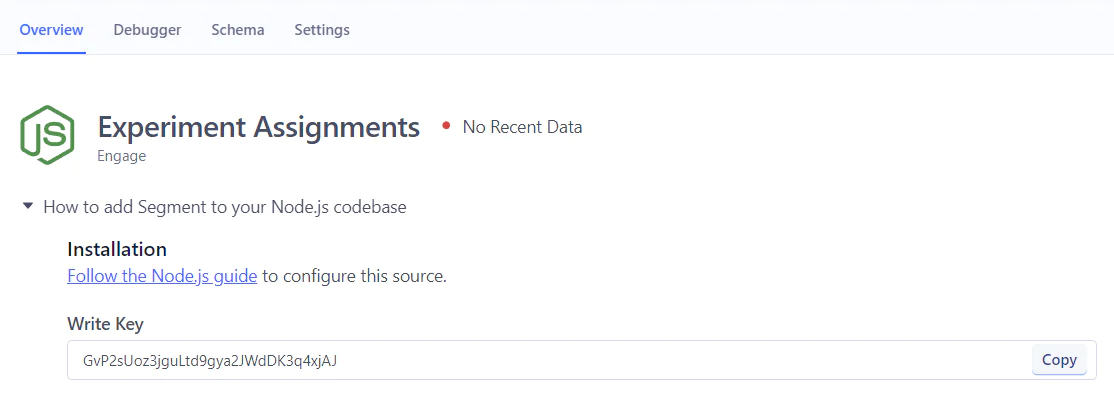

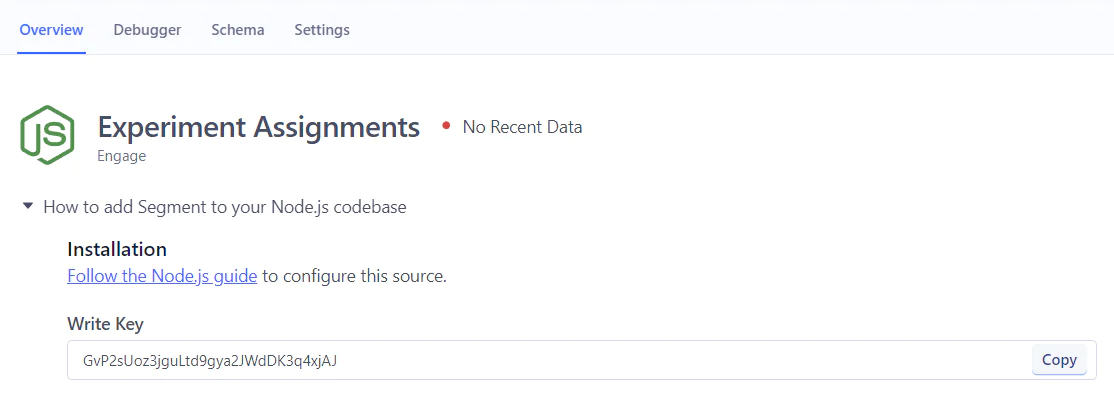

6. Your Node.js source is now ready! Be sure to save the Write Key for later use.

7. Next, repeat steps 1 through 6 to create another Node.js Source named Experiment Outcomes. Remember to note down its Write Key as well.

Once the above steps are completed, your List of Sources should appear as follows:

Add a Destination

The instructions are for sending data to BigQuery, but you can set up a different data warehouse as a Destination using the applicable instructions here.

1. In your Segment Workspace, click on Connections > Destinations on the left panel.

2. From your List of Destinations, click the Add Destination button.

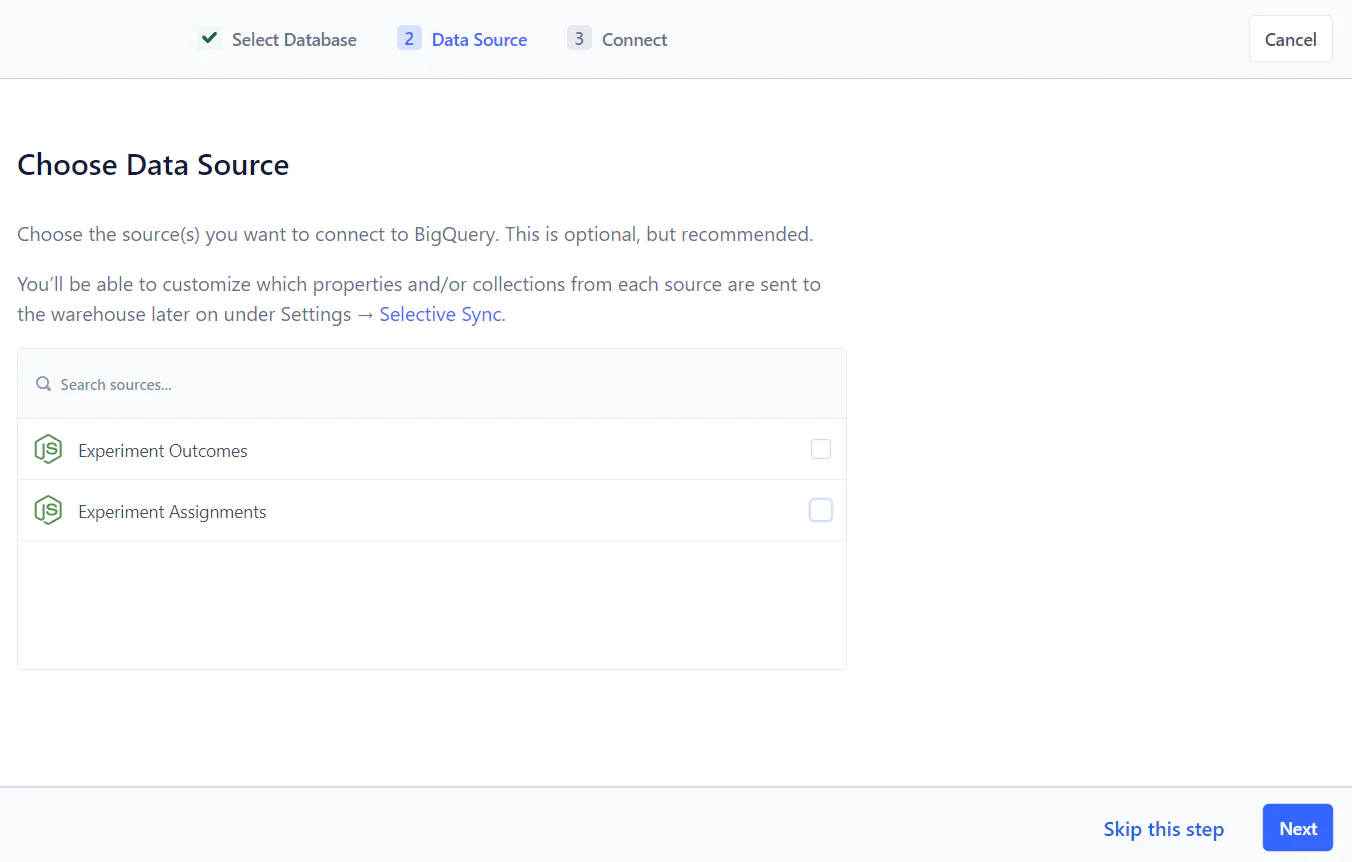

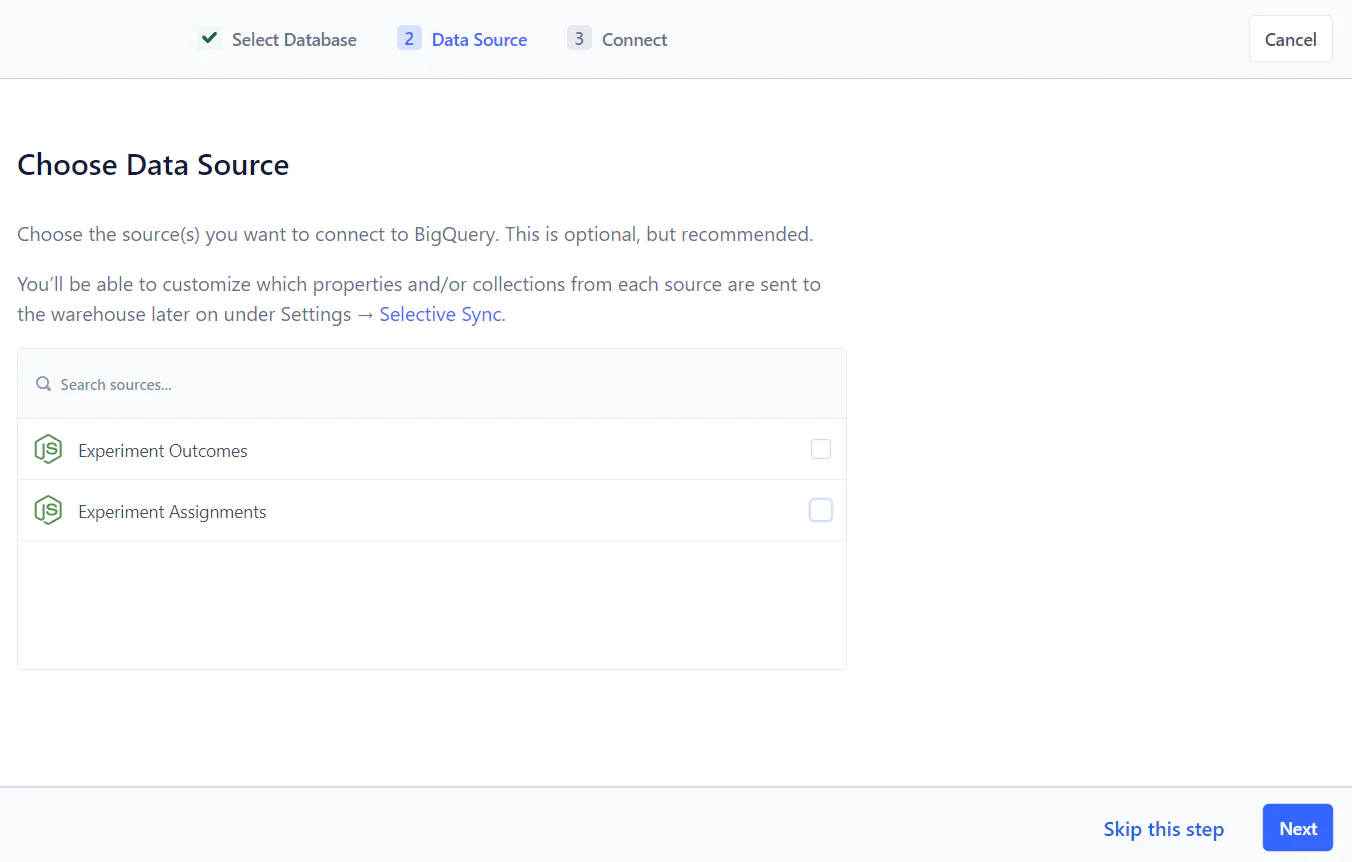

3. From the Catalog of Destinations, search for and select the BigQuery Destination.

4. Select the checkboxes next to the Experiment Assignments and Experiment Outcomes Sources, and then click Next.

5. Give the Destination any name of your choice.

From the BigQuery setup earlier, grab the Project ID and contents of the JSON file for the Segment Service Account, and plug them into the credentials section.

The Location defaults to US unless you configured a different region during the BigQuery setup.

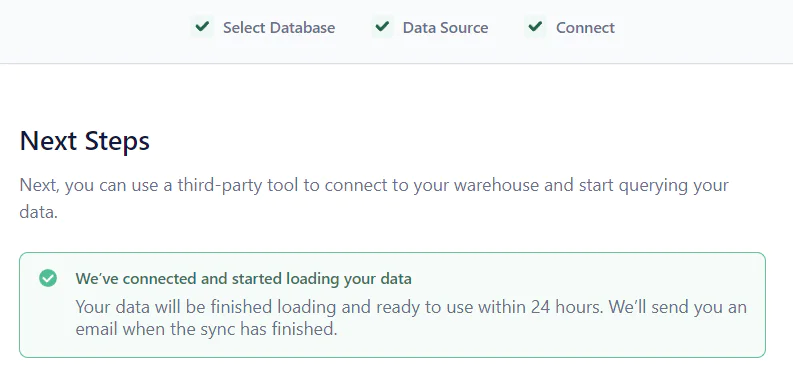

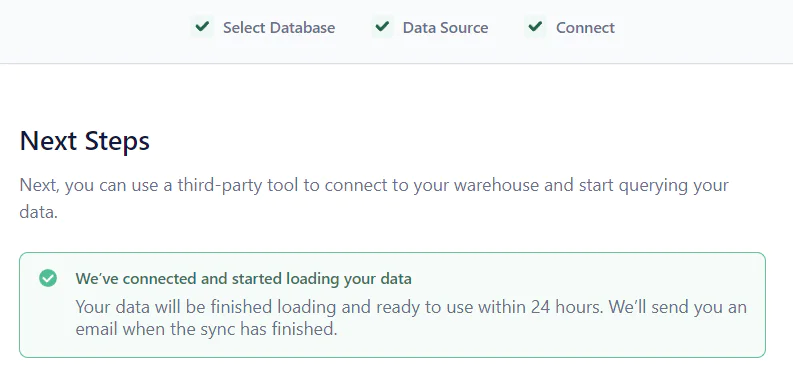

If the connection succeeds, you will see the following screen:

Your Segment configuration is now completed!

Create a Feature Flag in Eppo

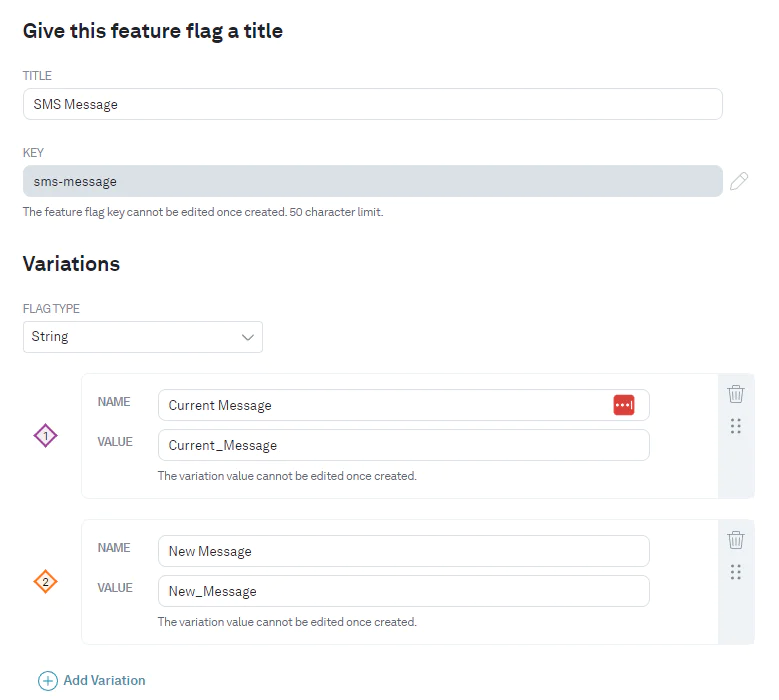

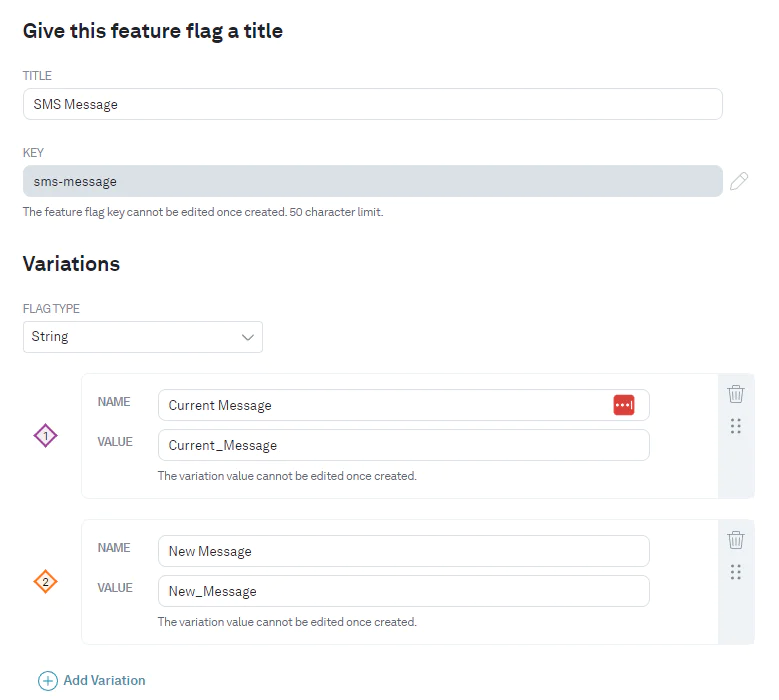

For the experimentation to work correctly, users must be randomly assigned to the two SMS messages (current and new). A Feature Flag in Eppo will determine which message a user should see and you will later learn to use it. For now, go ahead and set it up.
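To build some intuition for what "randomly assigned" means here, the sketch below hashes a flag key and user ID into a bucket, so that the same user always lands on the same message while users overall split roughly evenly. This is only an illustration of the general idea and is not Eppo's actual algorithm. The function names bucketFor and assignVariation are made up.

```javascript
const crypto = require('crypto');

// Map a (flag, user) pair to a stable bucket in [0, totalBuckets).
function bucketFor(flagKey, userId, totalBuckets) {
  const digest = crypto.createHash('sha256').update(`${flagKey}:${userId}`).digest();
  // Use the first 4 bytes of the digest as an unsigned integer.
  return digest.readUInt32BE(0) % totalBuckets;
}

// Pick a variation; the same inputs always give the same answer.
function assignVariation(flagKey, userId, variations) {
  return variations[bucketFor(flagKey, userId, variations.length)];
}

// Simulate 10,000 users to see how the split comes out.
const variations = ['Current_Message', 'New_Message'];
const counts = { Current_Message: 0, New_Message: 0 };
for (let i = 0; i < 10000; i++) {
  counts[assignVariation('sms-messages', `user-${i}`, variations)] += 1;
}
console.log(counts); // close to an even split; exact counts depend on the hash
```

Because the assignment depends only on the flag key and the user ID, a user who is messaged twice sees the same variation both times, which keeps the experiment groups clean.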

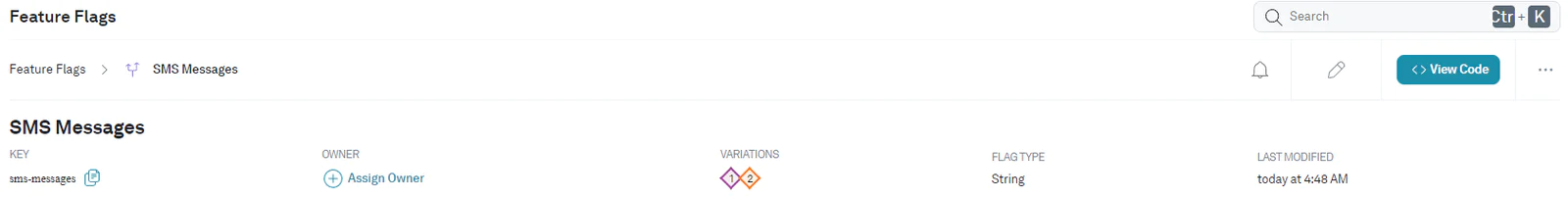

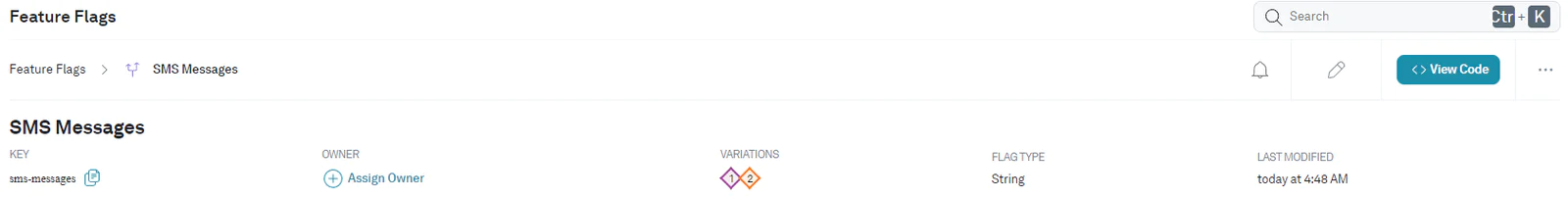

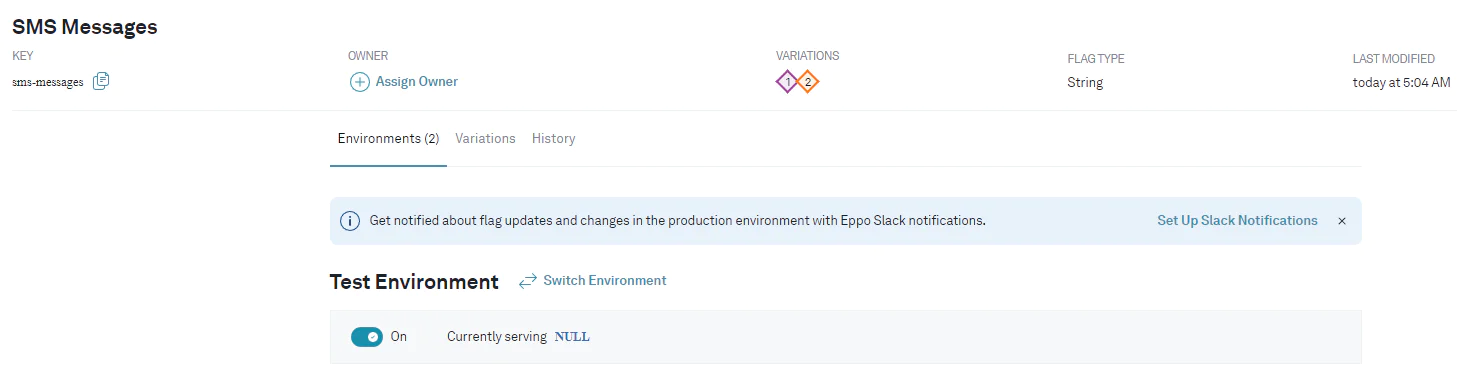

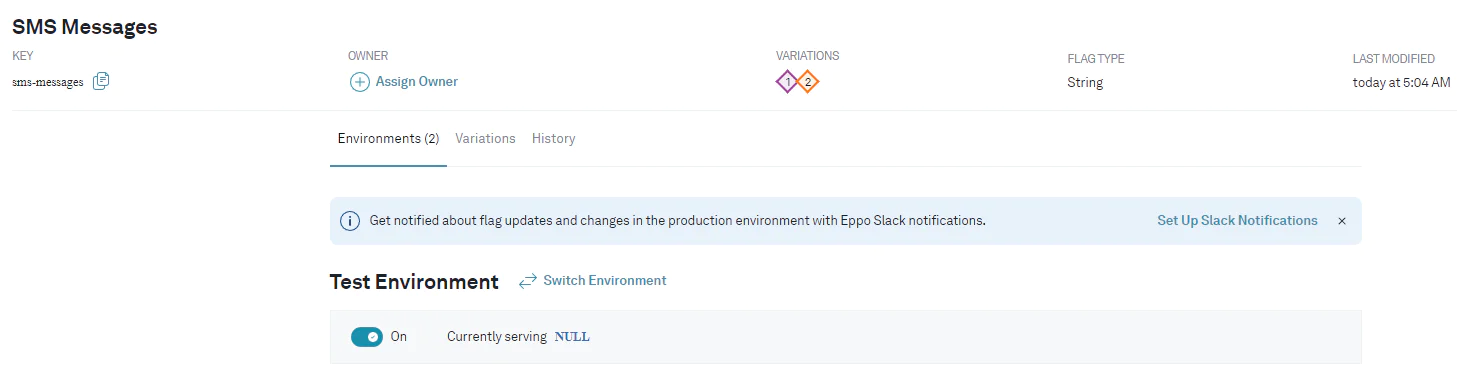

1. Log in to Eppo and navigate to Feature Flags > Create > Feature Flag.

2. Name the Feature Flag SMS Messages and add two variations - Current_Message and New_Message and then click Create New Flag.

3. Under the feature flag title (SMS Messages), you will find the Feature Flag Key (sms-messages). Make note of it for later use.

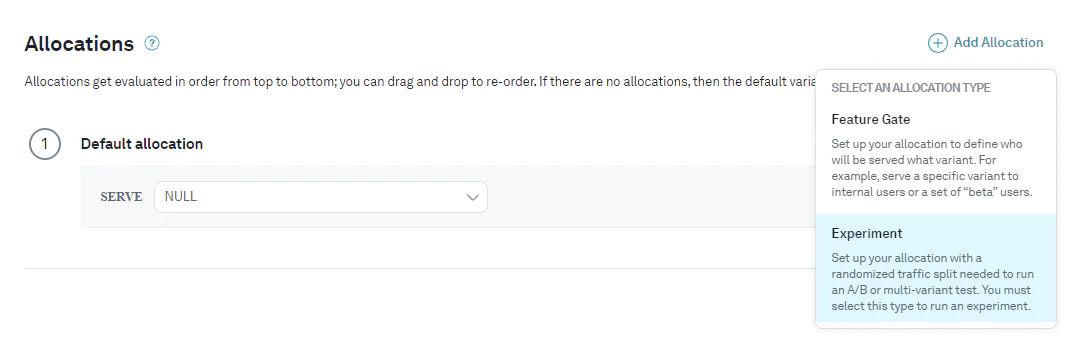

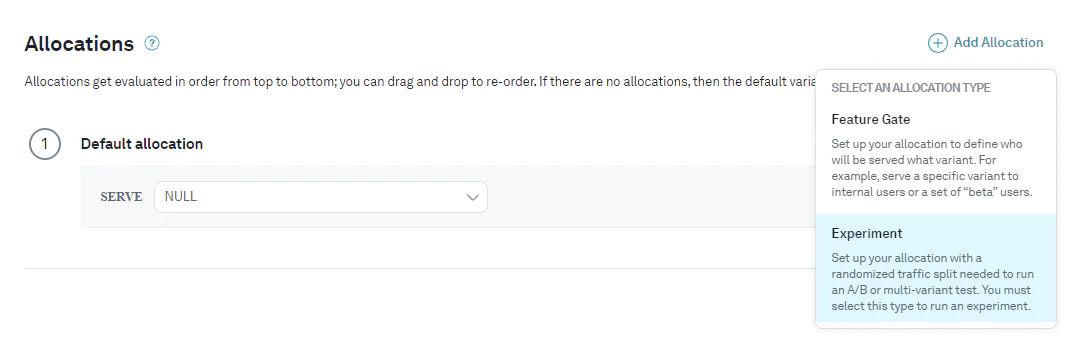

4. Next, click on Create Allocations > Experiment to create an allocation.

5. Name the allocation Even Split. Leave all settings default and click Save. These settings will ensure that current and new messages are each assigned on average to 50% of users.

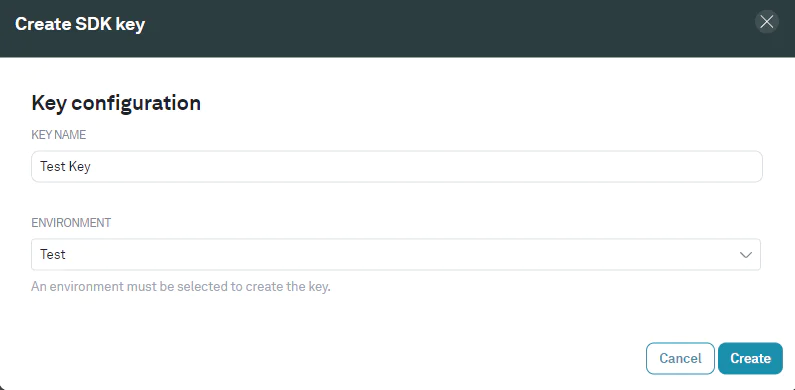

6. To invoke a Feature Flag, you will later use the Eppo Node SDK. For that you need an SDK key. So navigate to Feature Flags > Create > SDK Key which will present the following modal.

Enter a Key Name of your choice, select the Test environment and click Create.

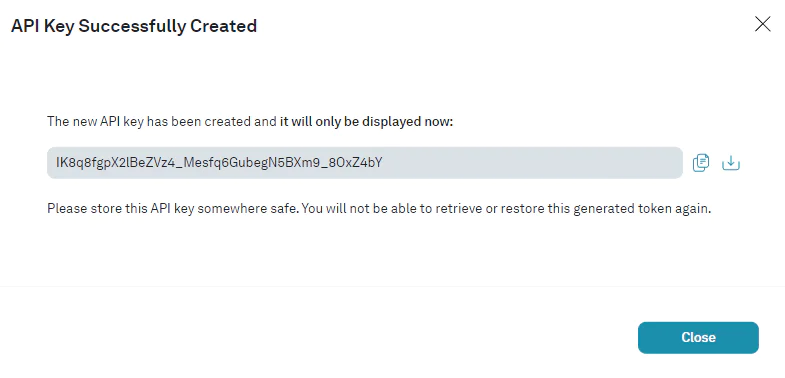

Be sure to save the Key that comes up next on the screen as it will not be available afterwards.

7. Finally, switch On the SMS Messages Feature Flag in the Test environment by clicking the switch button. This is what it looks like after switching On the Flag:

Deploy Functions

Next, you will download and deploy two Twilio Functions from this repository. But let’s first understand the Functions with some key code snippets.

Code Overview

The get-assignment function serves two purposes:

1. It uses the Eppo Node SDK to fetch the feature flag assignment from Eppo.

const assignment = eppoClient.getStringAssignment(event.userID, event.experimentKey);

2. It uses the Segment Analytics Node SDK to log an event as to which SMS message (current or new) was sent to a user.
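Putting the two steps together, the handler could look roughly like the sketch below. It is not the code from the repository. The Eppo and Segment clients are passed in as arguments so the logic can be run with stubs, and the event name and property names are assumptions chosen for illustration.

```javascript
// Builds a Twilio-Function-style handler from two injected clients.
// eppoClient is expected to expose getStringAssignment (as in the snippet above);
// analytics is expected to expose track(), like the Segment Analytics Node SDK.
function makeGetAssignmentHandler(eppoClient, analytics) {
  return function handler(context, event, callback) {
    let variation;
    try {
      // 1. Ask Eppo which variation this user should receive.
      variation = eppoClient.getStringAssignment(event.userID, event.experimentKey);

      // 2. Record the assignment in Segment so it reaches the warehouse.
      analytics.track({
        userId: event.userID,
        event: 'Experiment Assignment', // assumed event name
        properties: { experiment: event.experimentKey, variation }, // assumed property names
      });
    } catch (err) {
      return callback(err);
    }
    // 3. Hand the assignment back to the Studio Flow.
    return callback(null, { variation });
  };
}

// Stub clients to exercise the handler without network access.
const trackedAssignments = [];
const stubEppo = { getStringAssignment: () => 'New_Message' };
const stubAnalytics = { track: (payload) => trackedAssignments.push(payload) };

const getAssignment = makeGetAssignmentHandler(stubEppo, stubAnalytics);
getAssignment({}, { userID: 'user-1', experimentKey: 'sms-messages' }, (err, result) => {
  console.log(err, result); // prints: null { variation: 'New_Message' }
});
```

In a deployed Function you would likely also need to flush the Segment client before invoking the callback, since queued events may otherwise be lost when the Function finishes.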

The log-outcome function has one goal only - it uses the Segment Analytics Node SDK to log an event about whether or not the user replied to the SMS.
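A comparable sketch for log-outcome is shown below. Again, this is an illustration rather than the repository's code. The replied parameter, the event name, and the property names are all assumptions, and the Segment client is injected so the example runs with a stub.

```javascript
// Builds the Segment track() payload for one outcome.
function buildOutcomeEvent(userID, experimentKey, replied) {
  return {
    userId: userID,
    event: 'Experiment Outcome', // assumed event name
    properties: { experiment: experimentKey, replied: Boolean(replied) }, // assumed property names
  };
}

// Builds a Twilio-Function-style handler around an injected Segment-like client.
function makeLogOutcomeHandler(analytics) {
  return function handler(context, event, callback) {
    try {
      // Parameters sent from Studio may arrive as strings, so accept 'true' as well.
      const replied = event.replied === true || event.replied === 'true';
      analytics.track(buildOutcomeEvent(event.userID, event.experimentKey, replied));
    } catch (err) {
      return callback(err);
    }
    return callback(null, { logged: true });
  };
}

// Exercise the handler with a stub: this user timed out without replying.
const loggedOutcomes = [];
const logOutcome = makeLogOutcomeHandler({ track: (payload) => loggedOutcomes.push(payload) });
logOutcome({}, { userID: 'user-1', experimentKey: 'sms-messages', replied: 'false' }, () => {});
console.log(loggedOutcomes[0].properties.replied); // prints false
```

Logging the "not replied" case explicitly, rather than logging nothing, makes it easier to tell a timed-out user apart from one whose outcome simply has not been recorded yet.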

Deploy to Twilio

Follow the steps below to deploy the Functions to your Twilio account:

- Open a terminal on your computer and run git clone https://github.com/datakabeta/twilio-eppo.git to clone the repository.

- You should now see a local folder named twilio-eppo. Navigate to this directory in the terminal and run npm install to install the required dependencies.

- Next, rename the .env.example file to .env and enter the values for the Eppo and Segment SDK Keys you had saved previously.

Warning: The Segment Write Keys are retrievable anytime from the Sources configuration, but if you missed saving the Eppo SDK Key previously, you will have to create a new one.

- Finally, run twilio serverless:deploy to deploy the Functions to your Twilio account.
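A missing or blank key in .env is an easy mistake to make before deploying. The sketch below shows one way to check for it. The variable names are hypothetical, so substitute the names that actually appear in the repository's .env.example file.

```javascript
// Hypothetical variable names; use the ones listed in .env.example.
const REQUIRED_KEYS = [
  'EPPO_SDK_KEY',
  'SEGMENT_ASSIGNMENTS_WRITE_KEY',
  'SEGMENT_OUTCOMES_WRITE_KEY',
];

// Returns the names of keys that are absent or contain only whitespace.
function findMissingKeys(env, requiredKeys) {
  return requiredKeys.filter((name) => !env[name] || env[name].trim() === '');
}

// Example: one key set, one blank, one absent.
const exampleEnv = { EPPO_SDK_KEY: 'abc123', SEGMENT_ASSIGNMENTS_WRITE_KEY: '  ' };
console.log(findMissingKeys(exampleEnv, REQUIRED_KEYS)); // lists the two Segment keys
```

Inside a deployed Twilio Function, these values are read from the context argument of the handler rather than from a plain object.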

Once the deployment is complete, you will find the eppo service listed in your Twilio console. This service includes both Functions.

Your Functions are now fully deployed!

Publish Studio Flow

The steps to send SMS appointment reminders using Studio Flow are described in this tutorial. Feel free to check it out first, especially if you’re not familiar with Studio Flow.

For this project, you will use a modified version of the same Flow that supports A/B testing. You will find the Flow definition in a file named studio-flow.json which is part of the code repository you cloned earlier. Let’s import the Flow and deploy it into your Twilio account.

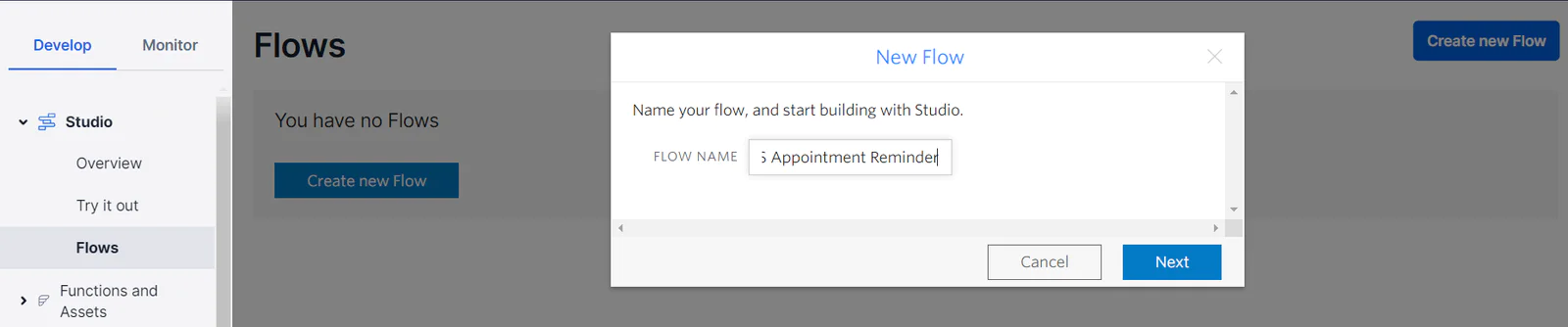

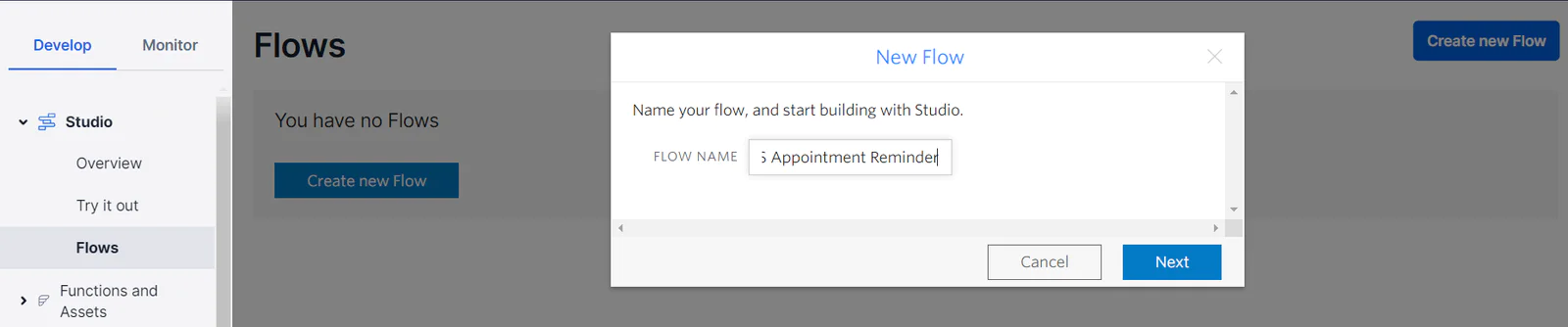

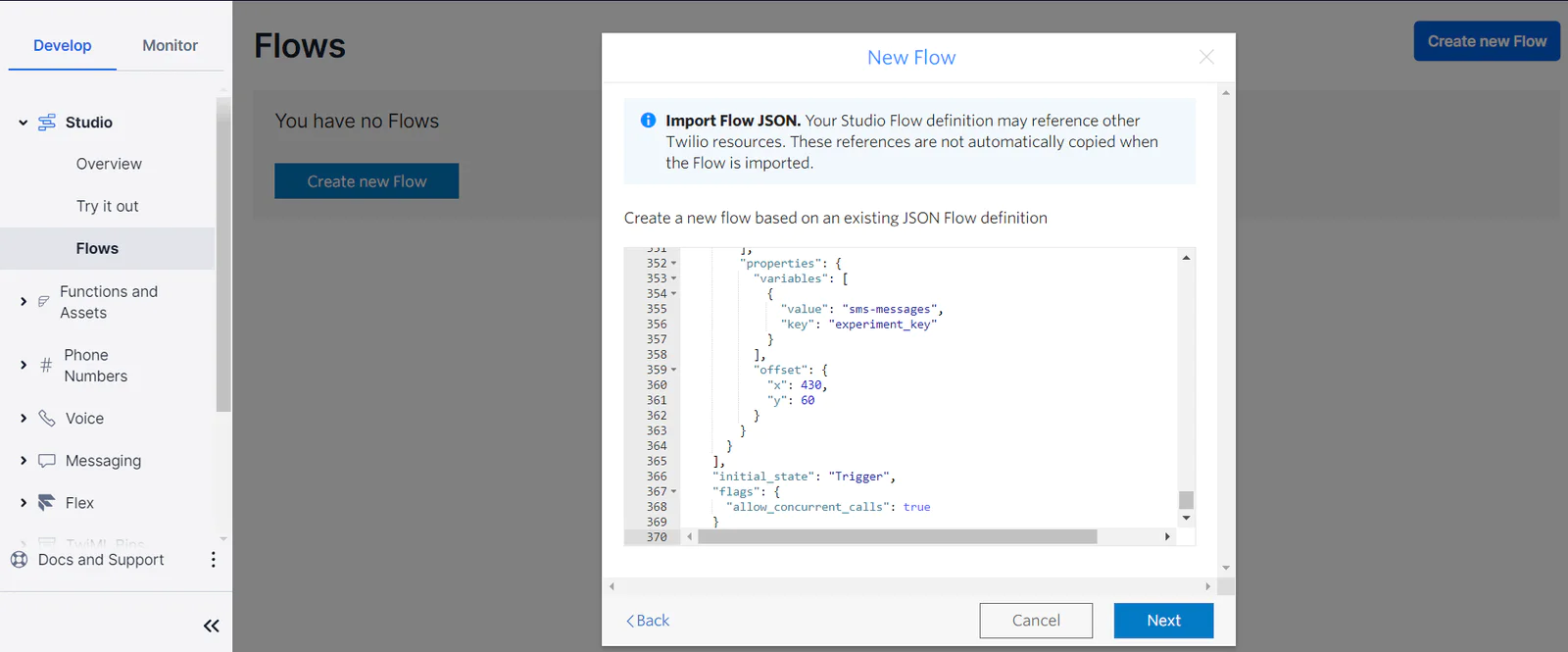

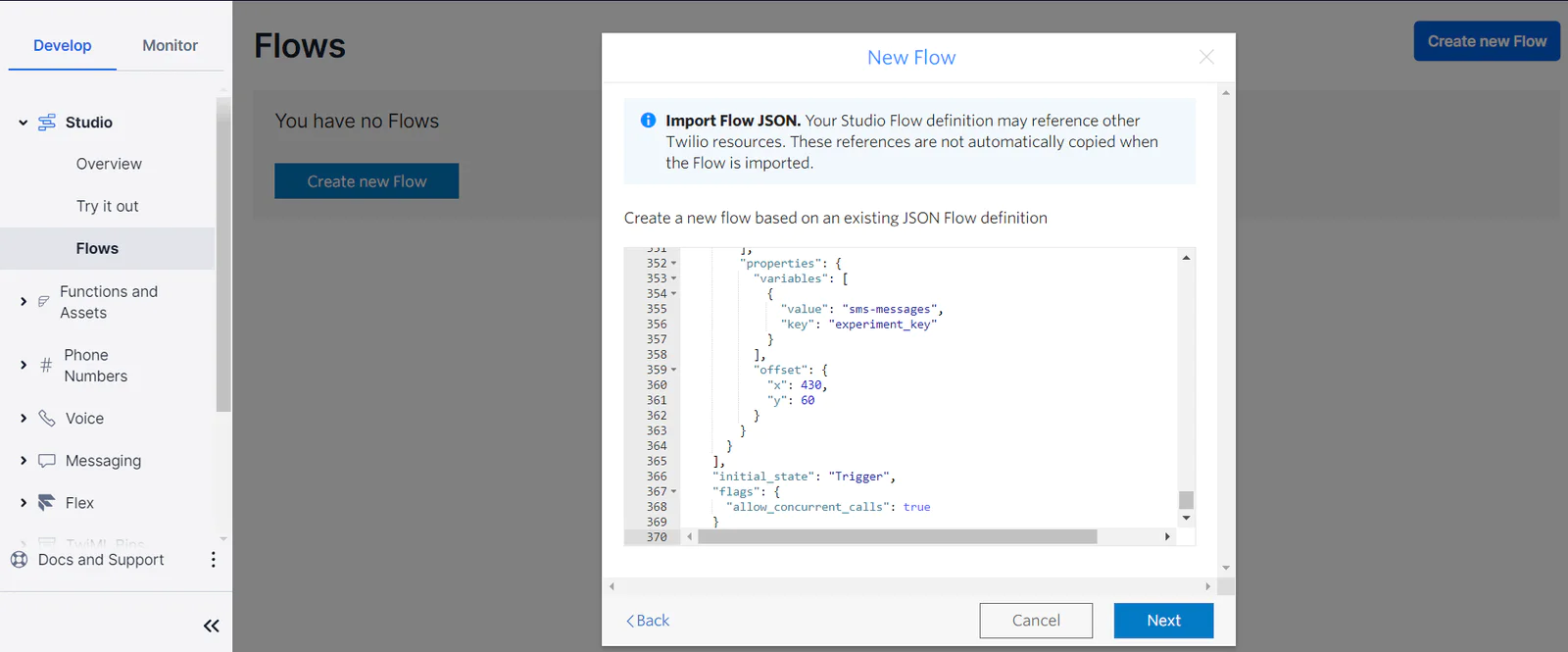

1. Navigate to Studio > Flows > Create new Flow and give it a name of your choice.

2. Then click on Import from JSON and click Next.

3. Copy-paste the contents of studio-flow.json and click Next.

The Studio Flow import is completed.

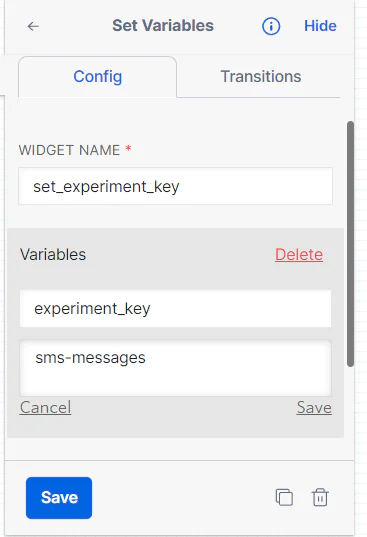

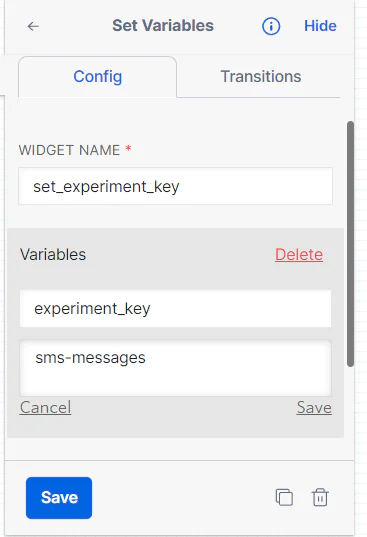

4. The set_experiment_key widget sets the Eppo Feature Flag key for reuse in other widgets, and its value is already set to sms-messages. If your Feature Flag key is different from mine, be sure to click on the set_experiment_key widget and change the value of experiment_key.

5. Finally, click on the red Publish button at the top to deploy the Flow. Once deployment is completed, the Publish button will be unclickable.

Your Studio Flow is now ready!

Create an Experiment in Eppo

In this 2nd and final part of setting up Eppo, you will create an Experiment. There are several steps leading up to the creation of an Experiment.

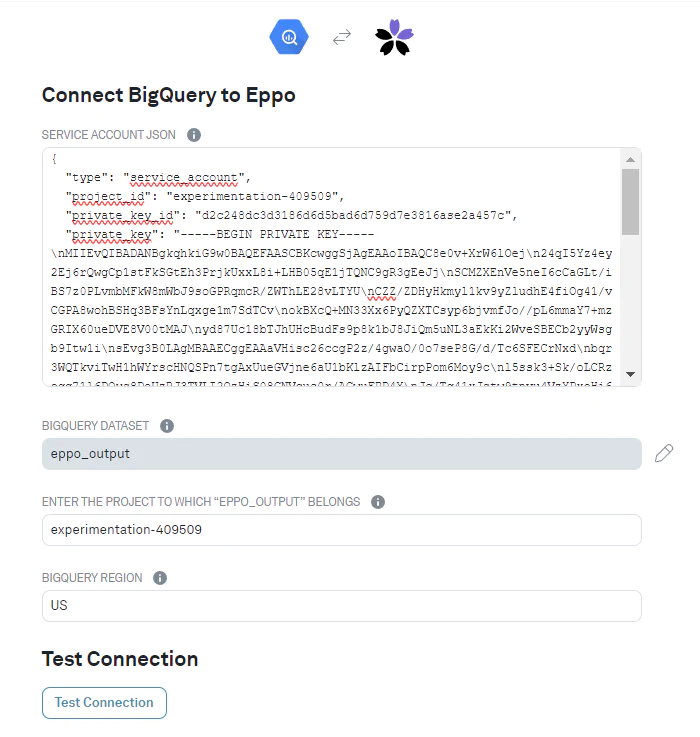

Connect BigQuery to Eppo

An Experiment creates intermediary tables in BigQuery to compute the results of an experiment. So, you have to first set up the Eppo-BigQuery connection.

Log in to Eppo and navigate to Admin > Settings > Data Warehouse Connection. Now copy-paste the BigQuery Service Account JSON created earlier for Eppo. Also, set the BigQuery Dataset to eppo_output, the Project Name to the Project ID you saved earlier, and the BigQuery Region to US (or a different region if you configured it as such).

Then click Test Connection.

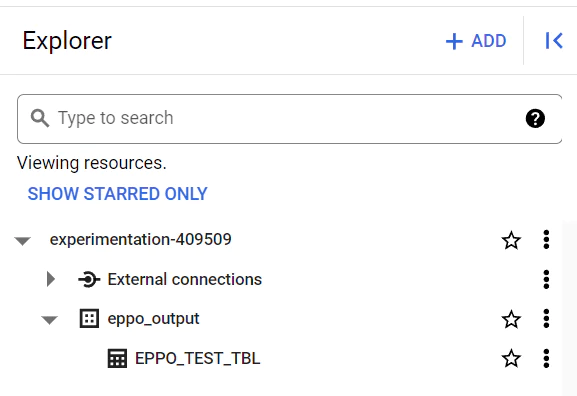

You will see a confirmation message in Eppo once the connection is successful. You can also verify that the test was successful by opening the eppo_output dataset in the BigQuery console and checking that a table named EPPO_TEST_TBL has been created.

Test Run

Before you set up an Experiment, you need to do a test run and send a few SMS appointment reminders to test users.

This might feel like the wrong order, but Eppo needs to learn how to use the assignment and experiment outcomes data created by Segment in your data warehouse before Eppo can analyze it.

In short: this process of annotating data requires that you have some sample data in BigQuery in the first place!

So, go ahead and create some sample data by firing off SMS messages from the Studio Flow to test phone numbers, then replying to some of those messages. This will log some events in Segment.
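If you prefer to trigger the test runs from a script instead of by hand, one option is to start Flow executions through the Twilio Node helper library. The sketch below only builds the request payloads. The userID Flow parameter and the phone numbers are placeholders, so adjust them to whatever your Flow actually expects.

```javascript
// Builds one Studio Execution request per test number.
function buildExecutionRequests(fromNumber, testNumbers) {
  return testNumbers.map((to, index) => ({
    to,
    from: fromNumber,
    // Hypothetical Flow parameter; use the names your Flow reads.
    parameters: { userID: `test-user-${index + 1}` },
  }));
}

// Placeholder numbers; replace with your Twilio number and real test phones.
const requests = buildExecutionRequests('+15550000000', ['+15550000001', '+15550000002']);
console.log(requests.length); // prints 2

// With the Twilio Node helper library, each request could then be sent with
//   client.studio.v2.flows(flowSid).executions.create(request)
// where client is an authenticated Twilio client and flowSid is your Flow's SID.
```

Giving each test phone its own user ID keeps the sample assignments and outcomes distinct when they show up in BigQuery.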

The Segment/BigQuery sync may take some time. Once completed, go to your BigQuery console where you will find two new tables created by Segment with data pertaining to your test runs:

The table names should be experiment_assignments and experiment_outcomes. In case your tables are named differently, make a note of them for use in the Assignment and Fact SQL configurations.

Prepare for an Experiment

An Experiment definition requires 4 components - Entities, Assignment SQL, Fact SQL, and Metrics. Let’s understand what they’re all about.

- Entities are labels for the subjects of your experiment. For instance, if your messages will be sent to Prospective Customers, you should create an Entity named Prospective_Customers.

- Assignment SQL is a SQL query that Eppo will run in BigQuery to retrieve the assignments allocated by the SMS Messages Feature Flag so it knows which message was seen by each user.

- A Fact SQL is a SQL query that Eppo will run in BigQuery to retrieve the outcomes of the experiment (i.e., whether or not the user responded to the SMS). The results of a Fact SQL feed into the Metrics definition.

- Metrics measure and compare the impact of the experiment, for each group of users, on your business metrics. For example, User Response Rate is a metric that you may use to ascertain the success of each SMS reminder message in getting users to reply.

Now that you understand what you have to configure, follow the steps below to set them up.

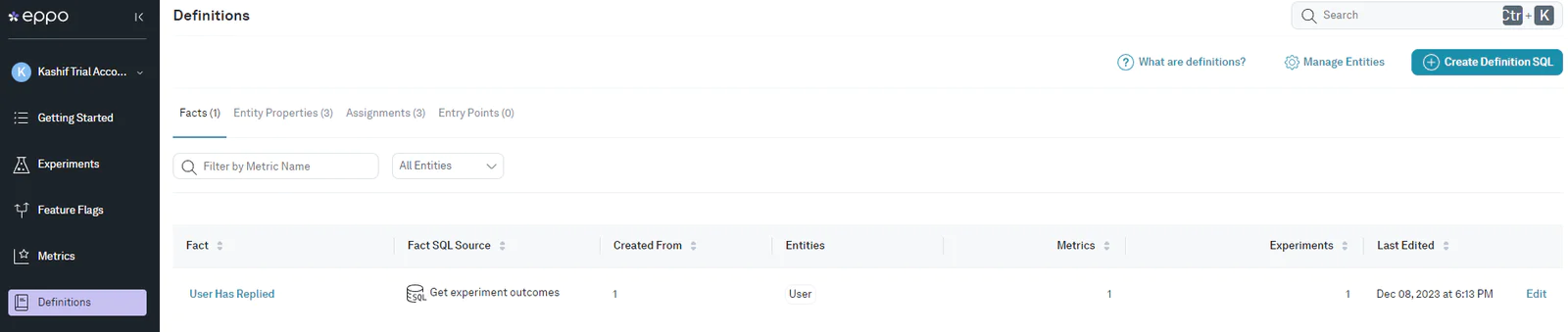

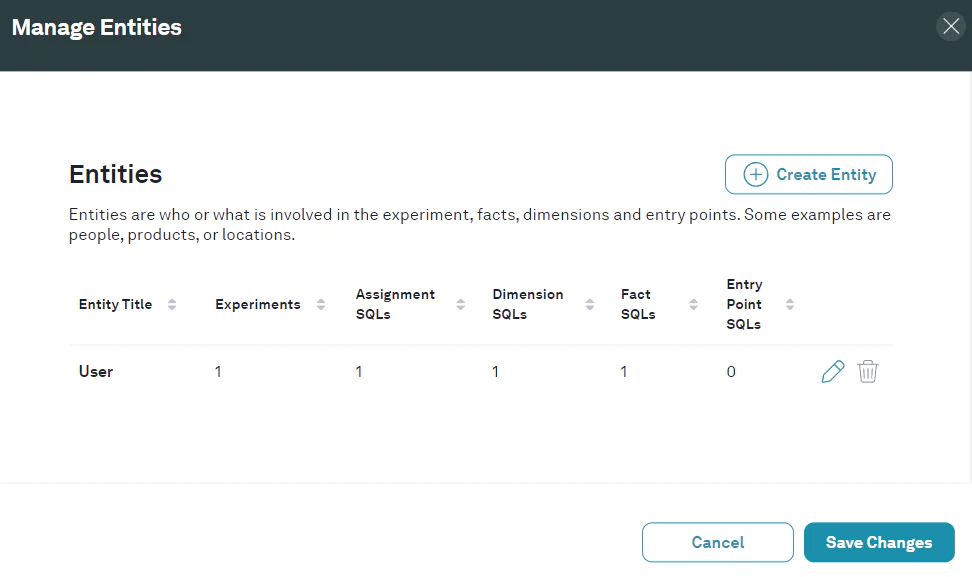

1. To create an Entity, navigate to Definitions in the menu. Then click on Manage Entities > Create Entity and type in User.

When completed, you should see the User Entity as shown below:

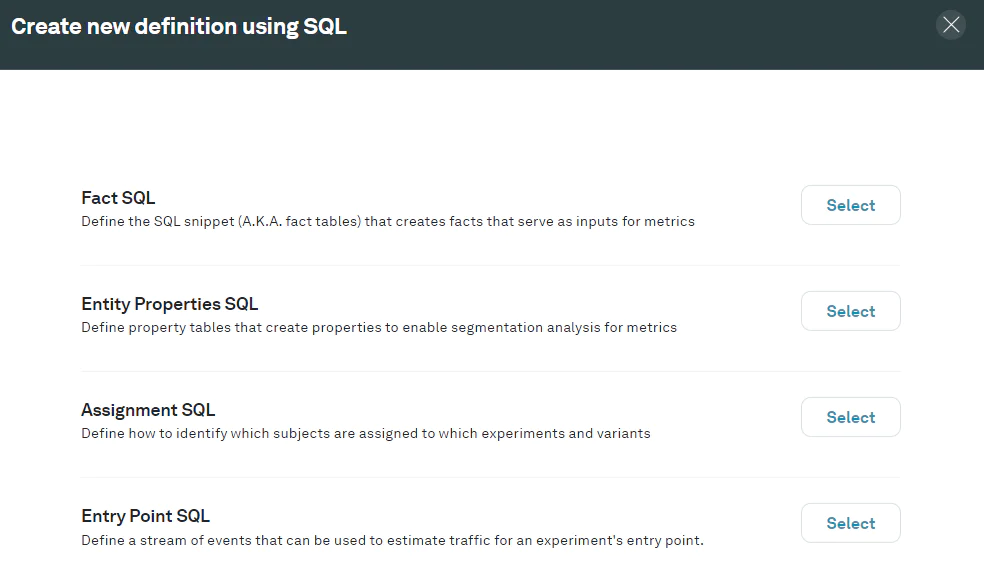

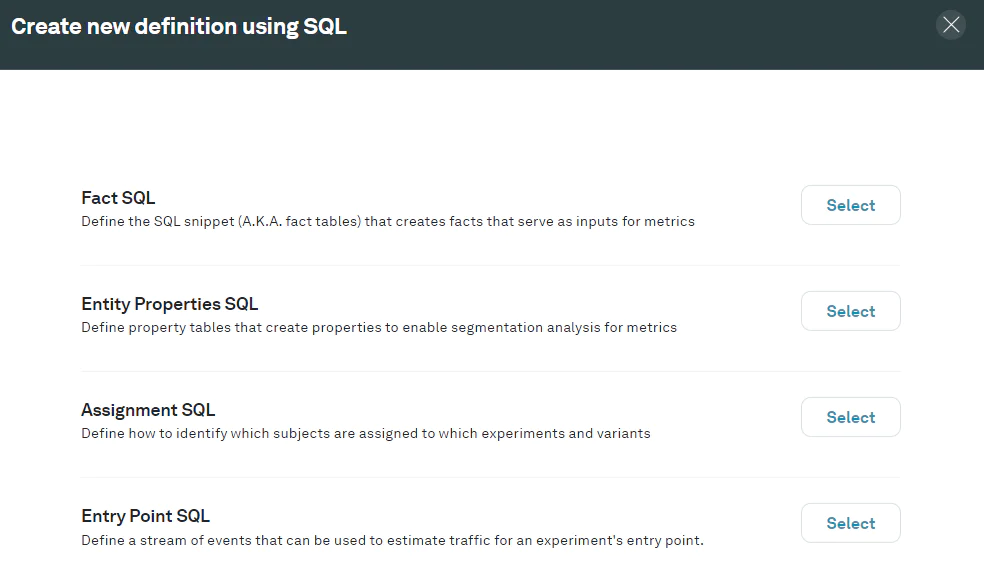

2. To create an Assignment SQL, go back to the Definitions page, click on the Create Definition SQL button and select Assignment SQL.

In the Create New Assignment SQL modal, enter the following SQL query. Make sure to change the table name and path, if yours is different from mine.

Then, click Run.

You should now see sample data retrieved from the BigQuery Assignments table. Map the Experiment Subjects, Timestamp of Assignment, Experiment Key, and Variant fields to the USER_ID, ORIGINAL_TIMESTAMP, EXPERIMENT, and VARIATION columns respectively.

Then click on Save and Close to complete the Assignment SQL definition.

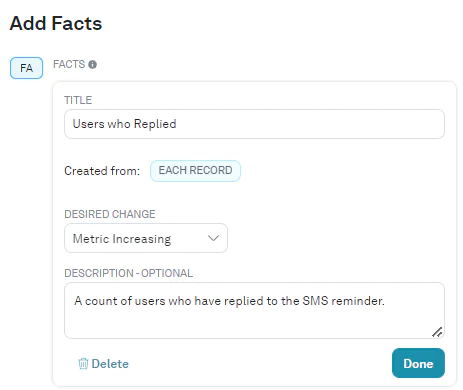

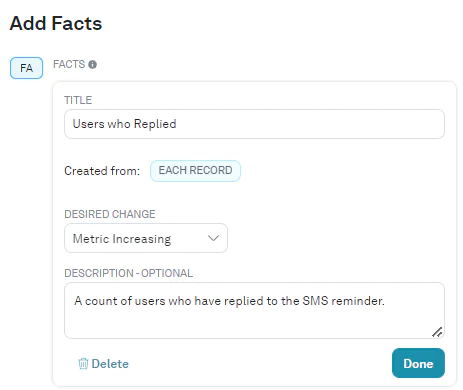

3. To create a Fact SQL, go back to the Create Definition SQL modal and select Fact SQL.

In the modal that opens up, enter the following SQL query. Make sure to change the table name and path, if yours is different from mine.

Then, click Run.

You should now see some sample data retrieved from the BigQuery Experiment Outcomes table. Map the Timestamp of Creation and Entity fields to Original_Timestamp and User_ID columns, as shown below.

Also, click on Add Fact. Set the Title to Users who Replied and Desired Change to Metric Increasing. The description field is optional. Then click on Save and Close to complete the Fact SQL definition.

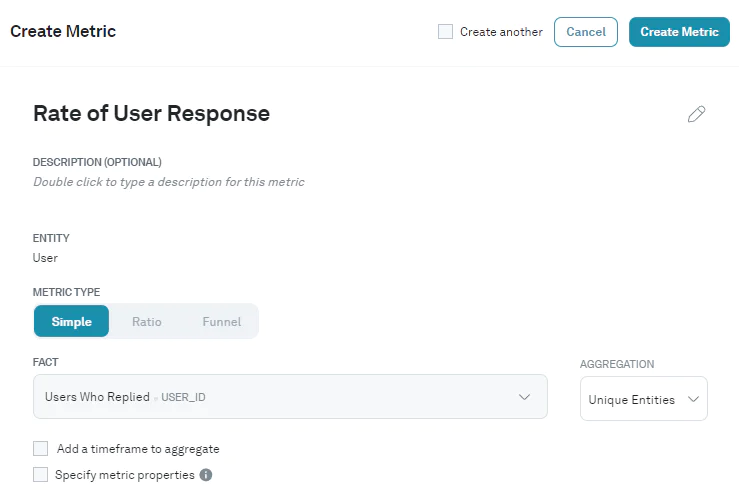

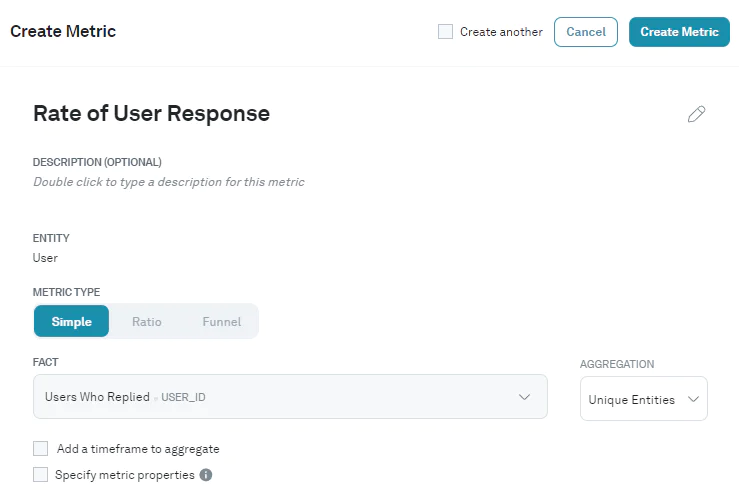

4. To create a Metric definition, navigate to Metrics > Create > Metrics.

Give this Metric the title Rate of User Response. Then select the Fact you just created, “Users who Replied,” and choose to aggregate it by Unique Entities. Leave other fields at default values and click Create Metric.
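To make the metric concrete, the sketch below computes a response rate per variation from a handful of made-up rows: the number of unique users who replied, divided by the number of unique users assigned. It is meant to convey the idea only. The actual computation runs as SQL in your warehouse, and the row shapes here (including the assumption that the outcomes list holds reply events only) are invented for illustration.

```javascript
// assignments: [{ userId, variation }]
// outcomes:    [{ userId }] with one row per reply event (assumed shape)
function responseRateByVariation(assignments, outcomes) {
  const repliedUsers = new Set(outcomes.map((row) => row.userId));
  const seenUsers = new Set();
  const stats = {};

  for (const { userId, variation } of assignments) {
    if (seenUsers.has(userId)) continue; // count each user once
    seenUsers.add(userId);
    if (!stats[variation]) stats[variation] = { assigned: 0, replied: 0 };
    stats[variation].assigned += 1;
    if (repliedUsers.has(userId)) stats[variation].replied += 1;
  }

  for (const variation of Object.keys(stats)) {
    stats[variation].rate = stats[variation].replied / stats[variation].assigned;
  }
  return stats;
}

// Made-up rows: u4 replied twice but is still counted once.
const stats = responseRateByVariation(
  [
    { userId: 'u1', variation: 'Current_Message' },
    { userId: 'u2', variation: 'Current_Message' },
    { userId: 'u3', variation: 'New_Message' },
    { userId: 'u4', variation: 'New_Message' },
  ],
  [{ userId: 'u1' }, { userId: 'u3' }, { userId: 'u4' }, { userId: 'u4' }]
);
console.log(stats.Current_Message.rate, stats.New_Message.rate); // prints 0.5 1
```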

Congrats, you’ve now created an Experiment definition!

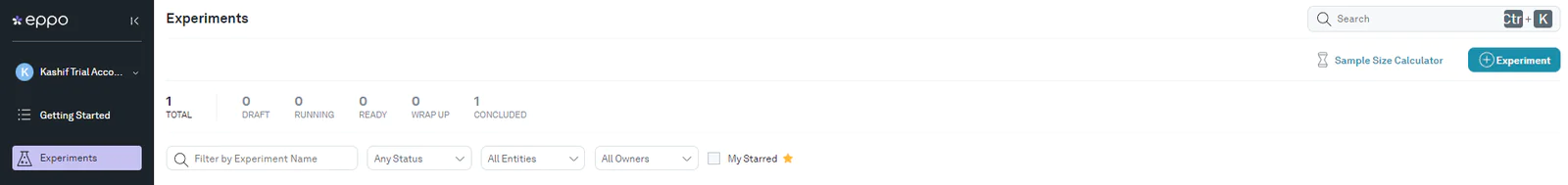

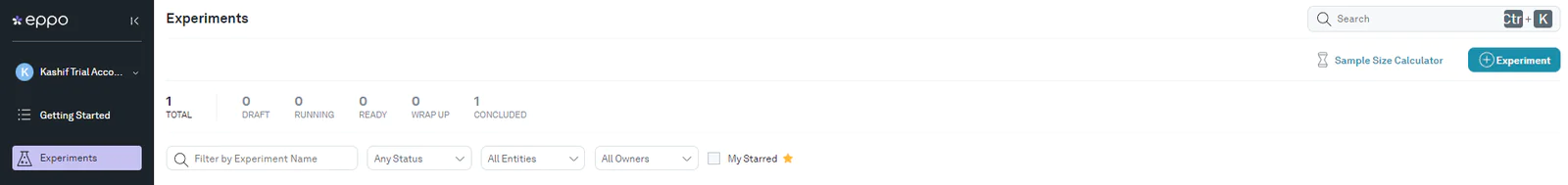

Create an Experiment

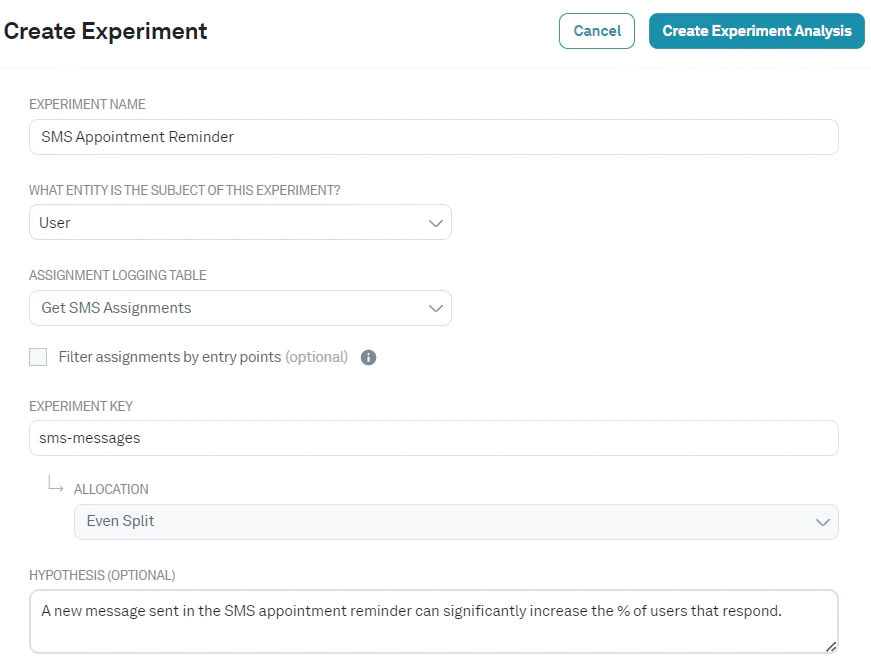

With the definitions in place, this is how you will create an Experiment.

1. In the main navigation panel, navigate to Experiments and click the blue button with a plus sign.

2. Give the experiment a name. Select User as the Entity, Get SMS Assignments as the Assignment Logging Table and sms-messages as the Experiment Key. The hypothesis section is optional.

Then click Create Experiment Analysis.

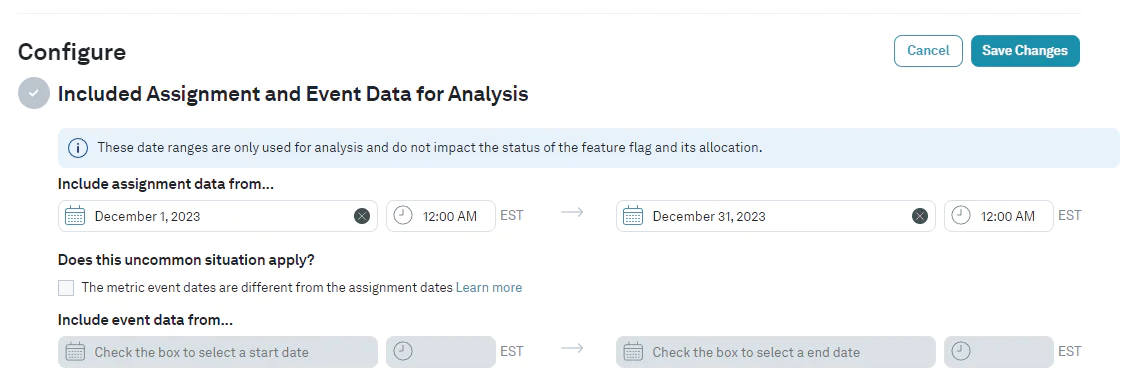

3. Pick the date range during which you intend to run the Experiment. Leave all other fields with default values and click Save Changes.

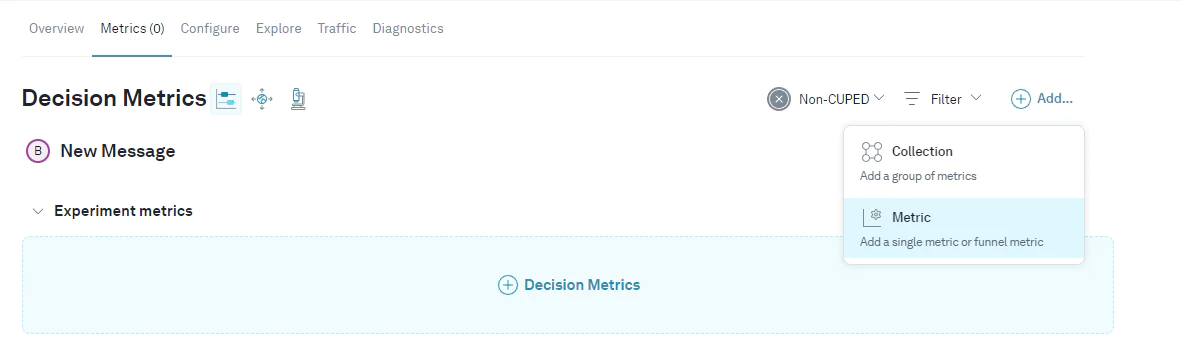

4. In the Decisions Metrics section of the Experiment, click Add > Metric and select the Rate of User Response Metric as shown below:

5. You’ve created your first Experiment!

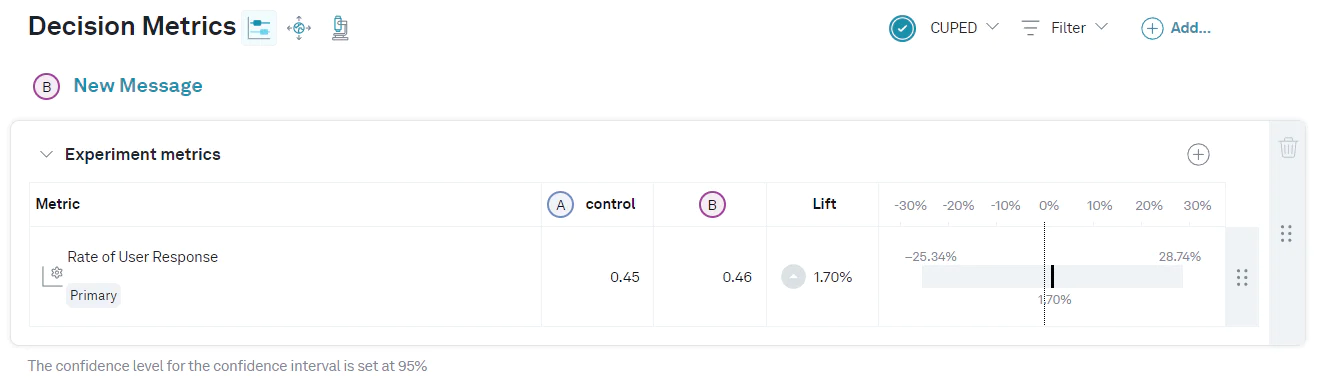

Experiment analysis runs every day until the end of the experiment, and you can see the impact on your Metrics described in terms of Lift and its Confidence Interval. Here’s a screenshot of the Metrics for my Experiment:

The Lift in the Rate of User Response metric, calculated from my experiment data, averages 1.7%. The statistical analysis estimates, with 95% confidence, the range of possible values of the true Lift. Since this range includes a 0% Lift, I can’t conclude that the new SMS message is any better at getting users to respond than the current message.

Now that’s anticlimactic, but aren’t I glad I tested my idea before rolling it out?
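For some intuition about how a Lift and its interval relate, here is a rough sketch using a textbook approximation (the delta method for a ratio of two proportions). Eppo's own statistical methods may well differ, and the counts below are made up rather than taken from my experiment.

```javascript
// Relative lift of treatment over control, with an approximate 95% interval.
// Each argument is { assigned, replied }. Assumes both response rates are above zero.
function liftWithInterval(control, treatment) {
  const pc = control.replied / control.assigned;
  const pt = treatment.replied / treatment.assigned;
  const ratio = pt / pc;

  // Variance of each observed proportion.
  const varC = (pc * (1 - pc)) / control.assigned;
  const varT = (pt * (1 - pt)) / treatment.assigned;

  // Delta-method standard error of the ratio pt / pc.
  const se = ratio * Math.sqrt(varT / (pt * pt) + varC / (pc * pc));

  const lift = ratio - 1;
  return { lift, lower: lift - 1.96 * se, upper: lift + 1.96 * se };
}

// Made-up counts: 10% vs 10.5% response rate with 1,000 users per group.
const result = liftWithInterval(
  { assigned: 1000, replied: 100 },
  { assigned: 1000, replied: 105 }
);
console.log(result.lower < 0 && result.upper > 0); // prints true
```

With these counts the observed Lift is about 5%, yet the interval still spans zero, so the data cannot distinguish the new message from the current one. A larger sample narrows the interval.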

Conclusion

Congratulations, you have run your first A/B test! Hopefully, you found that your idea works better than the status quo (and mine!).

This tutorial is only a starting point. There’s much more to experiment with in the Twilio ecosystem; think IVR flows, creating chat widget UIs, and sending email messages.

The goal of experimentation is to test your ideas to determine whether they are likely to improve your users’ experience and business outcomes. You’ve now learnt all you need to run them. The world’s your oyster, happy experimenting!

Kashif loves solving business problems with software and statistics. You can find out more about his work at Data Ka Beta and connect with him on LinkedIn.
