Build a WhatsApp Chatbot to Learn German using GPT-3, Twilio and Node.js


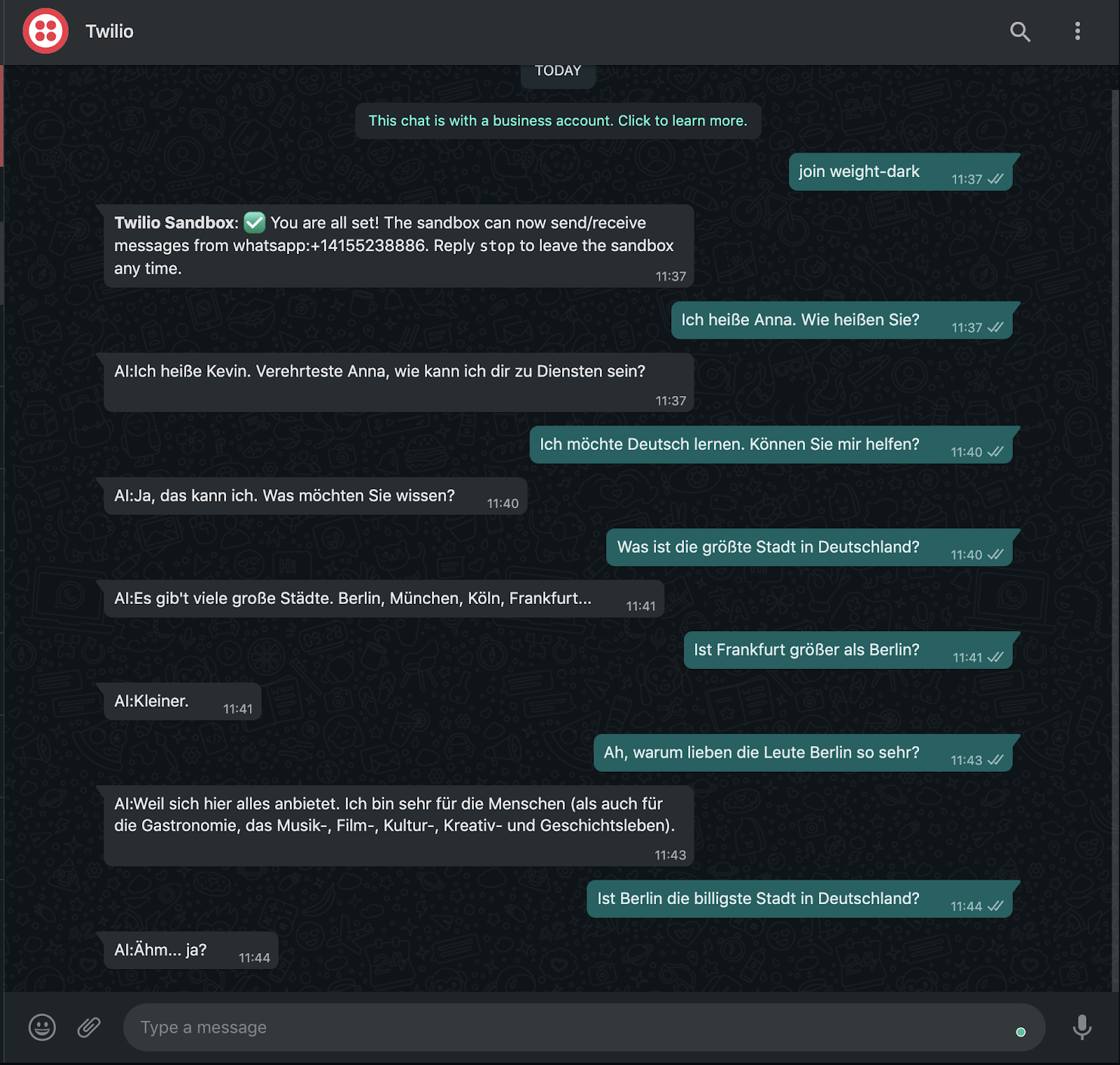

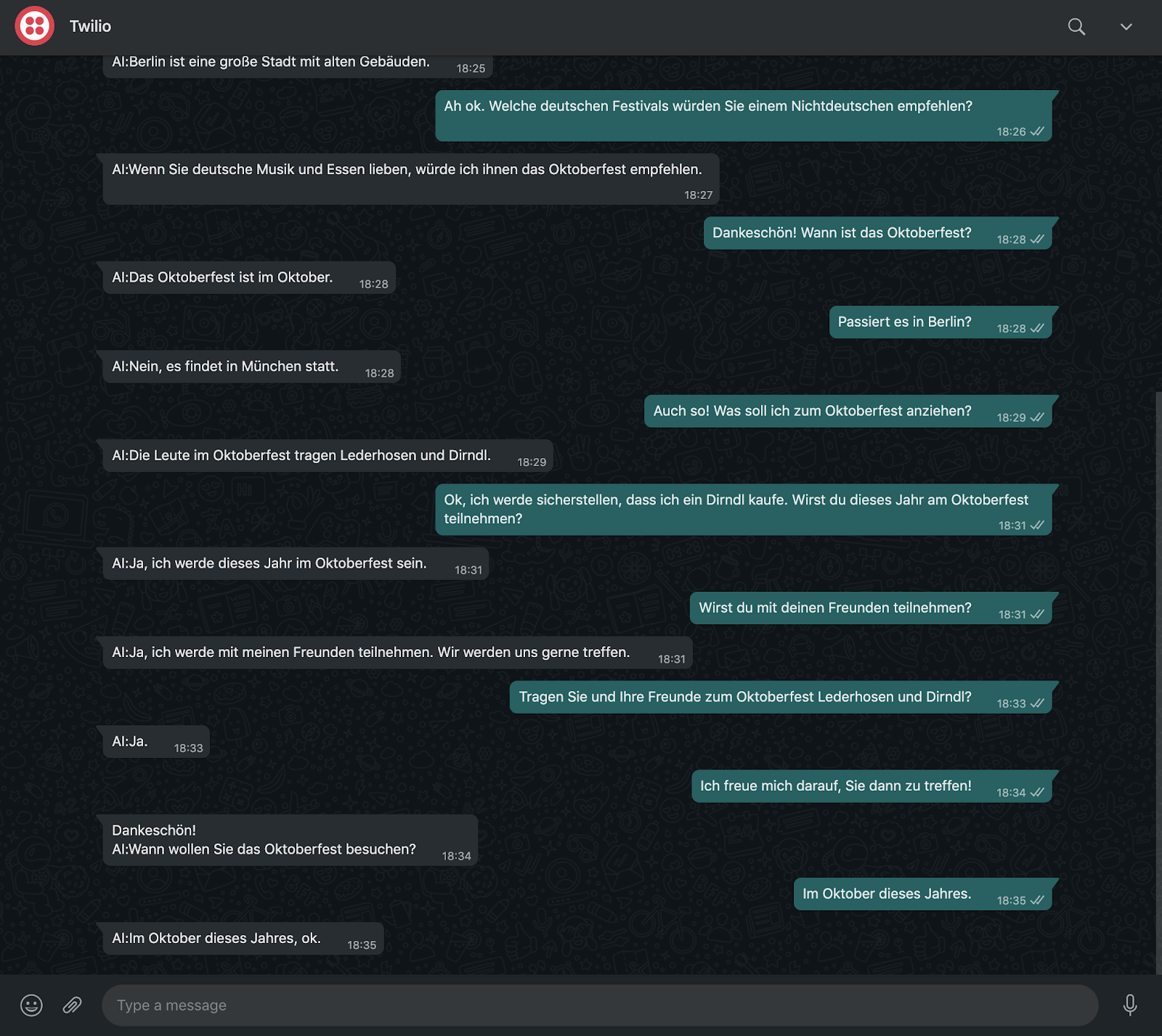

Learning a new language can be hard, and sometimes enrolling in a language school is not enough. The only way around it is constant practice. At times, those around you might not share your interest, or you might not be making enough progress with the learning options you have. Since this can become challenging and demotivating in the long run, why not build an AI-powered chatbot to lessen the burden? Check out the chat I had below with the GPT-3 language model:

In this tutorial, I will walk you through how to build an AI chatbot that helps and encourages you to practice a language you are learning, in a creative and fun way, using the most popular language model of 2020, GPT-3! As I’m on a quest to become fluent in German, I will use that language as the example for this tutorial.

Prerequisites

To complete this tutorial you will need the following:

- Twilio account. Sign up for free if you don’t have one. Use the link provided to obtain $10 credit once you upgrade to a paid account.

- Node version 12 or newer.

- OpenAI account to obtain an API Key for the GPT-3 model and access to the Playground.

- Ngrok to expose our application to the internet.

- A phone with WhatsApp installed and an active mobile number.

What is GPT-3?

GPT-3 (Generative Pre-trained Transformer 3) is the largest language model ever created (at the time of this writing), with 175 billion parameters! It was developed by researchers at OpenAI and is powerful enough to produce text that is often hard to distinguish from human writing. The language model was announced in May 2020 and has not yet been released to the public. You can, however, request private beta access here. GPT-3 has a range of capabilities, including content generation, code generation, semantic search, chat, and many more.

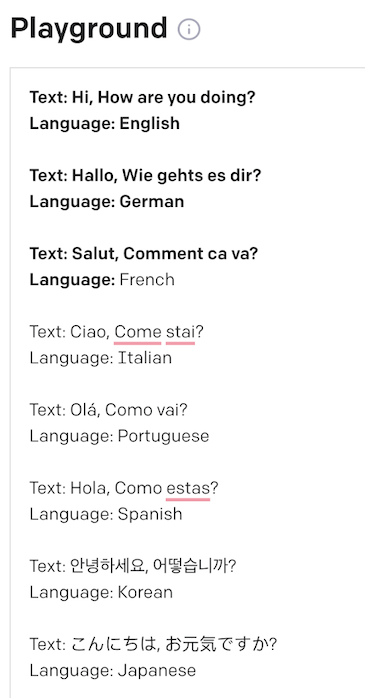

OpenAI researchers provided an API and a Playground, both of which are fairly simple to use and interact with. Here’s an example of some text I fed into the model via the Playground for the model engine to autocomplete greetings in different languages:

Here's the output I got:

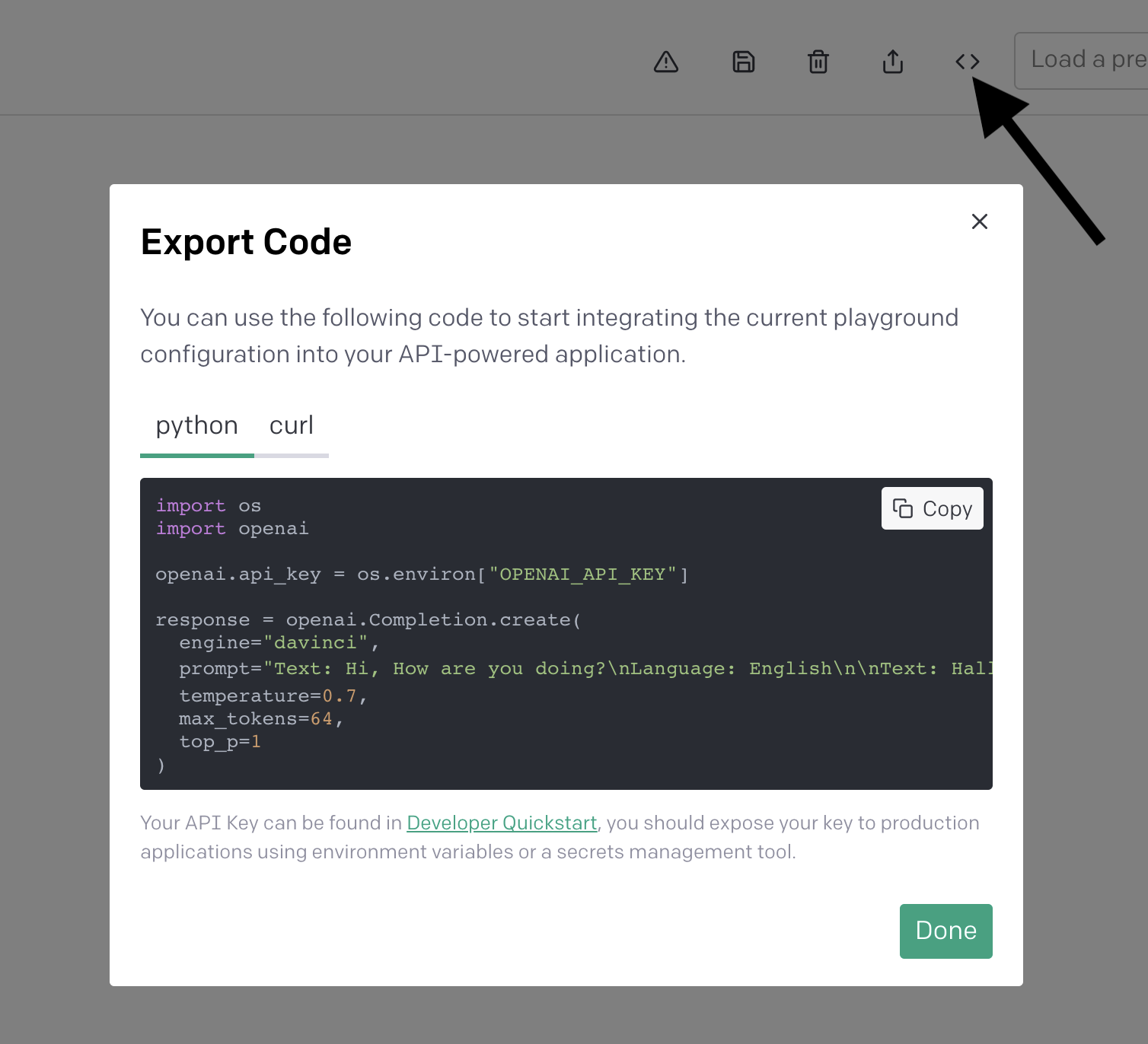

Aside from providing a nice Playground interface, the folks at OpenAI also let you export the Playground configuration as Python code that you can integrate into your application, by clicking on the code icon (<>) as shown below:

In this tutorial, however, I will show you how to do this in Node.js and JavaScript.

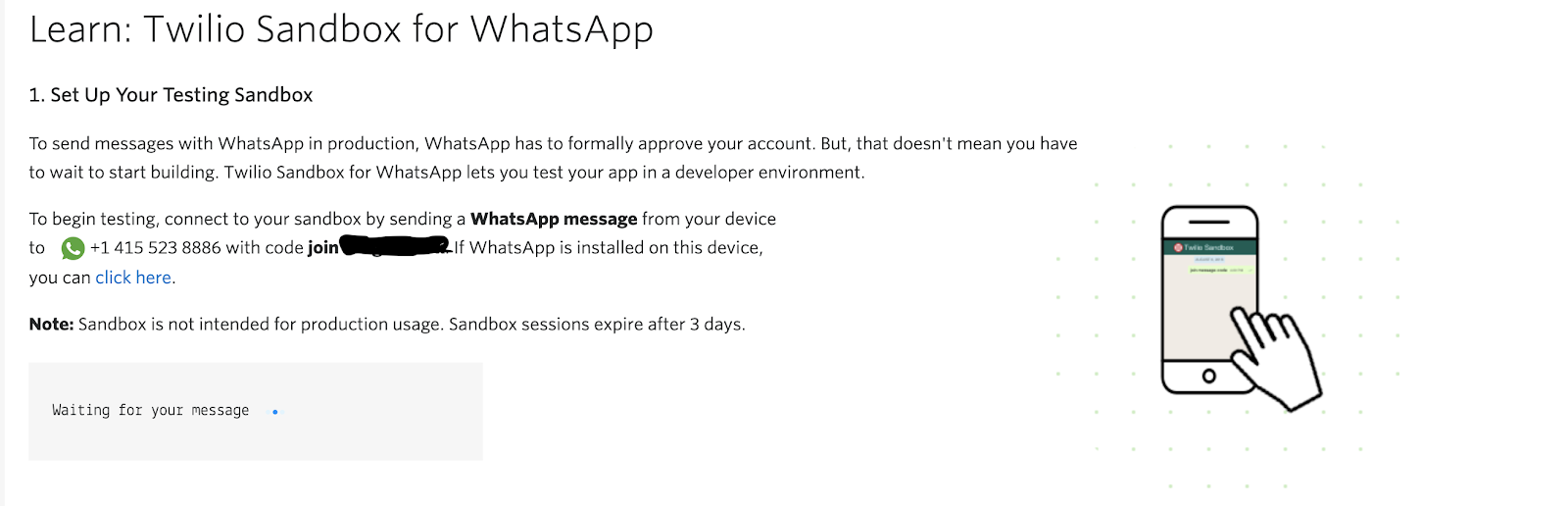

Activate Twilio Sandbox for WhatsApp

After creating your Twilio account, navigate to the Twilio console and head over to the `All Products & Services` tab on the left. Select `Programmable Messaging`, click on `Try it Out`, then `Try WhatsApp` to activate the sandbox as shown below:

Send the code displayed on your console (join ****) as a WhatsApp message from your personal mobile number to the WhatsApp sandbox number shown on the page. After a successful activation, you should receive a confirmation message stating that you are connected to the sandbox and are all set to send and receive messages.

Set up your development environment

In your preferred terminal, run the following commands:

The above commands create our working directory, initialize an empty Node.js application, and install the dependencies needed to build the application, which are:

- The Twilio JavaScript library, to work with Twilio APIs.

- Axios, a helper library for making HTTP requests.

- Express.js, for building our small API.

Send GPT-3 Requests with Node.js

Inside our project folder gpt3-whatsapp-chatbot, create a file called bot.js and in your favorite text editor, add the following lines of code.

The above function makes a POST request to the OpenAI API with the given parameters.

- prompt: a sample text to teach the engine what we expect. The model engine will use this text to generate a response attempting to match the pattern you gave it. In this case, having a basic introductory conversation in German.

- max_tokens: the maximum number of tokens the engine can generate per request.

- temperature: a numeric value that controls the randomness of the response. In this case, we are setting it to 0.9 to get more creative responses.

- stop: specifies the point at which the API will stop generating further tokens.
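The request described above can be sketched roughly as follows. This is not the original listing: the endpoint URL is assumed to be the engine-scoped completions endpoint from the GPT-3 beta, and the `buildCompletionBody` helper, the `max_tokens` value, and the `stop` sequence are illustrative choices, so check them against the OpenAI API reference for your account.

```javascript
// bot.js (sketch): send a completion request to the OpenAI API.
// ASSUMPTION: engine-scoped endpoint from the GPT-3 beta; verify it against
// the current OpenAI API reference before relying on it.
const COMPLETIONS_URL = 'https://api.openai.com/v1/engines/davinci/completions';

// Hypothetical helper: collects the four parameters discussed above.
// The max_tokens value and the stop sequence are illustrative.
function buildCompletionBody(prompt) {
  return {
    prompt,
    max_tokens: 100,  // upper bound on tokens generated per request
    temperature: 0.9, // higher values give more varied, creative replies
    stop: '\nHuman:', // stop before the model writes the next human turn
  };
}

// `post` defaults to axios.post. The default is resolved only when no second
// argument is given, so a stub can be passed in for offline testing.
async function complete(prompt, post = require('axios').post) {
  const response = await post(COMPLETIONS_URL, buildCompletionBody(prompt), {
    headers: { Authorization: `Bearer ${process.env.OPENAI_SECRET_KEY}` },
  });
  // The generated text is expected in the first entry of `choices`.
  return response.data.choices[0].text.trim();
}

module.exports = { buildCompletionBody, complete };
```

Passing the HTTP function in as an optional argument is a design choice made for this sketch only; in normal use you would call `complete(prompt)` and let it fall back to Axios.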

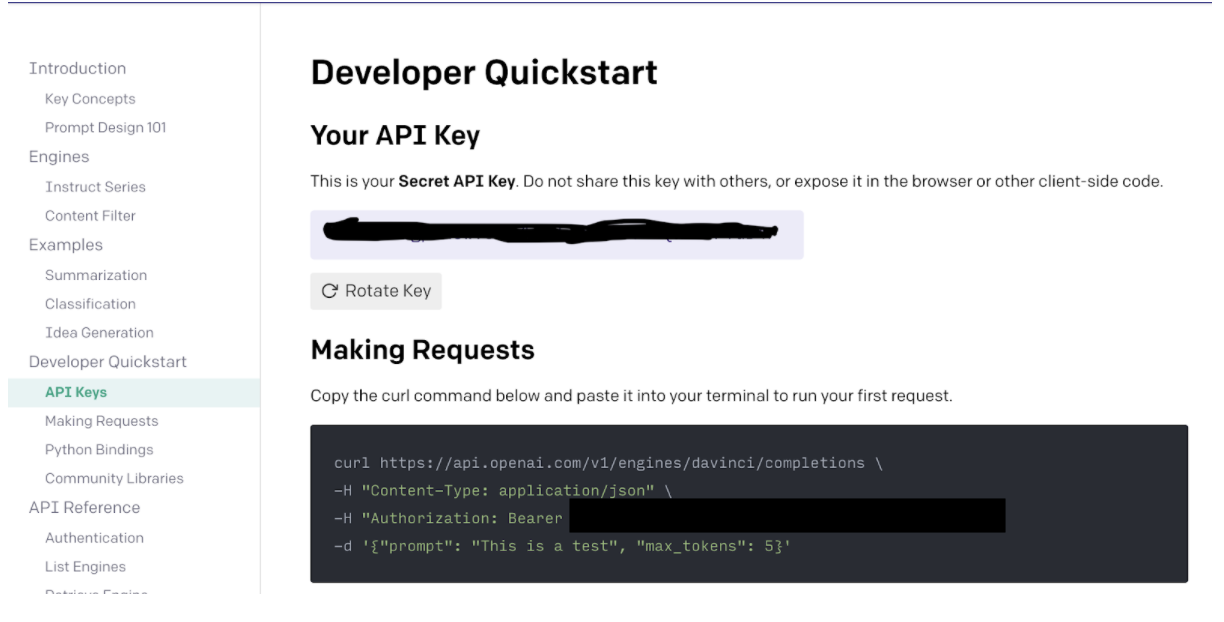

We use the OPENAI_SECRET_KEY to authenticate to the OpenAI API. Given the configuration and the prompt passed to the complete function, we expect the OpenAI engine, in this case davinci, to respond to the question with its name.
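One way to make a missing key obvious is to read it through a small guard before any request is sent. This is only a sketch: the `getApiKey` and `authHeaders` helpers are hypothetical and not part of the original listing. Only the `OPENAI_SECRET_KEY` variable name comes from this tutorial.

```javascript
// Hypothetical helper: read the OpenAI key from the environment and fail early
// with a readable message if it has not been exported yet.
function getApiKey(env = process.env) {
  const key = env.OPENAI_SECRET_KEY;
  if (!key) {
    throw new Error('OPENAI_SECRET_KEY is not set; export it before running bot.js');
  }
  return key;
}

// Hypothetical helper: build the bearer-token Authorization header.
function authHeaders(env = process.env) {
  return { Authorization: `Bearer ${getApiKey(env)}` };
}
```

Failing at startup with a clear message is usually easier to debug than an authentication error returned by the API later on.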

But before running the application, in the OpenAI console, navigate to Documentation > Developer Quickstart > API keys to obtain the OpenAI secret API key as shown below.

Open your terminal and run the following commands to export the OpenAI API key:

If you are on Windows, run:

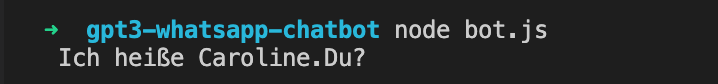

Next, run the application:

You should get a response from the API as shown below.

Note: Running the command gives different results every time as a new response is generated.

Building our API

For our WhatsApp chatbot to interact with the function we have just built, we will need to build a small API, and then configure a webhook in the Twilio console to receive incoming messages.

Create another file named api.js inside our project folder and add the following lines of code.

The implementation above creates a webhook, which is basically the /whatsapp endpoint that we will later configure in the Twilio console, to receive any incoming message that we send to the sandbox. The message is then passed on to the complete function that communicates with the GPT-3 engine as we will see below.

The response from the engine, in this case answer, is then sent back to the user using TwiML (Twilio Markup Language).

Be sure to keep an eye on the console, as we have set it to log any errors.
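The webhook flow described above could look roughly like the sketch below. It is not the original listing: the `createWhatsappHandler` and `startServer` names are hypothetical, the German fallback message is illustrative, and the sketch assumes that bot.js exports `complete`. The handler receives `complete` and Twilio's `MessagingResponse` class as arguments so that it can be exercised without a running server. `Body` is the form field in which Twilio delivers the incoming message text.

```javascript
// api.js (sketch): webhook that relays WhatsApp messages to GPT-3.
// Hypothetical factory: returns an Express-style (req, res) handler.
function createWhatsappHandler(complete, MessagingResponse) {
  return async (req, res) => {
    const twiml = new MessagingResponse();
    try {
      // Twilio sends the message text in the form-encoded `Body` field.
      const answer = await complete(req.body.Body);
      twiml.message(answer);
    } catch (err) {
      // Log the failure and still reply, so the user is not left waiting.
      console.error(err);
      twiml.message('Entschuldigung, etwas ist schiefgelaufen.');
    }
    res.set('Content-Type', 'text/xml');
    res.send(twiml.toString());
  };
}

// Wires the handler into Express on port 3000.
// ASSUMPTION: express and twilio are installed and ./bot exports `complete`.
function startServer(port = 3000) {
  const express = require('express');
  const { MessagingResponse } = require('twilio').twiml;
  const { complete } = require('./bot');

  const app = express();
  app.use(express.urlencoded({ extended: false })); // parse Twilio's form body
  app.post('/whatsapp', createWhatsappHandler(complete, MessagingResponse));
  return app.listen(port, () => console.log(`Listening on port ${port}`));
}
```

Calling `startServer()` at the bottom of the file starts the webhook. Replying with a fallback message inside the `catch` block is a choice made for this sketch, so that a failed GPT-3 request still produces a valid TwiML response.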

Next, update the bot.js file to the following:

The generatePrompt function appends the incoming WhatsApp message to the current session chat log. This is important since we don’t want the engine to lose the context of our conversation. The complete function calls generatePrompt, and the prompt it returns is sent as one of the parameters to the model engine.

I have reduced the temperature to 0.1 but you can play around with it as much as you want.
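The chat-log handling described above can be sketched as follows, assuming a single in-memory session. The seed conversation, the `Human:` and `AI:` labels, and the `recordExchange` helper are illustrative assumptions rather than the original code.

```javascript
// Seed conversation that shows the model the pattern to continue.
// ASSUMPTION: the wording and the Human:/AI: labels are illustrative.
let chatLog =
  'Human: Hallo, wer bist du?\n' +
  'AI: Ich bin dein Deutsch-Übungspartner. Wie kann ich dir helfen?\n';

// Append the incoming WhatsApp message to the session chat log and leave the
// final "AI:" turn open for the model to complete.
function generatePrompt(message) {
  return `${chatLog}Human: ${message}\nAI:`;
}

// Hypothetical helper: store the finished exchange so that the next prompt
// still carries the context of the conversation.
function recordExchange(message, answer) {
  chatLog = `${generatePrompt(message)} ${answer}\n`;
}
```

In this sketch, `complete` would send `generatePrompt(message)` as the prompt and then call `recordExchange(message, answer)` once the reply arrives. Note that the log grows with every message, so a long session will eventually need trimming to stay within the model's token limit.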

Configure WhatsApp API Webhook

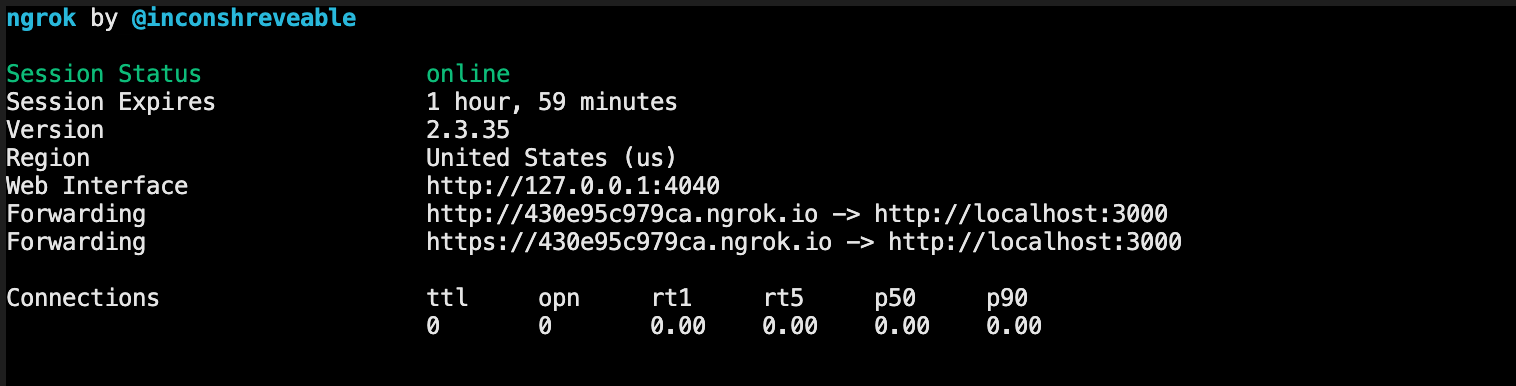

A webhook is an HTTP callback triggered after a certain event has occurred. We need a webhook that will be triggered once we send a message to the WhatsApp sandbox, and we will need ngrok to expose the endpoint we have just created to the internet. To do this, first start the application with the following command:

Next, navigate to where you have downloaded ngrok in your terminal, and run the following command.

This exposes port 3000, where our app is currently running, to the internet. You should see output similar to the following.

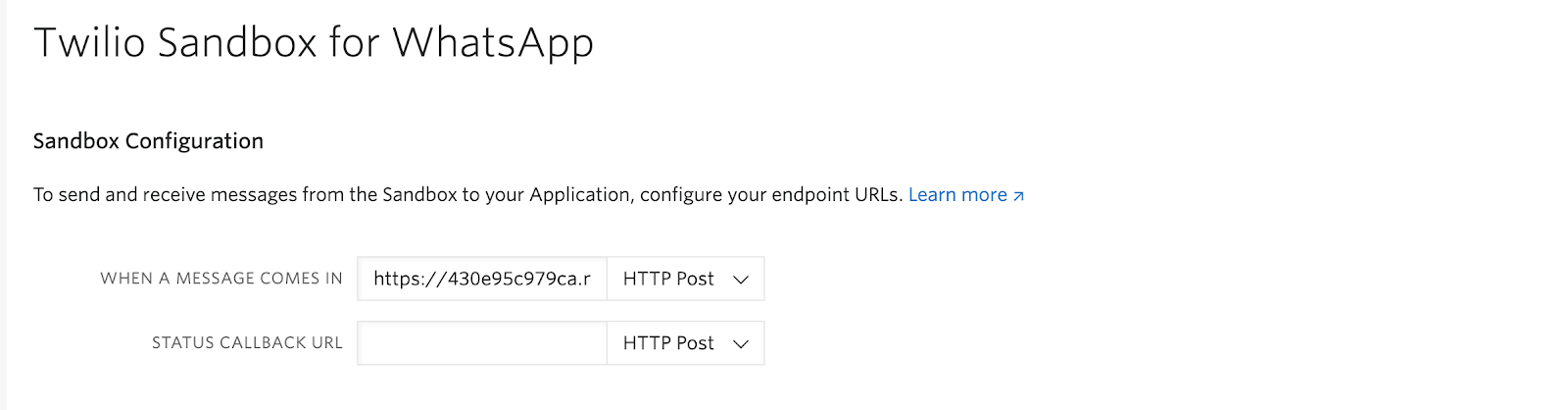

To configure the webhook, in your Twilio console, navigate to Programmable Messaging > Settings > WhatsApp Sandbox Settings. Add /whatsapp at the end of the https URL generated by ngrok and add it in the When a Message comes in field as shown below:

Be sure to save your changes.

Testing the Application

The fun part begins! Take your phone and start having a conversation with the bot we have just built. I adjusted the temperature to 0.7 and had the following conversation. You can play with the parameters sent to the GPT-3 model engine as much as you want.

Conclusion

In this tutorial, we have learnt how to create a WhatsApp chatbot using GPT-3 and Node.js. I hope you had as much fun doing it as I did. GPT-3 has endless possibilities and I can’t wait to see what you build!

Be sure to check out more GPT-3 related articles listed below.

- Control a Spooky Ghost Writer for Halloween with OpenAI's GPT-3 Engine, Python, and Twilio WhatsApp API

- Building a Chatbot with OpenAI's GPT-3 engine, Twilio SMS and Python

- Generating Dragon Ball Fan Fiction with OpenAI's GPT-3 and Twilio SMS

Felistas Ngumi is a software developer and an occasional technical writer.
