Baby Proofing with Raspberry Pi, Machine Learning and Twilio Programmable SMS


This post is part of Twilio’s archive and may contain outdated information. We’re always building something new, so be sure to check out our latest posts for the most up-to-date insights.

Anyone with a baby and a cat knows maintaining the peace requires constant vigilance. Thankfully, complete vigilance can now be bought for the low price of a Raspberry Pi, a webcam and the time it takes to read the rest of this article. Here’s how I turned my Raspberry Pi into a 24/7 rent-a-cop.

Bottom line

Using machine learning and Twilio, your Raspberry Pi can continuously monitor any area of your home with an off-the-shelf USB webcam. This guide walks you through setting up your Raspberry Pi with YOLO, a real-time object detection system; Darknet, an open-source neural network framework; OpenCV, a library for image processing; NNPack, a package for accelerating neural network computation; and Twilio Programmable SMS for sending notifications.

Background

This project came out of a personal need. We’re the proud owners of:

- A cat-obsessed baby

- A baby-obsessed cat

This seems like a recipe for adorable playtime and cute Instagram photos, except for our baby's lack of dexterity and our cat's lack of patience for anything but the most gentle of pats. To give ourselves a break from peacekeeping duties, we fenced off an area of our living room as a "baby-safe cat-free zone." Unfortunately, our warlord of a cat does not respect the sovereignty of this area and takes every opportunity to sneak in.

This sounds like a problem technology can solve! So I converted a Raspberry Pi and webcam into an AI rent-a-cop that uses Twilio to send me a text message whenever our cat attempts a cross-border covert operation. Sure, like any rent-a-cop, the Pi can’t keep order on its own (next project) but it does give us peace of mind.

Hardware Needed

- A Raspberry Pi (works with the RPi 3 B+ and RPi 4; I recommend the RPi 4)

- A USB webcam (e.g., a Logitech webcam)

- A power supply for your RPi (e.g., an RPi 3 power supply for the RPi 3, or an RPi 4 power supply for the RPi 4)

- A monitor for your RPi (I'm partial to the 5-inch touch display, which plugs directly into your RPi with an HDMI-to-micro-HDMI cable), plus a mouse and keyboard

Setting up YOLO, Darknet and NNPack on the Raspberry Pi

First, we'll prepare everything that YOLO and Darknet need. YOLO (https://pjreddie.com/darknet/yolo/) is short for You Only Look Once, a neural network for object detection. It predicts where the objects in an image are and what they are at the same time. Unlike many other approaches, it uses a single neural network for the whole image, which means it only needs to process the image once (hence the name). This makes it fast enough for real-time object detection even with limited computing power.

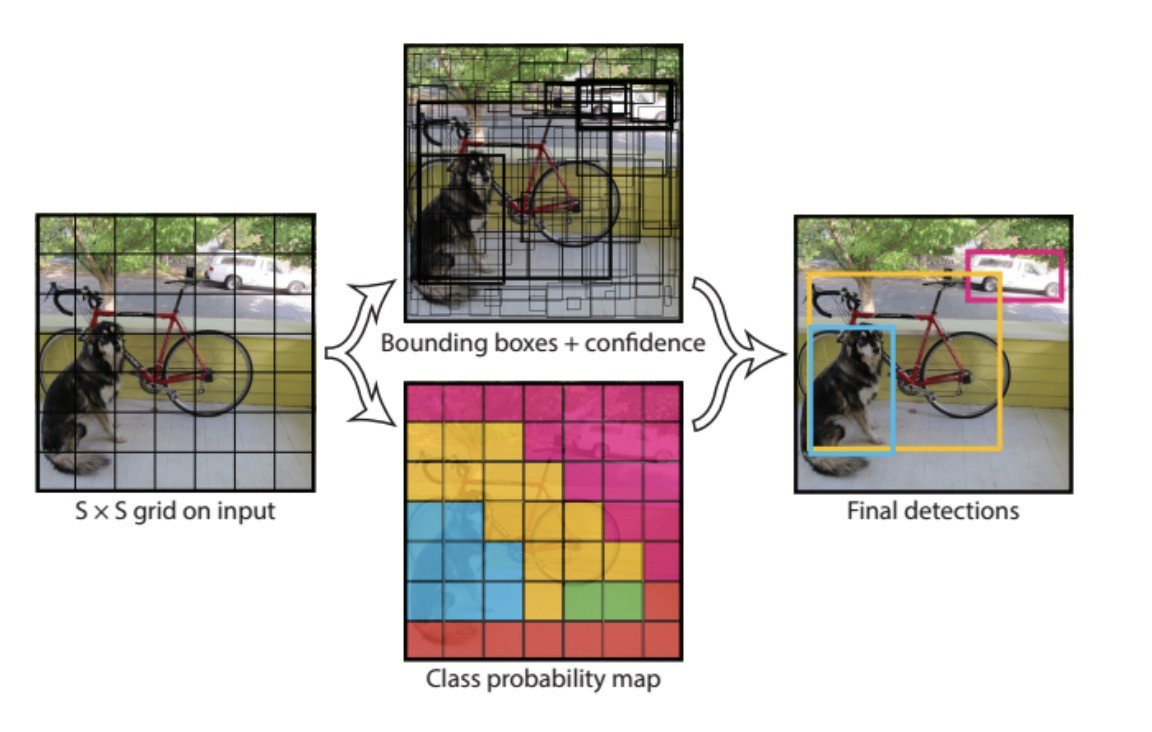

At a high level, it works by dividing the image into an S×S grid of cells. Each grid cell predicts B bounding boxes and C class probabilities. Each bounding box has 5 components: the (x, y) coordinates of the center of the box, the width and height of the box, and a confidence score reflecting whether the box contains an object. The cell's class probabilities are then multiplied by each box's confidence score, giving a final score for the probability that the box contains a specific type of object.

You can read more in the original YOLO paper: https://arxiv.org/pdf/1506.02640v5.pdf
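The grid arithmetic above can be made concrete with a small C sketch. The two helper functions are hypothetical (they are not part of Darknet) and follow the original paper's layout, where each box carries 5 values and each cell carries C class probabilities; later YOLO versions organize their outputs differently. With the paper's PASCAL VOC settings (S = 7, B = 2, C = 20), the network outputs a 7 × 7 × 30 tensor.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: number of values in a YOLOv1-style output.
 * Each of the S*S grid cells predicts B boxes with 5 values each
 * (x, y, w, h, confidence) plus C class probabilities for the cell. */
size_t yolo_output_size(size_t S, size_t B, size_t C)
{
    return S * S * (B * 5 + C);
}

/* Hypothetical helper: class-specific score for one box, i.e. the
 * cell's conditional class probability Pr(Class_i | Object)
 * multiplied by the box's confidence score. */
float yolo_class_score(float class_prob, float box_confidence)
{
    assert(class_prob >= 0.0f && class_prob <= 1.0f);
    assert(box_confidence >= 0.0f && box_confidence <= 1.0f);
    return class_prob * box_confidence;
}
```

For example, yolo_output_size(7, 2, 20) returns 1470, which is 7 × 7 × 30.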

Darknet is an open-source neural network framework built by the same people as YOLO. It's used to train neural networks and defines the architecture YOLO runs on. You can also run YOLO on TensorFlow (via a port called Darkflow), but the official version is based on Darknet, which is also easier to use.

NNPack is a package for accelerating neural network computation on the CPU, without relying on a GPU. I used the darknet-nnpack fork (https://github.com/shizukachan/darknet-nnpack), which combines the original Darknet with NNPack.

First, get your Raspberry Pi up and running (see https://www.raspberrypi.org/documentation/setup/ if it's your first time using it) and plug in your webcam, monitor, keyboard and mouse.

Next, let's build NNPack, which has to be built from source. Before we can do that, we need to install a few build dependencies: the PeachPy assembler library, the Confu configuration system, and the Ninja build system:

Then download and set up NNPack:

Make sure the smoke test passes (it sometimes fails spuriously on the first run; if so, just run it again):

Now copy the build files for NNPack to the appropriate system directories:

Finally clone the Darknet source code:

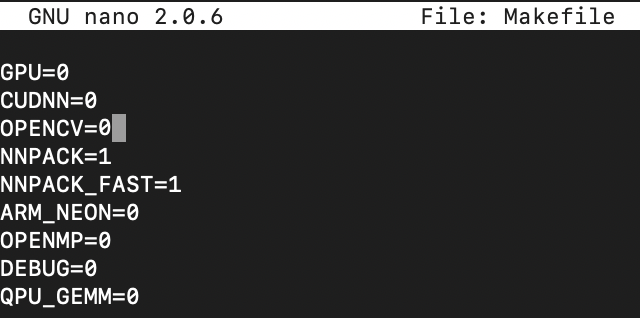

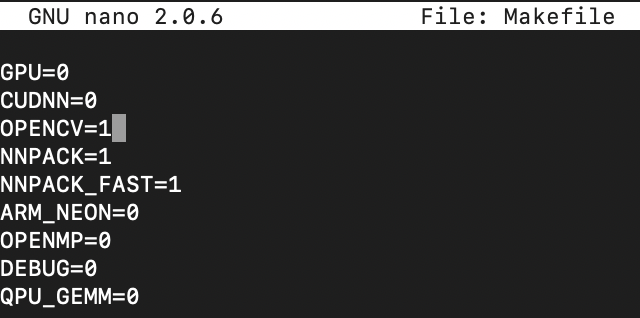

We'll temporarily set the OPENCV flag to 0 so we can verify that YOLO works before we install OpenCV. Open the Makefile in your favorite text editor and set OPENCV=0:

Then save, exit your editor and build it:

Luckily for us, the makers of YOLO and Darknet provide a pre-trained model that recognizes 80 object classes, including "cat", so we don't need to train our own. There's a regular (more accurate) version and a tiny version designed for constrained environments. On an RPi 4 the regular version works but is very slow, and on an RPi 3 it segfaults, so we'll use the tiny version. To download the model, run the following from your darknet-nnpack directory:

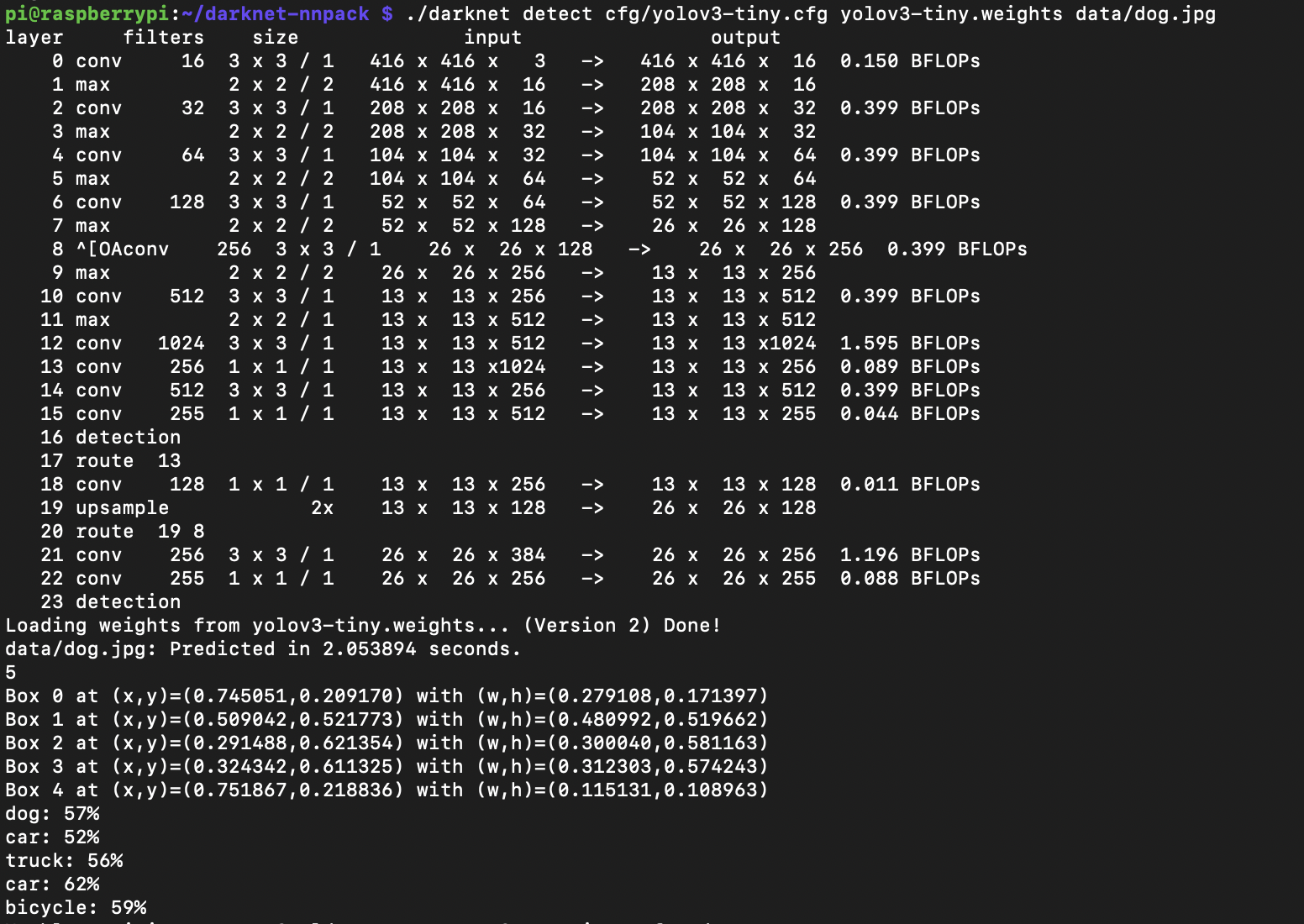

The Darknet-NNPack repository contains a few sample images that you can use to make sure YOLO works. For example, try with data/eagle.jpg, data/dog.jpg, data/person.jpg, or data/horses.jpg:

You should see the bounding boxes and class predictions displayed as below:

If this works, you're ready to move on to the next step: setting up OpenCV and running YOLO in real time on your webcam's input.

Setting up OpenCV

Darknet needs OpenCV to process video from the webcam. The official documentation also says we need CUDA (NVIDIA's platform for GPU computing), but CUDA only works with NVIDIA GPUs, which the Raspberry Pi doesn't have. With NNPack we can skip CUDA and still get satisfactory performance.

There are two ways to install OpenCV: with a package manager such as apt-get, or by building it from source. The simplest method is apt-get:

Compiling from source is much trickier and takes over an hour on the Raspberry Pi. If you want to go that route, you can follow this tutorial by PyImageSearch: https://www.pyimagesearch.com/2017/09/04/raspbian-stretch-install-opencv-3-python-on-your-raspberry-pi/. Skip the steps about creating and working in a virtual environment; you'll want to compile OpenCV directly on the system rather than in a virtual environment. I tested a few versions of OpenCV and found that the latest versions require C++ (Darknet is written in C), so you'll want to install OpenCV 3.4 or earlier. It's also very important to change the swap file size back afterward; I burnt out an SD card by forgetting to do so. Installing with a package manager saves you a lot of hassle.

Compiling Darknet with OpenCV

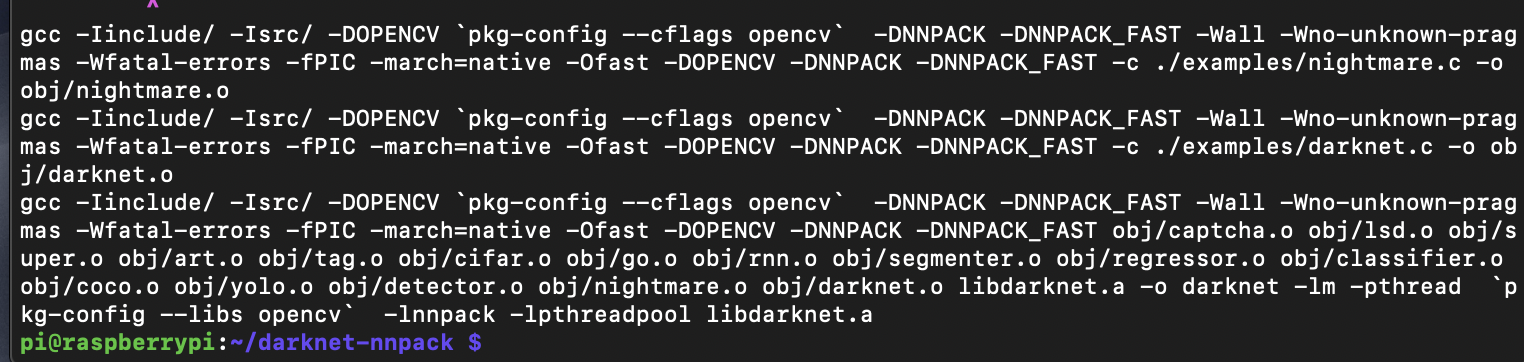

We'll now recompile Darknet with the OPENCV flag turned on:

Edit the Makefile so that OPENCV = 1.

And then run:

It might take a while to compile. When it's done, run the detector with the tiny YOLO weights file by typing the following into a terminal from the darknet-nnpack directory:

You should see a video stream with detections:

Setting up Twilio Notifications

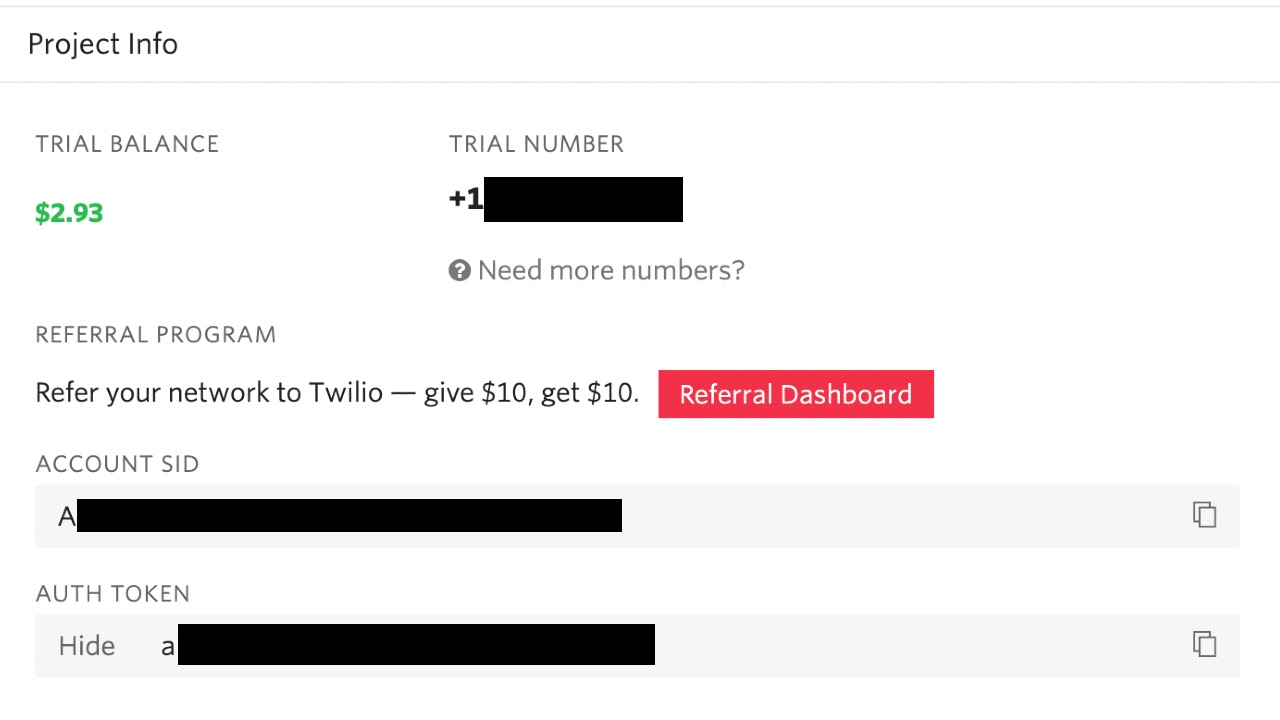

First, create a free Twilio account and get your trial phone number (this is the “from” phone number):

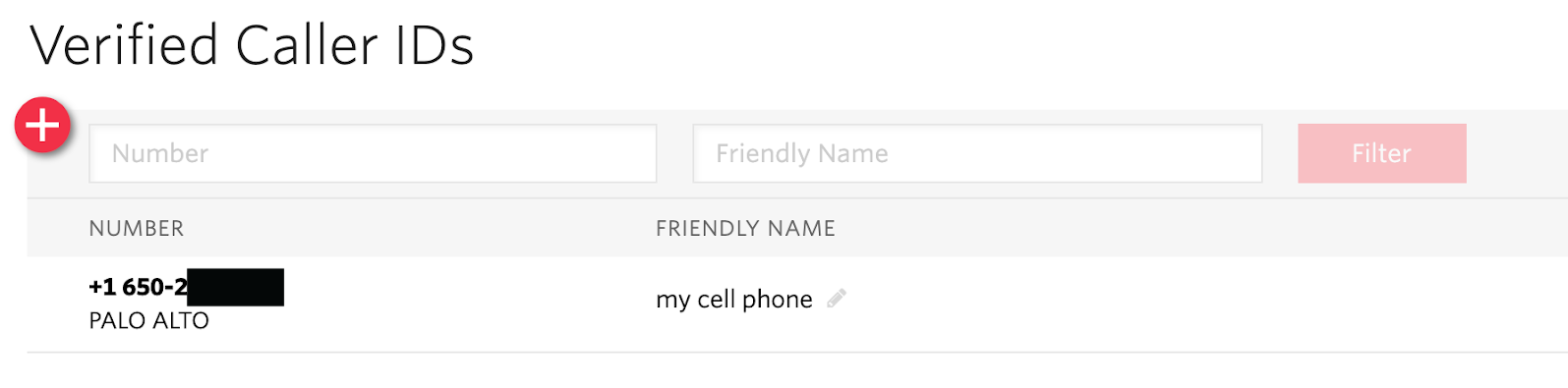

Next, add your cell phone number (this is the “to” phone number) as a verified number:

Now you’ll be able to send messages to your verified phone number from the Twilio number.

To use Twilio's SMS API, we'll need to download and build the Twilio library for C, which depends on libcurl.

Install libcurl if you don’t already have it:

Next, download and build the Twilio library:

Now that the Twilio library is compiled, test it by opening a new terminal and typing:

The command above has a few placeholders that you need to replace:

- Replace *ACCOUNTSID* and *AUTHTOKEN* with the Account SID and Auth Token from your Twilio console.

- Replace *FROMPHONE* with your Twilio trial phone number.

- Replace *TOPHONE* with your own phone number (e.g., +16502223333).

Make sure the phone numbers are given in E.164 format.
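A quick way to catch formatting mistakes before calling Twilio is to check the number's shape locally. The hypothetical is_e164() helper below is a minimal sketch that accepts a + followed by 2 to 15 digits with a non-zero first digit (the same pattern as the commonly used regular expression ^\+[1-9]\d{1,14}$). It only checks the format, not whether the number exists or is verified on your account.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Basic E.164 shape check for strings like "+16502223333": a leading '+',
 * then 2 to 15 digits (country code plus subscriber number) whose first
 * digit is 1-9. It does not check that the number actually exists. */
bool is_e164(const char *number)
{
    size_t digits;

    if (number == NULL || number[0] != '+')
        return false;

    digits = strlen(number + 1);            /* characters after the '+' */
    if (digits < 2 || digits > 15)
        return false;
    if (number[1] < '1' || number[1] > '9')
        return false;                       /* country codes never start with 0 */

    for (size_t i = 1; i <= digits; i++) {
        if (!isdigit((unsigned char)number[i]))
            return false;                   /* no spaces, dashes or brackets */
    }
    return true;
}
```

For example, "+16502223333" passes, while "16502223333" (missing +) and "+1 650 222 3333" (contains spaces) are rejected.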

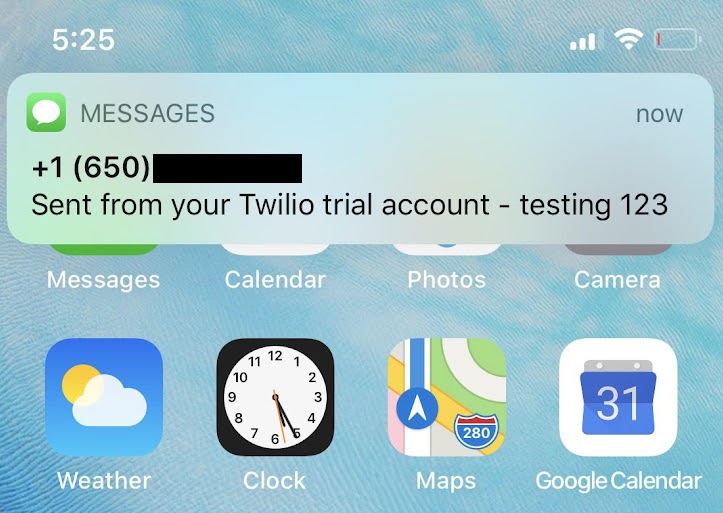

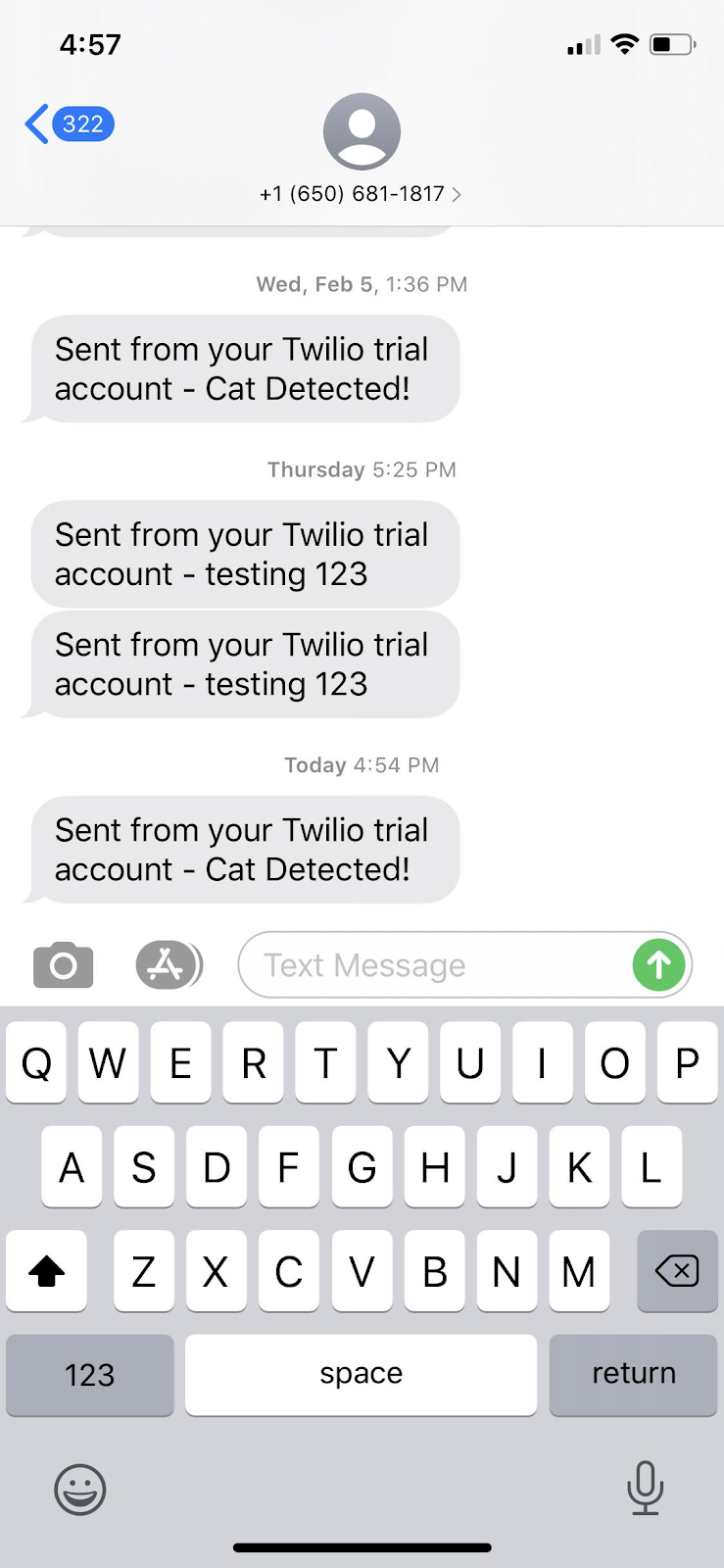

After you run the command you should receive a text message from Twilio:
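Under the hood, sending a text through Twilio is one authenticated HTTP POST to the Messages resource, https://api.twilio.com/2010-04-01/Accounts/{AccountSid}/Messages.json, with the To, From and Body parameters form-encoded and your Account SID and Auth Token used for HTTP basic authentication. The sketch below builds only the form-encoded body; the function names are hypothetical, and this is not the code of the Twilio C library used above. Note that the + in an E.164 number must be encoded as %2B, because a bare + in form data decodes to a space.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Percent-encode src into dst (capacity dst_size). Only the unreserved
 * characters A-Z, a-z, 0-9, '-', '.', '_' and '~' are copied as-is; every
 * other byte becomes %XX. Returns the encoded length, or -1 if dst is
 * too small. */
static int form_encode(const char *src, char *dst, size_t dst_size)
{
    static const char hex[] = "0123456789ABCDEF";
    size_t out = 0;

    assert(src != NULL && dst != NULL);
    if (dst_size == 0)
        return -1;

    for (const unsigned char *p = (const unsigned char *)src; *p != '\0'; p++) {
        int keep = (*p >= 'A' && *p <= 'Z') || (*p >= 'a' && *p <= 'z') ||
                   (*p >= '0' && *p <= '9') ||
                   *p == '-' || *p == '.' || *p == '_' || *p == '~';
        size_t need = keep ? 1 : 3;

        if (out + need >= dst_size)         /* keep room for the '\0' */
            return -1;
        if (keep) {
            dst[out++] = (char)*p;
        } else {
            dst[out++] = '%';
            dst[out++] = hex[*p >> 4];
            dst[out++] = hex[*p & 0x0F];
        }
    }
    dst[out] = '\0';
    return (int)out;
}

/* Hypothetical helper: build "To=...&From=...&Body=..." for Twilio's
 * Messages resource. Returns 0 on success, or -1 if a value is too long
 * or buf is too small. */
int build_sms_body(const char *to, const char *from, const char *body,
                   char *buf, size_t buf_size)
{
    char to_enc[64], from_enc[64], body_enc[1024];
    int n;

    if (form_encode(to, to_enc, sizeof to_enc) < 0 ||
        form_encode(from, from_enc, sizeof from_enc) < 0 ||
        form_encode(body, body_enc, sizeof body_enc) < 0)
        return -1;

    n = snprintf(buf, buf_size, "To=%s&From=%s&Body=%s",
                 to_enc, from_enc, body_enc);
    return (n < 0 || (size_t)n >= buf_size) ? -1 : 0;
}
```

With libcurl, the resulting string could be passed through CURLOPT_POSTFIELDS, with the Account SID and Auth Token set through CURLOPT_USERNAME and CURLOPT_PASSWORD.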

Detect cats and send SMS

The src/demo.c example from Darknet already has almost all the functionality we need, so instead of writing our own from scratch, we'll modify it for our purposes.

Go to the darknet-nnpack directory and open src/demo.c with your favorite editor:

At the top of the file, add in two variables we’ll use later on to make sure we don’t spam our phones with too many text messages:
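The exact variables are in the author's full demo.c. As a hypothetical sketch of the idea, the throttle can be built from two pieces of state, the time of the last alert and a fixed minimum interval, plus a helper that decides whether a new SMS may go out. The names last_sms_time, sms_interval_seconds and sms_allowed() are assumptions, not the author's code.

```c
#include <assert.h>
#include <stdbool.h>
#include <time.h>

/* Hypothetical throttle state: when the last SMS was sent (0 = never)
 * and the minimum gap between messages (2 minutes). */
static time_t last_sms_time = 0;
static const double sms_interval_seconds = 120.0;

/* Returns true, and records `now` as the last send time, if at least
 * sms_interval_seconds have passed since the previous alert (or no alert
 * has been sent yet). Otherwise returns false so the caller skips the SMS. */
bool sms_allowed(time_t now)
{
    if (last_sms_time != 0 && difftime(now, last_sms_time) < sms_interval_seconds)
        return false;
    last_sms_time = now;
    return true;
}
```

In the detection code, the SMS call would then be wrapped in `if (sms_allowed(time(NULL))) { ... }`, so repeated detections within two minutes are ignored.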

In the detect_in_thread() function, find the draw_detections() call and, right after it, add a call to a new function called detect_cat():

Below the detect_in_thread() function let's define the new detect_cat() function:
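The author's detect_cat() is in the full demo.c. As a hedged sketch of the core check, the function below scans the detections in the same way as the loop in Darknet's draw_detections(): for each box it looks at the probability of every class and reports a hit when the class named "cat" (or any other target) is above the threshold. The sketch_detection struct is a hypothetical, simplified stand-in for Darknet's own detection type, keeping only the class count and the per-class probabilities for one box.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Simplified, hypothetical stand-in for Darknet's detection struct:
 * one probability per class for a single bounding box. */
typedef struct {
    int classes;
    const float *prob;
} sketch_detection;

/* Returns true if any detection assigns the class called `target`
 * (e.g. "cat") a probability above `thresh`. names[j] is the label
 * for class index j, as in the model's class-name list. */
bool contains_class(const sketch_detection *dets, int num_dets,
                    const char *const *names, const char *target,
                    float thresh)
{
    assert(dets != NULL || num_dets == 0);

    for (int i = 0; i < num_dets; i++) {
        for (int j = 0; j < dets[i].classes; j++) {
            if (dets[i].prob[j] > thresh && strcmp(names[j], target) == 0)
                return true;
        }
    }
    return false;
}
```

Inside detect_cat(), a true result would be the trigger for sending the SMS, subject to the 2-minute limit.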

Remember to replace the four placeholders as you did above.

Here’s the full demo.c file with our modifications: https://gist.github.com/alina/5c6dbe009b787065e6837e352aa0f059

With these changes, the program checks whether a cat is detected in the live feed from the webcam and, if so, sends us an SMS. We don't want to spam our phones by sending a message every cycle, so we limit it to one text every 2 minutes.

Remember to run make from the darknet-nnpack directory to rebuild the application:

And then run it:

This will launch a window showing the output of your webcam with bounding boxes around any objects it detects. Point it at a cat (or a picture of a cat if one isn't readily available) and you should receive a text message alerting you.

Now place the webcam wherever you want cats monitored and enjoy!

Alina Libova Cohen is a venture capitalist, entrepreneur and software engineer. Most recently she was a General Partner at Initialized Capital and, prior to that, led startup investing for Tamares. Before transitioning to VC, she was a software engineer at Facebook following its acquisition of RecRec, a computer vision startup she cofounded. You can follow her on Twitter: @alibovacohen.
