Sound Intelligence with Audio Identification and Recognition using Vector Embeddings via Twilio WhatsApp


In recent years, the growth of messaging platforms, especially in business environments, has led to an increasing demand for innovative solutions that can analyze audio messages. However, processing and understanding audio files, especially in the context of customer interactions, can be challenging. Common issues involve:

- Difficulty in transcribing audio accurately, especially when dealing with various accents, speech speeds, and background noises.

- Lack of personalized responses based on the content of the audio message.

- No integration between speech recognition and content-based recommendation systems.

- Inability to store and search audio data efficiently for later retrieval and comparison.

This application addresses these challenges by combining voice analysis and natural language processing to enable intelligent music discovery through WhatsApp. It uses librosa to extract five voice characteristics from each audio message: pitch stability, voice texture, speech rhythm, vocal resonance, and articulation clarity. Instead of traditional audio fingerprinting, it transcribes the audio with OpenAI's Whisper model and generates vector embeddings with text-embedding-ada-002. The voice analysis features are stored alongside these embeddings in a Qdrant vector database, which enables similarity-based matching for personalized recommendations.

By integrating with Twilio's WhatsApp Business API, the application reaches users on a platform with more than 2 billion users worldwide, and it processes audio messages asynchronously using FastAPI's background tasks. It combines acoustic analysis with GPT-4o to generate contextual music recommendations, giving users personalized song suggestions based on both their voice characteristics and the content of their messages. Each recommendation includes the song name, artist, the reasoning behind the match, and platform links.

This architecture is particularly significant as it demonstrates:

- The practical application of multimodal and multilingual AI in consumer applications, particularly voice-based music discovery through messaging platforms

- The feasibility of complex audio processing in asynchronous messaging environments

- A scalable approach to content recommendation that combines both acoustic and semantic features

- An implementation pattern for handling compute-intensive tasks in a message-based system

- Leveraging existing messaging infrastructure to deliver AI capabilities to billions of users without additional software installation

In this tutorial, you will build a sound intelligence system by integrating FastAPI, Twilio WhatsApp, OpenAI, and Qdrant. The application processes incoming audio messages, extracts key features, transcribes the audio, generates embeddings for similarity search, and provides personalized music recommendations.

Prerequisites

Before you begin, ensure that you have the following:

- A free Twilio account. Sign up here if you have not yet created an account.

- Python 3.7 or higher installed globally

- An OpenAI account.

- ngrok. You will use this handy utility to expose the application running on your machine at a public URL so that Twilio can reach your webhook.

- Basic familiarity with Python.

Set up the project

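Open a terminal (or your editor's integrated terminal), create a project directory named Audio-Identification, move into it, and create and activate a virtual environment. For example, run python -m venv venv, then source venv/bin/activate on macOS or Linux, or venv\Scripts\activate on Windows.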

The virtual environment allows you to manage your project's dependencies independently, preventing conflicts with other Python projects you might have.

Once your virtual environment is active, install the required packages: pip install fastapi httpx numpy librosa pydantic twilio qdrant-client openai python-dotenv uvicorn

Each package plays a specific role:

- fastapi: the web framework for the backend

- httpx: asynchronous HTTP requests

- numpy: numerical computations and array manipulation

- librosa: audio processing

- pydantic: data validation

- twilio: WhatsApp messaging, which serves as the frontend

- qdrant-client: vector database operations

- openai: access to the AI models used for transcription, embeddings, and recommendations

- python-dotenv: loading environment variables

- uvicorn: the ASGI server

Create a .env file

In the Audio-Identification directory, create a file named .env that holds your Twilio credentials and your OpenAI API key, replacing the placeholders with your actual values. Your application uses these keys to authenticate its requests to OpenAI and Twilio, and it loads them from the .env file at startup.

Next, obtain the required credentials if you don't have them already.

Obtain the Credentials

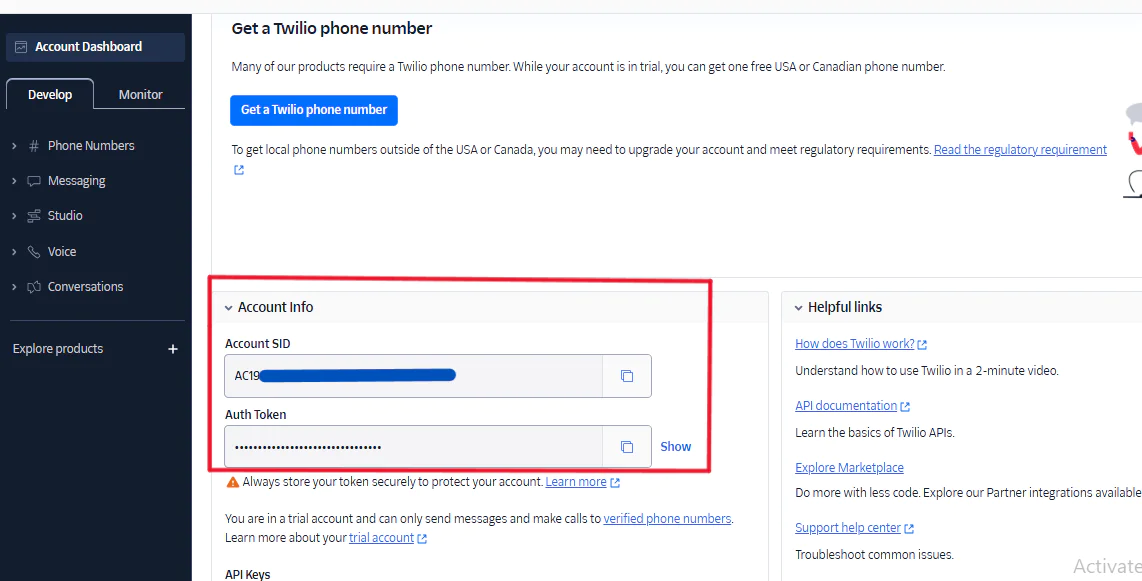

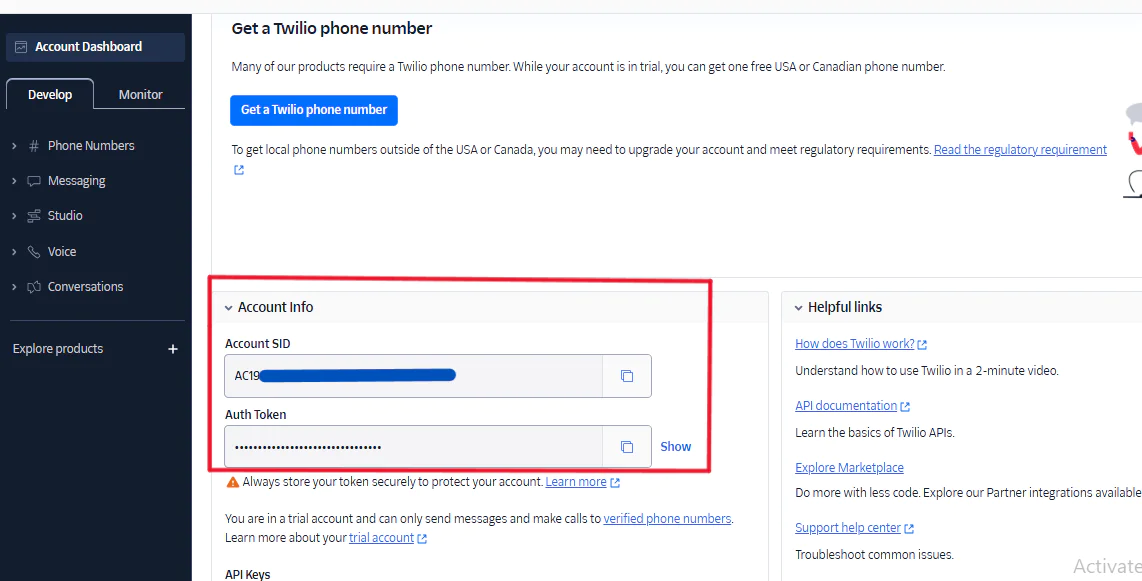

Configure your Twilio Account

In the Twilio Console, find your Account SID and Auth Token, then copy them into the .env file, as shown in the screenshot below. These credentials authenticate requests to Twilio's API endpoints and connect your FastAPI application to Twilio's services.

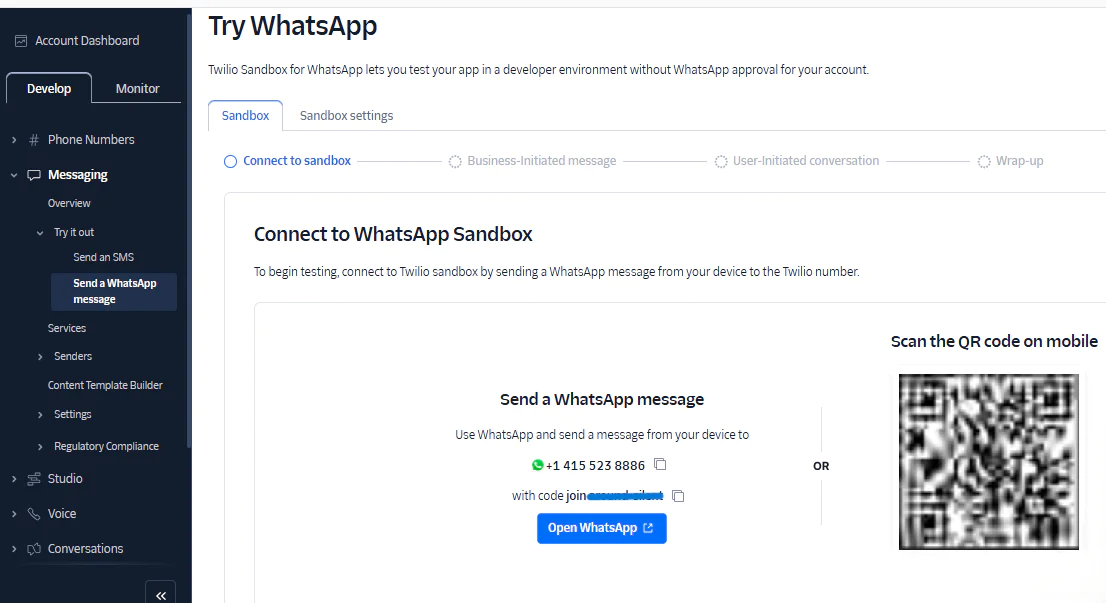

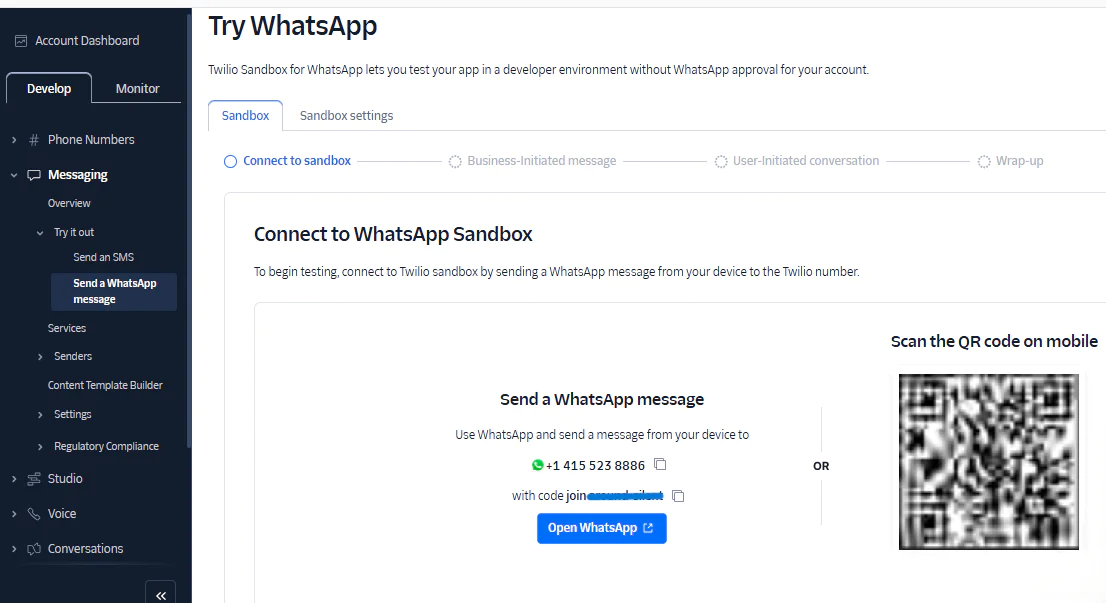

Set up a Twilio WhatsApp Sandbox

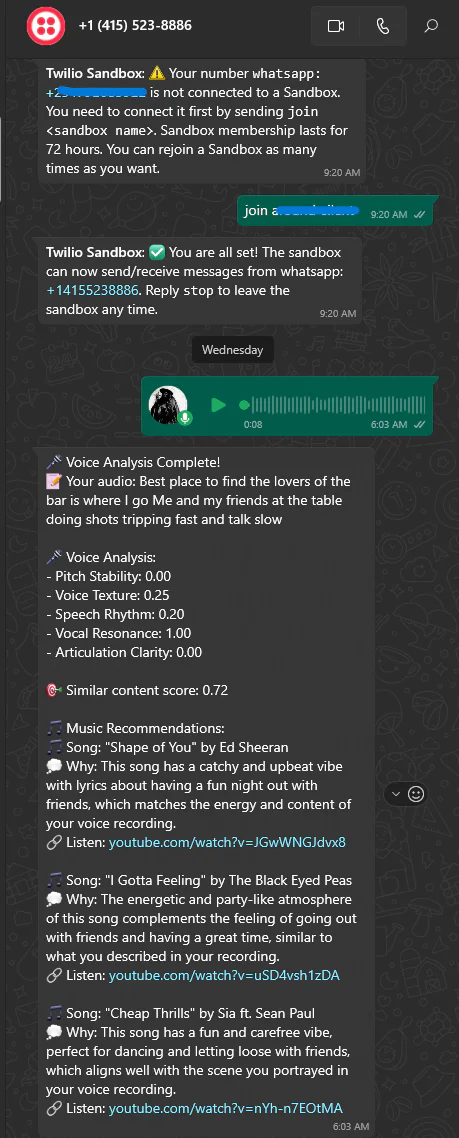

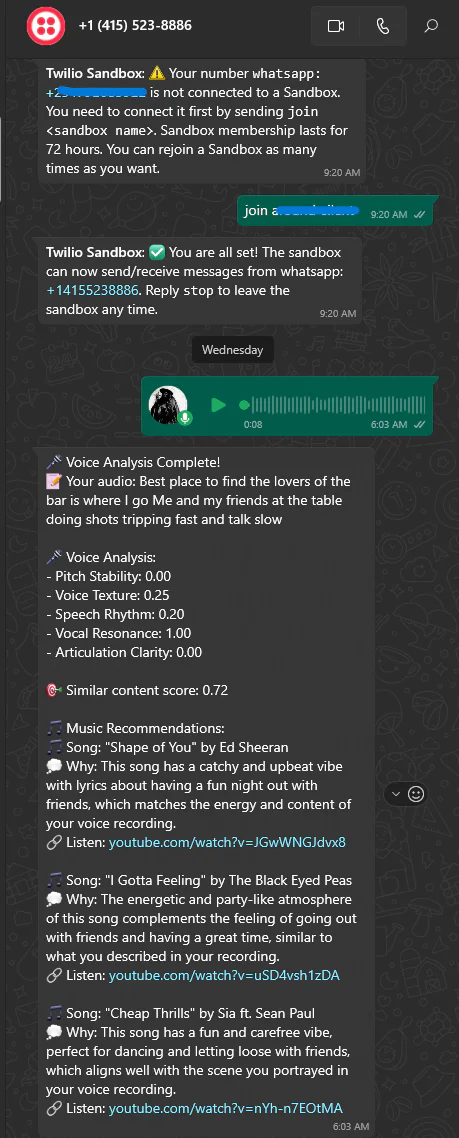

In the Twilio Console, go to Messaging → Try it out → Send a WhatsApp message. You'll see instructions for joining your sandbox. Send a WhatsApp message containing the join code shown on that screen to +14155238886, or scan the QR code displayed. This connects your WhatsApp number to the Twilio sandbox. The screenshot below shows what to expect.

If the connection succeeds, you should receive this message: "Twilio Sandbox: ✅ You are all set! The sandbox can now send/receive messages from whatsapp:+14155238886. Reply stop to leave the sandbox any time."

Next, set up your OpenAI account.

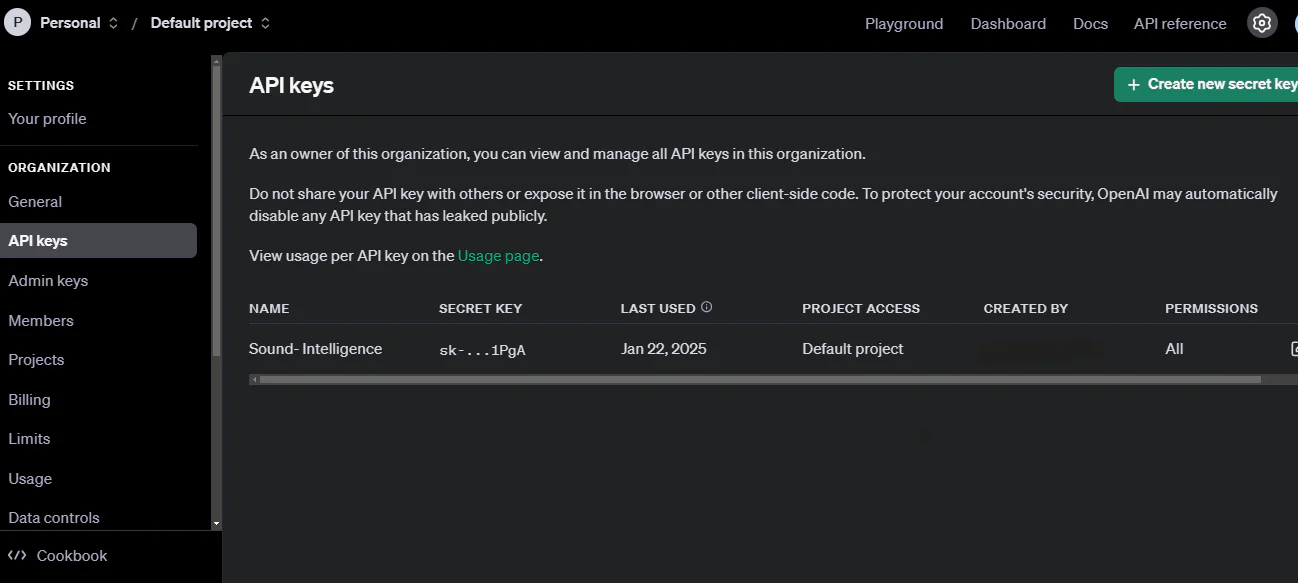

Configure your OpenAI Account

To obtain an OpenAI API key, log in to your OpenAI dashboard on the OpenAI website and follow the instructions to generate a key for your account. Copy it securely into the .env file. Your FastAPI application uses this key to authenticate its requests to the OpenAI API, which provides the transcription, embedding, and language models the app relies on.

Click Create new secret key. When prompted, enter a name for the key, then click Create new secret key again to generate it. Copy the key and add it to your .env file as OPENAI_API_KEY. See the screenshot below to confirm you are on the right track.

With setup complete, move on to development, where you will tie everything together and bring the application to life.

Build the Application

Import Required Libraries

To begin, you need to import all the required libraries. The code below includes all necessary imports. Create a file named app.py in the Audio-Identification directory. Copy the code below and paste it there.

These imports form the foundation of the voice analysis and music recommendation system: standard Python libraries (os, typing, datetime, uuid) for basic operations; librosa and numpy for audio processing and voice analysis; FastAPI for building the API; the Twilio and OpenAI SDKs for external services; Pydantic for data validation; and Qdrant for storing voice embeddings. Together, they let the application receive WhatsApp voice messages, analyze their characteristics, store the results, and generate personalized music recommendations. asynccontextmanager and BackgroundTasks provide the async capabilities, logging supports monitoring, and dotenv keeps configuration out of the source code.

Set Up Logging and Environment Variables

This code snippet sets up essential application configuration. Update it in your app.py file.

logging.basicConfig() configures logging to capture and display informational messages, load_dotenv() loads environment variables from the .env file, and QDRANT_PATH specifies the local storage location for the vector database.

Together, these lines set up the application's logging and configuration so that it runs securely and traceably. Next, add a helper for converting data types.
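Since the original snippet is not reproduced here, the following is a minimal sketch of this configuration step. Reading QDRANT_PATH from an environment variable with the default ./qdrant_storage is an assumption, and the ImportError fallback exists only so the sketch runs without python-dotenv installed.

```python
import logging
import os

try:
    from dotenv import load_dotenv  # provided by the python-dotenv package
except ImportError:  # fallback only so this sketch runs without python-dotenv
    def load_dotenv(*args, **kwargs) -> bool:
        return False

# Show INFO-level messages with a timestamp, logger name, and level.
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
)
logger = logging.getLogger(__name__)

# Copy key/value pairs from .env into os.environ (existing variables are not overridden).
load_dotenv()

# Hypothetical default: a local directory for Qdrant's on-disk storage.
QDRANT_PATH = os.getenv("QDRANT_PATH", "./qdrant_storage")
logger.info("Qdrant storage path: %s", QDRANT_PATH)
```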

Convert NumPy Data Types to Native Python Types

This utility function safely converts NumPy data types to standard Python types. Update it in your app.py file.

The convert_numpy_to_python function transforms NumPy data types into native Python types. It recursively walks nested data structures: NumPy arrays become Python lists via tolist(), and NumPy scalar values become Python scalars via item(). Bridging NumPy's types and Python's native types this way prevents serialization and compatibility issues, for example when storing analysis results in Qdrant. Next, configure the app and manage FastAPI lifespan events.
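The behavior described above can be sketched as follows. This is a minimal version written from that description rather than the author's exact code. One design choice to note: tuples are returned as lists, which is what JSON serialization would produce anyway.

```python
from typing import Any

import numpy as np


def convert_numpy_to_python(obj: Any) -> Any:
    """Recursively replace NumPy arrays and scalars with native Python values."""
    if isinstance(obj, np.ndarray):
        return obj.tolist()  # arrays become (nested) lists of Python scalars
    if isinstance(obj, np.generic):
        return obj.item()  # np.float32, np.int64, np.bool_, ... -> float, int, bool
    if isinstance(obj, dict):
        return {key: convert_numpy_to_python(value) for key, value in obj.items()}
    if isinstance(obj, (list, tuple)):
        return [convert_numpy_to_python(item) for item in obj]
    return obj  # already a native type
```

For example, convert_numpy_to_python({"pitch": np.float32(0.5)}) returns {"pitch": 0.5}, which json.dumps accepts.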

App Configuration

In this section, you configure settings management and the FastAPI lifespan. Update the app.py file with the code below.

The Settings class uses Pydantic's BaseSettings to load configuration values, such as the Twilio and OpenAI credentials, from a .env file. Setting env_file = ".env" tells the class where to find these values, which keeps sensitive data out of your source code while keeping it easy to access. In Pydantic v2, BaseSettings lives in the separate pydantic-settings package, which you can install with pip install pydantic-settings.

Instantiating settings = Settings() validates and centralizes environment variables for use throughout the app. This ensures clean and efficient configuration management. Next, move on to audio processing and voice analysis.
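To see what BaseSettings does for you, here is a dependency-free sketch of the same idea built on a standard-library dataclass. It is a stand-in, not the tutorial's Settings class: the field names twilio_account_sid, twilio_auth_token, and openai_api_key are hypothetical, and unlike BaseSettings it does not read the .env file itself, so load_dotenv() must run first.

```python
import os
from dataclasses import dataclass, fields
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Stand-in for a BaseSettings subclass; field names are hypothetical."""

    twilio_account_sid: str
    twilio_auth_token: str
    openai_api_key: str

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "Settings":
        """Build Settings from environment variables, matching names case-insensitively."""
        lowered = {key.lower(): value for key, value in env.items()}
        names = [field.name for field in fields(cls)]
        missing = [name for name in names if not lowered.get(name, "").strip()]
        if missing:
            # BaseSettings would raise a ValidationError here instead.
            raise ValueError(f"Missing settings: {', '.join(missing)}")
        return cls(**{name: lowered[name] for name in names})


# Call load_dotenv() first so values from .env are in os.environ, then:
# settings = Settings.from_env()
```

Like BaseSettings with its default case-insensitive matching, OPENAI_API_KEY in the environment fills the openai_api_key field, and a missing value fails at startup rather than on the first API call.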

Process Audio and Analyze Voice

This code snippet defines a structured approach to audio processing, combining data modeling with sophisticated voice characteristic extraction and transcription techniques. Update it in the app.py file.


The AudioRecord class defines a structured data model for audio records using Pydantic's BaseModel, containing an ID, feature list, and metadata dictionary. The AudioProcessor class is a sophisticated audio analysis tool that leverages multiple libraries to extract detailed voice characteristics.

The analyze_voice_characteristics method uses librosa to perform advanced audio feature extraction, calculating complex metrics like pitch stability, rhythm, voice texture, and articulation clarity. It transforms raw audio signals into a normalized feature vector that captures nuanced voice properties. The method extracts Mel-frequency cepstral coefficients (MFCCs), spectral centroid, and other advanced audio features, then normalizes them to a 0-1 scale for consistent interpretation.
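The tutorial does not spell out the formulas behind each score, so the helpers below are hypothetical definitions that show how raw librosa outputs can be reduced to 0-1 scores. The inputs correspond to real librosa outputs: the f0 array returned by librosa.pyin (which uses NaN for unvoiced frames), librosa.feature.spectral_centroid, and librosa.feature.mfcc. The scoring rules and the scale constant are illustrative assumptions.

```python
import numpy as np


def _clip01(value: float) -> float:
    """Clamp a score into the 0-1 range."""
    return float(np.clip(value, 0.0, 1.0))


def pitch_stability(f0: np.ndarray) -> float:
    """Score 1.0 for a perfectly steady pitch, lower as the pitch varies.

    f0 is a per-frame pitch track, e.g. the first array returned by librosa.pyin,
    which marks unvoiced frames with NaN.
    """
    finite = f0[np.isfinite(f0)]
    voiced = finite[finite > 0]
    if voiced.size < 2:
        return 0.0  # too few voiced frames to judge stability
    # 1 minus the coefficient of variation (std / mean) of the voiced pitch.
    return _clip01(1.0 - np.std(voiced) / np.mean(voiced))


def articulation_clarity(centroid: np.ndarray, sr: int) -> float:
    """Mean spectral centroid as a fraction of the Nyquist frequency (sr / 2).

    centroid is e.g. the output of librosa.feature.spectral_centroid(y=y, sr=sr).
    """
    return _clip01(np.mean(centroid) / (sr / 2))


def voice_texture(mfcc: np.ndarray) -> float:
    """Average per-coefficient spread of an (n_mfcc, frames) MFCC matrix, mapped to 0-1."""
    spread = float(np.mean(np.std(mfcc, axis=1)))
    return _clip01(spread / (spread + 10.0))  # 10.0 is an arbitrary scale constant
```

Keeping every score on the same 0-1 scale lets the values be compared across recordings and displayed consistently in the WhatsApp reply.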

The transcribe_and_embed method combines multiple AI services to process audio comprehensively. It first analyzes voice characteristics, then uses OpenAI's Whisper model to transcribe the audio, and finally generates a text embedding using OpenAI's embedding model. This method creates a rich, multi-dimensional representation of the audio input.
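The transcription-and-embedding half of this method can be sketched against the openai>=1.0 Python SDK, where client would be an openai.OpenAI() instance. Passing the client in as a parameter is an assumption made so the function can be exercised with a fake client. The voice-analysis step that the tutorial's method also runs is left out.

```python
from typing import Any, List, Tuple


def transcribe_and_embed(client: Any, audio_path: str) -> Tuple[str, List[float]]:
    """Transcribe an audio file with Whisper, then embed the transcript text.

    client is expected to behave like openai.OpenAI() from the openai>=1.0 SDK.
    """
    with open(audio_path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(
            model="whisper-1",
            file=audio_file,
        )
    text = transcript.text
    embedding = client.embeddings.create(
        model="text-embedding-ada-002",
        input=text,
    )
    # The embeddings endpoint returns one vector per input string.
    return text, embedding.data[0].embedding
```

If you use AsyncOpenAI instead, the two create calls must be awaited and the function declared async.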

The download_audio function provides a robust mechanism for downloading audio files from URLs, handling redirects and authentication. It uses httpx for asynchronous HTTP requests and includes comprehensive error handling to manage various network and download scenarios.

The search_similar_audio function queries the Qdrant vector database for similar audio embeddings, enabling content-based audio retrieval by comparing vector similarity. With these pieces in place, you can move on to the music recommendation and audio processing workflow.
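Qdrant performs the similarity ranking server-side. To illustrate what a cosine-distance search computes, here is an in-memory NumPy version. It does not use Qdrant's API, and the limit and score_threshold defaults are hypothetical values.

```python
from typing import List, Sequence, Tuple

import numpy as np


def search_similar(
    query: Sequence[float],
    stored: Sequence[Sequence[float]],
    ids: Sequence[str],
    limit: int = 3,
    score_threshold: float = 0.8,
) -> List[Tuple[str, float]]:
    """Rank stored vectors by cosine similarity to the query (in-memory illustration)."""
    q = np.asarray(query, dtype=float)
    m = np.asarray(stored, dtype=float)  # one stored embedding per row; must be non-empty
    # Cosine similarity = dot product divided by the product of the vector norms.
    scores = (m @ q) / (np.linalg.norm(m, axis=1) * np.linalg.norm(q))
    order = np.argsort(scores)[::-1][:limit]  # best matches first
    return [(ids[i], float(scores[i])) for i in order if scores[i] >= score_threshold]
```

A threshold matters for this app's logic: when nothing scores above it, the search counts as "no similar audio", and the new embedding gets stored.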

Recommend Music and Perform Audio Processing

In this section, you will implement a comprehensive audio processing pipeline that transforms voice recordings into personalized music recommendations. By integrating advanced AI services like OpenAI's GPT and Whisper models, the code transcribes audio, analyzes voice characteristics, searches for similar content, and generates tailored song suggestions, all delivered seamlessly via WhatsApp. Update the app.py file with the code below.

The get_recommendations function uses OpenAI's GPT model to generate personalized music recommendations based on a transcription. Its prompt instructs the model to suggest songs with artist names, reasoning, and hypothetical platform links, all formatted with emojis for readability. Feel free to tweak the prompt or try different OpenAI models to compare their output.
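A minimal sketch of this call with the openai>=1.0 chat completions API follows. The prompt wording, the number of songs, the temperature, and the gpt-4o default are assumptions rather than the tutorial's exact values, and the client is passed in so it can be swapped for a fake in tests.

```python
from typing import Any

# Hypothetical prompt; adjust the wording, song count, or platforms to taste.
SYSTEM_PROMPT = (
    "You are a music expert. Suggest 3 songs that match the user's message. "
    "For each song give the title, artist, a one-line reason, and a search link "
    "for Spotify or YouTube. Use emojis to keep it readable on WhatsApp."
)


def get_recommendations(client: Any, transcription: str, model: str = "gpt-4o") -> str:
    """Ask a chat model for song suggestions based on a voice-note transcript."""
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Transcription: {transcription}"},
        ],
        temperature=0.7,  # some variety between runs
    )
    return response.choices[0].message.content
```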

The store_audio_embedding function is crucial for building a persistent audio similarity database. It uses Qdrant to store audio embeddings with unique identifiers, converting NumPy types to standard Python types and combining voice analysis metadata with the original audio information. This enables future content-based audio retrieval and matching.
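The record that store_audio_embedding writes can be sketched as a plain dictionary with the three fields a Qdrant point carries: id, vector, and payload. The payload keys here (voice_analysis, stored_at) are hypothetical, and the float() calls play the same role as the NumPy-to-Python conversion. Qdrant requires point IDs to be unsigned integers or UUIDs, which is why a UUID string is used.

```python
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, List


def build_audio_point(
    embedding: List[float],
    voice_analysis: Dict[str, float],
    metadata: Dict[str, Any],
) -> Dict[str, Any]:
    """Assemble the id/vector/payload fields for one Qdrant point (hypothetical shape)."""
    return {
        "id": str(uuid.uuid4()),  # Qdrant accepts UUIDs or unsigned integers as IDs
        "vector": [float(x) for x in embedding],
        "payload": {
            **metadata,  # e.g. sender and transcription
            "voice_analysis": {k: float(v) for k, v in voice_analysis.items()},
            "stored_at": datetime.now(timezone.utc).isoformat(),
        },
    }
```

With qdrant-client, the result can be wrapped as PointStruct(**point) and written with client.upsert(collection_name=..., points=[...]).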

The process_audio_message function orchestrates the entire workflow. It downloads the audio, transcribes it, analyzes voice characteristics, searches for similar audio, and generates personalized music recommendations. If no similar audio is found, it stores the new audio embedding for future matching. Finally, it sends a WhatsApp message containing the transcription, voice analysis, and music recommendations. Each function includes error handling that logs issues, so the application stays robust and responsive even when an individual processing step fails. With the backend logic in place, move on to the endpoints that connect it to the frontend, which in this case is WhatsApp.
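The final step, assembling the reply, can be sketched as a small formatting helper. The layout and emoji headings are assumptions. The 1,600-character cap reflects Twilio's documented limit for a message Body, and the helper truncates to stay within it.

```python
from typing import Dict

MAX_BODY_CHARS = 1600  # Twilio's maximum length for a message Body


def format_reply(transcription: str, voice_analysis: Dict[str, float], recommendations: str) -> str:
    """Combine transcript, 0-1 voice scores, and song picks into one WhatsApp message."""
    scores = "\n".join(
        f"• {name.replace('_', ' ').title()}: {value:.0%}"
        for name, value in voice_analysis.items()
    )
    body = (
        f"📝 Transcription:\n{transcription}\n\n"
        f"🎙️ Voice analysis:\n{scores}\n\n"
        f"🎵 Recommendations:\n{recommendations}"
    )
    if len(body) > MAX_BODY_CHARS:
        body = body[: MAX_BODY_CHARS - 1] + "…"  # truncate rather than fail the send
    return body
```

The message can then be sent with the Twilio helper library, for example Client(account_sid, auth_token).messages.create(from_="whatsapp:+14155238886", to=sender, body=format_reply(...)).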

Service Endpoints

In this section, you will implement the API endpoints for the audio recognition service: one that handles incoming WhatsApp audio messages and others that provide service management capabilities. Update the code in the app.py file.

The whatsapp_webhook endpoint processes incoming WhatsApp media messages. It extracts the media URL and sender information, using FastAPI's BackgroundTasks to asynchronously handle audio processing. If an audio message is received, it triggers the process_audio_message function in the background. For non-audio messages, it sends a guidance message to the user.
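Twilio posts the message as form fields, including NumMedia, MediaContentType0, MediaUrl0, and From. The sketch below shows the audio check on those fields as a plain function. It is a simplification: it inspects only the first attachment, and the helper name extract_audio is hypothetical.

```python
from typing import Mapping, Optional, Tuple


def extract_audio(form: Mapping[str, str]) -> Optional[Tuple[str, str]]:
    """Return (media_url, sender) if the Twilio webhook carries an audio attachment."""
    if int(form.get("NumMedia", "0")) < 1:
        return None  # plain text message: reply with guidance instead
    content_type = form.get("MediaContentType0", "")
    if not content_type.startswith("audio/"):
        return None  # images, video, documents, ...
    return form["MediaUrl0"], form["From"]
```

Inside the FastAPI route, you could pass it the parsed form (await request.form(), which needs the python-multipart package). If it returns a result, schedule the work with background_tasks.add_task(process_audio_message, media_url, sender), assuming process_audio_message takes those two arguments. Returning right away keeps Twilio's webhook request from waiting on the slow audio pipeline.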

The root() endpoint provides basic service metadata, returning version, status, and configured AI models. This helps clients quickly verify the service is operational and understand its capabilities.

The health_check() endpoint performs a comprehensive system status check, verifying the initialization and configuration of critical services like Qdrant, OpenAI, and Twilio. This is crucial for monitoring and debugging the application's infrastructure.

The final if __name__ == "__main__" block allows the application to be run directly using Uvicorn, specifying the host and port for deployment.

To test your application, start the server from your terminal with: uvicorn app:app --host 127.0.0.1 --port 8000 --reload

You are almost done. Now you will need to set up the Twilio webhook to go live.

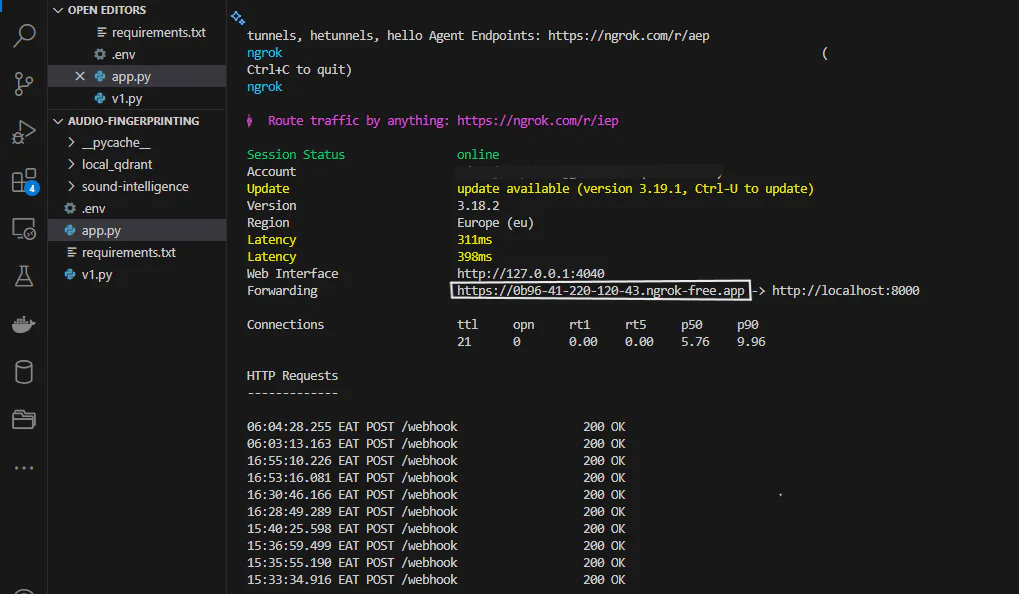

Set up the Twilio WhatsApp Webhook

When Twilio receives an incoming message, it automatically forwards it to your application's webhook URL. For this to work, your application must be publicly accessible, which ngrok provides. Open a new terminal and run ngrok http 8000 to create a tunnel to the server on port 8000.

Copy the Forwarding URL exactly as it appears in the ngrok output, as shown in the screenshot below. You will use this URL to connect your local application to Twilio's webhook.

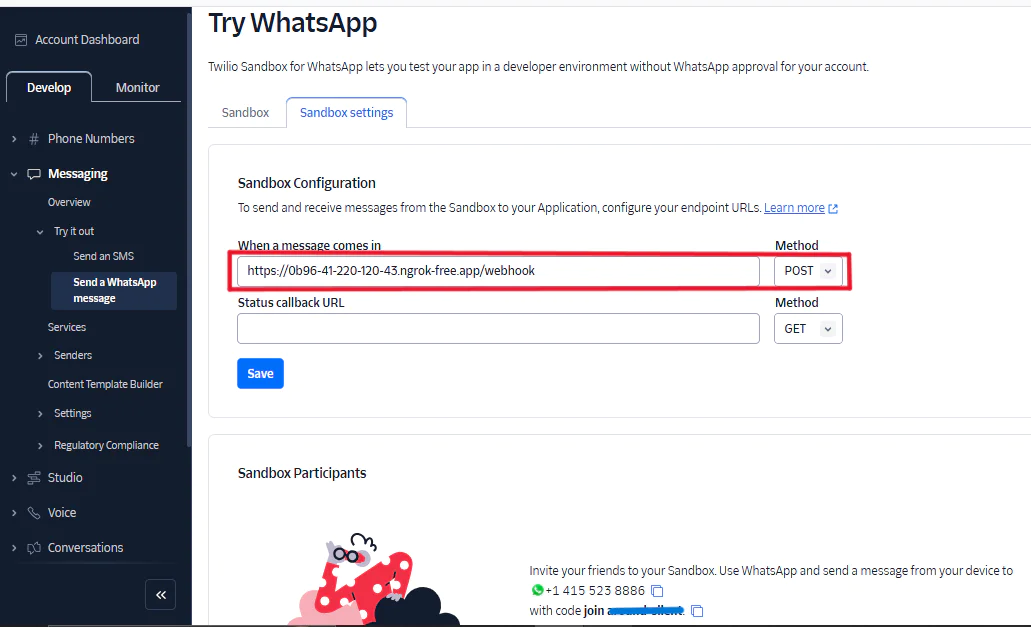

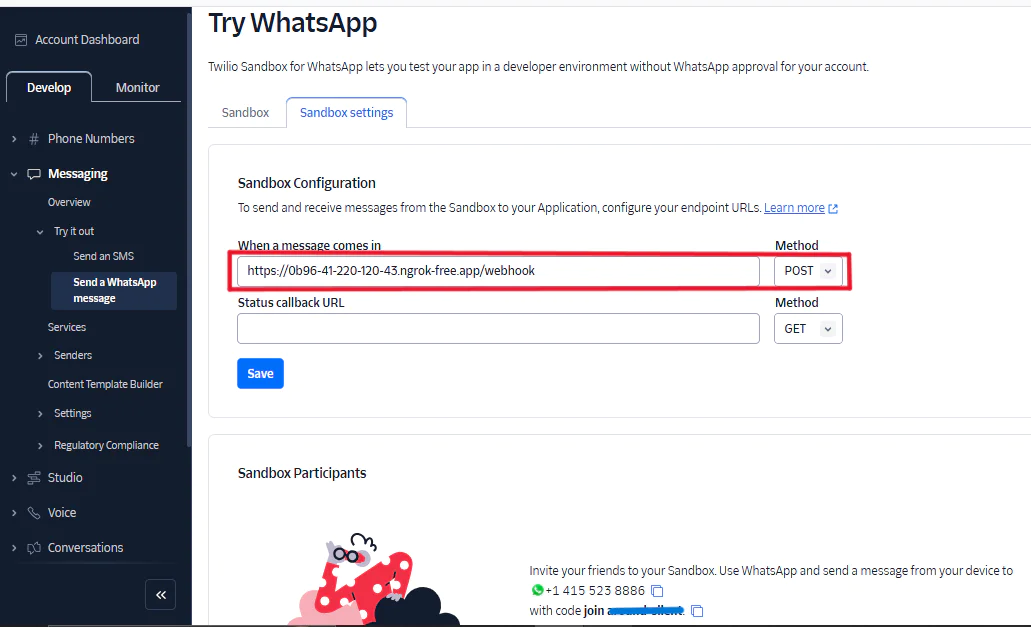

Go to the Twilio Try WhatsApp page, click the Sandbox Settings tab, and configure the sandbox as follows:

- In the When a message comes in field, paste the ngrok Forwarding URL and append /webhook to the end.

- Set the Method to POST.

Click the Save button to confirm the configuration. Confirm you are on the right track as depicted in the screenshot below.

Next, proceed to test your application to see if everything works as expected.

Test your Application

To test the application, ensure all environment variables are set in your .env file.

Start the FastAPI application with uvicorn app:app --host 127.0.0.1 --port 8000 --reload. Then open WhatsApp and send your Twilio sandbox number a short voice recording of yourself singing, or a prerecorded audio file. The screenshot below shows the application in action. Feel free to adapt it to your own needs and curiosity.

What's next for your WhatsApp Voice Analysis and Music Recommendation System?

You've created a full-featured, AI-powered voice analysis application that:

- Receives voice notes via Twilio WhatsApp

- Transcribes audio using OpenAI Whisper

- Analyzes voice characteristics with librosa

- Generates embeddings with text-embedding-ada-002

- Stores and searches audio embeddings with Qdrant

- Generates personalized music recommendations

With this system, you can give users insights into their own voice or any prerecorded audio, along with tailored music suggestions.

Take a look at these articles for inspiration and to further your skills:

- Build a Movie Recommendation App with Python, OpenAI, and Twilio SendGrid

- How to Handle Incoming WhatsApp Audio Messages in Go

- How to Create Ads that Click to WhatsApp

Jacob Snipes is a Software Developer and Machine Learning Engineer with a passion for building intelligent systems that solve real-world problems. He thrives on transforming complex challenges into impactful results by leveraging natural language processing (NLP), computer vision, deep learning, generative AI, and vector databases. He can be reached via email.
